Language model training method, device, equipment, and computer-readable storage medium

A language model training technique, applied in computing, neural learning methods, biological neural network models, and related fields. It addresses problems such as the model's low sensitivity to nouns, its low accuracy, and its inability to learn Chinese semantic information and Chinese entity-relationship information, with the effect of increasing accuracy.

Embodiment Construction

[0025] It should be understood that the specific embodiments described here are only used to explain the present invention, not to limit the present invention.

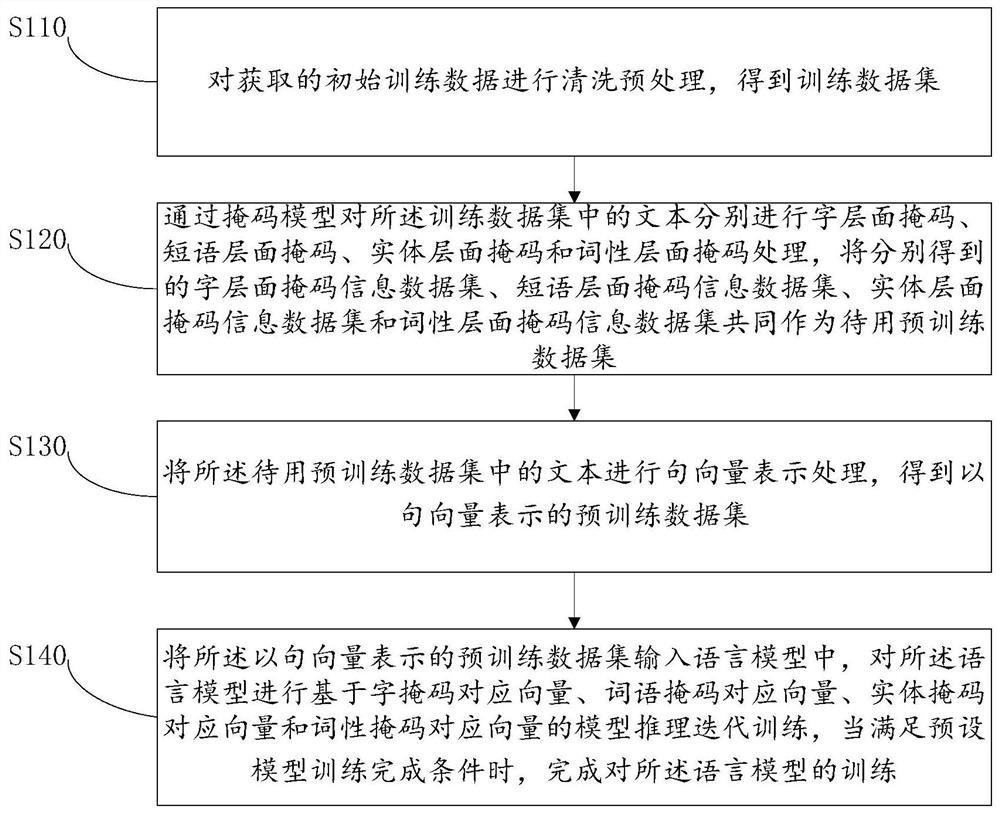

[0026] The invention provides a language model training method. Figure 1 is a schematic flowchart of a language model training method provided by an embodiment of the present invention. The method may be performed by a device, and the device may be implemented in software and/or hardware.

[0027] In this embodiment, the language model training method includes:

[0028] Step S110, performing cleaning and preprocessing on the acquired initial training data to obtain a training data set.

[0029] Specifically, when the processor receives an instruction for language model training, it obtains the initial training data from the text database. Because the initial training data may contain special symbols, numbers, and special formats that would affect subsequent model training, there...
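
As an illustration only, the cleaning in step S110 could be sketched roughly as below. The text here is truncated and does not state which symbols, numbers, or formats are removed, so every pattern and the names `clean_text` and `build_training_set` are hypothetical assumptions, not the patent's actual procedure.

```python
import re

# Hypothetical patterns: the source does not specify what counts as a
# "special symbol" or "special format", so these are illustrative guesses.
_TAG_RE = re.compile(r"<[^>]+>")          # markup-like remnants
_URL_RE = re.compile(r"https?://\S+")     # web addresses
_DIGIT_RE = re.compile(r"\d+")            # runs of digits
# Anything that is not a CJK ideograph, a Latin letter, common punctuation, or a space.
_OTHER_RE = re.compile(r"[^\u4e00-\u9fffA-Za-z，。！？、；：,.!?;: ]")
_SPACE_RE = re.compile(r"\s+")


def clean_text(text: str) -> str:
    """Strip tags, URLs, digits, and other symbols, then collapse whitespace."""
    for pattern in (_TAG_RE, _URL_RE, _DIGIT_RE, _OTHER_RE):
        text = pattern.sub(" ", text)
    return _SPACE_RE.sub(" ", text).strip()


def build_training_set(raw_texts):
    """Clean each raw text, dropping empty results and exact duplicates."""
    seen = set()
    dataset = []
    for raw in raw_texts:
        cleaned = clean_text(raw)
        if cleaned and cleaned not in seen:
            seen.add(cleaned)
            dataset.append(cleaned)
    return dataset


if __name__ == "__main__":
    samples = ["<p>语言模型★训练 2023</p>", "你好，世界！", "你好，世界！", "###"]
    print(build_training_set(samples))
```

Replacing removed spans with a space rather than deleting them keeps neighbouring tokens from being fused together; whether that is desirable for Chinese text depends on the tokenizer used downstream, which this excerpt does not describe.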
