Method, system, and equipment for multi-level caching that allows tasks in a thread pool to share main-thread data

The invention relates to thread pool and main thread technology and is applied in special-purpose data processing applications, instruments, and electrical digital data processing. It addresses two problems: asynchronous tasks cannot share the data of main-thread tasks, and system processing capacity becomes low. Its stated effects are fast processing speed and reduced system pressure.
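The abstract names the core problem but does not show it. The following Python sketch illustrates that problem and one common general-purpose workaround. It is not the patented multi-level caching method, which this excerpt does not describe. Data that the main thread stores in thread-local storage cannot be seen by a thread-pool task. Snapshotting the submitting thread's context with `contextvars.copy_context()` and running the task inside that snapshot makes the values visible. The names `thread_data`, `request_data`, `read_thread_local`, `read_context_var`, and `submit_with_context` are hypothetical.

```python
import contextvars
import threading
from concurrent.futures import ThreadPoolExecutor

# Thread-local storage: each thread sees only the attributes it set itself.
thread_data = threading.local()

# Hypothetical context variable standing in for "main-thread task data".
request_data = contextvars.ContextVar("request_data", default=None)


def read_thread_local():
    # Runs on a pool worker thread; the main thread's attribute is absent here.
    return getattr(thread_data, "user", None)


def read_context_var():
    # Returns the value visible in whatever context this call runs in.
    return request_data.get()


def submit_with_context(pool, fn, *args):
    # Snapshot the submitting thread's context at submit time and run the
    # task inside that snapshot, so the worker sees the main thread's values.
    # A fresh copy is made per task because one Context object cannot be
    # entered by two threads at the same time.
    ctx = contextvars.copy_context()
    return pool.submit(ctx.run, fn, *args)


def main():
    # Main thread stores its data in both mechanisms.
    thread_data.user = "alice"
    request_data.set("alice")
    with ThreadPoolExecutor(max_workers=2) as pool:
        lost = pool.submit(read_thread_local).result()
        shared = submit_with_context(pool, read_context_var).result()
    return lost, shared


if __name__ == "__main__":
    print(main())  # (None, 'alice')
```

The snapshot is taken at submit time, so changes that the main thread makes afterwards are not seen by tasks that have already been submitted. This excerpt does not state whether the patented method shares main-thread data by snapshot or by reference.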

Detailed Description of the Embodiments

[0036] The implementations described in the following exemplary examples do not represent all implementations consistent with the present disclosure. Rather, they are merely examples of approaches consistent with aspects of the disclosure as recited in the appended claims.

[0037] The terminology used in the present disclosure is for the purpose of describing particular embodiments only and is not intended to limit the present disclosure. As used in this disclosure and the appended claims, the singular forms "a", "an", and "the" are intended to include the plural forms as well, unless the context clearly dictates otherwise. It should also be understood that the term "and/or" as used herein refers to and includes any and all possible combinations of one or more of the associated listed items.

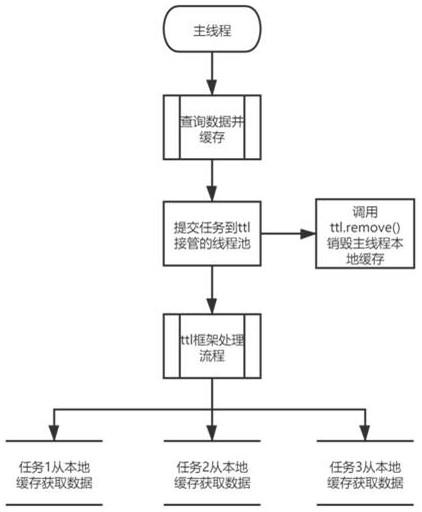

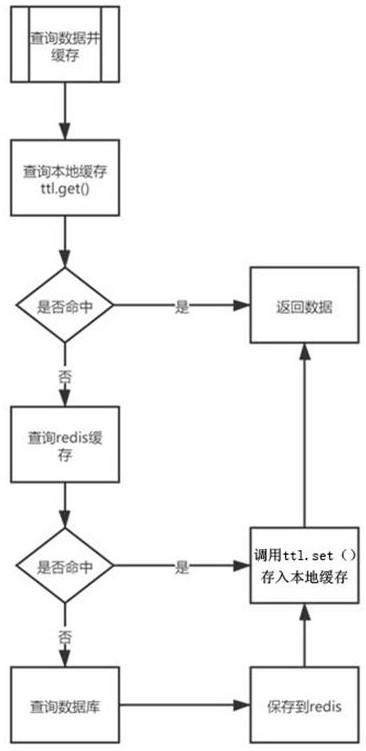

[0038] See Figure 1 and Figure 2. Figure 1 is an exemplary flowchart of the multi-level caching method by which tasks in the thread pool share the main thread, according to the present...
