37 results about "Task sharing" patented technology

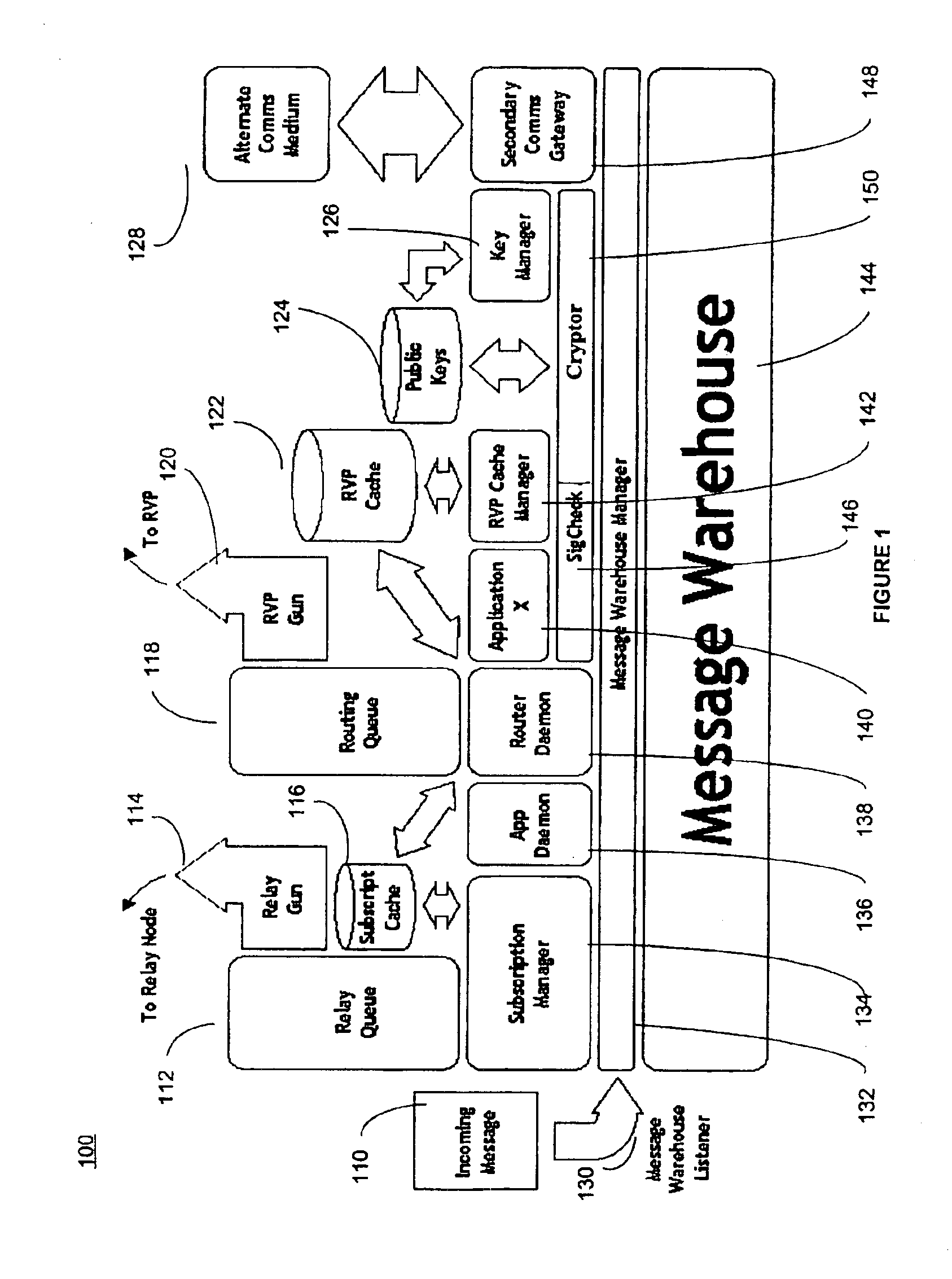

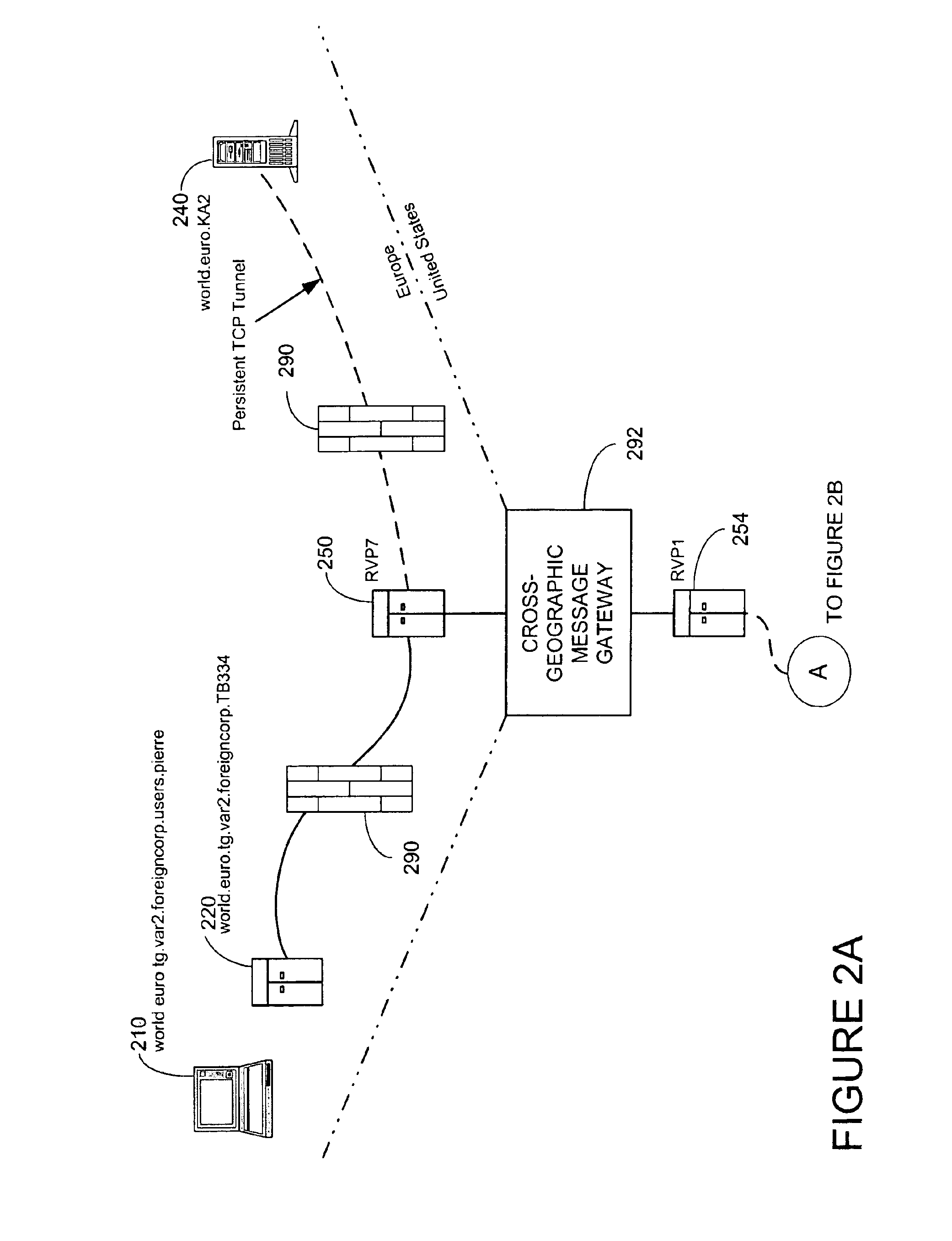

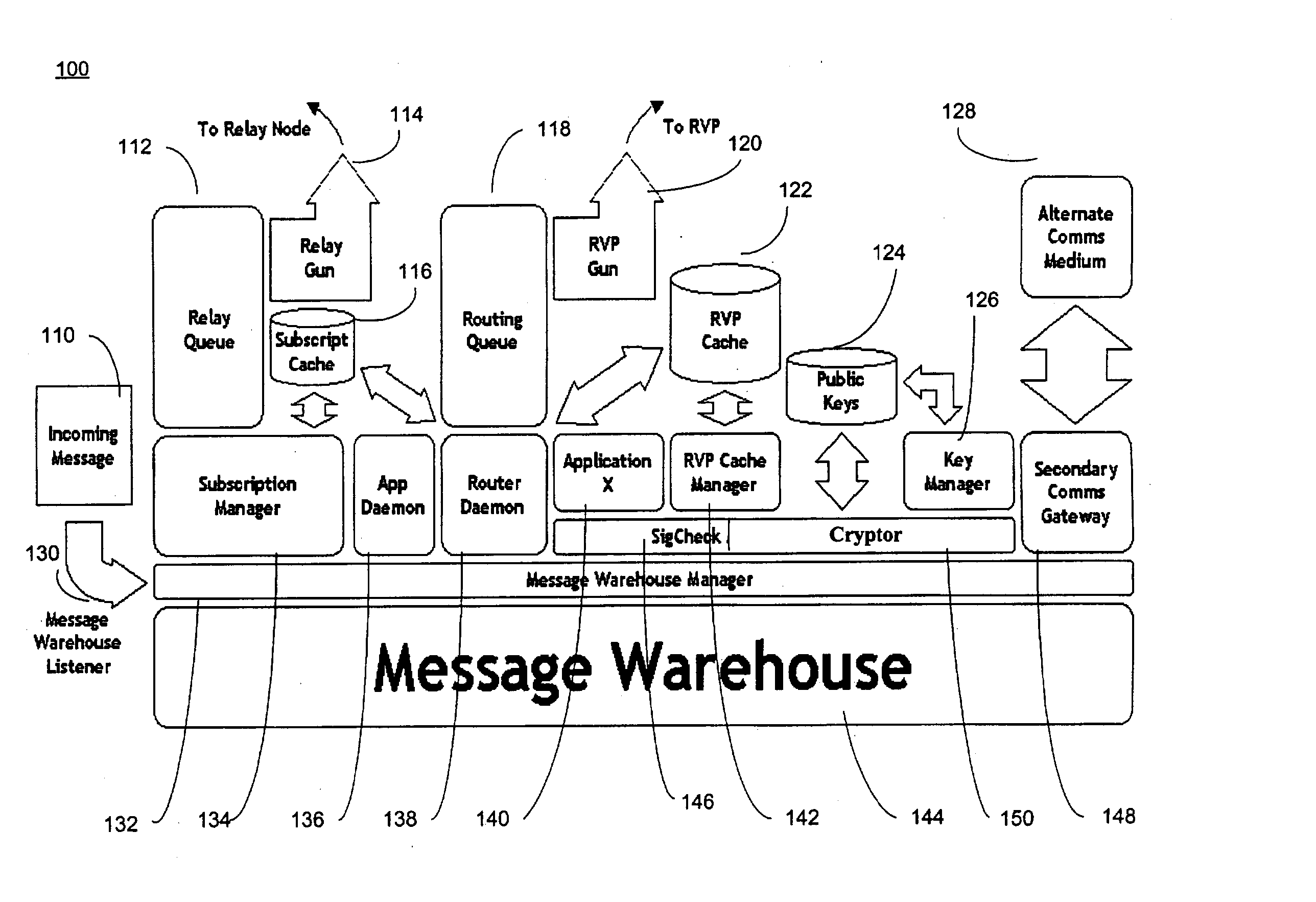

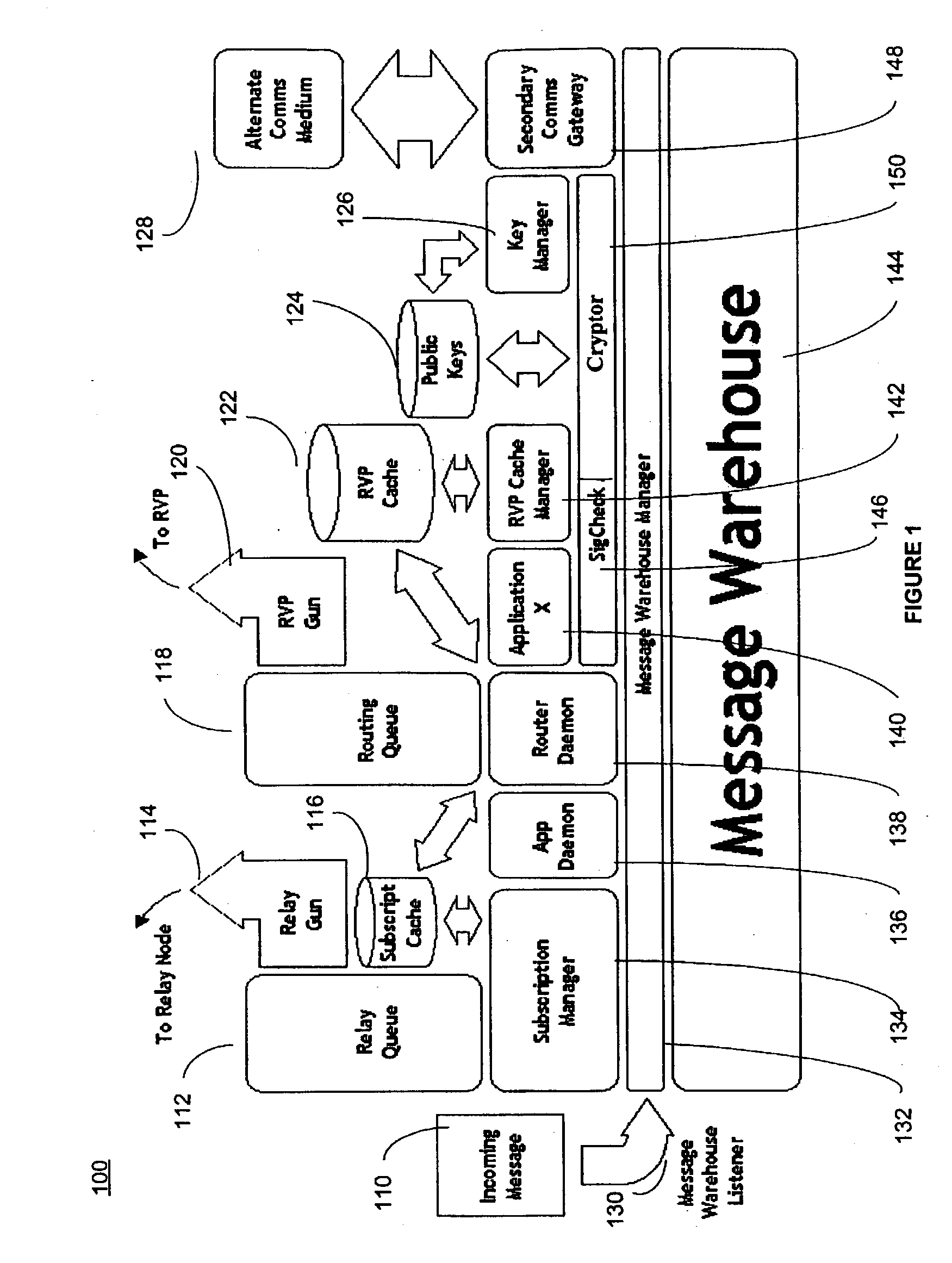

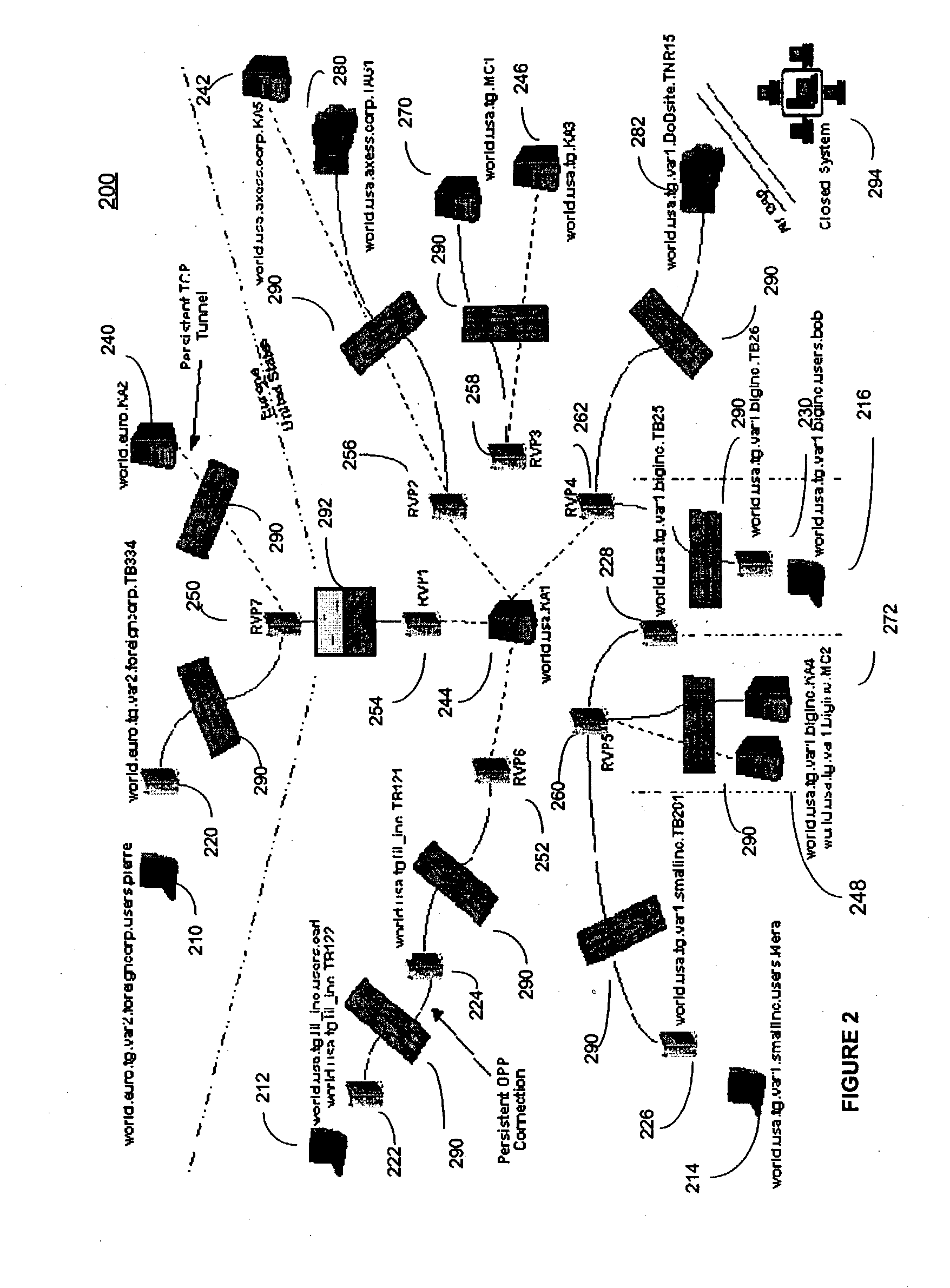

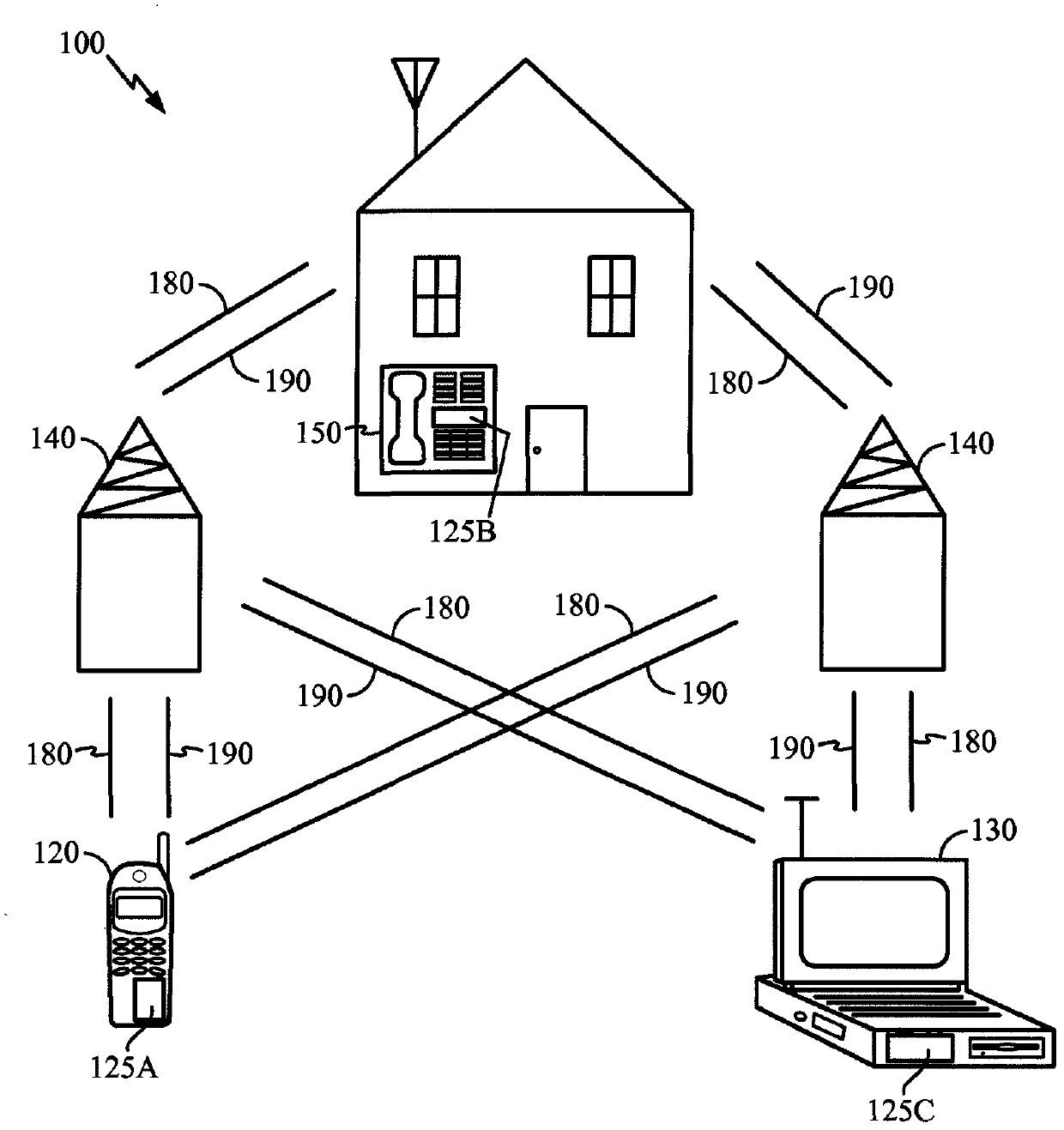

System and method for secure message-oriented network communications

Inactive | US6959393B2 | Minimize threat | Facilitate and control access | Key distribution for secure communication | Digital data processing details | Security Measure | Network communication

The present invention provides a message-oriented middleware solution for securely transmitting messages and files across public networks, unencumbered by intervening network barriers implemented as security measures. It also provides a dynamic, dedicated, application-level VPN solution that is facilitated by the message-oriented middleware. Standard encryption algorithms are used to minimize the threat of eavesdropping, and an Open-Pull Protocol (OPP) allows target nodes to pull and verify the credentials of requestors before any data is passed. Messaging can be segregated into multiple distinct missions that all share the same nodes. The security network's architecture is built to resist and automatically recover from poor, slow, and degrading communications channels. Peers are identifiable by hardware appliance, software agent, and personally identifiable sessions. The security network provides a dynamic, private transport for sensitive data over existing non-secure networks without the overhead and limited security associated with traditional VPN solutions.

Owner:THREATGUARD

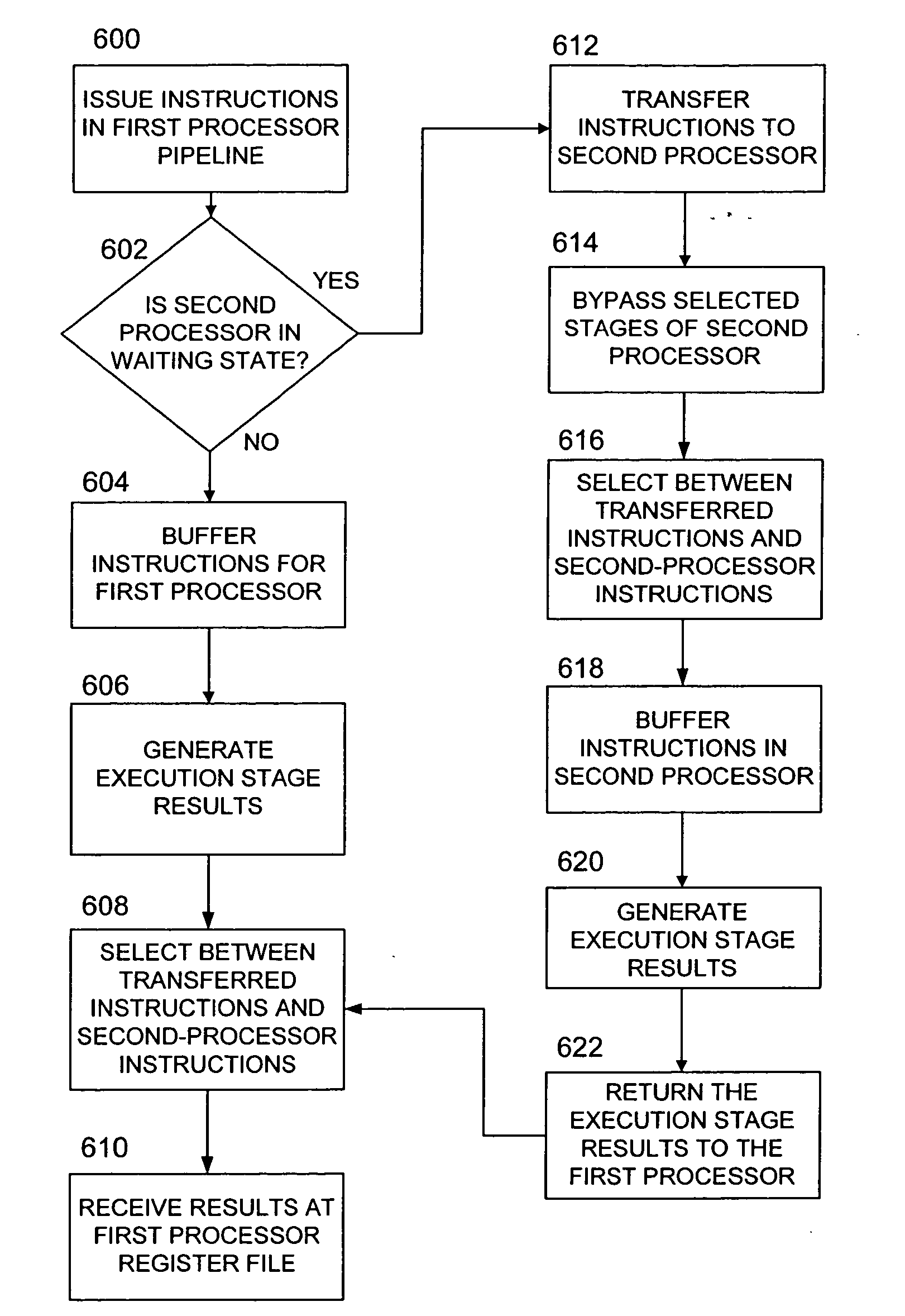

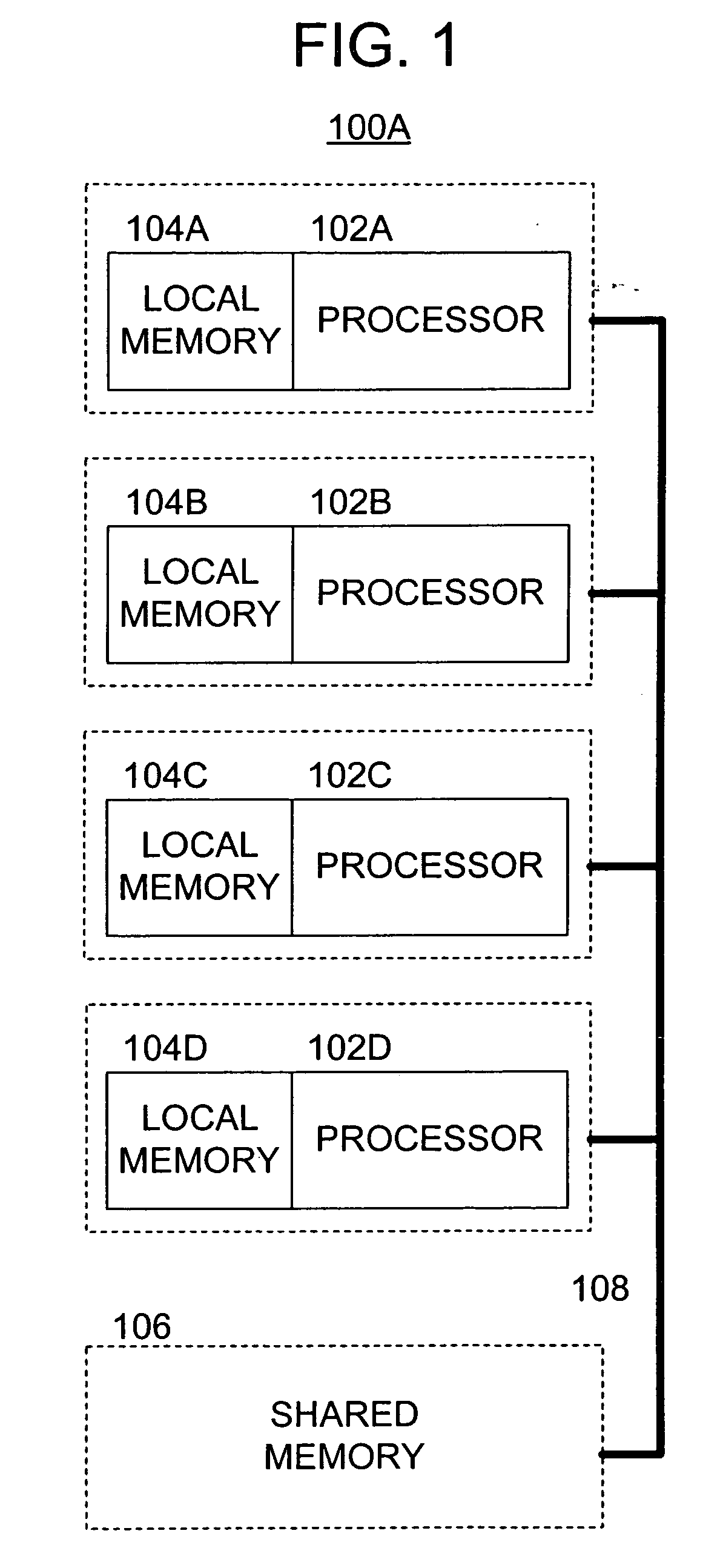

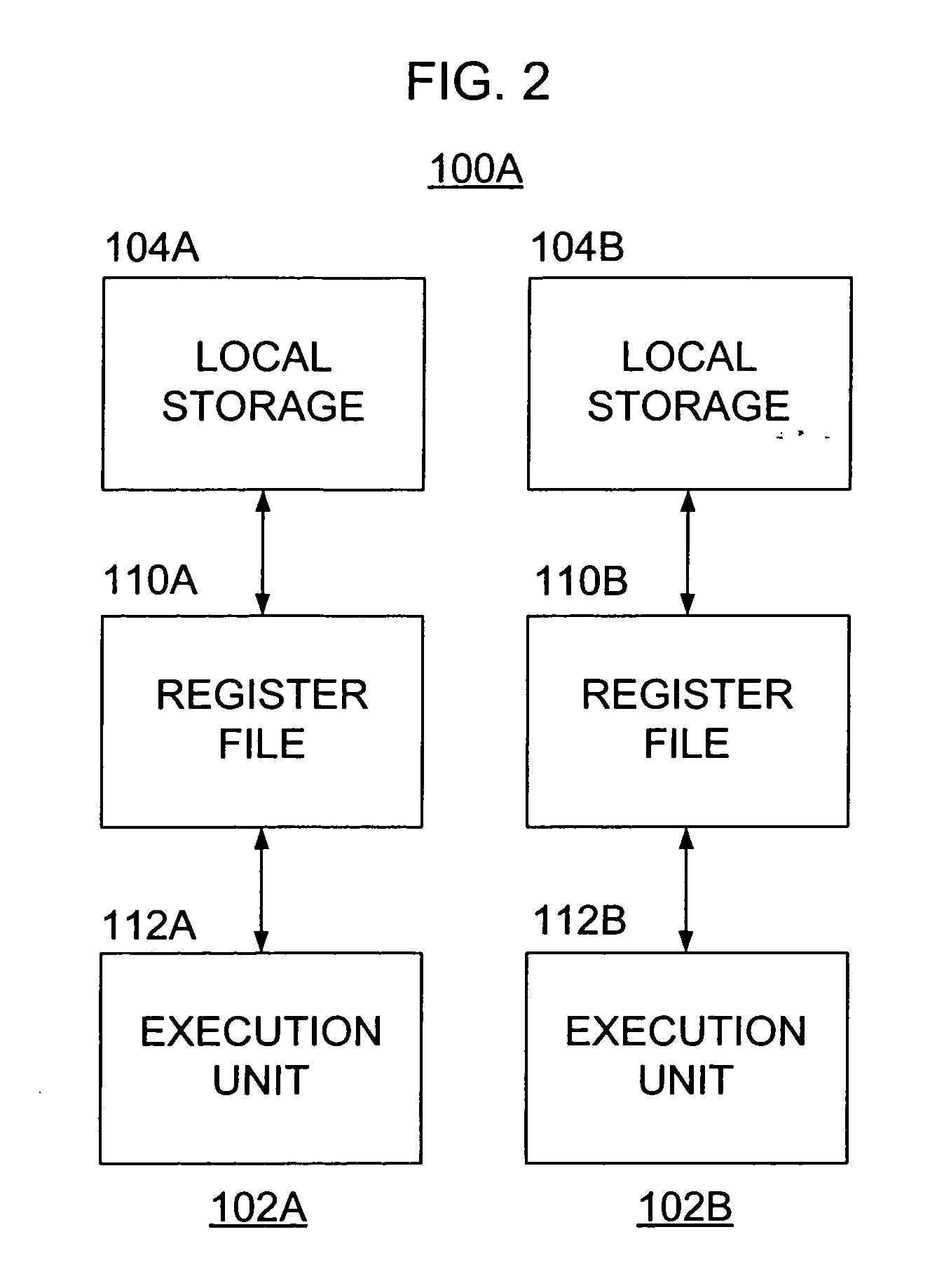

Methods and apparatus for task sharing among a plurality of processors

Inactive | US20070083870A1 | Reduce steps | Reduce operating frequency | Multiprogramming arrangements | Memory systems | Multi processor | Parallel computing

A method is disclosed which may include issuing a plurality of instructions in a processing pipeline of a first processor within a multiprocessor system; determining whether a second processor in the multiprocessor system is in at least one of a running state and a waiting state; and transferring at least one of the instructions to execution stages of a processing pipeline of the second processor and bypassing at least one earlier stage of the processing pipeline of the second processor, when the second processor is in the waiting state.

Owner:SONY COMPUTER ENTERTAINMENT INC

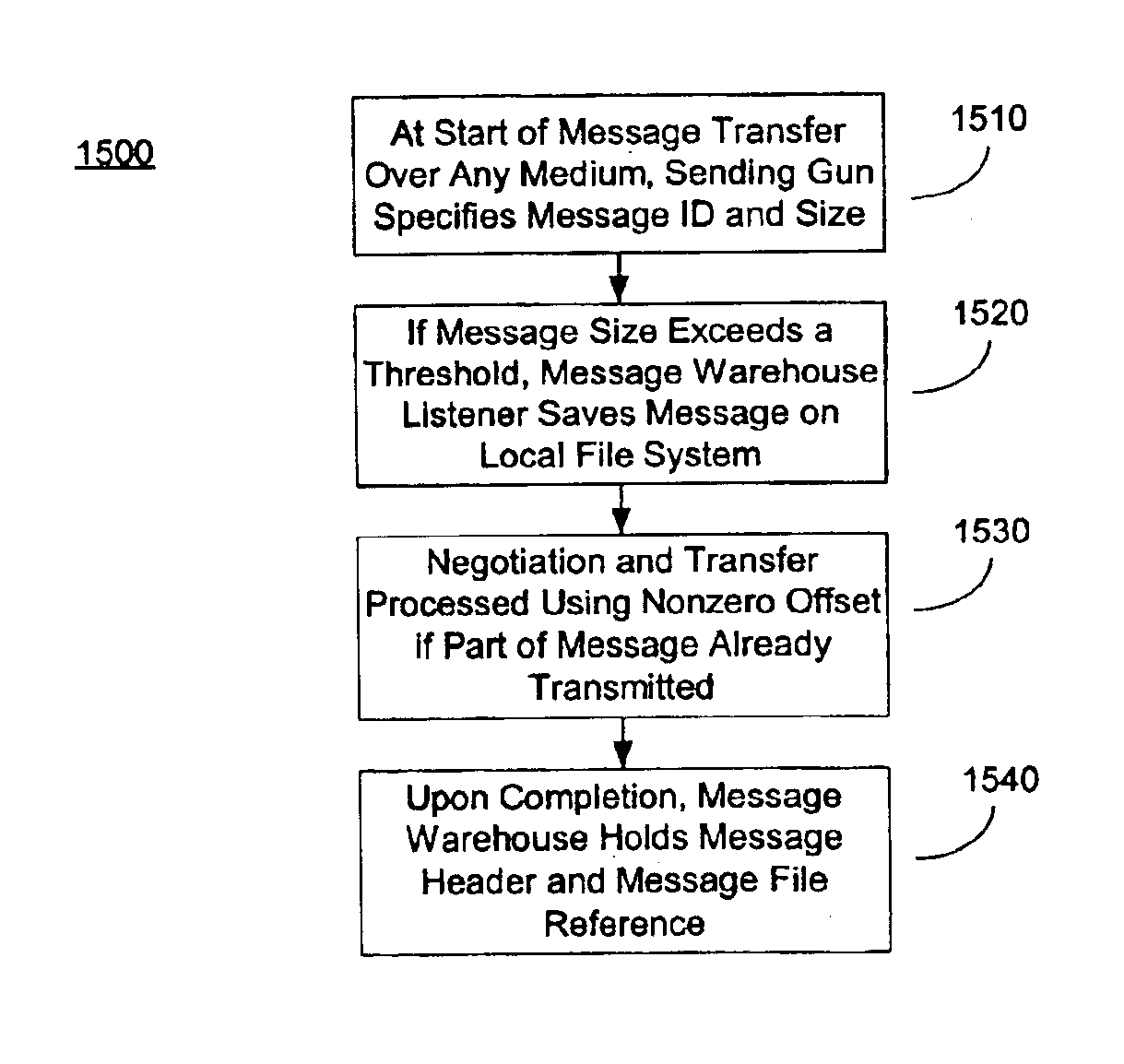

System and Method for Secure Message-Oriented Network Communications

Inactive | US20030202663A1 | Minimize threat | Facilitate and control access | Key distribution for secure communication | Digital data processing details | Communications system | Security Measure

The present invention provides a message-oriented middleware solution for securely transmitting messages and files across public networks, unencumbered by intervening network barriers implemented as security measures. It also provides a dynamic, dedicated, application-level VPN solution that is facilitated by the message-oriented middleware. Standard encryption algorithms are used to minimize the threat of eavesdropping, and an Open-Pull Protocol (OPP) allows target nodes to pull and verify the credentials of requestors before any data is passed. Messaging can be segregated into multiple distinct missions that all share the same nodes. The security network's architecture is built to resist and automatically recover from poor, slow, and degrading communications channels. Peers are identifiable by hardware appliance, software agent, and personally identifiable sessions. The security network provides a dynamic, private transport for sensitive data over existing non-secure networks without the overhead and limited security associated with traditional VPN solutions.

Owner:THREATGUARD

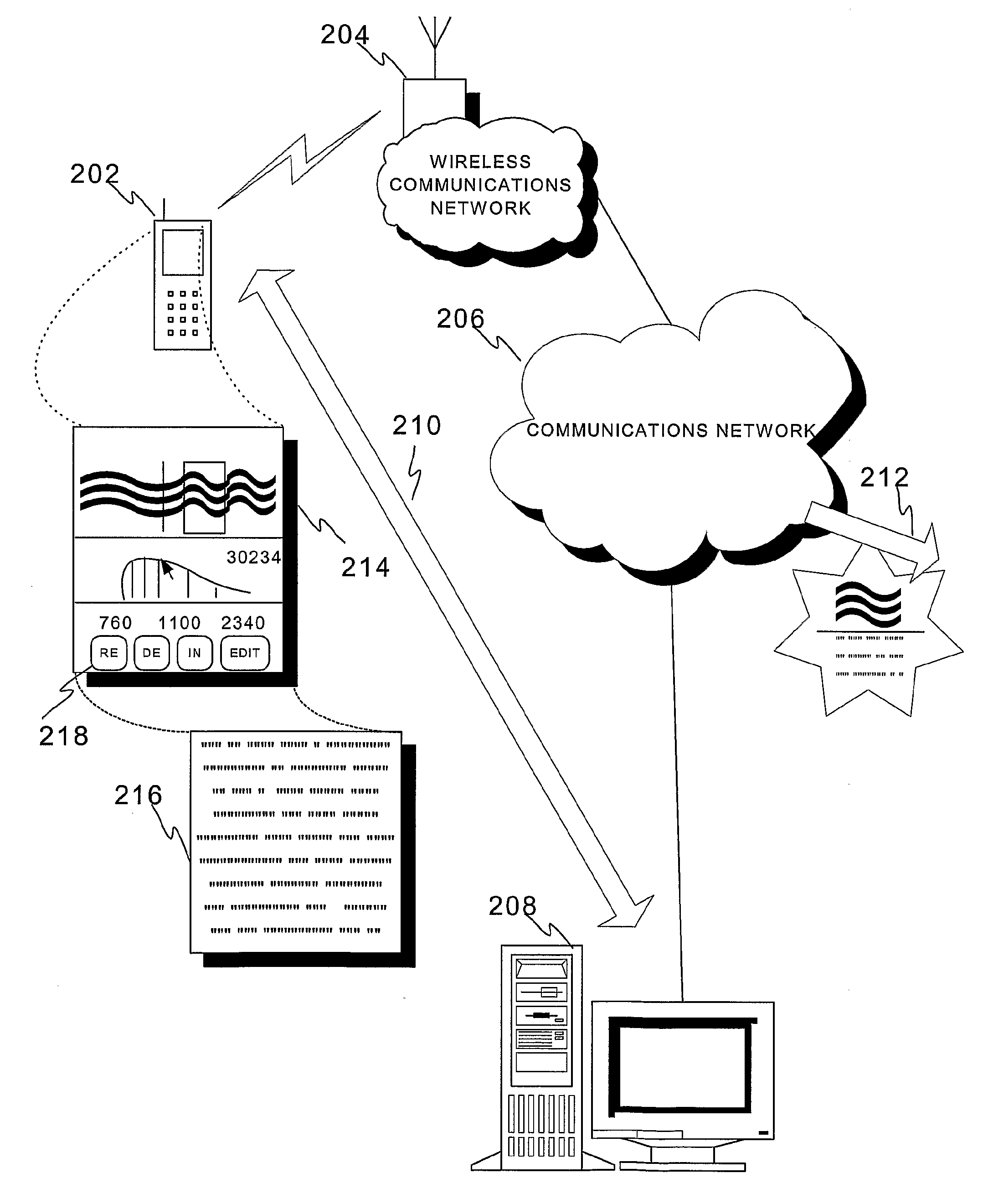

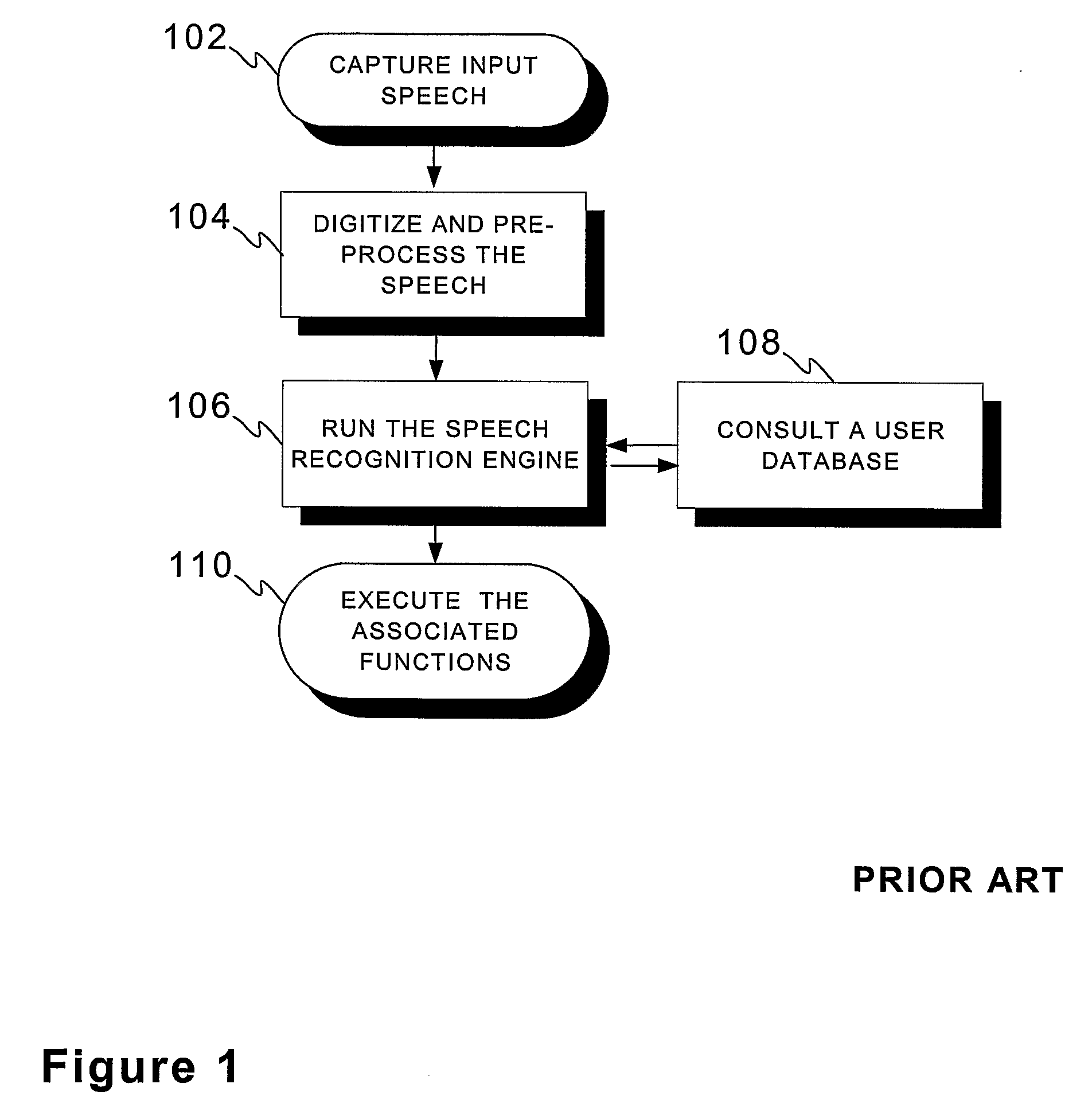

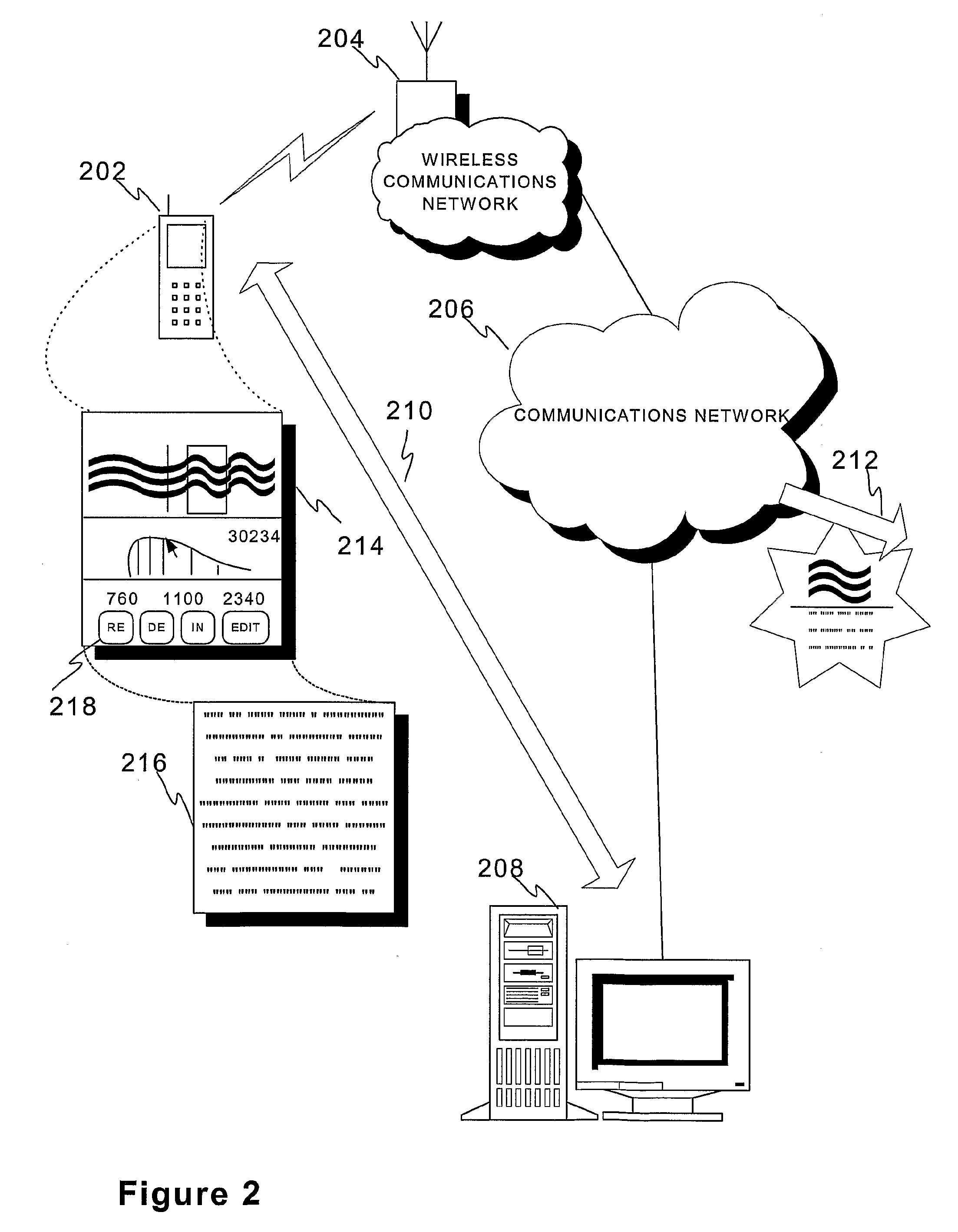

Method, and a device for converting speech by replacing inarticulate portions of the speech before the conversion

Inactive | US9123343B2 | Increase flexibility | Easy loading | Speech recognition | Speech identification | Mobile device

An arrangement for converting speech into text comprises a mobile device (202) and a server entity (208) configured to perform the conversion and additional optional processes in co-operation. The user of the mobile device (202) may locally edit the speech signal prior to or between the execution of the actual speech recognition tasks by replacing an inarticulate portion of the speech signal with a newly recorded version of that portion. Task sharing details can be negotiated dynamically based on a number of parameters.

Owner:DICTA DIRECT LLC

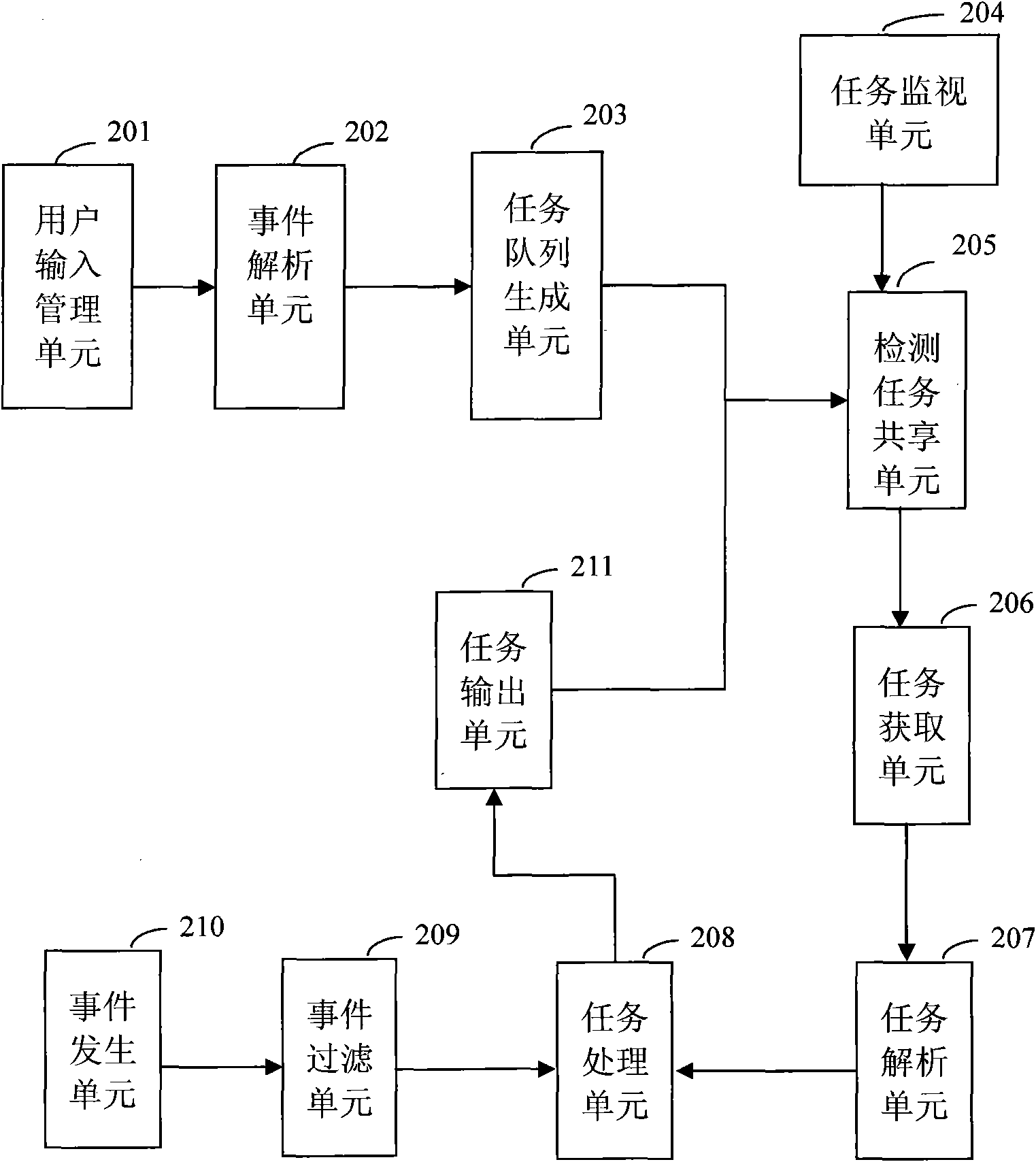

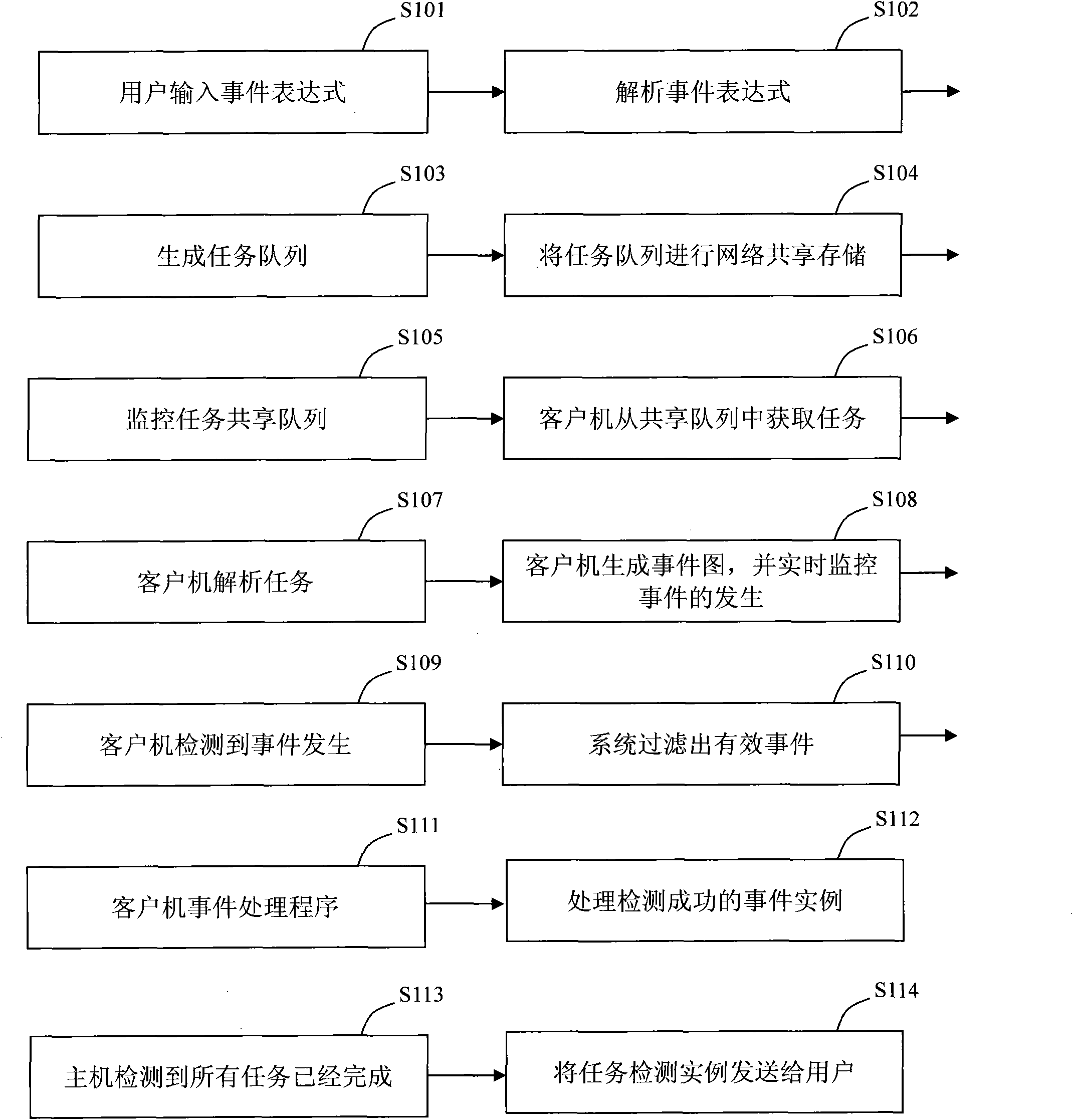

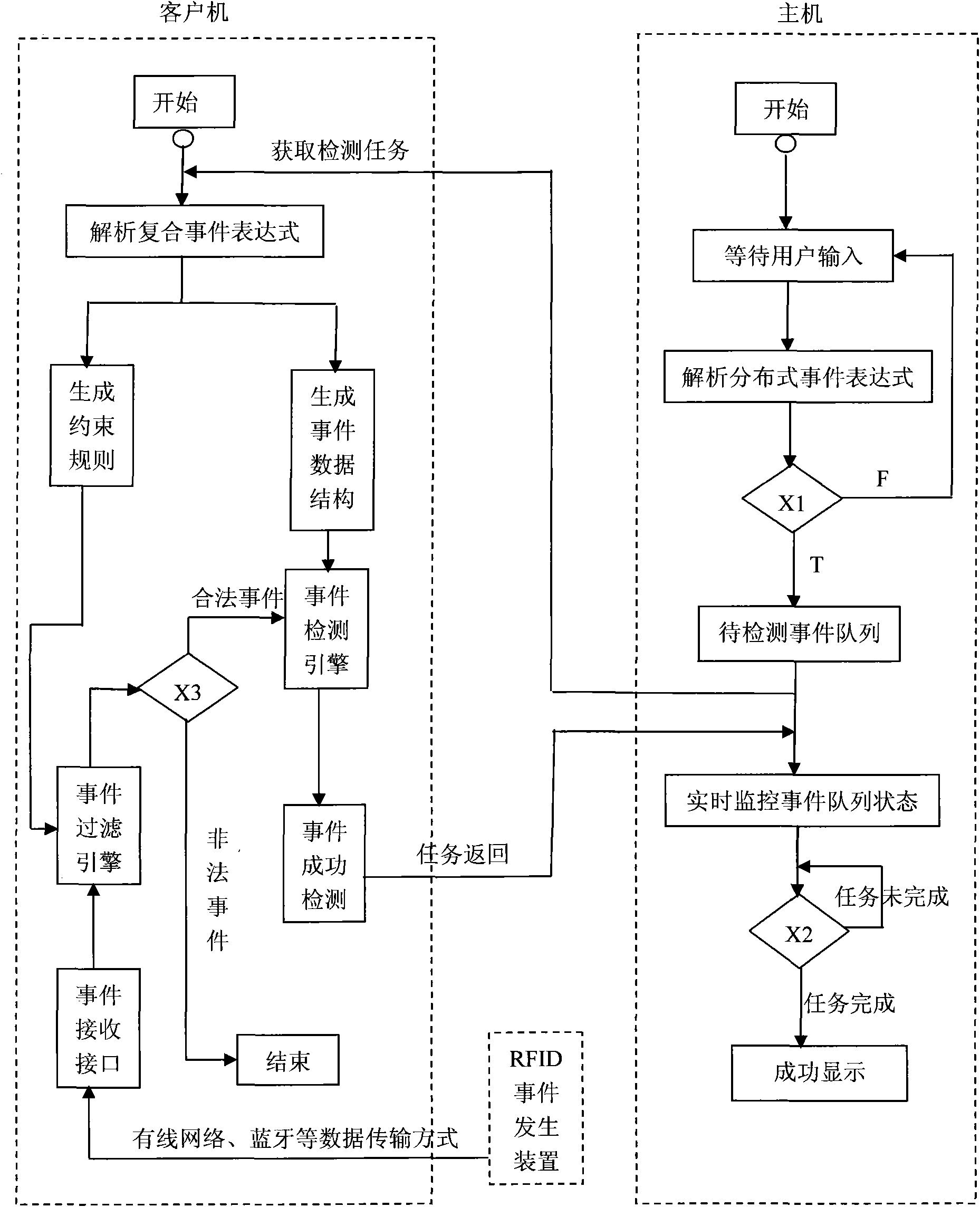

System and method for distributed complex event detection under RFID (Radio Frequency Identification Devices) equipment network environment

Inactive | CN101883098A | Easy access | Flexible deployment | Sensing record carriers | Data switching networks | User input | Client-side

The invention discloses a system and a method for distributed complex event detection under an RFID (Radio Frequency Identification Devices) equipment network environment. The system comprises a server side, a client side, an RFID recognizer and a label which can be recognized by the recognizer, wherein the server side comprises a user input processing unit, an event analyzing unit, a task queue generating unit, a detection task sharing unit and a task monitoring unit; the client side comprises a task acquisition unit, a task analyzing unit, an event generating unit, an event filtering unit, a task processing unit and a task output unit. Compound event detection under the RFID equipment network environment is realized through event filtering, compound event detection and the event processing of an event aggregate function. The system supports distributed environments, multiple event construction symbols, non-spontaneous events and aggregated events.

Owner:DALIAN MARITIME UNIVERSITY

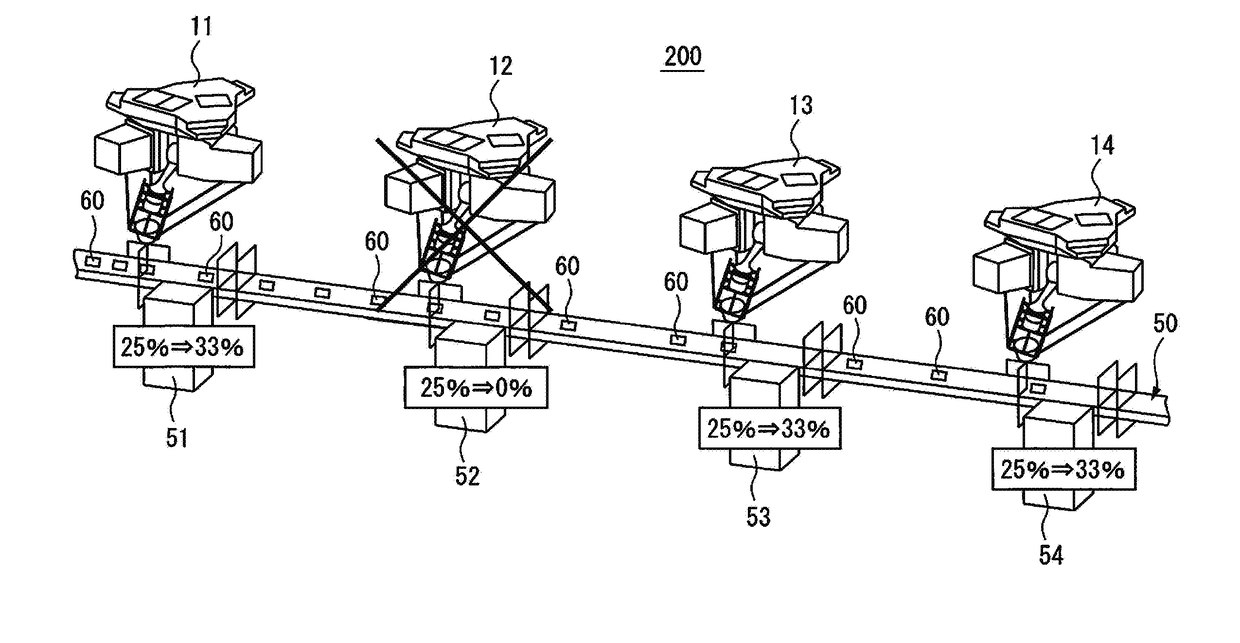

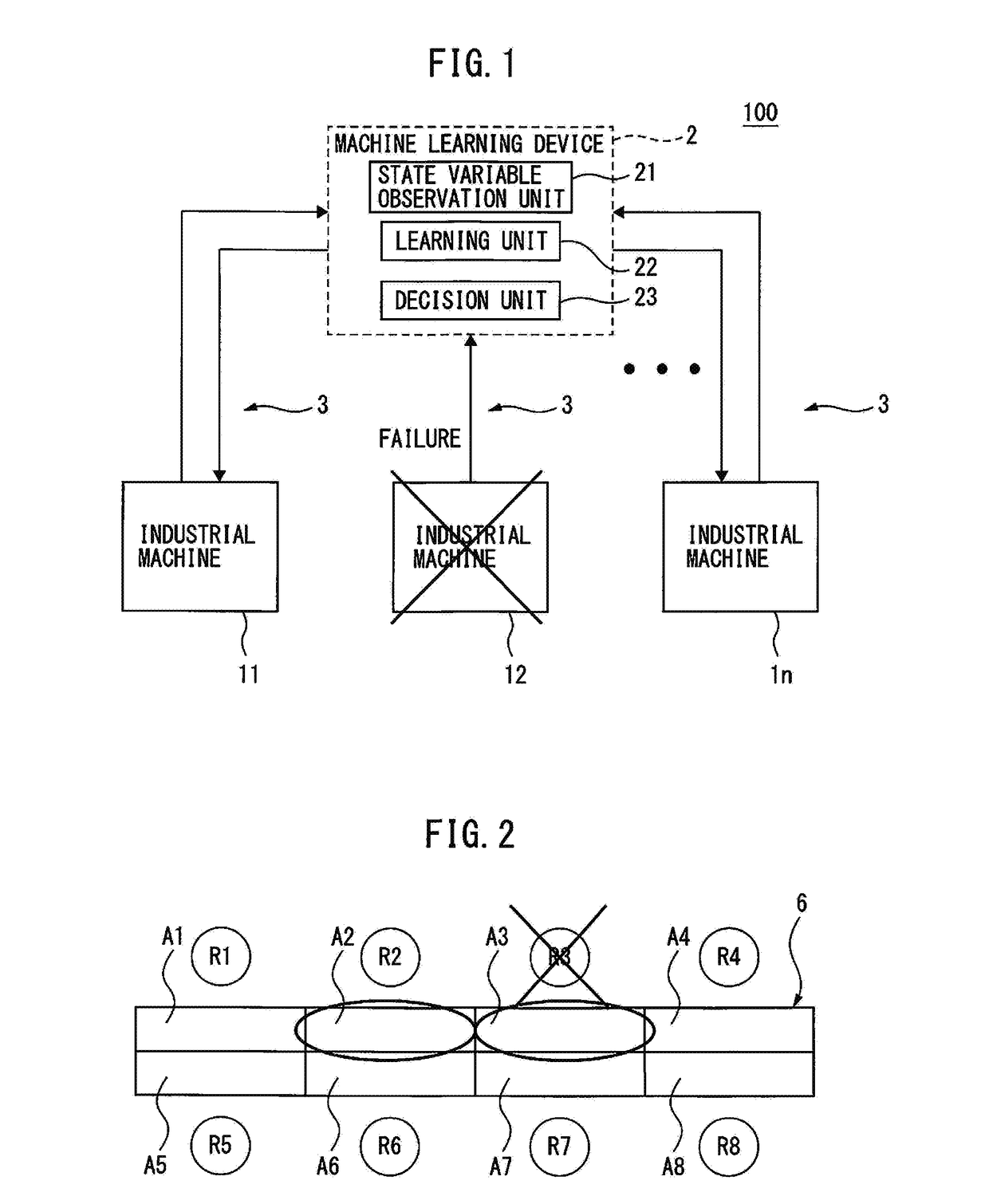

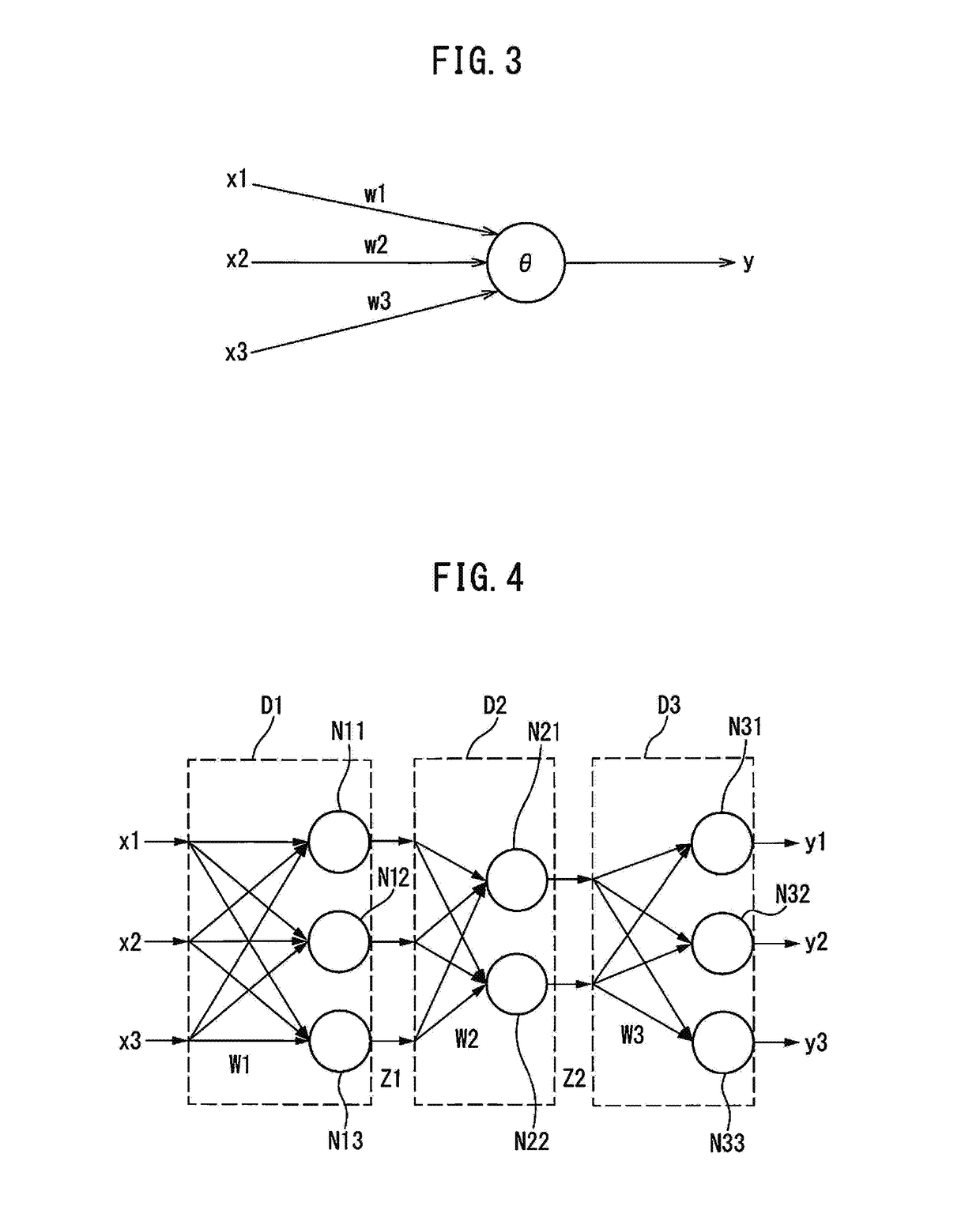

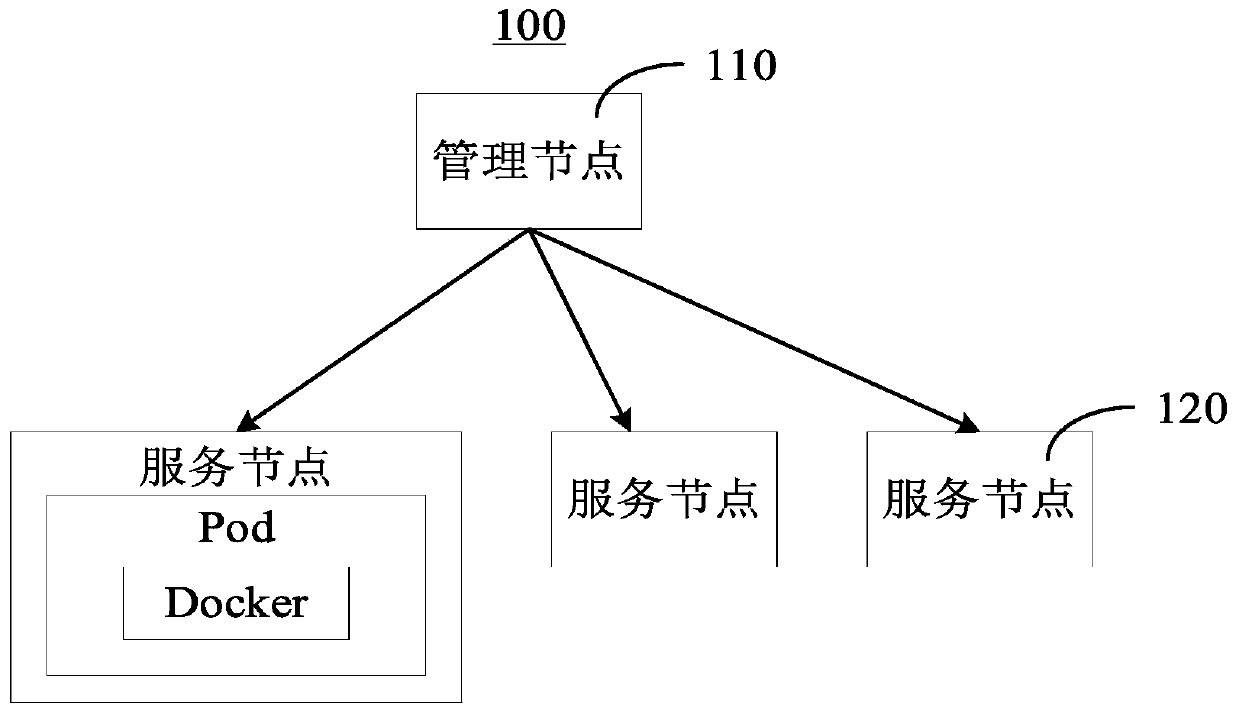

Machine learning device, industrial machine cell, manufacturing system, and machine learning method for learning task sharing among plurality of industrial machines

Active | US20170243135A1 | Volume maximization | Maintain volume | Artificial life | Machine learning | Learning unit | State variable

A machine learning device, which performs a task using a plurality of industrial machines and learns task sharing for the plurality of industrial machines, includes a state variable observation unit which observes state variables of the plurality of industrial machines; and a learning unit which learns task sharing for the plurality of industrial machines, on the basis of the state variables observed by the state variable observation unit.

Owner:FANUC LTD

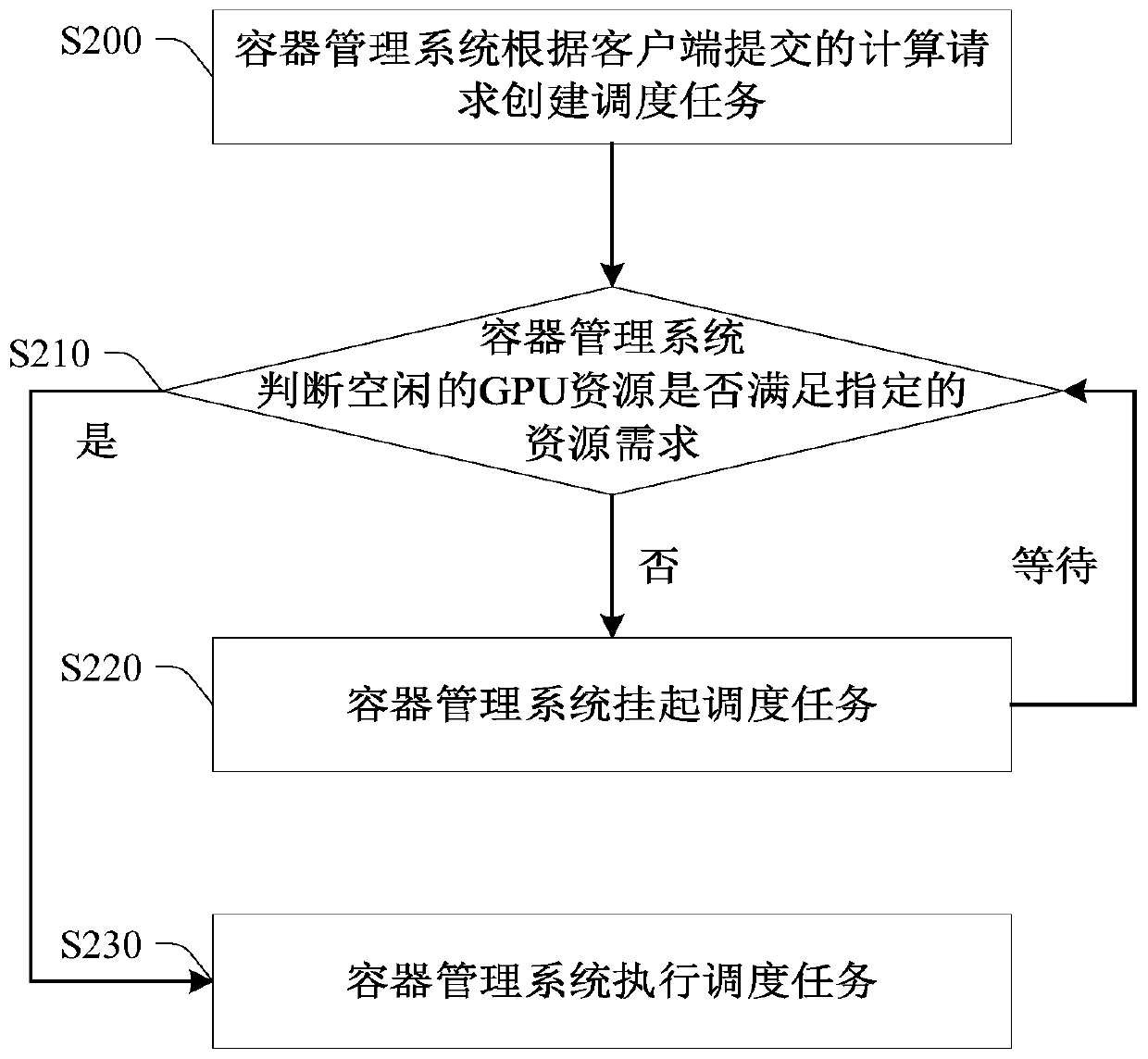

GPU resource using method and device and storage medium

Active | CN110888743A | Program initiation/switching | Resource allocation | Performance computing | Parallel computing

The invention relates to the technical field of high-performance computing, and provides a GPU resource using method and device and a storage medium. The GPU resource using method comprises the steps that a container management system creates a scheduling task according to a calculation request submitted by a client; the container management system judges whether the idle GPU resources in the cluster meet the resource requirements specified in the instruction for creating the GPU container; if the requirements are not met, the container management system suspends the scheduling task and executes it only once the requirements are met; and while the scheduling task is suspended, the container management system does not create the GPU container. In this method, the container management system queues the scheduling tasks according to the usage of the GPU resources; therefore, each computing task can use its GPU resources exclusively, the GPU resources used by a computing task are not shared with other computing tasks, and the execution progress and computing result of the computing task proceed according to plan and cannot be affected by other computing tasks.

Owner:中科曙光国际信息产业有限公司
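
The queueing behavior described in this abstract can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the patented implementation: the class `GpuScheduler`, its method names, and the strict first-in-first-out ordering are all hypothetical, and "creating a container" is reduced to recording the task as running.

```python
from collections import deque


class GpuScheduler:
    """Hypothetical sketch: a task starts only when enough GPUs are idle."""

    def __init__(self, total_gpus):
        self.idle_gpus = total_gpus
        self.pending = deque()  # suspended scheduling tasks (FIFO order is an assumption)
        self.running = {}       # task name -> number of GPUs held exclusively

    def submit(self, name, gpus_needed):
        """Create a scheduling task for a request and try to start it."""
        self.pending.append((name, gpus_needed))
        self._dispatch()

    def finish(self, name):
        """Release the GPUs of a finished task and retry suspended tasks."""
        self.idle_gpus += self.running.pop(name)
        self._dispatch()

    def _dispatch(self):
        # Start tasks from the head of the queue while the requirement is met.
        # A task that does not fit stays suspended and nothing is created for it.
        while self.pending and self.pending[0][1] <= self.idle_gpus:
            name, gpus_needed = self.pending.popleft()
            self.idle_gpus -= gpus_needed
            self.running[name] = gpus_needed
```

With four GPUs, submitting a three-GPU task and then a two-GPU task leaves the second one suspended until the first calls `finish`, so no two tasks ever share a GPU.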

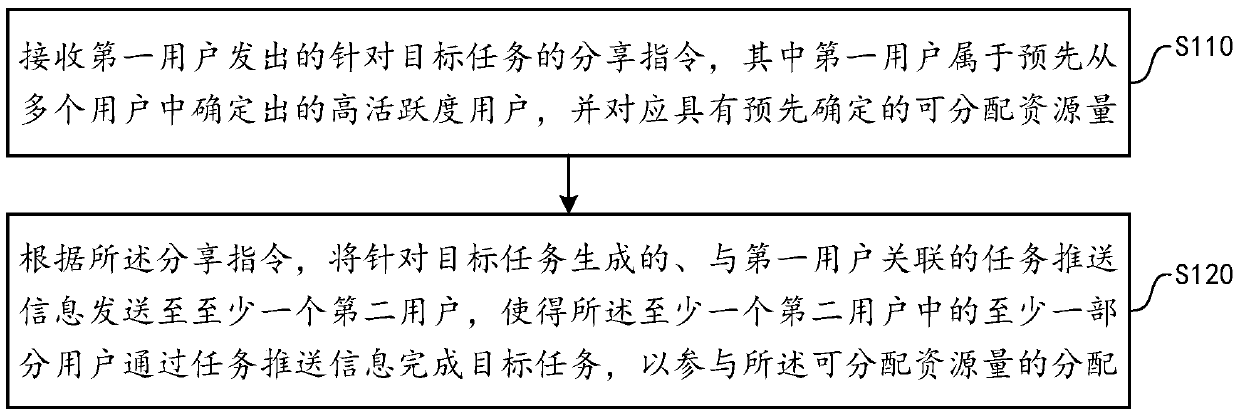

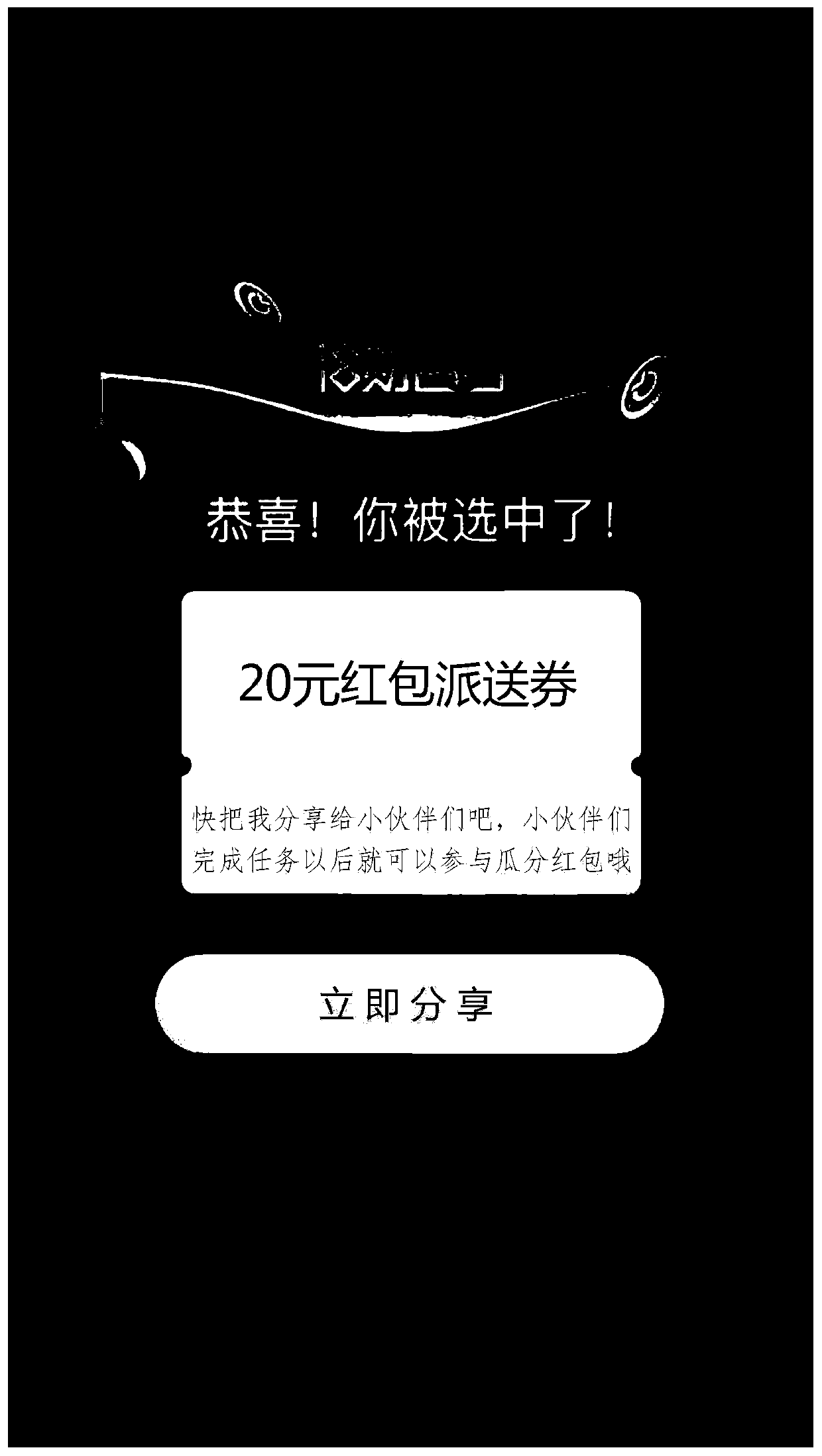

Target task sharing method and device

Pending | CN110020888A | Improve experience | Improve satisfaction | Marketing | High activity | Human–computer interaction

The embodiment of the invention provides a target task sharing method, which comprises the following steps: firstly, receiving a sharing instruction sent by a first user for a target task, the first user belonging to a high-activity user determined from a plurality of users in advance and correspondingly having a predetermined allocatable resource quantity; then, according to the sharing instruction, sending task push information generated for the target task and associated with the first user to at least one second user, so that at least one part of the at least one second user completes the target task through the task push information to participate in the allocation of the allocatable resource amount.

Owner:ADVANCED NEW TECH CO LTD

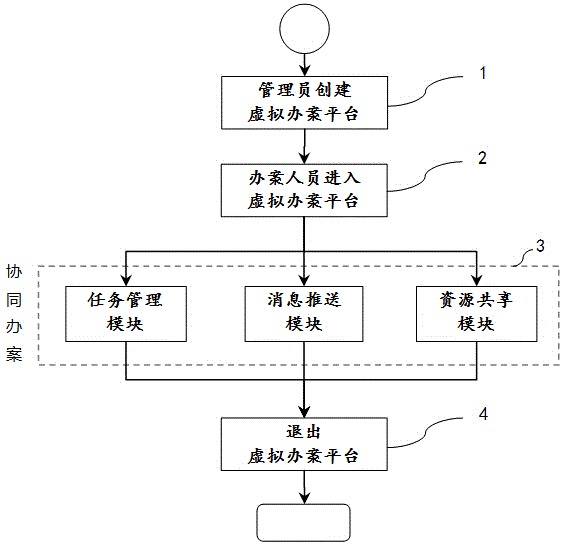

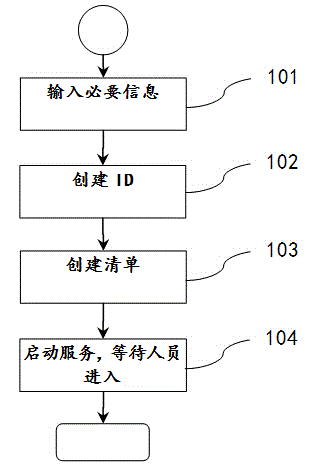

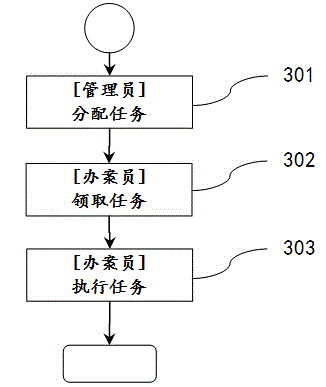

Collaborative case handling method and system thereof

Inactive | CN104463475A | Improve collaborative case handling capabilities | Effective strike | Resources | Information sharing | Resource utilization

The invention discloses a collaborative case handling method. The method includes the following steps that firstly, a manager establishes a virtual case handling platform; secondly, a case handling person logs into the virtual case handling platform through the Internet; thirdly, resource allocation, task sharing, information sharing and collaborative case handling are conducted; fourthly, the person quits the virtual case handling platform after case handling is finished. The invention further discloses a system based on the method. The system comprises a server, a command office, a technology office, a conference room, a case handling room and an evidence obtaining office. The server is connected with devices in the offices. The case handling platform based on the network can have the communication function, the technical assistance function, the report function, the command function, the data sharing function and other functions, the collaborative case handling capacity of all local public security organizations is improved, the work efficiency and interoperability are improved, the case progress can be rapidly known, and the resource utilization rate is increased.

Owner:XLY SALVATIONDATA TECHNOLOGY INC

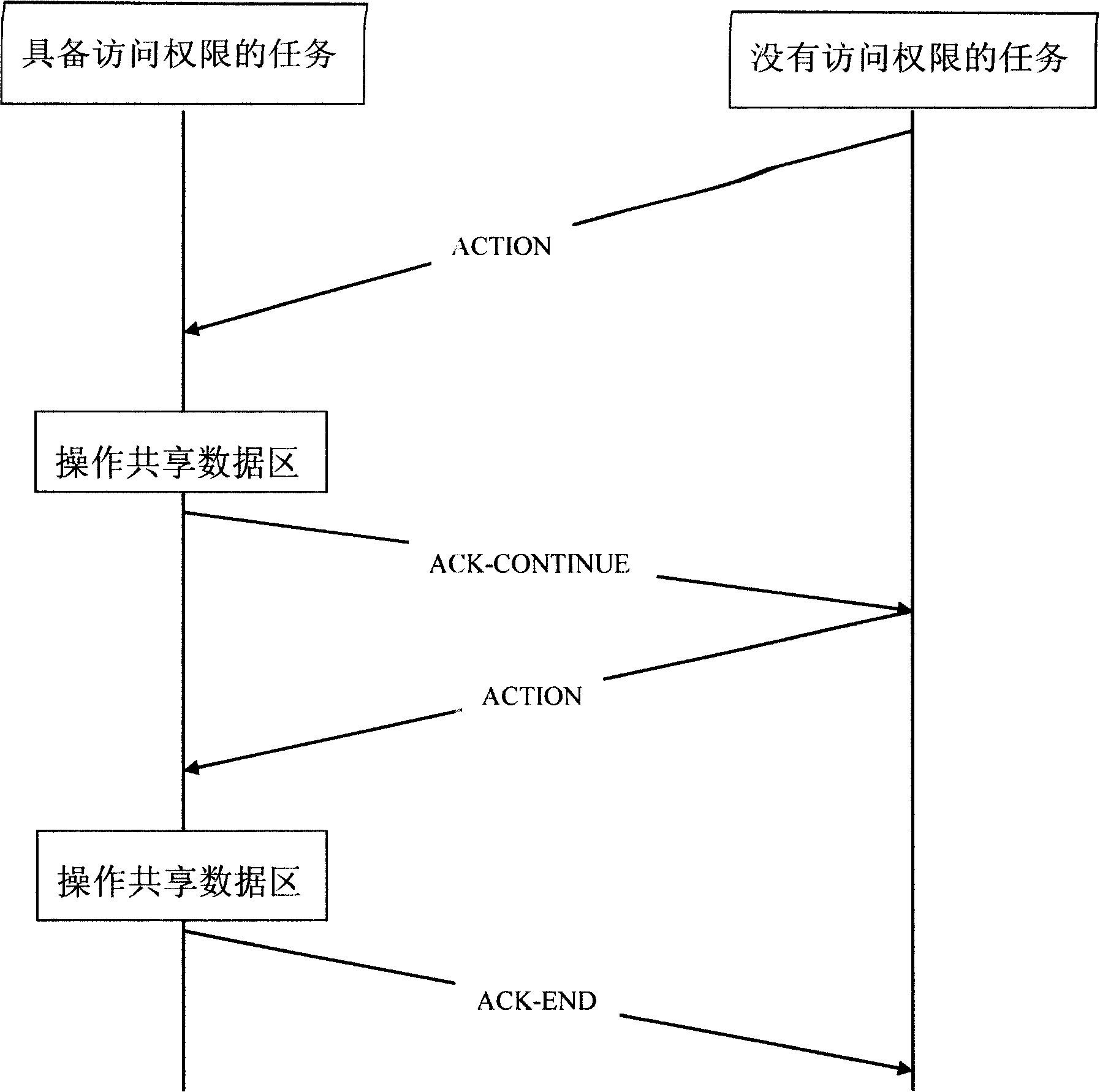

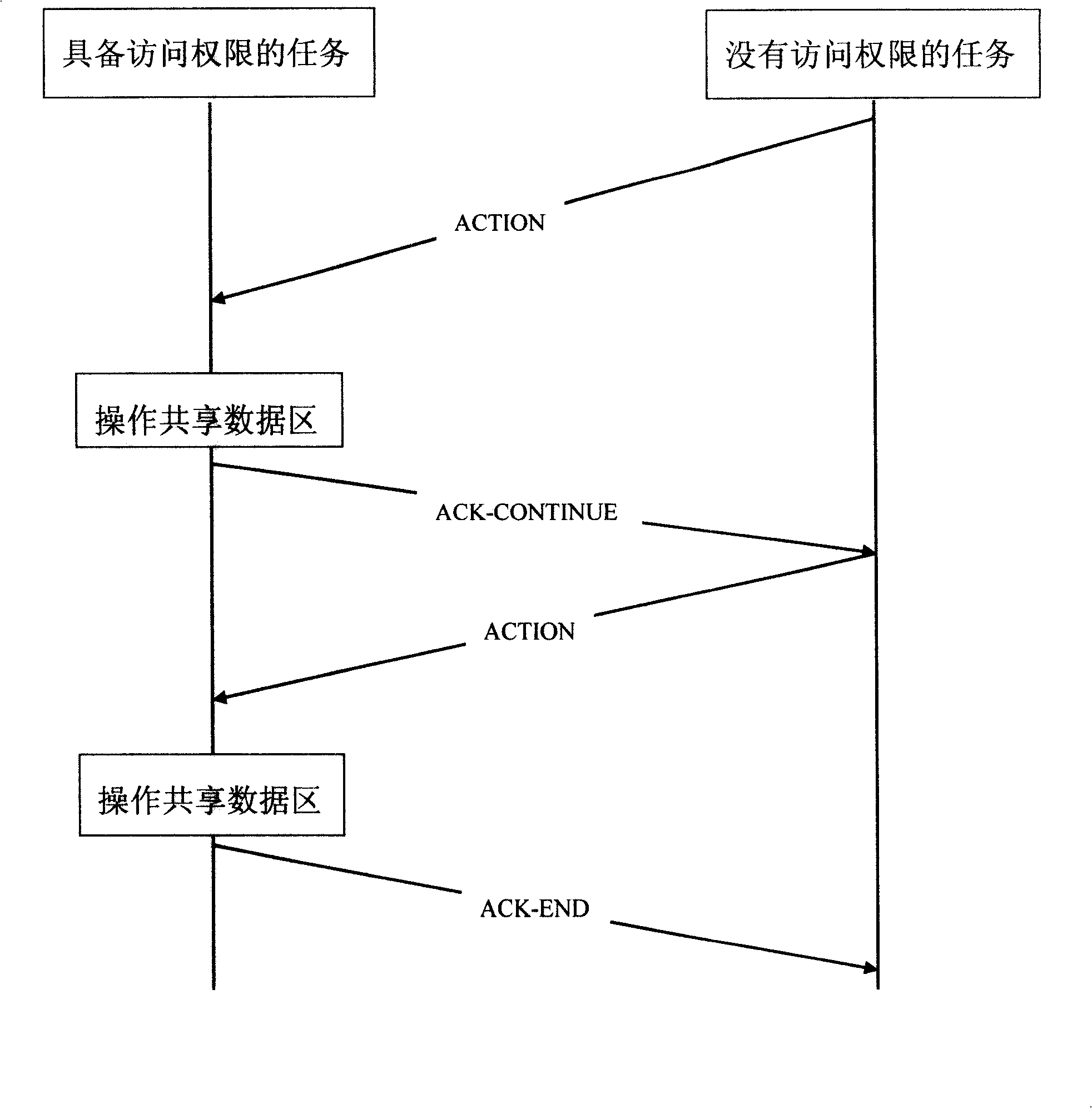

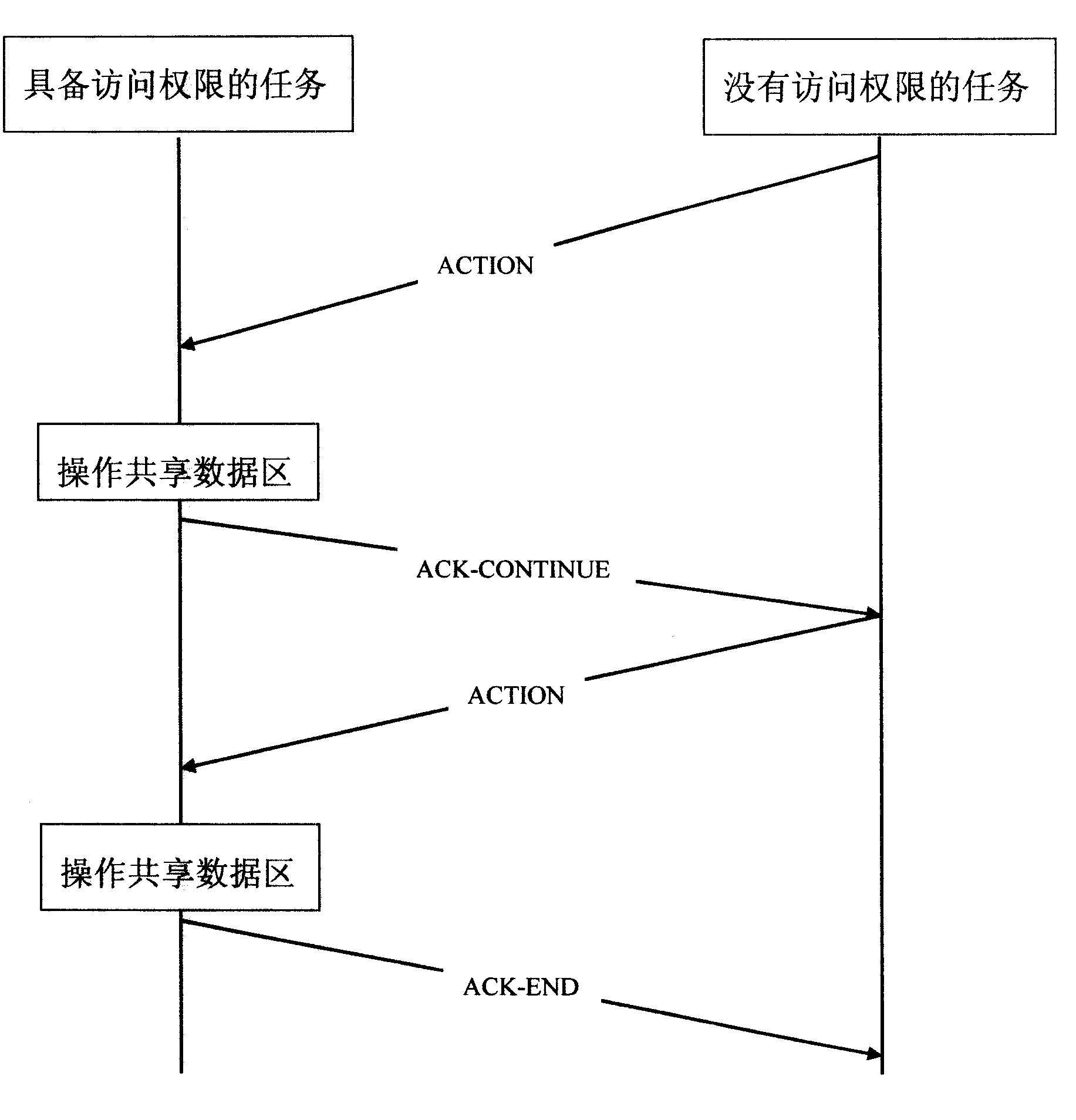

Method of implementing inter-task sharing data

Inactive | CN101163094A | Easy access | Safe handling | Error prevention/detection by using return channel | Digital computer details | Protection mechanism | Data sharing

The invention discloses a method for realizing data sharing among tasks. It solves the problems that multiple tasks accessing a shared data area easily deadlock and that the resulting faults are hard to localize. In the method, a task without permission to access the shared data area sends an ACTION message to the task that has access permission; after receiving the message, the task with access permission runs the corresponding program and operates on the shared data area according to the content of the message; when the run is over, the task with access permission sends a response message, which contains the result of the run, to the sender of the ACTION message. The shared data area does not need to be protected, and its data can be accessed safely among tasks. Locks and other protection mechanisms need not be considered, so the code is simplified. Because no locks are used, deadlock cannot occur, so the problem of deadlock is completely avoided.

Owner:杭州戈虎达科技有限公司
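
The ACTION/response exchange in this abstract resembles a single-owner message-passing pattern, which might look roughly like the Python sketch below. The function names, the message tuple layout, and the `set`/`get`/`stop` actions are assumptions made for illustration. Note also that Python's `queue.Queue` is synchronized internally; the point of the sketch is only that the shared dictionary itself is touched by one task and needs no lock of its own.

```python
import queue
import threading


def owner_task(inbox, shared):
    """The only task that touches `shared`; others send it ACTION messages."""
    while True:
        action, key, value, reply = inbox.get()
        if action == "stop":
            reply.put("stopped")
            return
        if action == "set":
            shared[key] = value
            reply.put("ok")
        elif action == "get":
            reply.put(shared.get(key))
        else:
            reply.put("unknown action")


def request(inbox, action, key=None, value=None):
    """Send an ACTION message and block until the response message arrives."""
    reply = queue.Queue(maxsize=1)
    inbox.put((action, key, value, reply))
    return reply.get()
```

A caller starts `owner_task` in its own thread with `threading.Thread(target=owner_task, args=(inbox, shared))` and then uses `request(inbox, "set", "x", 1)` instead of writing to `shared` directly.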

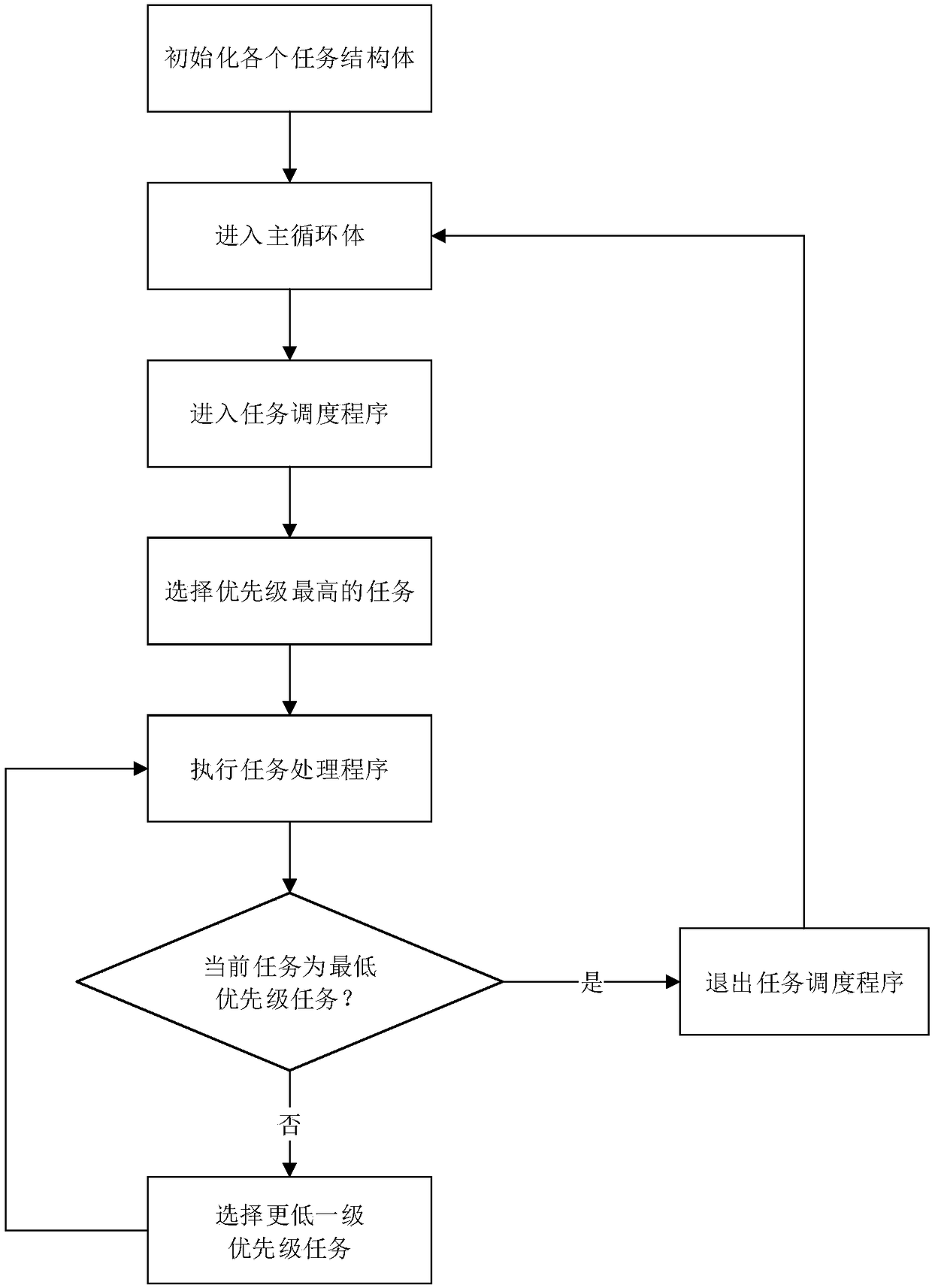

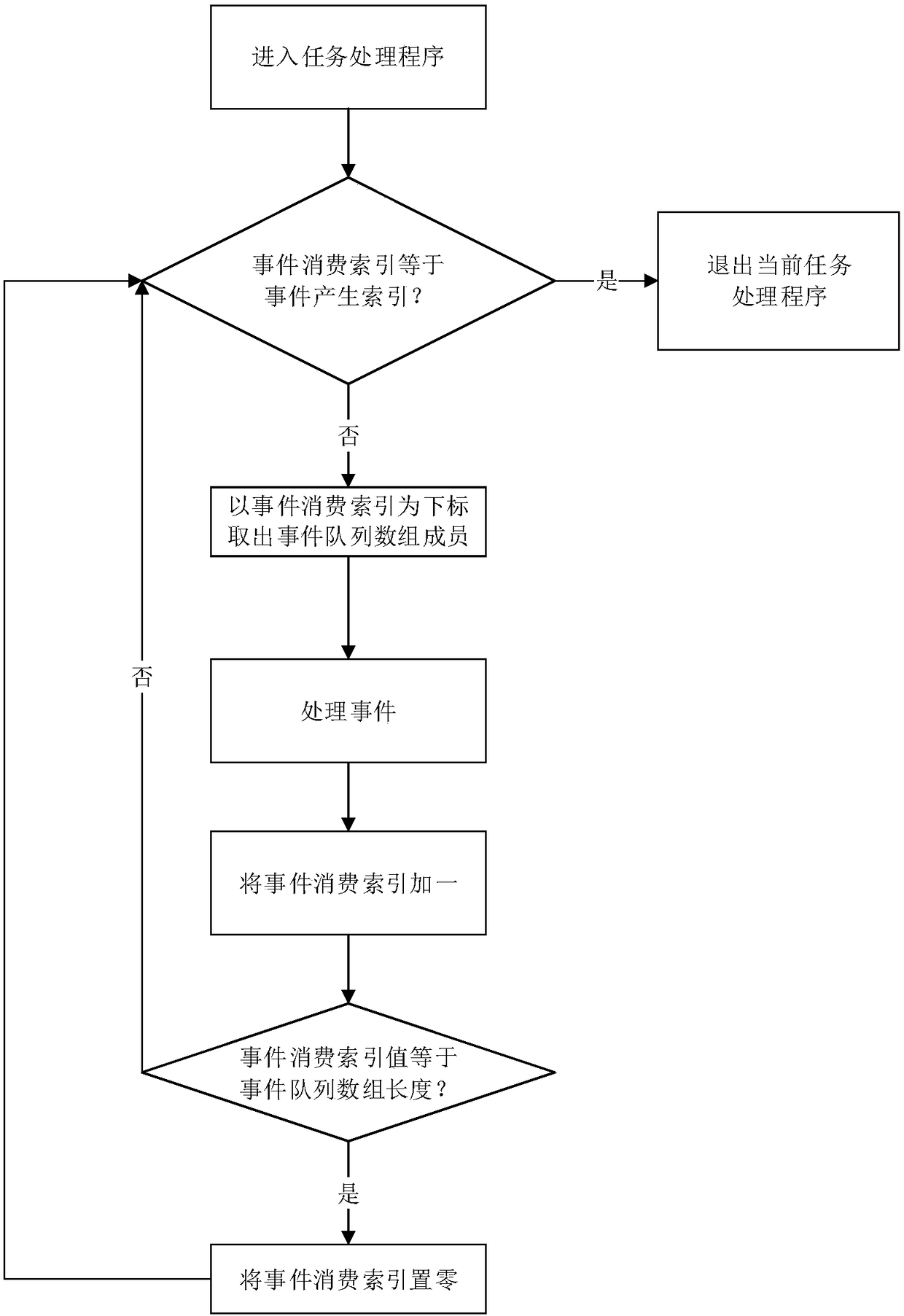

Task scheduling method and task scheduling device for embedded software

Active | CN108536527A | Quality improvement | Reduce coupling | Program initiation/switching | Interprogram communication | Operational system | Random access memory

The invention discloses a task scheduling method and a task scheduling device for embedded software. The task scheduling method includes partitioning a plurality of logic tasks according to the particular application. The logic tasks share one system stack, each logic task has its own independent event queue and task processing program, and the tasks communicate with one another by passing events. The method and device avoid the system RAM (random access memory) and MCU (microcontroller unit) computation resources consumed by loading an operating system, while still implementing a task scheduling mechanism similar to that of an operating system.

Owner:SHANDONG ACAD OF SCI INST OF AUTOMATION
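
As a rough illustration of the scheme in this abstract, the sketch below gives each logic task its own event queue and handler and runs every handler to completion from one loop, so all handlers execute on the same call stack. It is written in Python for readability rather than as embedded code, and the names `LogicTask`, `Scheduler`, `post`, and the round-robin polling order are assumptions, not details taken from the patent.

```python
from collections import deque


class LogicTask:
    """A logic task: an independent event queue plus a processing routine."""

    def __init__(self, name, handler):
        self.name = name
        self.events = deque()
        self.handler = handler


class Scheduler:
    def __init__(self):
        self.tasks = {}

    def add_task(self, name, handler):
        self.tasks[name] = LogicTask(name, handler)

    def post(self, task_name, event):
        """Tasks communicate only by posting events to each other's queues."""
        self.tasks[task_name].events.append(event)

    def run(self):
        # Each handler runs to completion before the next one starts, so a
        # single stack is enough. Round-robin polling is an assumed policy.
        progressed = True
        while progressed:
            progressed = False
            for task in self.tasks.values():
                if task.events:
                    task.handler(self, task.events.popleft())
                    progressed = True
```

For example, a hypothetical `sensor` task can forward a processed reading with `scheduler.post("logger", value)`, and `run()` returns once every queue is empty.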

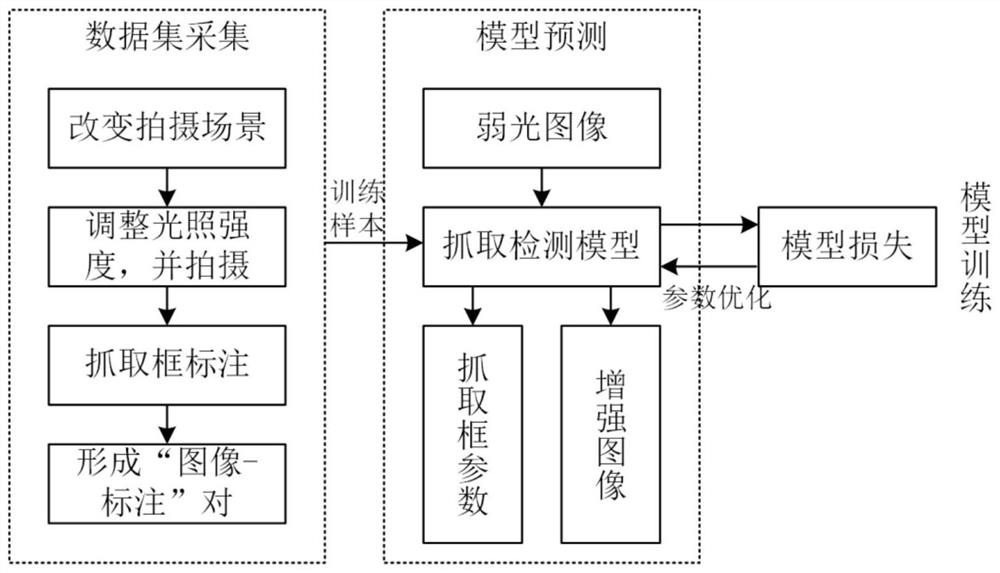

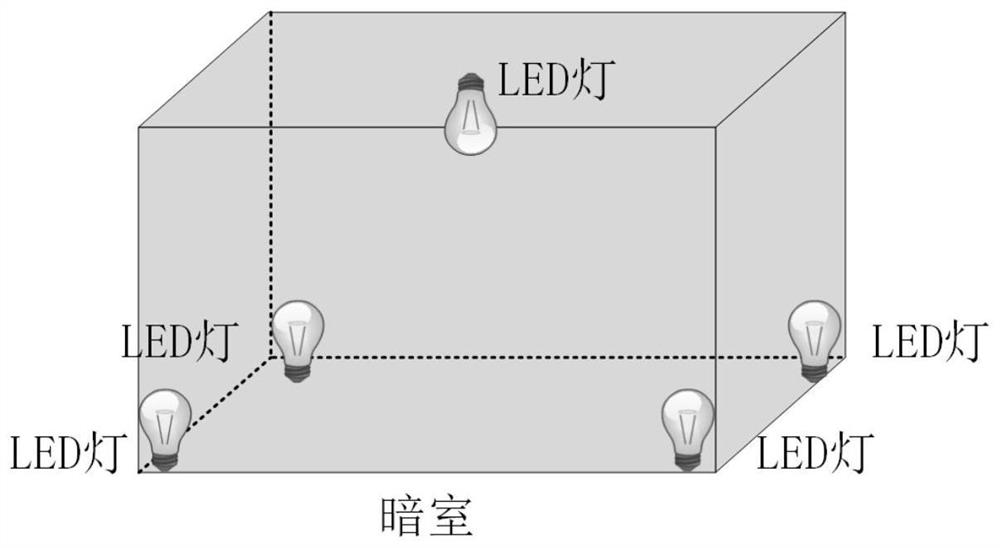

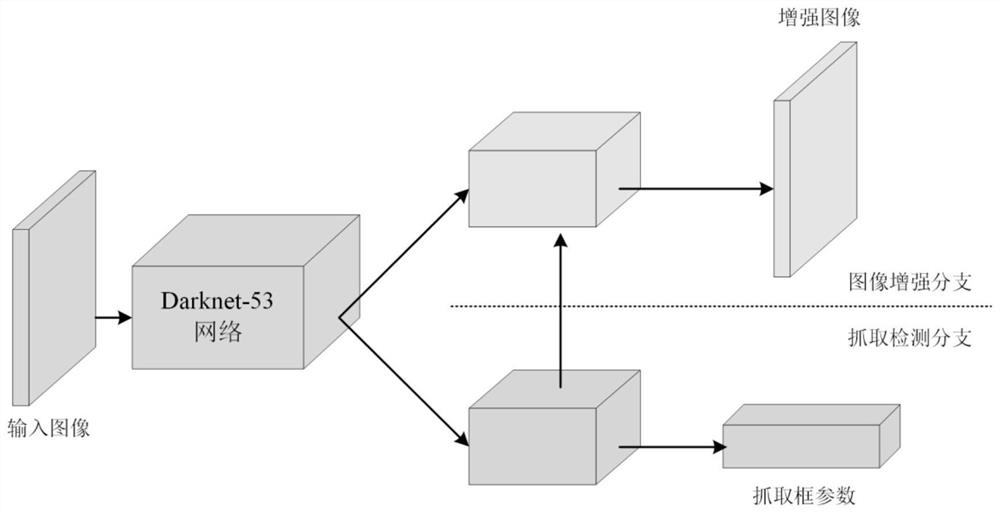

Robot weak light environment grabbing detection method based on multi-task sharing network

Active | CN112949452A | Reduce energy consumption | Lower control costs | Programme-controlled manipulator | Character and pattern recognition | Data set | Model extraction

The invention discloses a robot weak light environment grabbing detection method based on a multi-task sharing network, and belongs to the technical field of computer vision and intelligent robots. According to the method, strict matching of shot images in a weak light environment and a normal light environment is ensured through image acquisition, a corresponding data set d is constructed, then Darknet-53 is adopted as a backbone network to construct a weak light environment capture detection model, multi-scale features with strong feature expression ability are extracted, and, through a parallel cascade capture detection module and an image enhancement module, capturing detection and weak light image enhancement tasks are realized respectively; training samples are randomly selected from the data set d, a weak light environment capture detection model is used for prediction, and when the change of a loss value within the iteration number iter is smaller than a threshold value t, the capture detection model G is converged, that is, training of the model G is completed; and an image Ilow shot in the weak light environment is input into the trained model G to obtain a predicted capture frame parameter and an enhanced image, and a capture detection task in the weak light environment is completed.

Owner:SHANXI UNIV

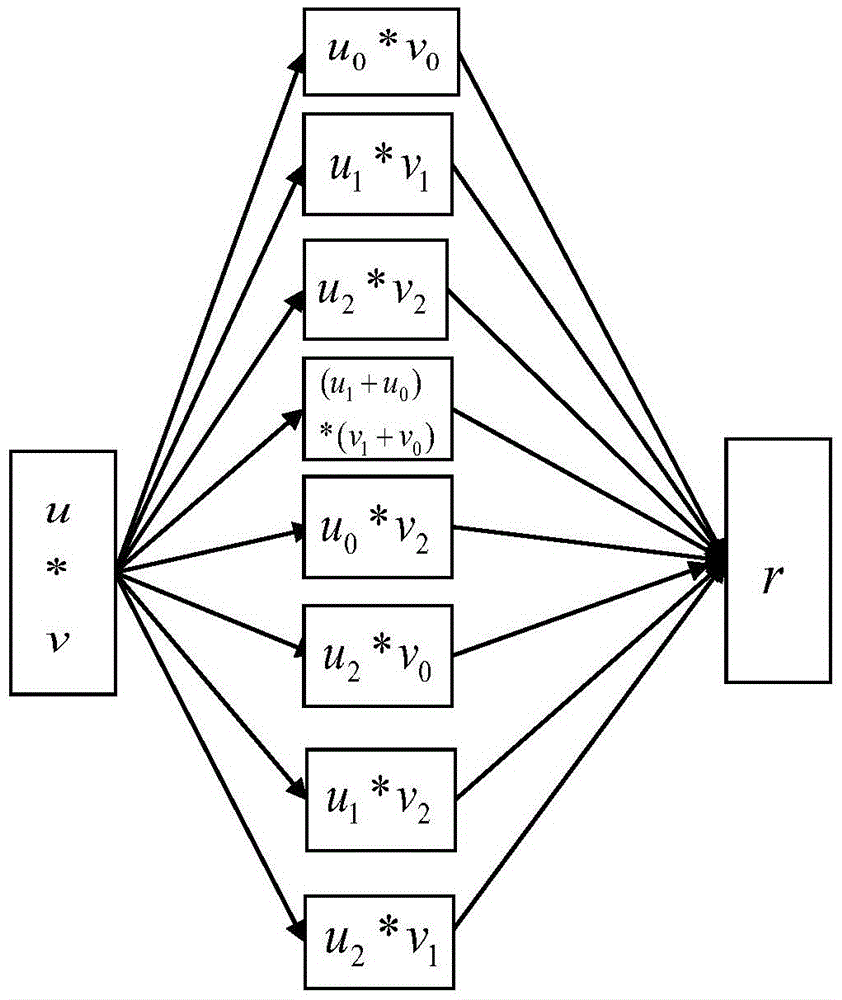

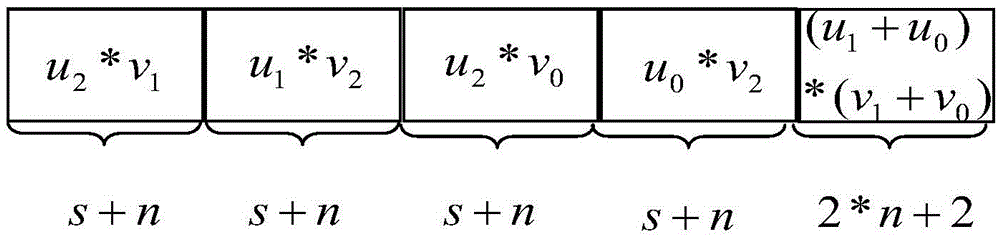

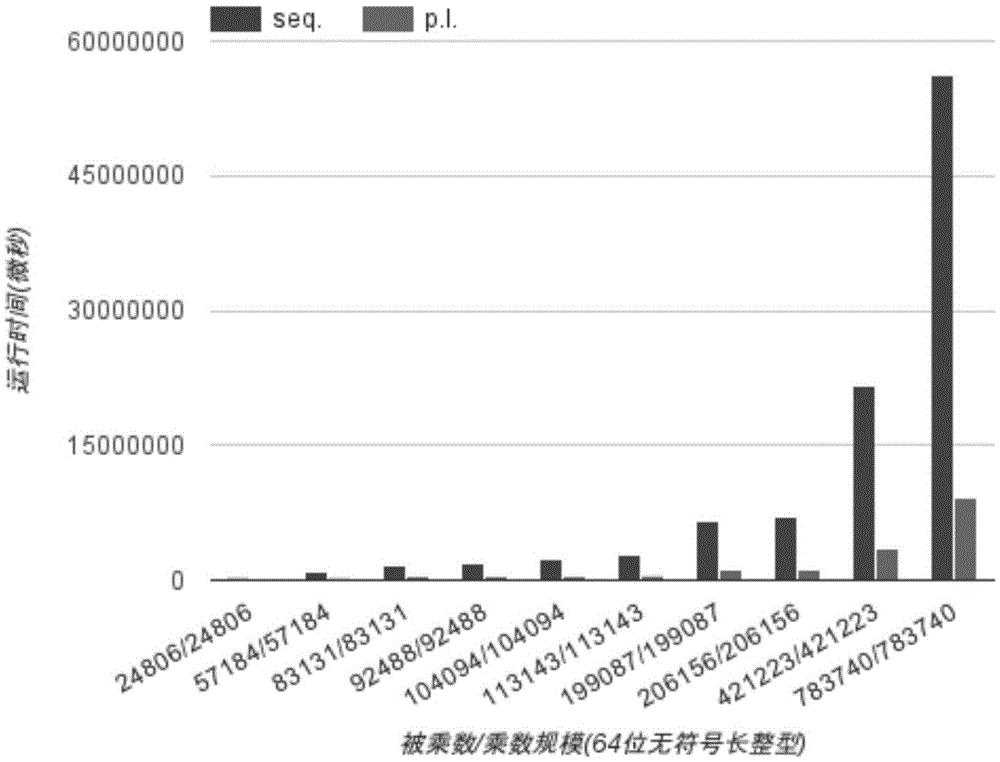

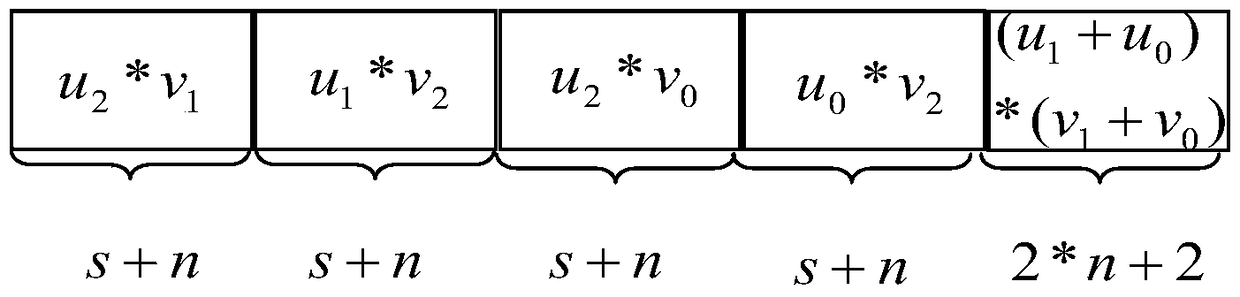

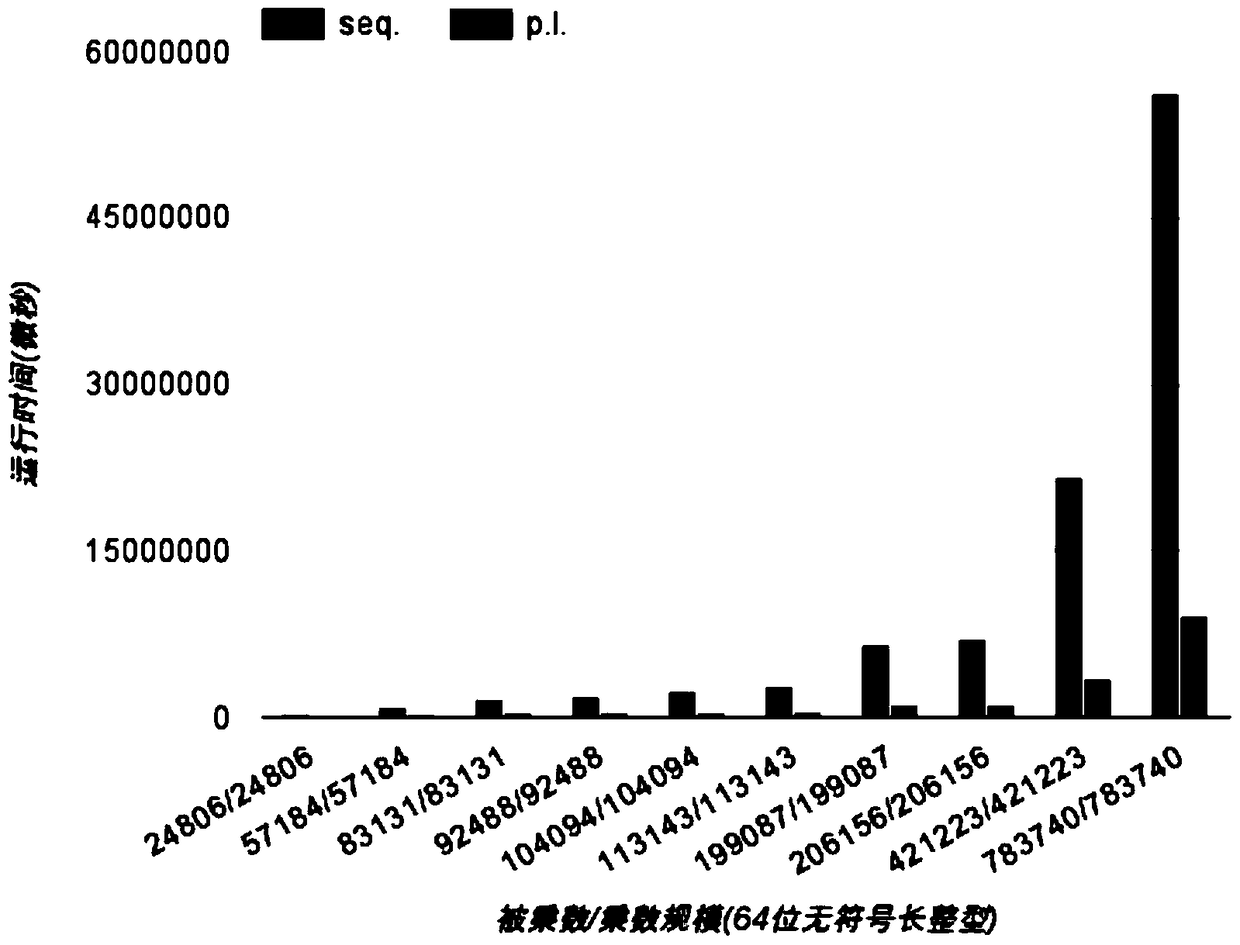

Parallel implementation method of big integer Karatsuba algorithm

Active | CN105653239A | Improve efficiency | Solving Data Dependency Issues | Computation using non-contact making devices | Theoretical computer science | Karatsuba algorithm

The invention discloses a parallel implementation method of a big-integer Karatsuba algorithm. Based on 64-bit unsigned long integer operations, the method solves the dependency problems in storing and calculating partial products through formula transformation, pointer operations, and the storage layout. The algorithm is parallelized with OpenMP multi-thread programming using a sections-based task sharing policy: first-layer parallelization with 8 threads is started in the recursive program to solve 8 partial products, each section being responsible for the calculation of one partial product, and after all the partial products are solved they are merged serially. The Karatsuba algorithm is thereby parallelized and its efficiency improved.

Owner:INST OF SOFTWARE - CHINESE ACAD OF SCI
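
The general shape of "parallel partial products, then a serial merge" can be illustrated with the textbook Karatsuba recursion. The Python sketch below is an assumption-laden stand-in, not the patented method: it uses the standard three partial products at the first layer rather than the eight described in the abstract, a thread pool instead of OpenMP sections, and Python's arbitrary-precision integers instead of 64-bit limbs. Because of Python's global interpreter lock it shows the structure only and should not be expected to run faster than a serial version. Inputs are assumed to be non-negative.

```python
from concurrent.futures import ThreadPoolExecutor


def split(x, half):
    """Split x into (high, low) parts around bit position `half`."""
    return x >> half, x & ((1 << half) - 1)


def karatsuba(x, y, cutoff_bits=64):
    """Serial textbook Karatsuba for non-negative integers."""
    if x.bit_length() <= cutoff_bits or y.bit_length() <= cutoff_bits:
        return x * y
    half = max(x.bit_length(), y.bit_length()) // 2
    x_hi, x_lo = split(x, half)
    y_hi, y_lo = split(y, half)
    low = karatsuba(x_lo, y_lo, cutoff_bits)
    high = karatsuba(x_hi, y_hi, cutoff_bits)
    mid = karatsuba(x_lo + x_hi, y_lo + y_hi, cutoff_bits) - low - high
    return (high << (2 * half)) + (mid << half) + low


def parallel_karatsuba(x, y):
    """First-layer parallelization: one worker per partial product."""
    half = max(x.bit_length(), y.bit_length()) // 2
    x_hi, x_lo = split(x, half)
    y_hi, y_lo = split(y, half)
    with ThreadPoolExecutor(max_workers=3) as pool:
        low_f = pool.submit(karatsuba, x_lo, y_lo)
        high_f = pool.submit(karatsuba, x_hi, y_hi)
        cross_f = pool.submit(karatsuba, x_lo + x_hi, y_lo + y_hi)
        low, high, cross = low_f.result(), high_f.result(), cross_f.result()
    # Serial merge once every partial product is available.
    return (high << (2 * half)) + ((cross - low - high) << half) + low
```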

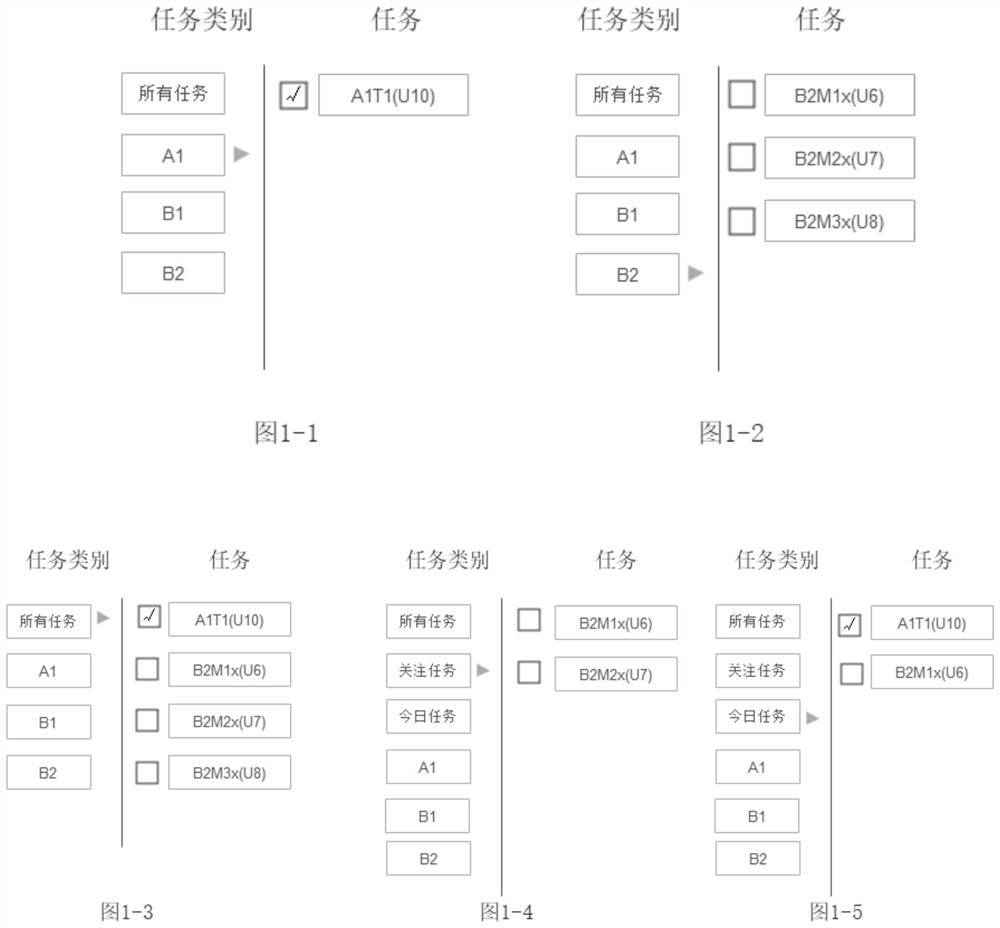

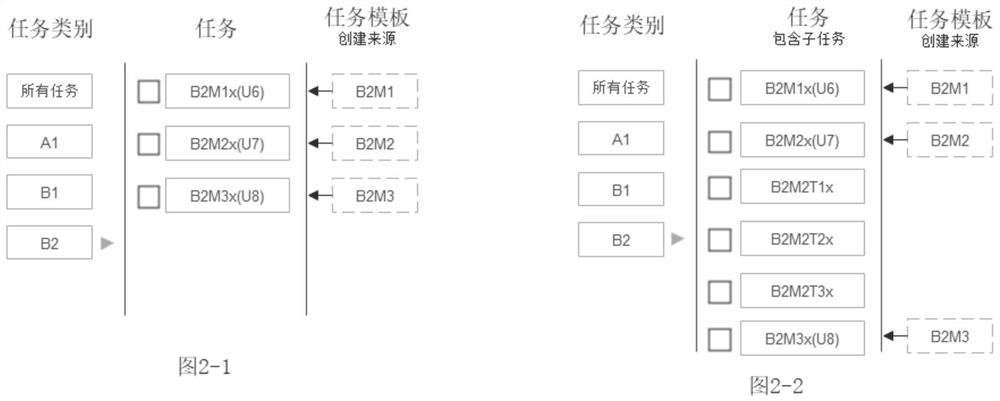

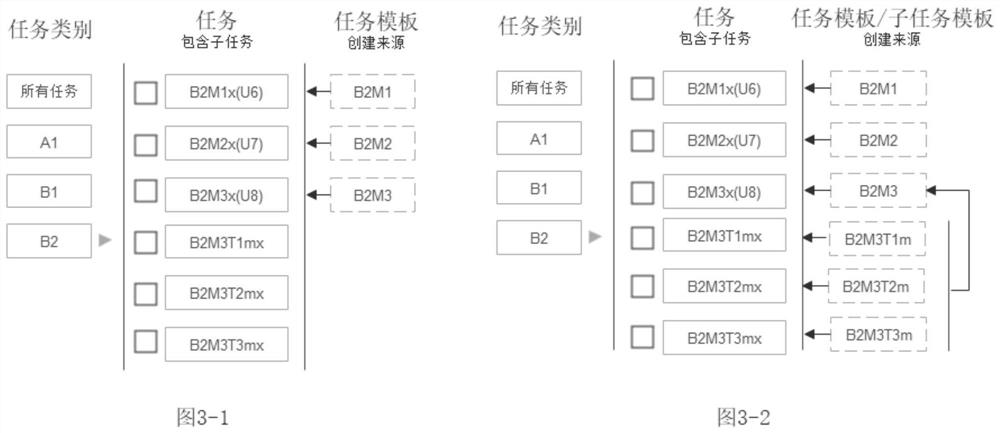

Enterprise-level task management method

Pending | CN111709698A | Know the scope of authority | Don't worry about misposting | Office automation | Resources | Knowledge management | Enterprise level

The invention relates to an enterprise-level task management method. The method comprises the following steps: (1) creating a task category; (2) creating a task. The created task categories are organized in levels, and permissions can be configured independently for each; a created task belongs to one or more task categories; tasks can be assigned to oneself or to other people, and after a task is issued, the task issuer can directly see the task status of each task executor. The method solves enterprise-level task sharing and cooperation problems, the permission structure is easy to understand, and tasks are well classified and organized.

Owner:CHANGZHOU YINGNENG ELECTRICAL

Method of implementing inter-task sharing data

Inactive | CN101163094B | Easy access | Safe handling | Error prevention/detection by using return channel | Digital computer details | Protection mechanism | Data sharing

The invention discloses a method for realizing data sharing among tasks. It solves the problems that multiple tasks accessing a shared data area easily deadlock and that the resulting faults are hard to localize. In the method, a task without permission to access the shared data area sends an ACTION message to the task that has access permission; after receiving the message, the task with access permission runs the corresponding program and operates on the shared data area according to the content of the message; when the run is over, the task with access permission sends a response message, which contains the result of the run, to the sender of the ACTION message. The shared data area does not need to be protected, and its data can be accessed safely among tasks. Locks and other protection mechanisms need not be considered, so the code is simplified. Because no locks are used, deadlock cannot occur, so the problem of deadlock is completely avoided.

Owner:杭州戈虎达科技有限公司

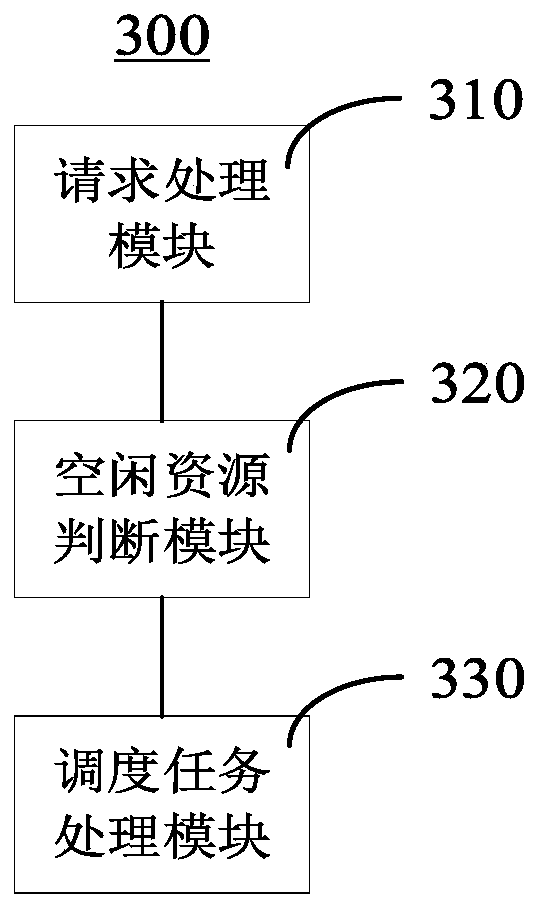

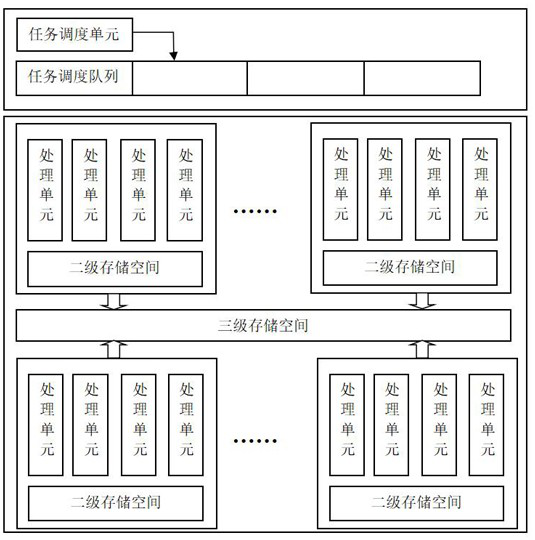

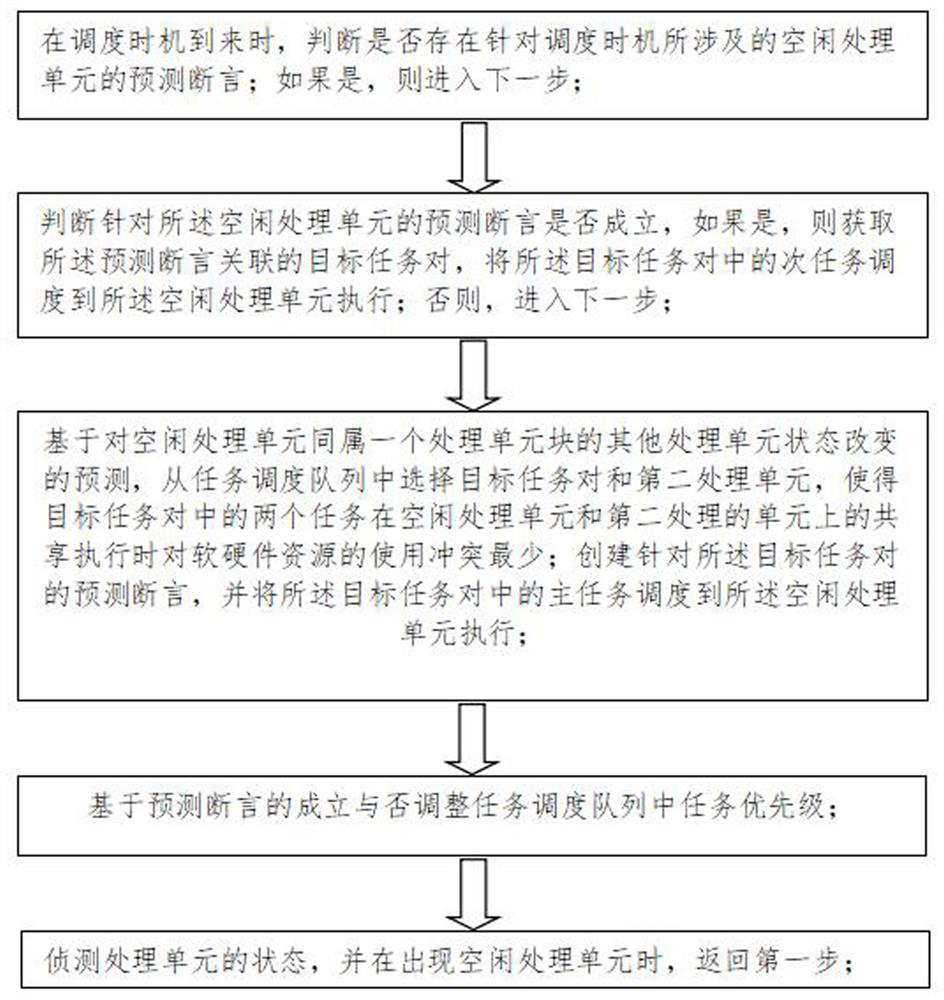

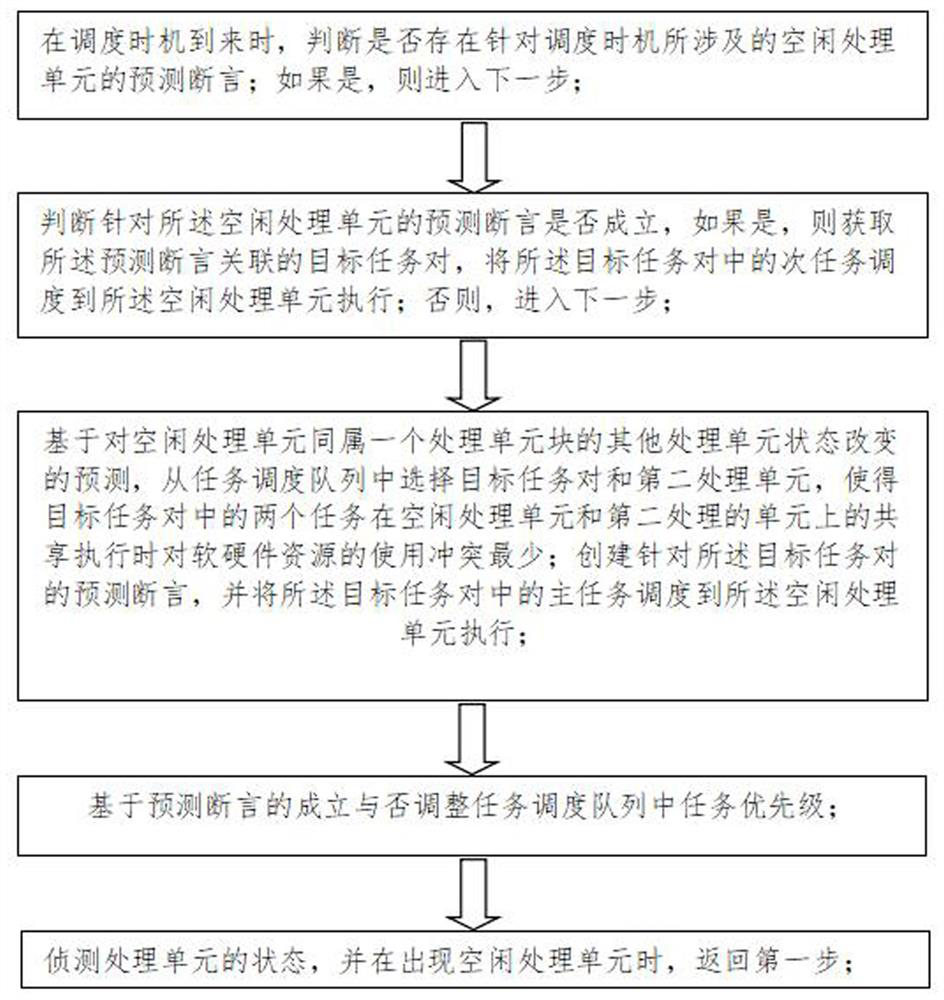

Processing unit task scheduling method and device

Active | CN114356534A | Increase profit | Improve execution efficiency | Program initiation/switching | Resource allocation | Processing element | Resource management

The invention relates to a processing unit task scheduling method and device. The method comprises: when a scheduling opportunity arrives, judging whether a prediction assertion exists for an idle processing unit related to the scheduling opportunity; when the prediction assertion exists, obtaining the target task pair associated with the prediction assertion and scheduling the secondary task in the target task pair to the idle processing unit for execution; the main task is scheduled to the first processing unit for execution after the prediction assertion is created. From the perspective of task demand estimation and hardware resource management, the fusion range of tasks and resources is expanded by constructing the target task pair, task demands are balanced against hardware resources while good shared execution of tasks is achieved, and the task scheduling efficiency of the processing unit is ultimately improved.

Owner:苏州云途半导体有限公司

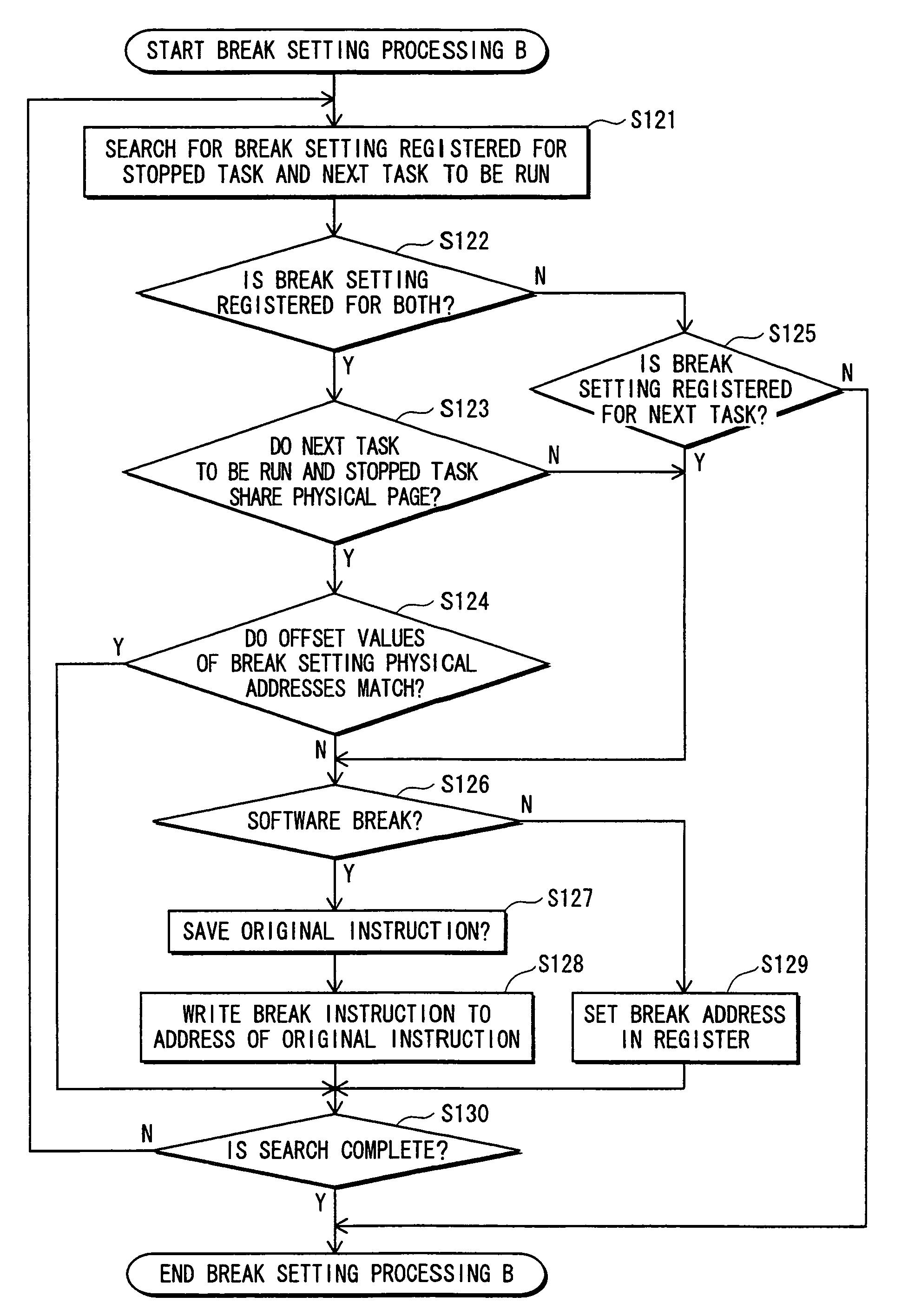

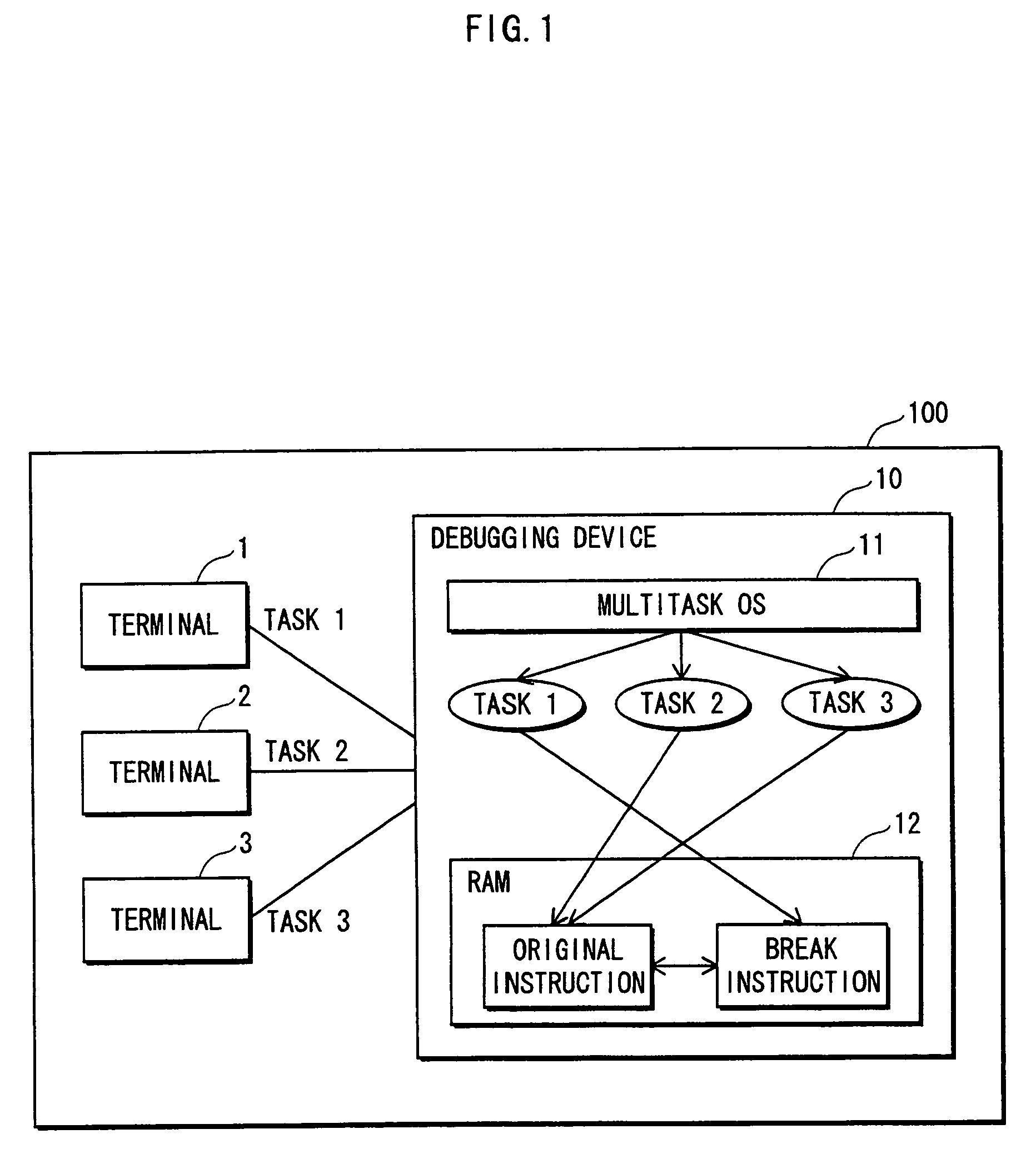

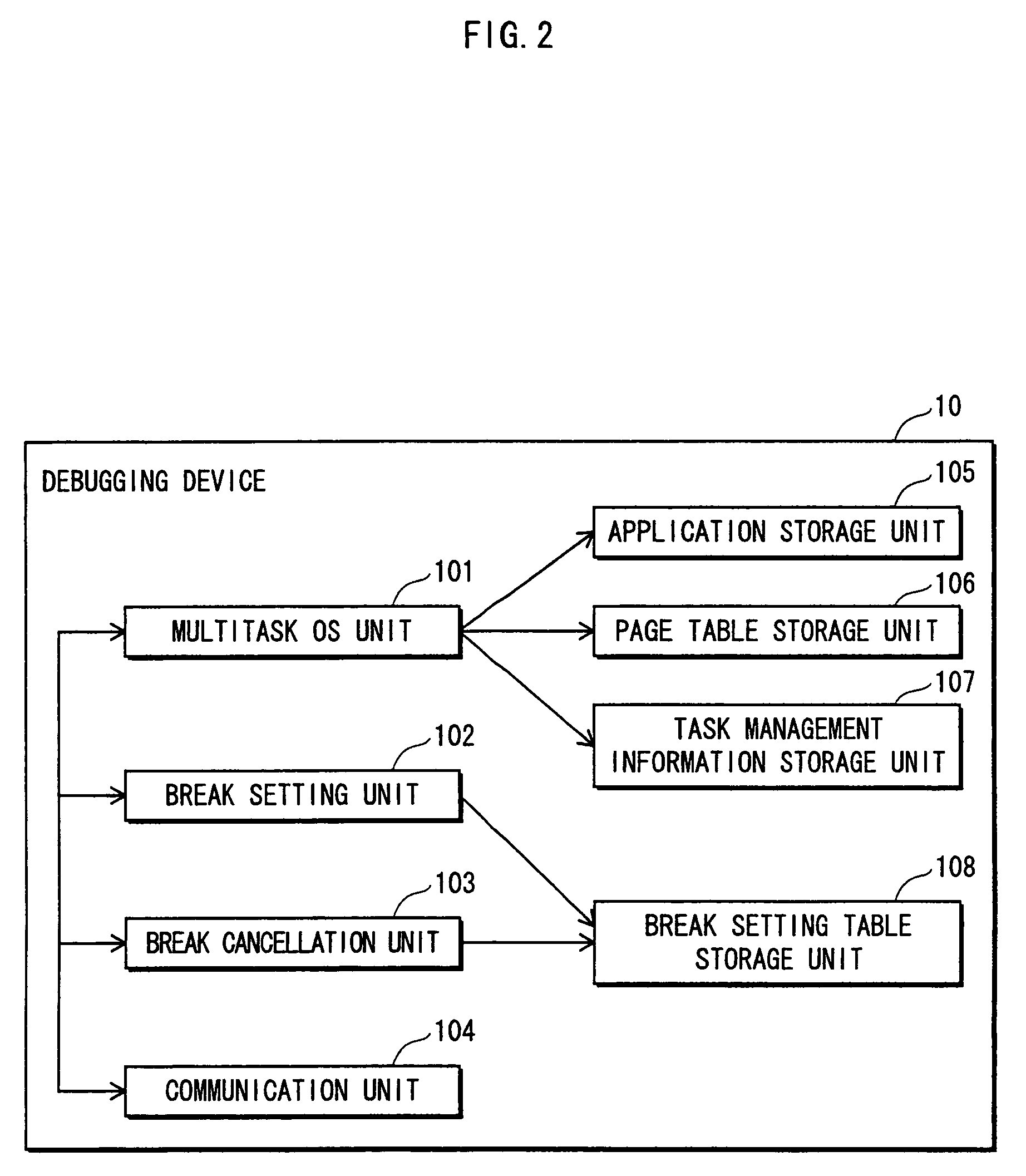

Debugging system and method

Inactive | US7694182B2 | Processing load | Error detection/correction | Specific program execution arrangements | Software engineering | Physical address

In a multitask execution environment, a debugging device performs debugging setting for rewriting part of original recording content in a memory area shared by at least two tasks, and debugging cancellation for restoring rewritten recording content back to original recording content. The debugging device stores a memory area used by each task, and address information specifying each debugging target task and a respective address. When task switching occurs, if a next task is not a debugging target, recording content at a physical address specified by address information other than that of the next task and within the physical address space range used by the next task is put into a post-debugging cancellation state. If the next task is a debugging target task, in addition to the above processing, recording content at the physical address specified by the address information of the next task is put into a post-debugging setting state.

Owner:SOCIONEXT INC

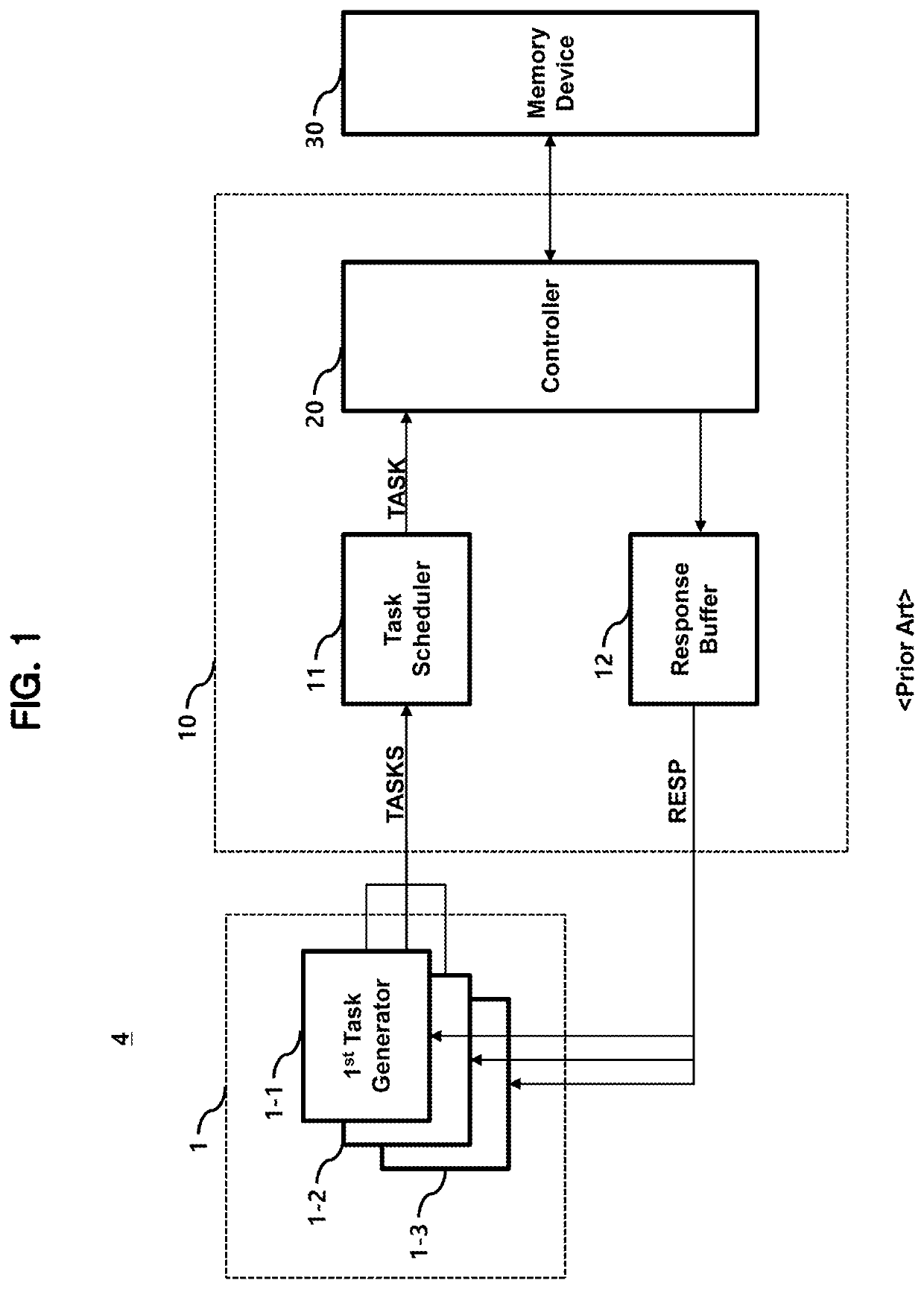

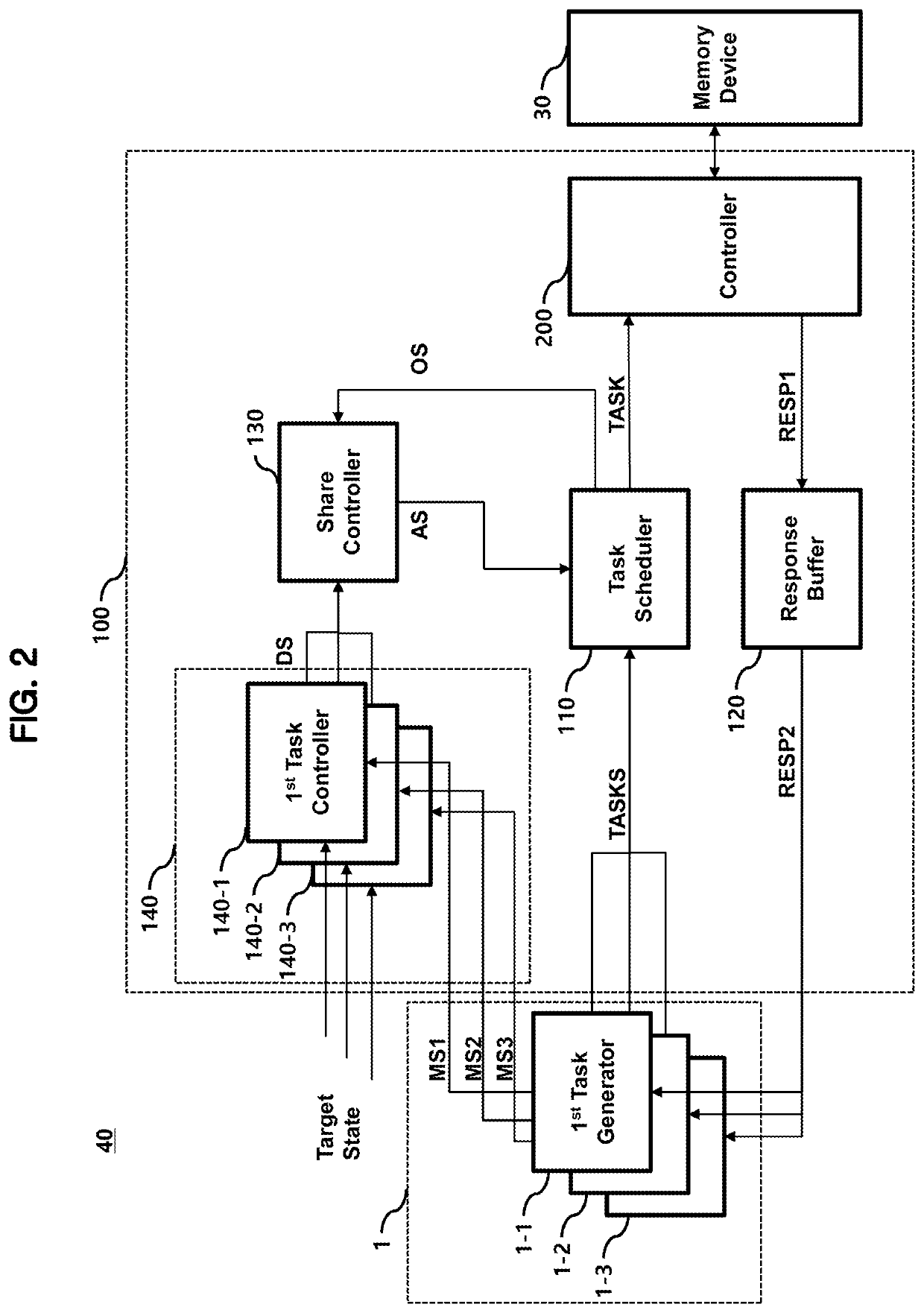

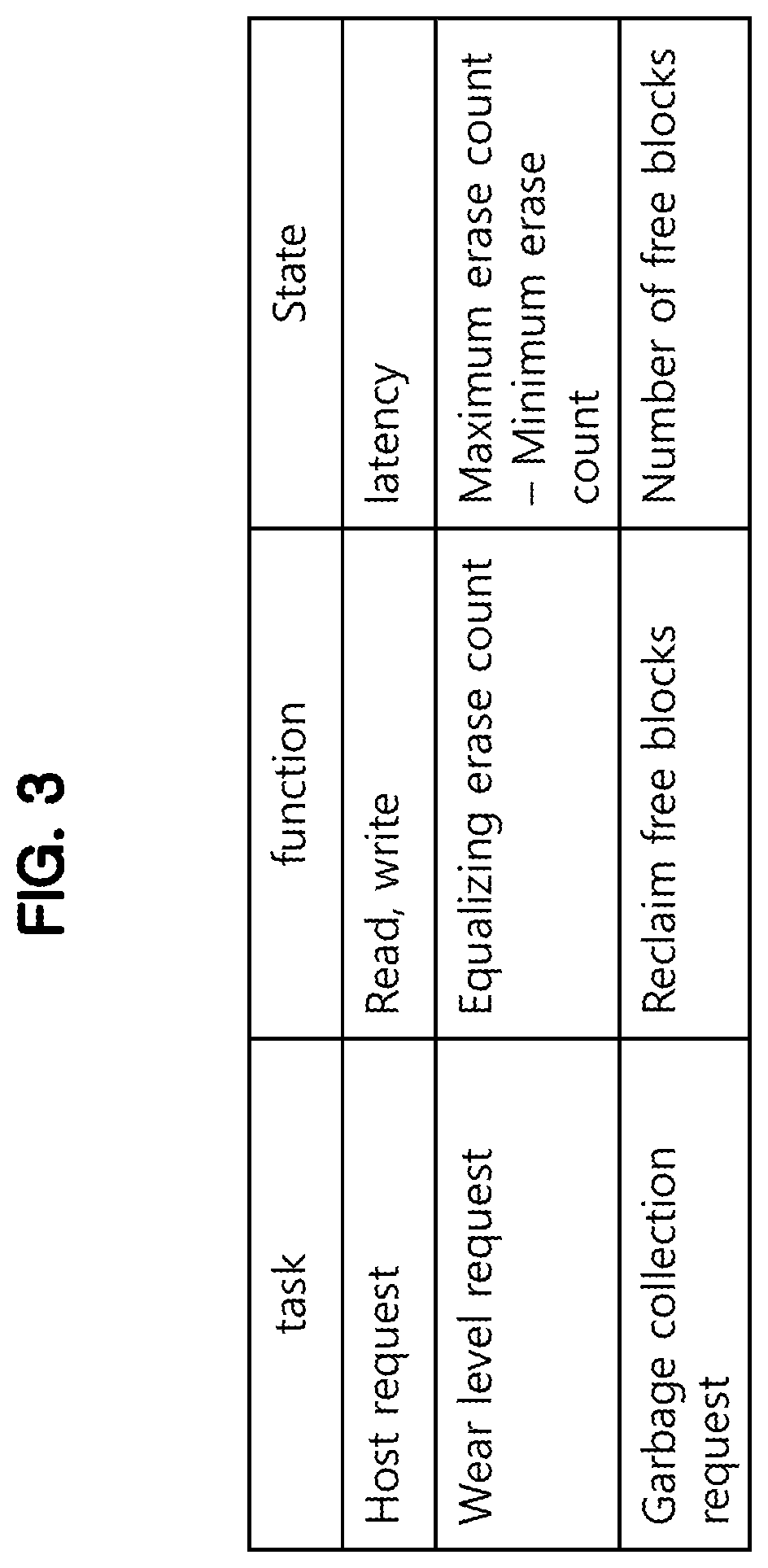

Semiconductor device for scheduling tasks for memory device and system including the same

Active | US10635351B2 | Memory architecture accessing/allocation | Input/output to record carriers | Computer hardware | Device material

A semiconductor device may include a task controller configured to generate a target share for a plurality of task generators according to respective target states and respective measured states of the plurality of task generators, a task scheduler configured to schedule the plurality of tasks according to an allocated share, the plurality of tasks being provided from the plurality of task generators, and a share controller configured to determine the allocated share according to the target share and a measured share of the plurality of task generators.

Owner:SK HYNIX INC +1

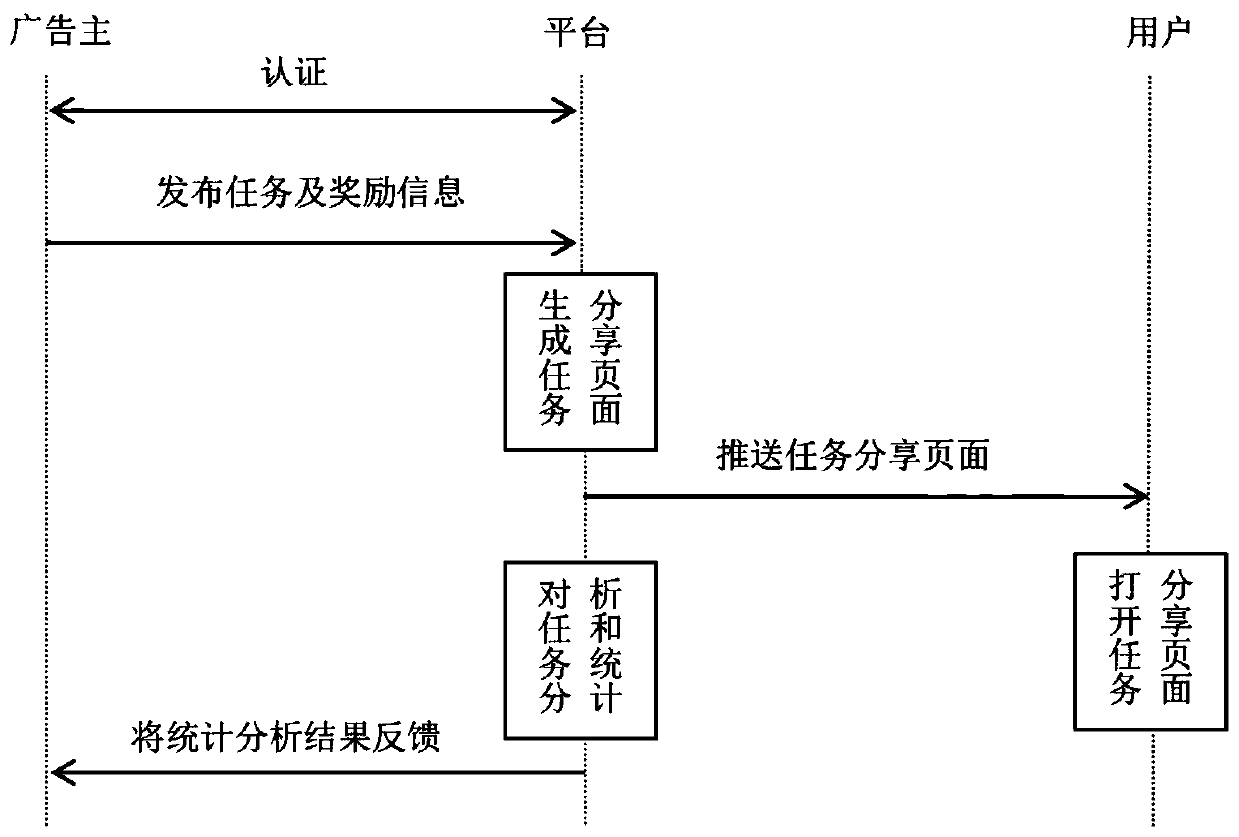

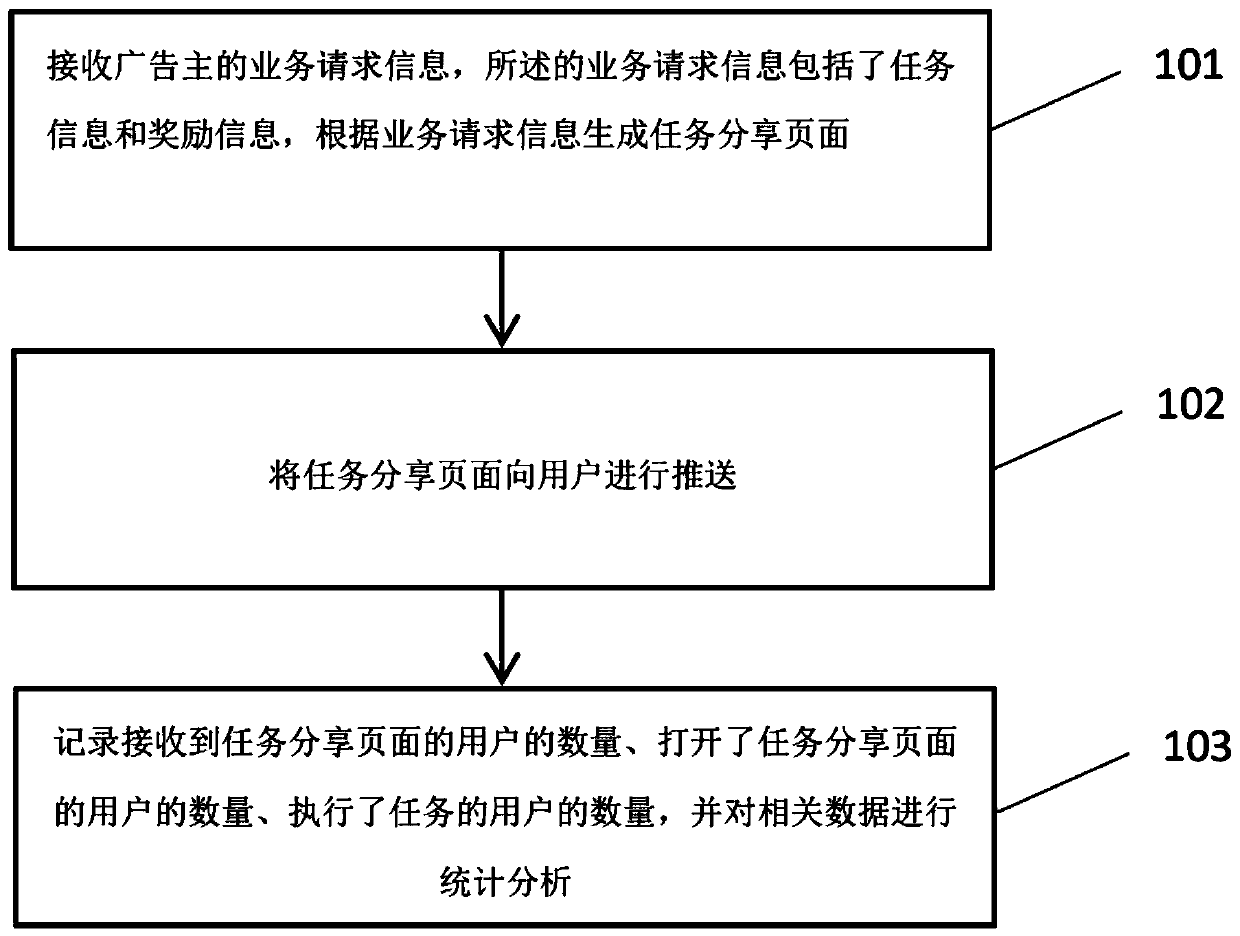

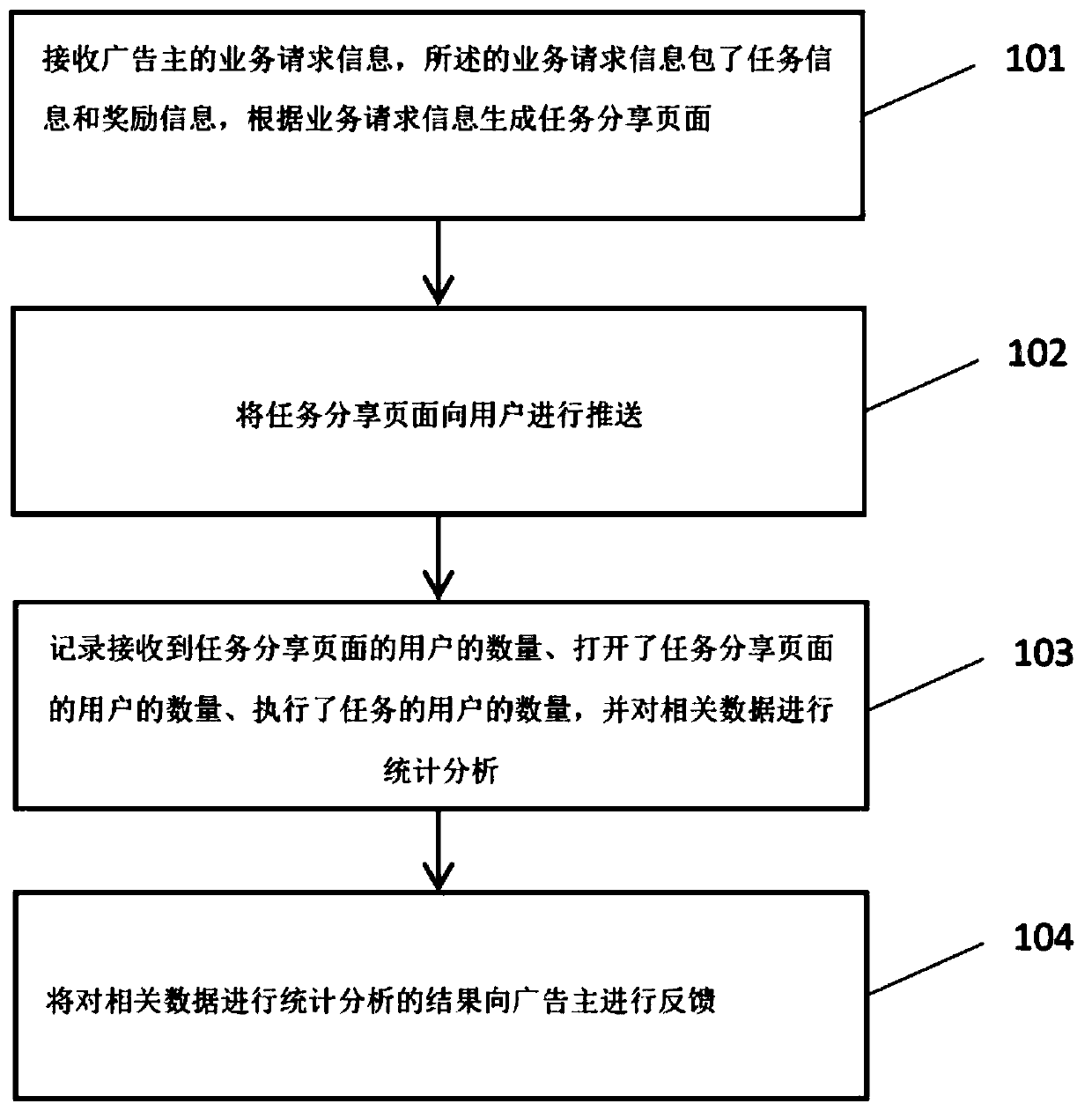

Service promotion method and device based on electronic lucky money, computer equipment and readable storage medium

Pending | CN111160960A | Improve push efficiency | No more repulsion | Advertisements | Payment circuits | Engineering | World Wide Web

The invention discloses a service promotion method and device based on electronic lucky money, computer equipment, and a readable storage medium. The method comprises the steps: receiving service request information of an advertiser and the service request information comprising task information and reward information; generating a task sharing page according to the service request information, and pushing the task sharing page to the user; and recording the number of the users receiving the task sharing page, the number of the users opening the task sharing page and the number of the users executing the task, and carrying out statistical analysis on related data.

Owner:CCB FINTECH CO LTD

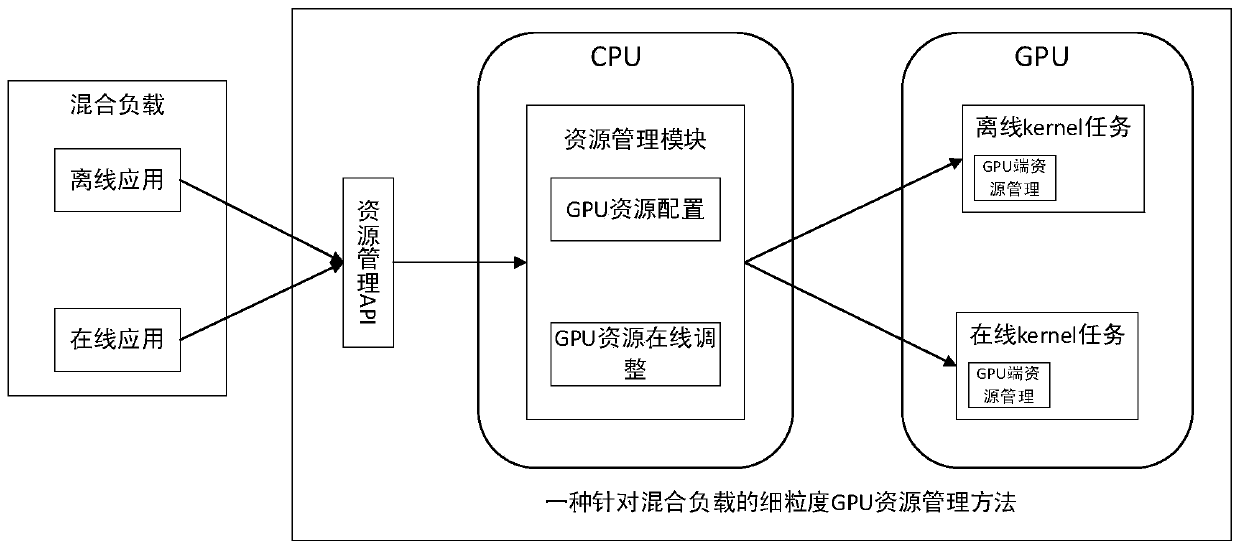

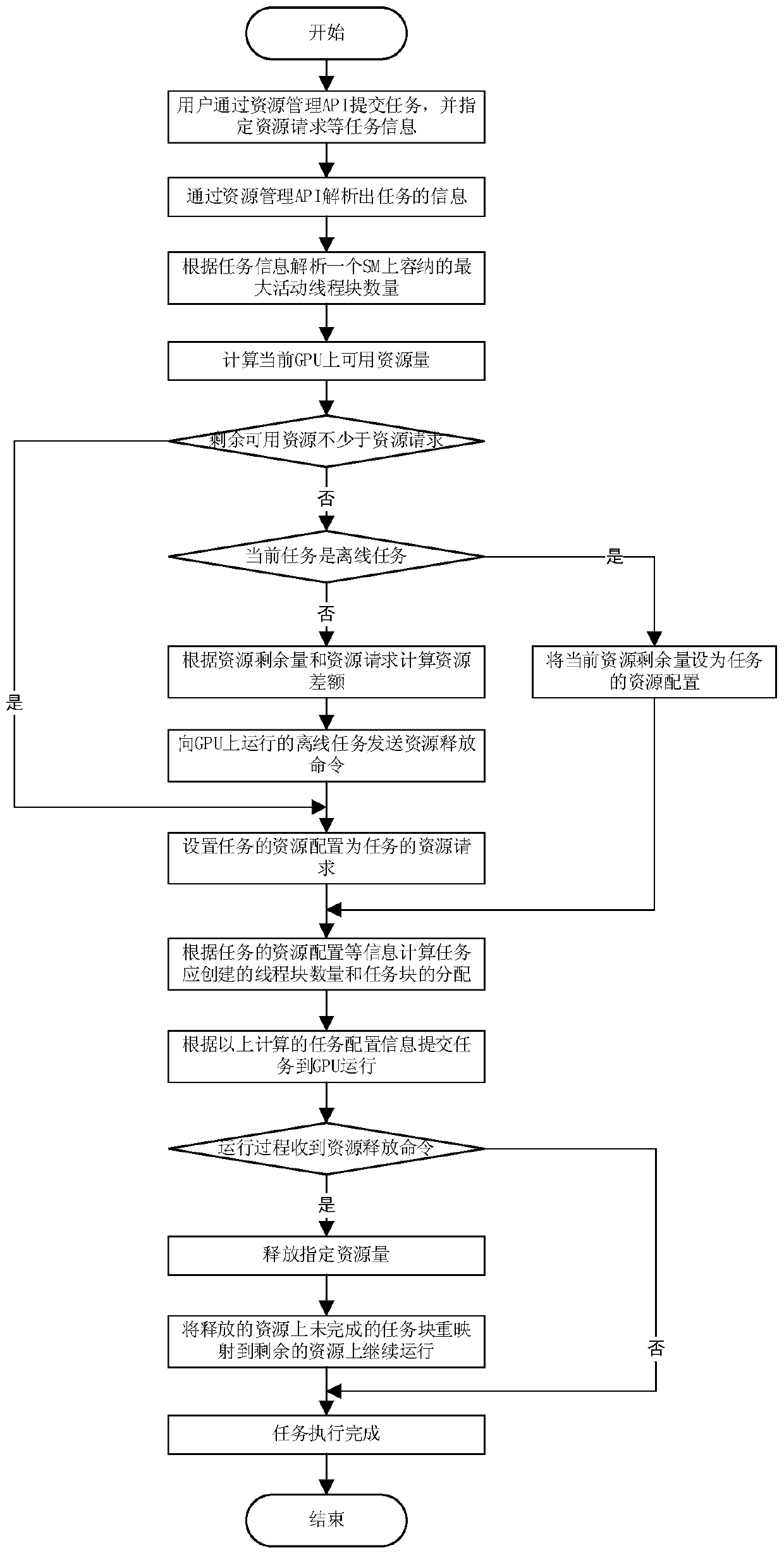

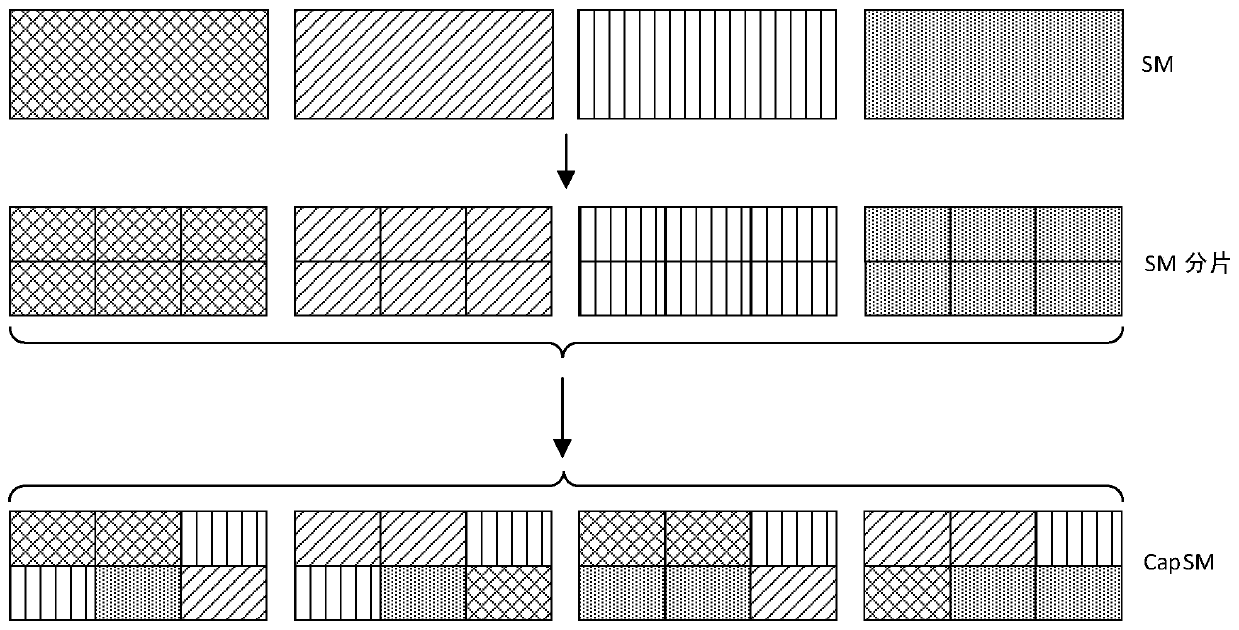

A fine-grained approach to GPU resource management for mixed workloads

Inactive | CN107357661B | Effective control of performance disturbance | Guaranteed service quality objectives | Resource allocation | Qos quality of service | Multi processor

The invention discloses a fine-grained GPU resource management method for mixed loads. A capacity-based streaming multiprocessor abstract model CapSM is proposed and serves as a basic unit of resource management; when the mixed loads (including an online task and an offline task) share GPU resources, the use of the GPU resources by different types of tasks is managed through fine granularity, and task resource allocation and resource online adjustment are supported; and while the GPU resources are shared, the service quality of the online task is ensured. The resources allocated to the tasks are determined according to the types of the tasks, a resource request and a current GPU resource state of a system; the use of the GPU resources by the offline task can be met under the condition of sufficient resources; and when the GPU resources are insufficient, the resource use of the offline task is dynamically adjusted to preferentially meet the resource demand of the online task, so that when the mixed loads run at the same time, the performance of the online task can be ensured and the GPU resources can be fully utilized.

Owner:凯习(北京)信息科技有限公司

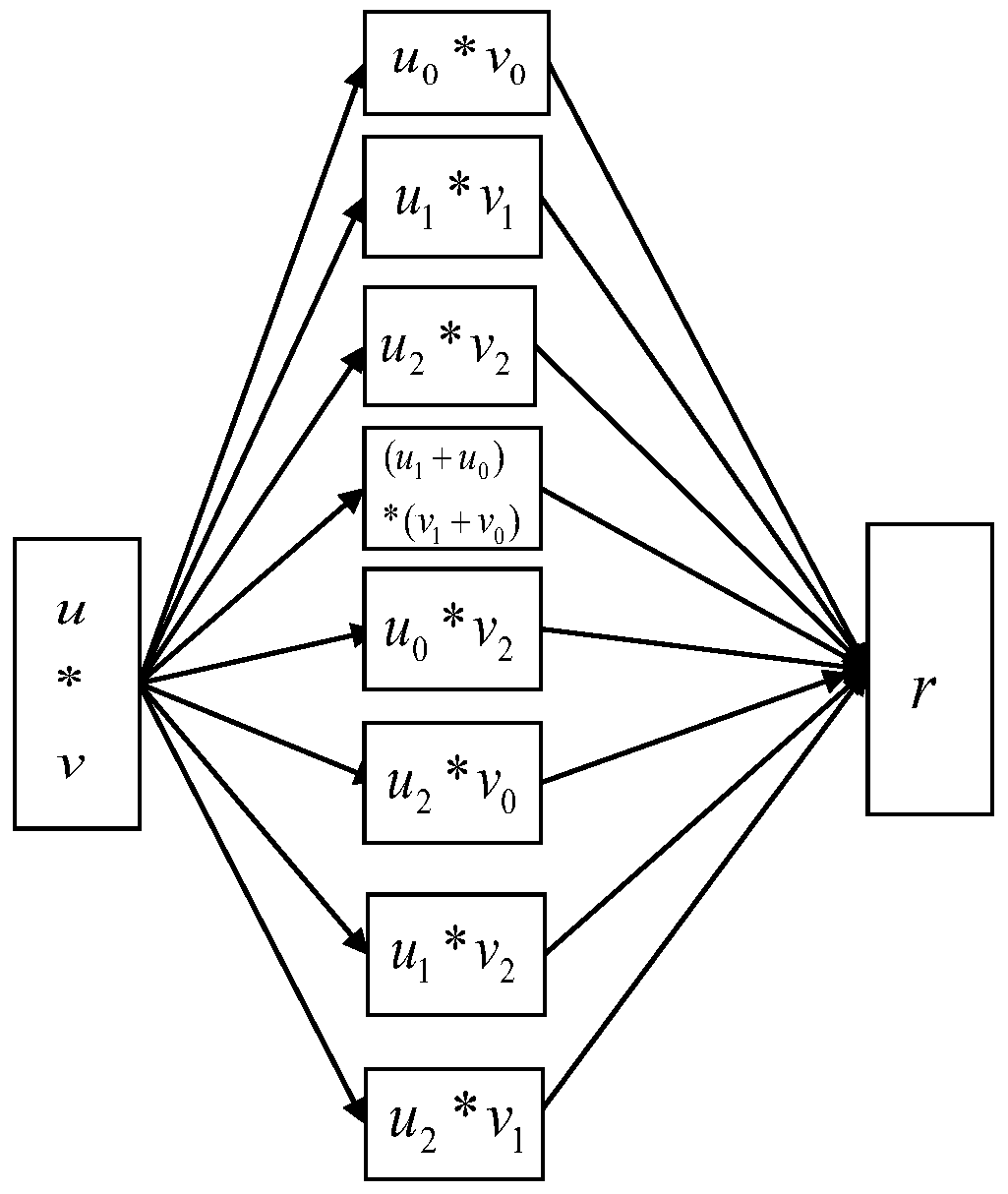

A Parallel Implementation Method of Large Integer Multiplication Karatsuba Algorithm

Active | CN105653239B | Improve efficiency | Solving Data Dependency Issues | Computation using non-contact making devices | Theoretical computer science | Karatsuba algorithm

The invention discloses a parallel implementation method of a big-integer Karatsuba algorithm. Based on 64-bit unsigned long integer operations, the method solves the dependency problems in storing and calculating partial products through formula transformation, pointer operations, and the storage layout. The algorithm is parallelized with OpenMP multi-thread programming using a sections-based task sharing policy: first-layer parallelization with 8 threads is started in the recursive program to solve 8 partial products, each section being responsible for the calculation of one partial product, and after all the partial products are solved they are merged serially. The Karatsuba algorithm is thereby parallelized and its efficiency improved.

Owner:INST OF SOFTWARE - CHINESE ACAD OF SCI

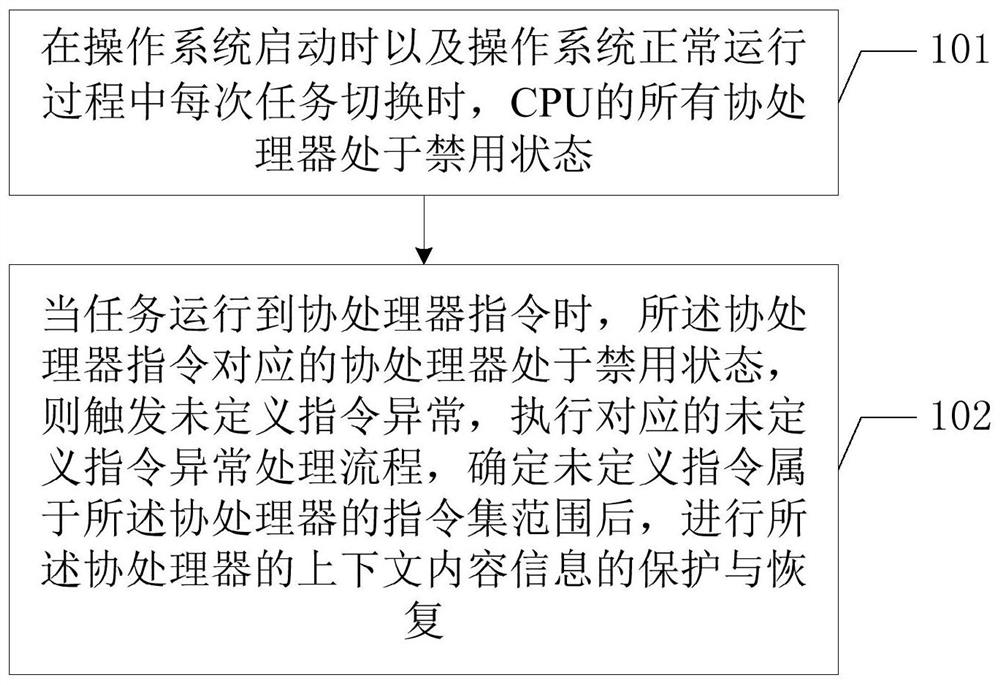

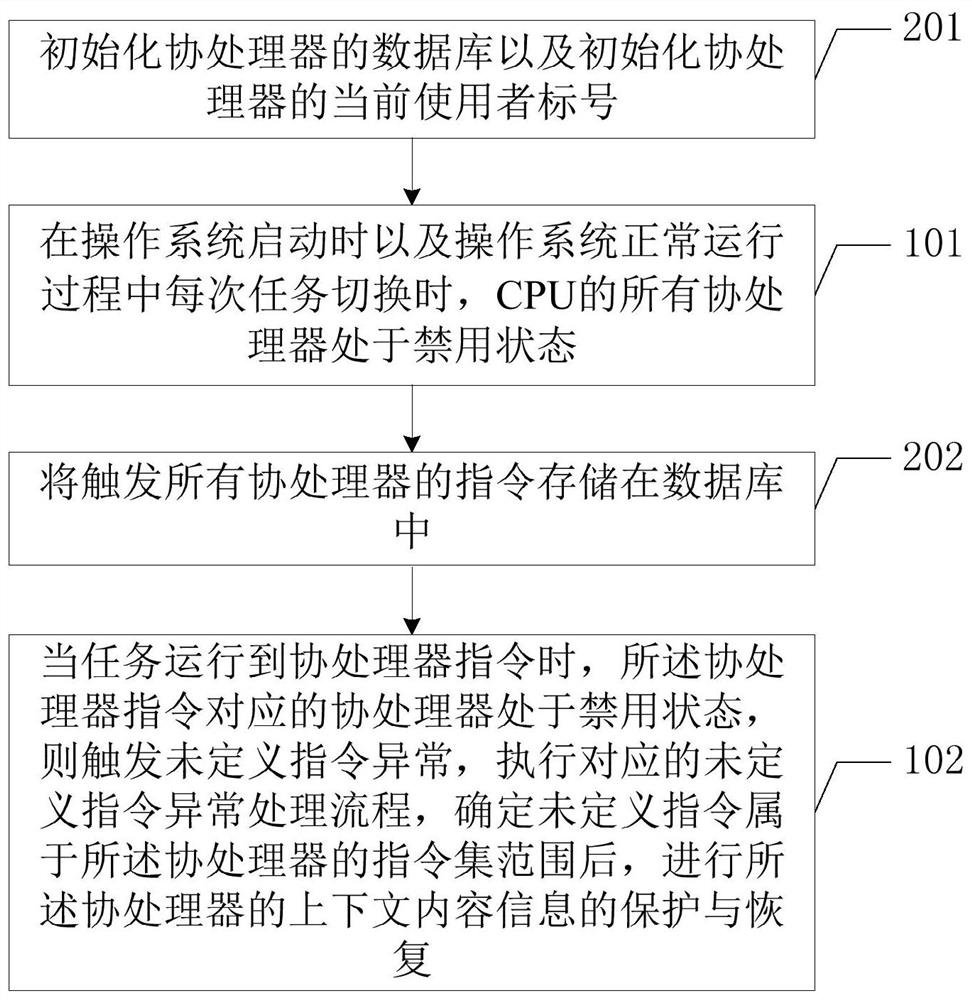

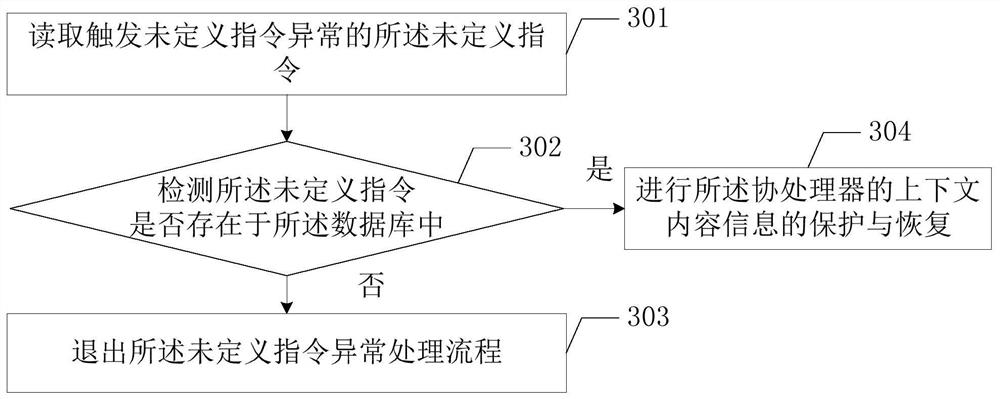

Method and terminal device for improving task switching efficiency

Active | CN110750304B | Improve the efficiency of task switching | Program initiation/switching | Concurrent instruction execution | Information processing | Computer architecture

The present invention is applicable to the technical field of computer information processing, and provides a method for improving task switching efficiency and a terminal device. The method includes: setting all coprocessors of the CPU to a disabled state; when a task runs to a coprocessor instruction while the corresponding coprocessor is disabled, an undefined instruction exception is triggered and the corresponding undefined instruction exception handling flow is executed; after determining that the undefined instruction belongs to the instruction set of the coprocessor, the context information of the coprocessor is saved and restored. The coprocessor context is thus saved and restored automatically and on demand according to task requirements, which not only improves the efficiency of task switching but also enables multiple tasks to share the coprocessors.

Owner:PAX COMP TECH SHENZHEN

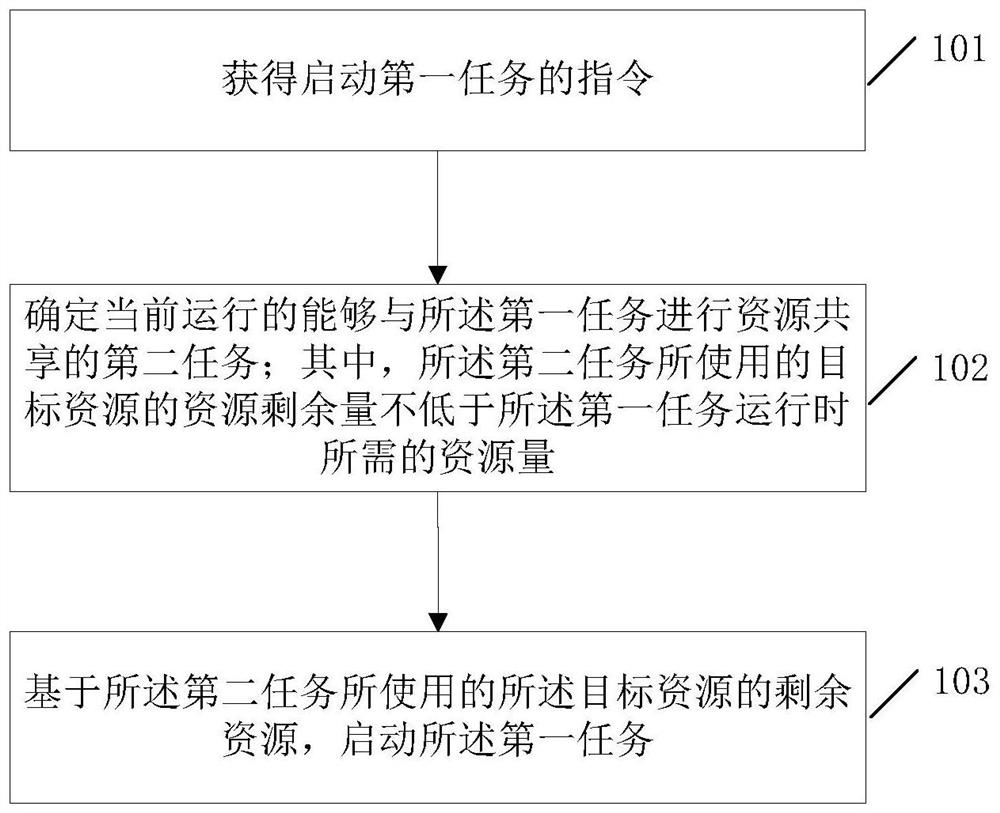

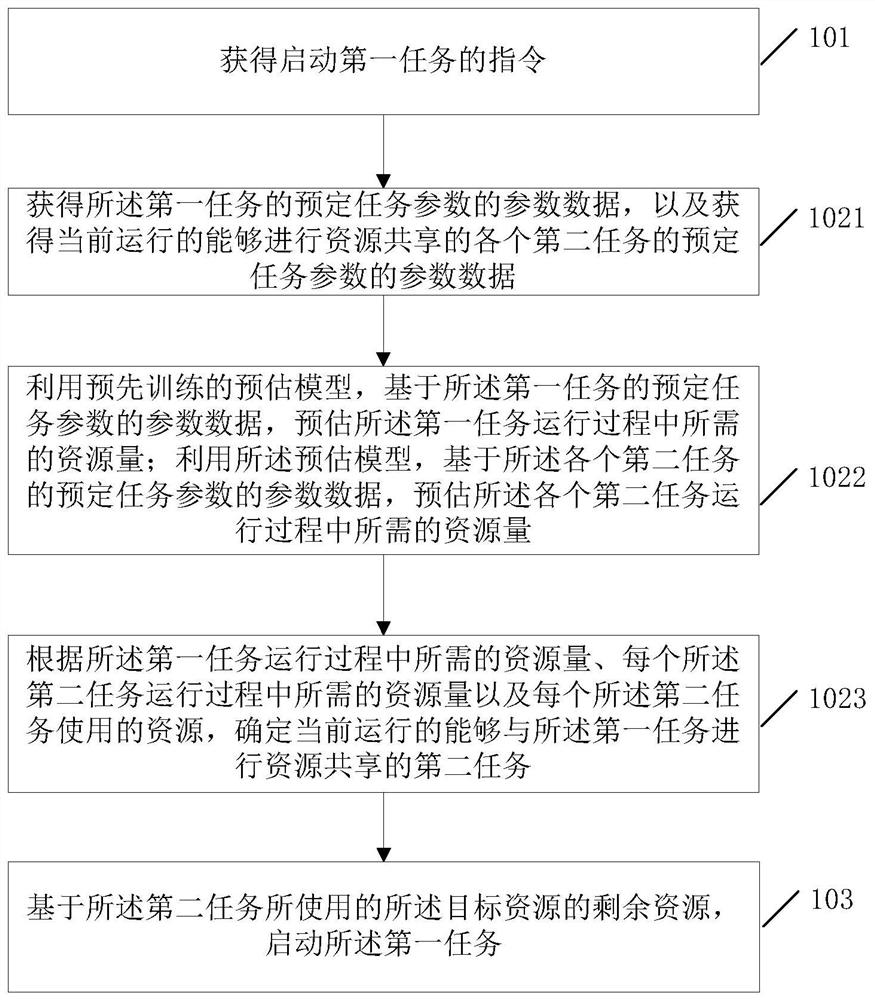

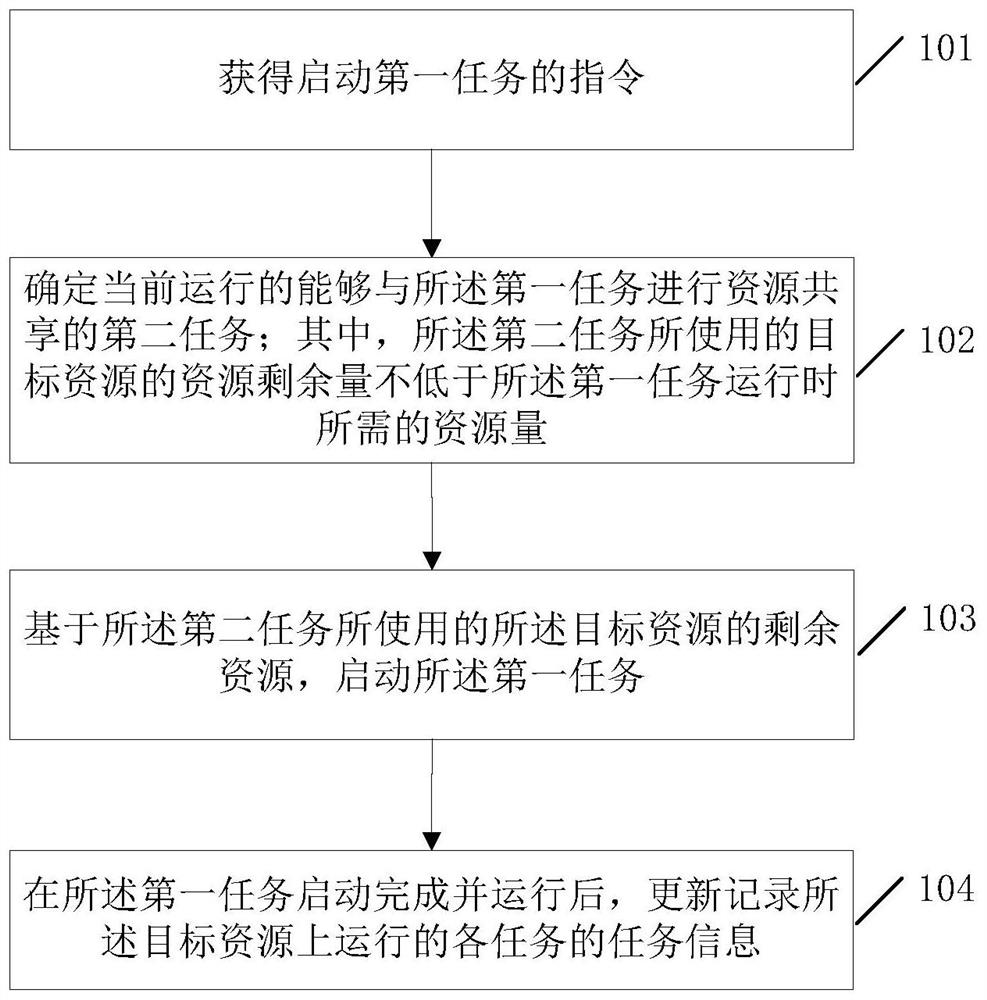

A task processing method, service platform and electronic equipment

Active | CN108052384B | Reduce waste | Overcome the problem of low resource utilization | Program initiation/switching | Resource allocation | Resource utilization | Processing

The present application provides a task processing method, a service platform, and an electronic device. On obtaining an instruction to start a first task, the method determines a currently running second task that can share resources with the first task, and starts the first task based on the remaining amount of the target resource used by the second task, where that remaining amount is not lower than the amount of resource the first task requires when running. The application thus provides a multi-task resource sharing solution. When applied to the resource scheduling of small tasks, more than one small task can be started and run on the same GPU, so that multiple small tasks share the same GPU resource. This effectively overcomes the low utilization of GPU resources in the prior art caused by small tasks monopolizing a GPU, and reduces waste of resources.

Owner:LENOVO (BEIJING) LTD
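
The admission rule in this abstract (start a new task on a GPU that is already in use, provided what remains is at least what the new task needs) can be sketched as below. This is a minimal Python illustration under assumptions: the function names, the dictionary of free capacity per GPU, and the first-fit choice among candidate GPUs are hypothetical and not taken from the patent.

```python
def find_shareable_gpu(free_by_gpu, required):
    """Return the id of a GPU whose remaining capacity covers `required`, else None."""
    for gpu_id, free in free_by_gpu.items():
        if free >= required:  # remaining amount must not be lower than the need
            return gpu_id
    return None


def start_task(free_by_gpu, required):
    """Place a task on a shareable GPU (first fit, an assumed policy) and reserve capacity."""
    gpu_id = find_shareable_gpu(free_by_gpu, required)
    if gpu_id is not None:
        free_by_gpu[gpu_id] -= required
    return gpu_id
```

Two small tasks that each need 4 units can both land on a GPU with 10 free units, while a third task needing 4 units is placed elsewhere or not started.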

A processing unit task scheduling method and device

Active | CN114356534B | Increase profit | Improve execution efficiency | Program initiation/switching | Resource allocation | Software engineering | Processing element

The present invention relates to a processing unit task scheduling method and device. The method comprises: when a scheduling opportunity arrives, judging whether a prediction assertion exists for the idle processing unit involved in the scheduling opportunity; when the prediction assertion exists, acquiring the target task pair associated with the prediction assertion and scheduling the secondary task in the target task pair to the idle processing unit for execution; the main task is scheduled to the first processing unit for execution after the prediction assertion is created. From the perspective of task demand estimation and hardware resource management, the invention expands the scope of task and resource fusion by constructing target task pairs, balances task demands against hardware resources while achieving good shared execution of tasks, and ultimately improves the efficiency of processing unit task scheduling.

Owner:江苏云途半导体有限公司

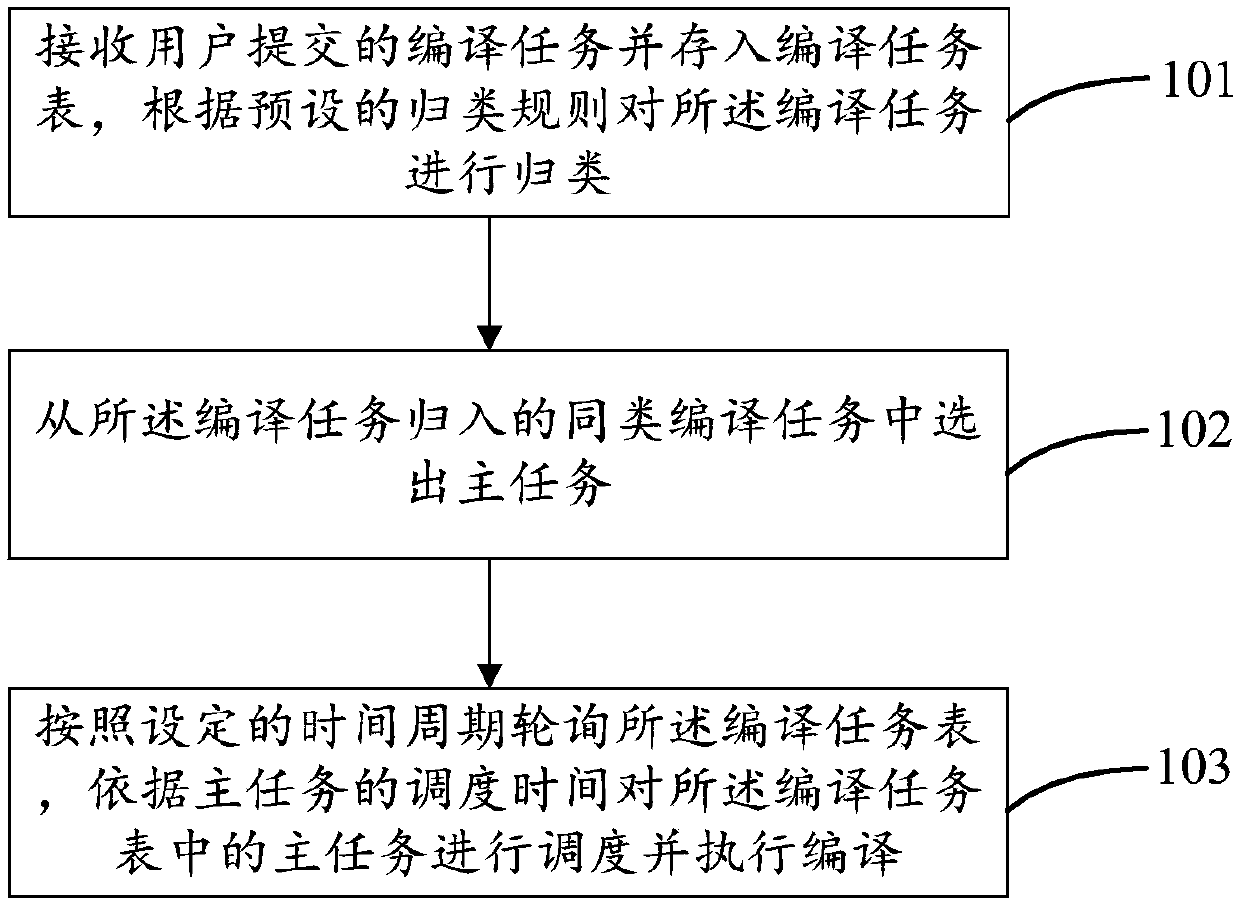

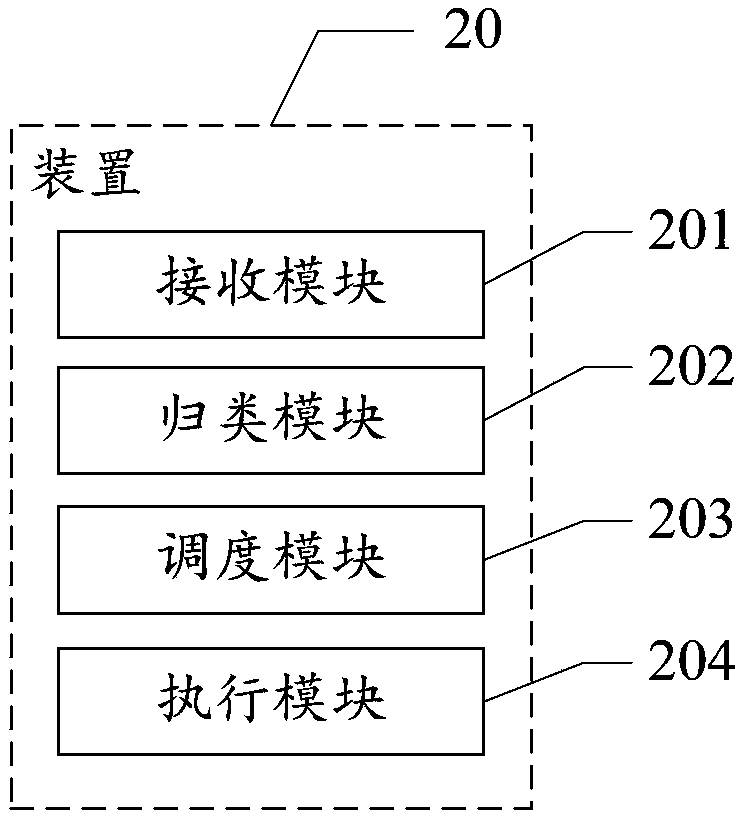

Compiling task execution method and device

Active | CN110879707A | Reduce waiting time | Improve compilation efficiency | Code compilation | Programming language | Software engineering

The embodiment of the invention discloses a compiling task execution method and device, and relates to the field of data communication. Compilation tasks are classified into groups of similar compilation tasks according to a preset classification rule; only the main task in each group of similar compilation tasks is scheduled and compiled, and the other compilation tasks in the group share the compilation result of the main task. This improves compiling efficiency and shortens the waiting time of compiling tasks. The method comprises the steps of receiving compiling tasks submitted by a user, storing the compiling tasks in a compiling task table, and classifying the compiling tasks according to a preset classification rule; selecting a main task from the similar compiling tasks to which the compiling tasks belong; and polling the compilation task table at a set time period, scheduling the main task in the compilation task table according to the scheduling time of the main task, and executing compilation.

Owner:MAIPU COMM TECH CO LTD
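
The core idea of this abstract, compiling one main task per group and letting similar tasks reuse its result, might be sketched in Python as follows. Everything named here is an assumption for illustration: `run_compile_tasks`, the `classify` and `compile_fn` callbacks, the `id` field, and the choice of the first submitted task as the main task. The periodic polling of the task table and the scheduling-time logic mentioned in the abstract are left out.

```python
from collections import defaultdict


def run_compile_tasks(tasks, classify, compile_fn):
    """Compile one main task per class and share its result with similar tasks.

    tasks      -- list of dicts, each with a unique "id" (hypothetical schema)
    classify   -- function mapping a task to a hashable class key (the preset rule)
    compile_fn -- function that performs the actual compilation of one task
    """
    groups = defaultdict(list)
    for task in tasks:
        groups[classify(task)].append(task)

    results = {}
    for members in groups.values():
        main_task = members[0]          # assumption: first submitted task is the main task
        output = compile_fn(main_task)  # only the main task is actually compiled
        for task in members:
            results[task["id"]] = output
    return results
```

If three tasks are submitted and two of them target the same branch and commit, `compile_fn` runs twice rather than three times.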

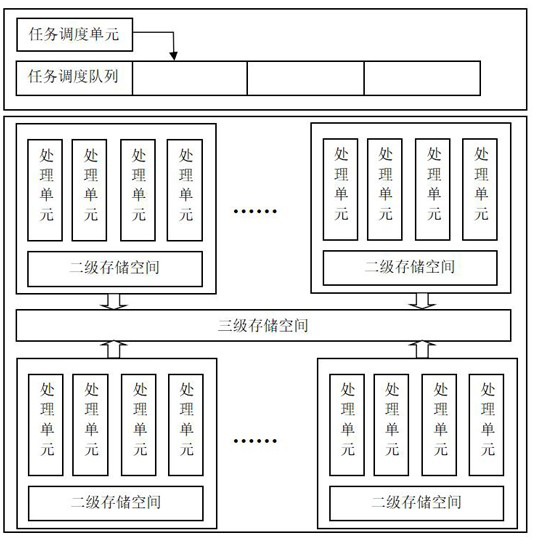

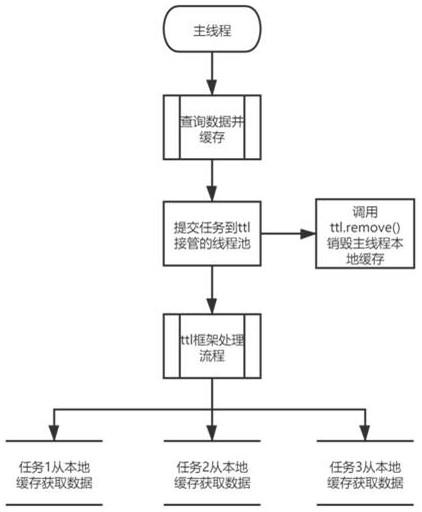

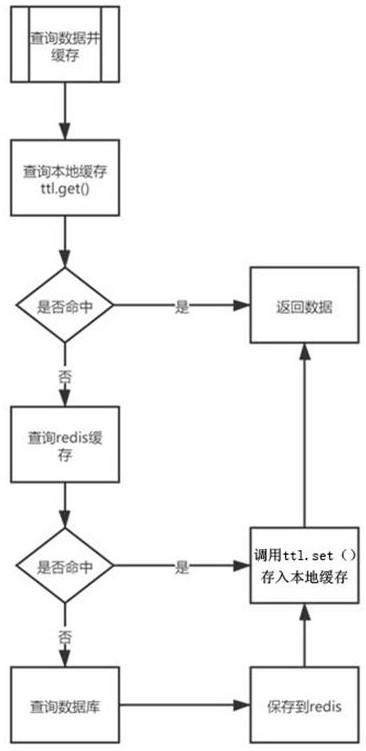

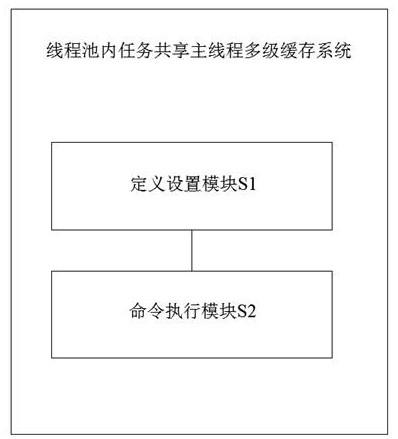

Method and system for multi-level caching of main thread shared by tasks in thread pool, and equipment

Inactive | CN113535786A | Processing speed | Relieve pressure | Digital data information retrieval | Special data processing applications | Computer architecture | Parallel computing

The invention discloses a method and system for multi-level caching of a main thread shared by tasks in a thread pool, and equipment. The method comprises the following steps: setting a multi-level cache query method; defining a thread pool, and setting a task execution method in the thread pool; starting a main thread, and caching the data to cache middleware and locally according to the multi-level cache query method; and creating a sub-task in the main thread, submitting the sub-task to the defined thread pool, executing the sub-task, and obtaining the data from the local cache. The system comprises: a definition setting module, which is used for setting the multi-level cache query method, defining the thread pool, and setting the task execution method in the thread pool; and a command execution module, which is used for starting the main thread and caching the data to the cache middleware and locally according to the multi-level cache query method, and for creating a sub-task in the main thread, submitting the sub-task to the defined thread pool, executing the sub-task, and acquiring the data from the local cache.

Owner:广州嘉为科技有限公司
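
The steps in the abstract above can be sketched in Python as follows. This is only an illustration under stated assumptions: two plain dictionaries stand in for the cache middleware and the local cache, and the names `multi_level_get`, `sub_task` and `run` are hypothetical, not taken from the patent.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List

middleware_cache: Dict[str, str] = {}  # stand-in for the cache middleware
local_cache: Dict[str, str] = {}       # in-process cache visible to pool workers


def multi_level_get(key: str, load_from_source: Callable[[str], str]) -> str:
    """Query the local cache, then the middleware, then the data source.

    On a miss, the value is written back to the levels that lacked it.
    """
    if key in local_cache:
        return local_cache[key]
    if key in middleware_cache:
        value = middleware_cache[key]
    else:
        value = load_from_source(key)
        middleware_cache[key] = value
    local_cache[key] = value
    return value


def sub_task(key: str) -> str:
    # Sub-tasks only read the local cache that the main thread filled.
    return local_cache[key].upper()


def run(keys: List[str], load_from_source: Callable[[str], str]) -> List[str]:
    # Main thread: warm both cache levels once before fanning out.
    for key in keys:
        multi_level_get(key, load_from_source)
    # Sub-tasks are created in the main thread and submitted to the pool.
    with ThreadPoolExecutor(max_workers=4) as pool:
        return list(pool.map(sub_task, keys))
```

Because the main thread fills the caches before any sub-task starts, the workers never touch the data source or the middleware, which is the load reduction the abstract appears to aim at.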

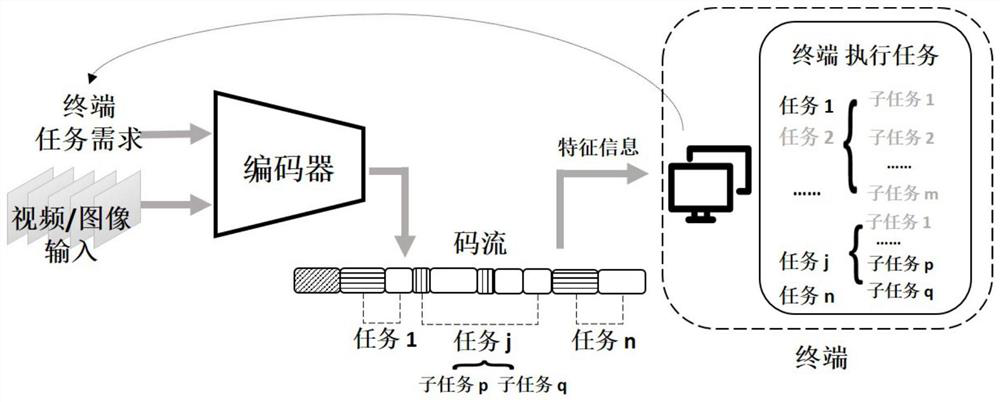

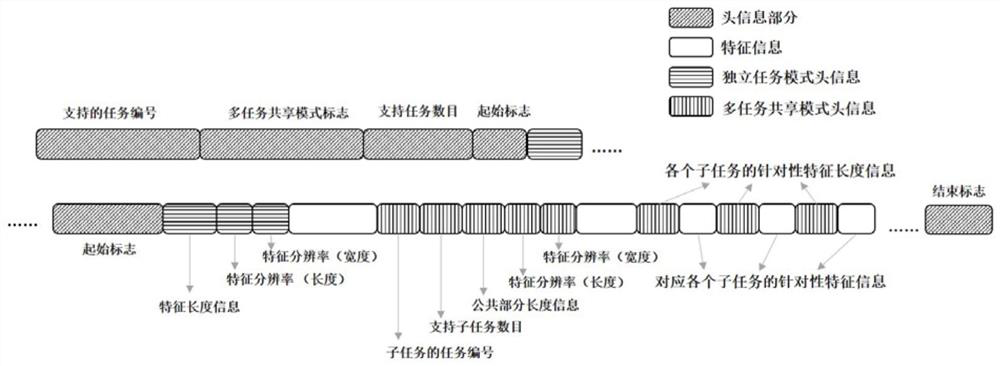

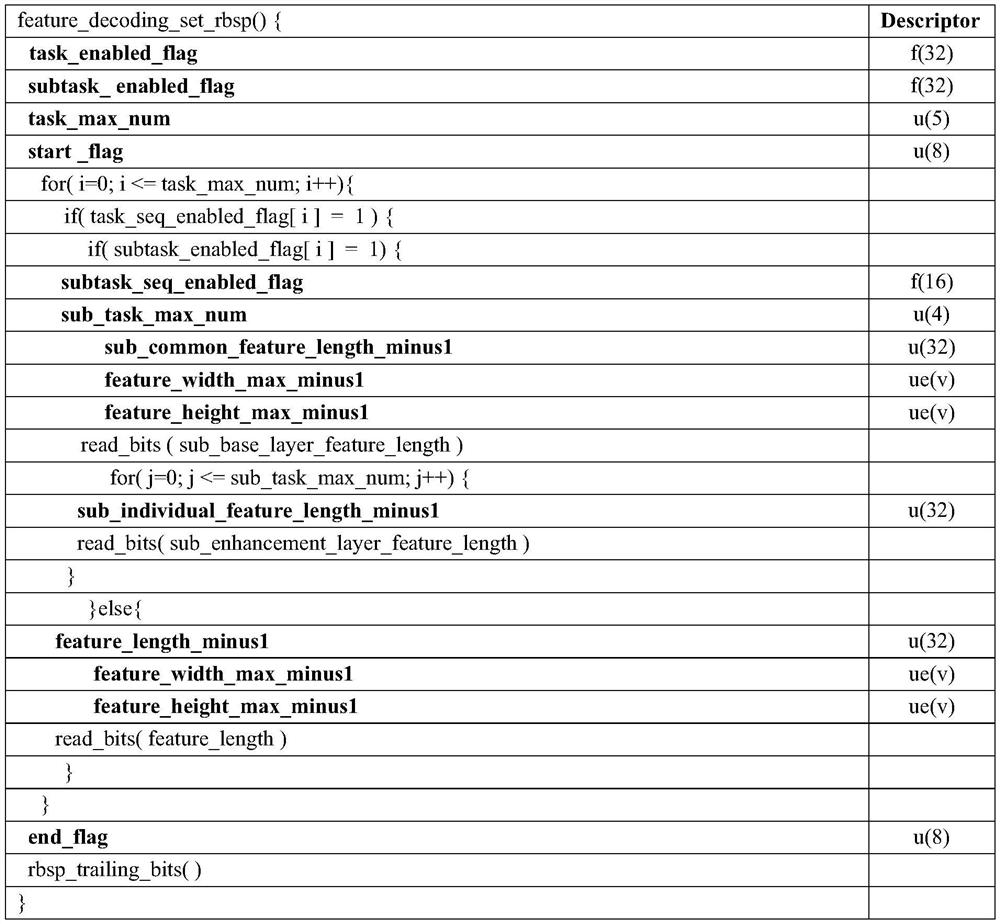

Machine-Oriented Universal Coding Method

Active | CN110662080B | Reduce transmission | Small amount of calculation | Digital video signal modification | Task analysis | Algorithm

The invention discloses a machine-oriented universal coding method comprising two stages. Compression coding stage: for different machine intelligence analysis tasks, an independent task mode and/or a multi-task sharing mode is used to compress the corresponding video or image data into feature information; the feature information is placed in the code stream corresponding to the video or image data, together with annotation information, derived from the compression mode, that is required for decoding, so as to obtain the feature code stream. Decoding stage: the header information of the feature code stream is read, and the annotation information determines the compression mode used in the compression stage, so that the feature information is read in the corresponding way and then used as the input of the machine intelligence analysis task to obtain the analysis result. The method can encode the video or image feature information required for each task, thereby improving the efficiency of intelligent task analysis, reducing transmission pressure, and supporting edge analysis and computing as well as other possible future needs.

Owner:UNIV OF SCI & TECH OF CHINA
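
To make the role of the annotation information concrete, here is a small Python sketch of a feature stream whose header records the compression mode, so the decoder knows how to read the payload. The byte layout, the mode constants and the function names are all hypothetical; the abstract does not define a stream syntax.

```python
import struct
from typing import Dict, Tuple

MODE_INDEPENDENT = 0  # one feature payload per analysis task
MODE_SHARED = 1       # one payload shared by several tasks

# Hypothetical header: mode (1 byte), task count (1 byte), body length (4 bytes).
HEADER = struct.Struct(">BBI")
ENTRY = struct.Struct(">BI")  # per-task entry: task id, payload length


def encode(mode: int, features: Dict[int, bytes]) -> bytes:
    """Pack feature payloads behind a header that records the mode."""
    task_ids = sorted(features)
    if mode == MODE_SHARED:
        if len(set(features.values())) != 1:
            raise ValueError("shared mode expects one payload for all tasks")
        body = bytes(task_ids) + features[task_ids[0]]
    else:
        body = b"".join(
            ENTRY.pack(t, len(features[t])) + features[t] for t in task_ids
        )
    return HEADER.pack(mode, len(task_ids), len(body)) + body


def decode(stream: bytes) -> Tuple[int, Dict[int, bytes]]:
    """Read the header first, then parse the body according to the mode."""
    mode, count, length = HEADER.unpack_from(stream, 0)
    body = stream[HEADER.size:HEADER.size + length]
    features: Dict[int, bytes] = {}
    if mode == MODE_SHARED:
        shared = body[count:]
        for task_id in body[:count]:
            features[task_id] = shared
    else:
        pos = 0
        for _ in range(count):
            task_id, size = ENTRY.unpack_from(body, pos)
            pos += ENTRY.size
            features[task_id] = body[pos:pos + size]
            pos += size
    return mode, features
```

In this toy layout the shared mode stores the payload once, so two tasks that use the same features produce a shorter stream than the independent mode would.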

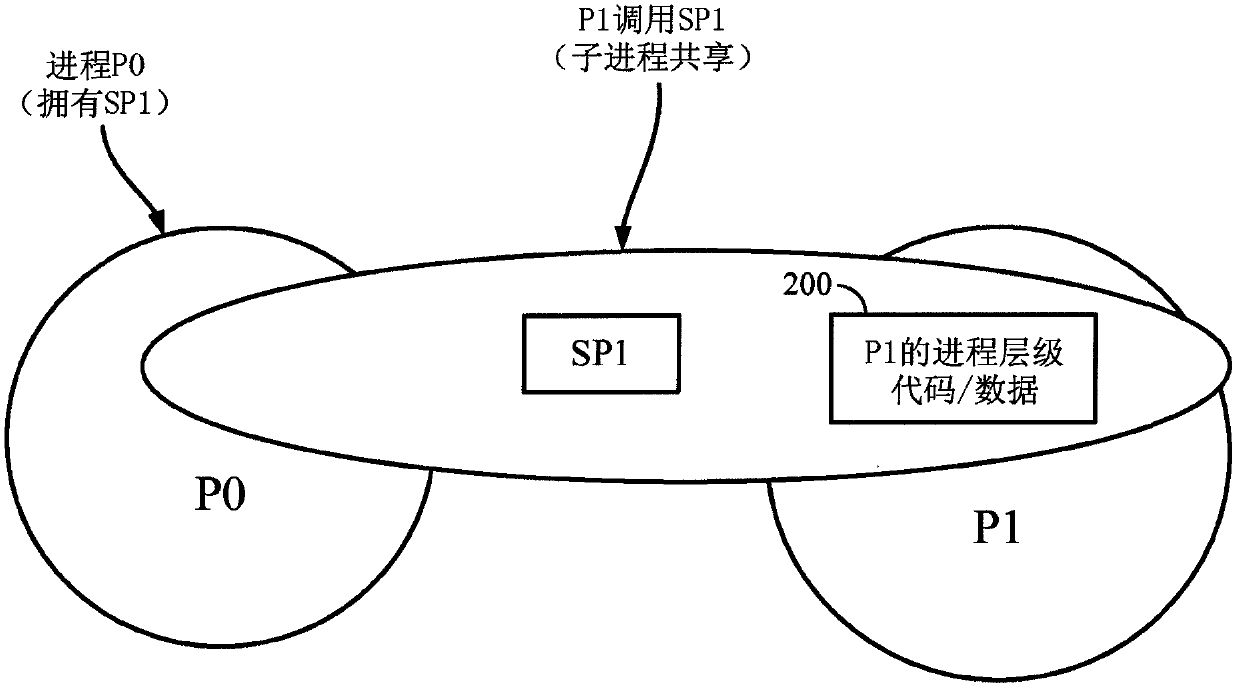

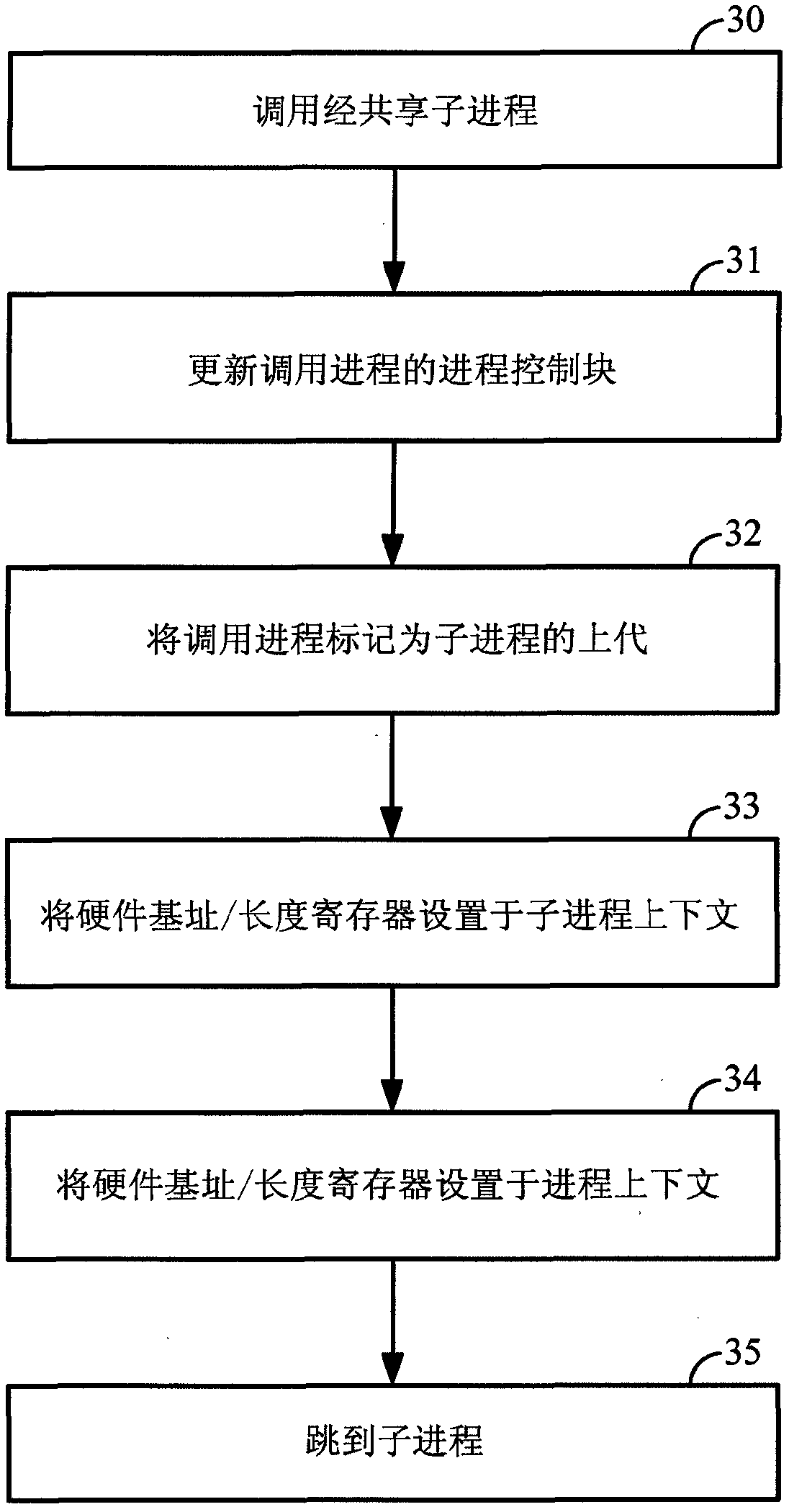

Sharing operating system sub-processes across tasks

Inactive | CN102016802A | Program initiation/switching | Program control using stored programs | Operational system | Industrial engineering

An operating system permits sharing of a sub-process (or process unit) across multiple processes (or tasks). Each shared sub-process has its own context. The sharing is enabled by tracking when a process invokes a sub-process. When a process invokes a sub-process, the process is designated as a parent process of the child sub-process. The invoked sub-process may require use of process-level variable data. To enable storage of the process-level variable data for each calling process, the variable data is stored in memory using a base address and a fixed offset. Although the base address may vary from process to process, the fixed offset remains the same across processes.

Owner:QUALCOMM INC
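
The base-address-plus-fixed-offset scheme can be illustrated with a toy memory model in Python. The memory size, the process names and the offset value are assumptions chosen for the example, not details from the patent.

```python
from typing import Dict

memory = bytearray(64)  # toy flat memory

# Each calling (parent) process has its own base address for its
# process-level variable data. Values are illustrative.
BASE_ADDRESS: Dict[str, int] = {"proc_a": 0, "proc_b": 32}

# The offset of a given variable is fixed and identical for every process.
COUNTER_OFFSET = 4


def shared_increment(caller: str) -> int:
    """Shared sub-process body.

    The code is the same for every caller; only the base address differs,
    so each parent process sees its own copy of the counter.
    """
    address = BASE_ADDRESS[caller] + COUNTER_OFFSET
    memory[address] += 1
    return memory[address]
```

Calling `shared_increment("proc_a")` twice and `shared_increment("proc_b")` once leaves two independent counters, at addresses 4 and 36, even though both calls run the same sub-process code.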

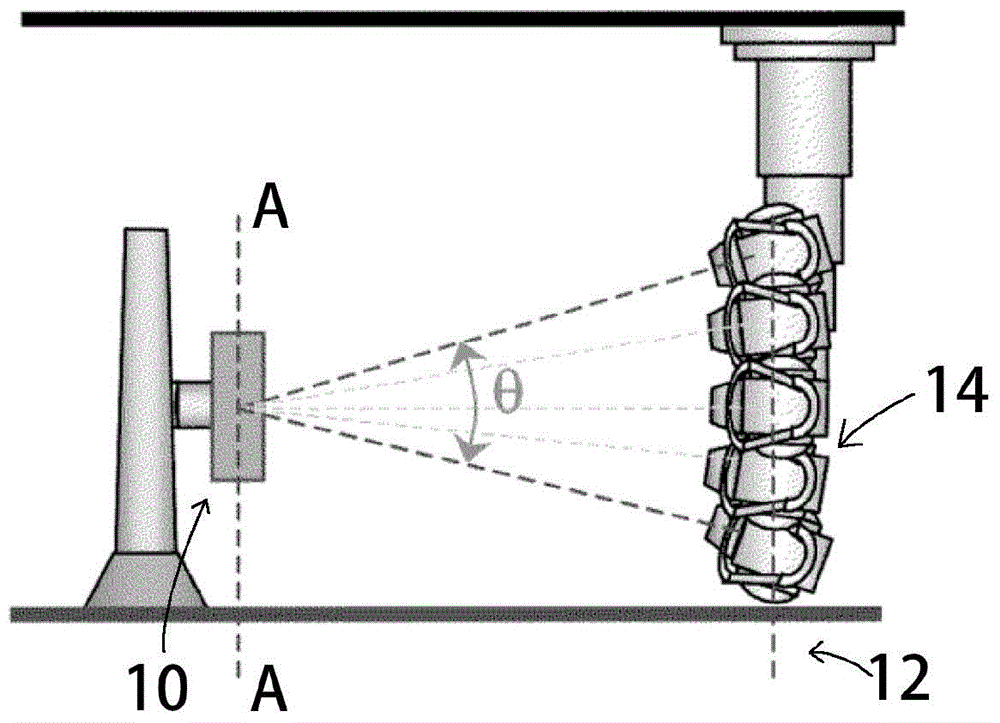

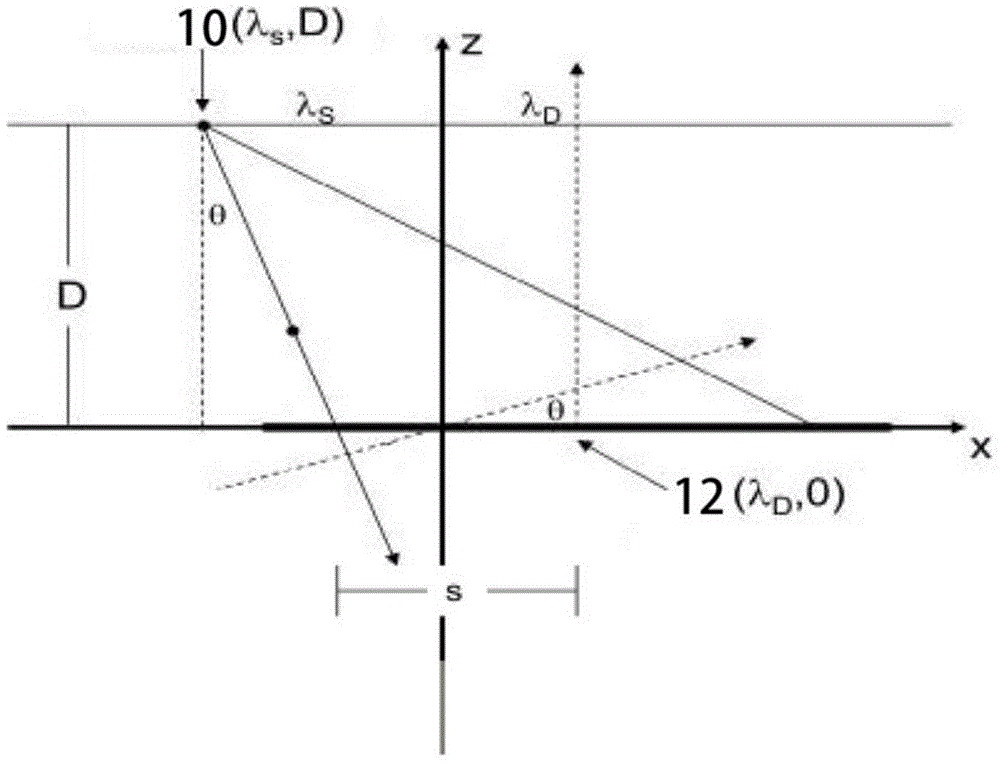

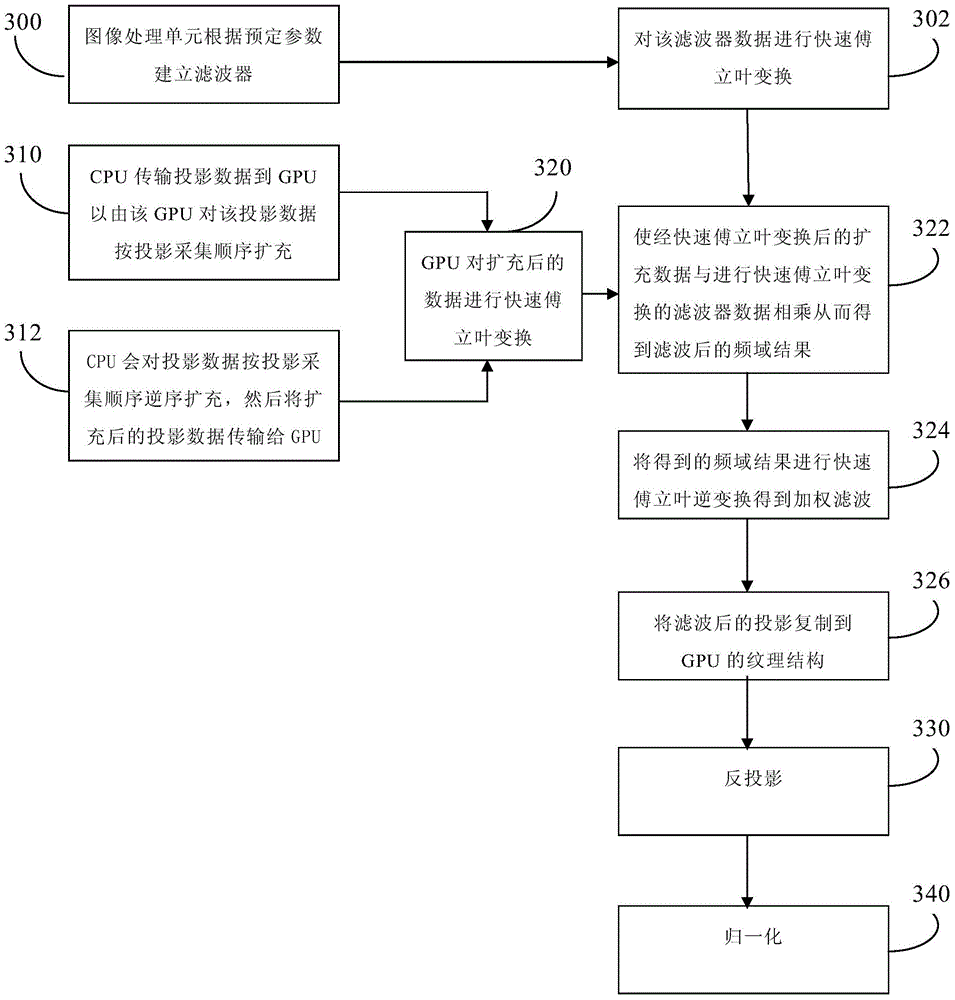

Reconstruction method of tomographic imaging

The invention relates to a reconstruction method of tomographic imaging. According to an embodiment of the invention, the reconstruction method of tomographic imaging includes the following steps: projection data are obtained; a graphics processing unit uses an open computing language to filter the projection data; and the graphics processing unit uses the open computing language to perform back projection on the filtered projection data, and performs normalization processing on a volume image reconstructed through the back projection. By coordinating task sharing between a CPU and the GPU, the reconstruction method of tomographic imaging provided by the embodiment of the invention ingeniously utilizes structural features of the open computing language and reduces extra time occupied by data transmission, and thus the speed of reconstruction can be substantially improved. In addition, the reconstruction method of tomographic imaging also improves quality of imaging through processing such as weighting and normalization.

Owner:RAYCO SHANGHAI MEDICAL PROD
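
The filter, back-project and normalize sequence can be shown with a small CPU-only Python sketch. It does not use OpenCL or a GPU, and it assumes a parallel-beam geometry, a Ram-Lak ramp filter, nearest-neighbour interpolation and min-max normalization, none of which the abstract specifies; the function names are hypothetical.

```python
import math
from typing import List


def ramp_kernel(half_width: int) -> List[float]:
    """Discrete Ram-Lak kernel for unit detector spacing, lags -half_width..half_width."""
    kernel = []
    for n in range(-half_width, half_width + 1):
        if n == 0:
            kernel.append(0.25)
        elif n % 2 == 0:
            kernel.append(0.0)
        else:
            kernel.append(-1.0 / (math.pi ** 2 * n ** 2))
    return kernel


def filter_projection(row: List[float]) -> List[float]:
    """Convolve one projection with the ramp kernel (direct convolution)."""
    size = len(row)
    kernel = ramp_kernel(size - 1)
    # kernel[i - j + size - 1] is the kernel value at lag i - j
    return [sum(row[j] * kernel[i - j + size - 1] for j in range(size))
            for i in range(size)]


def reconstruct(sinogram: List[List[float]], size: int) -> List[List[float]]:
    """Filter each projection, back-project, then scale the image to [0, 1]."""
    n_angles = len(sinogram)
    n_det = len(sinogram[0])
    filtered = [filter_projection(row) for row in sinogram]
    center_px = (size - 1) / 2.0
    center_det = (n_det - 1) / 2.0
    image = [[0.0] * size for _ in range(size)]
    for a, row in enumerate(filtered):
        theta = math.pi * a / n_angles
        cos_t, sin_t = math.cos(theta), math.sin(theta)
        for y in range(size):
            for x in range(size):
                t = (x - center_px) * cos_t + (y - center_px) * sin_t + center_det
                d = int(round(t))  # nearest-neighbour detector bin
                if 0 <= d < n_det:
                    image[y][x] += row[d] * math.pi / n_angles
    lo = min(min(r) for r in image)
    hi = max(max(r) for r in image)
    span = (hi - lo) or 1.0
    return [[(v - lo) / span for v in r] for r in image]
```

Each pixel's back-projection sum is independent of every other pixel's, which is what makes this stage a natural fit for the GPU kernels the abstract describes.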

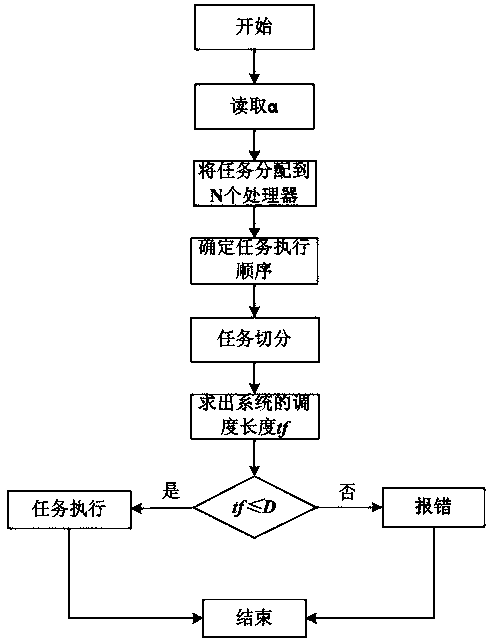

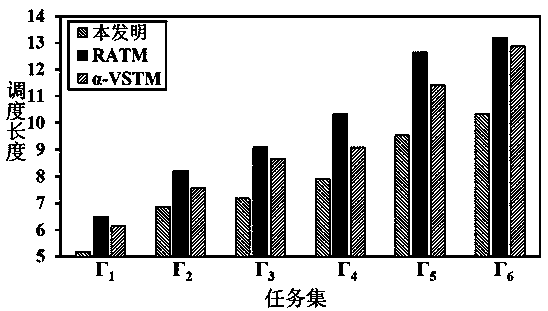

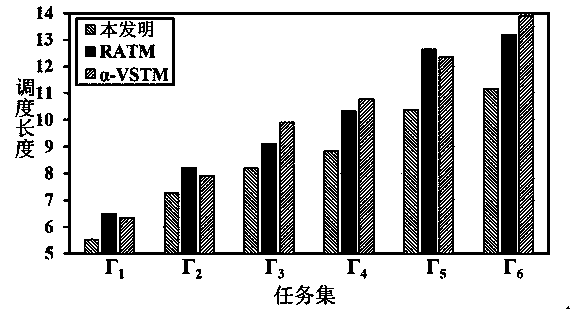

A temperature-scheduling length-aware real-time task scheduling method for heterogeneous multi-core processors

The invention discloses a temperature-aware and schedule-length-aware real-time task scheduling method for heterogeneous multi-core processors. The method comprises the following steps: the system's default weight factor alpha is read; the tasks stored in a task queue are each allocated to an optimal processor; an optimal frequency is selected for each task in the processor task queues; the execution order of the tasks in each processor task queue is determined; tasks that exceed the temperature constraints are segmented; and the current schedule length of the system is compared with the given deadline D shared by all the tasks. If the schedule length is less than or equal to D, the tasks are executed and scheduling is complete; otherwise a scheduling failure is returned, the tasks cannot be executed, and scheduling ends. The method minimizes the schedule length of the system while the system meets its peak temperature constraints and time constraints.

Owner:EAST CHINA NORMAL UNIV
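
Only the first and last steps of the abstract lend themselves to a short sketch: allocating tasks with a weight factor alpha, then checking the schedule length against the shared deadline D. The Python below is a hedged illustration, not the patented algorithm. The cost function, the use of a per-processor power figure as a crude proxy for heating, and the name `schedule` are assumptions; frequency selection, task ordering and thermal segmentation are omitted.

```python
from typing import Dict, List, Optional, Tuple


def schedule(tasks: List[Tuple[str, float]],
             processors: Dict[str, Tuple[float, float]],
             alpha: float, deadline: float) -> Optional[Dict[str, List[str]]]:
    """Greedy allocation followed by a deadline check.

    tasks: (name, work units); processors: name -> (speed, power).
    Returns the tasks assigned to each processor, or None when the
    schedule length exceeds the deadline shared by all tasks.
    """
    finish = {p: 0.0 for p in processors}
    plan: Dict[str, List[str]] = {p: [] for p in processors}
    for name, work in tasks:
        def cost(p: str) -> float:
            speed, power = processors[p]
            # Hypothetical cost: alpha weighs the finish time against a
            # power figure standing in for thermal impact.
            return alpha * (finish[p] + work / speed) + (1 - alpha) * power

        best = min(processors, key=cost)
        finish[best] += work / processors[best][0]
        plan[best].append(name)
    schedule_length = max(finish.values())
    return plan if schedule_length <= deadline else None
```

With `alpha` near 1 this toy cost favours short schedules; with `alpha` near 0 it favours the low-power core and is more likely to miss the deadline, in which case `None` plays the role of the scheduling failure.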


© 2025 PatSnap. All rights reserved.Legal|Privacy policy|Modern Slavery Act Transparency Statement|Sitemap|About US| Contact US: help@patsnap.com