Robot grasp detection method for low-light environments based on a multi-task shared network

A grasp detection method built on a multi-task shared network, applied to robot grasping in low-light environments. It addresses the problem of low detection accuracy; its stated effects are lower enterprise operating costs, controllable cost, and a wider range of working hours.

Embodiment 1

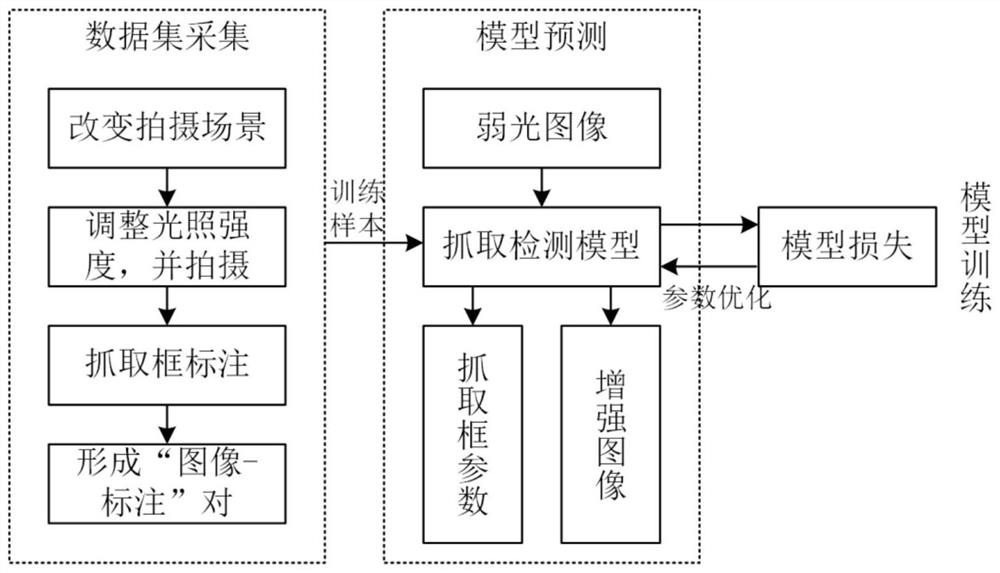

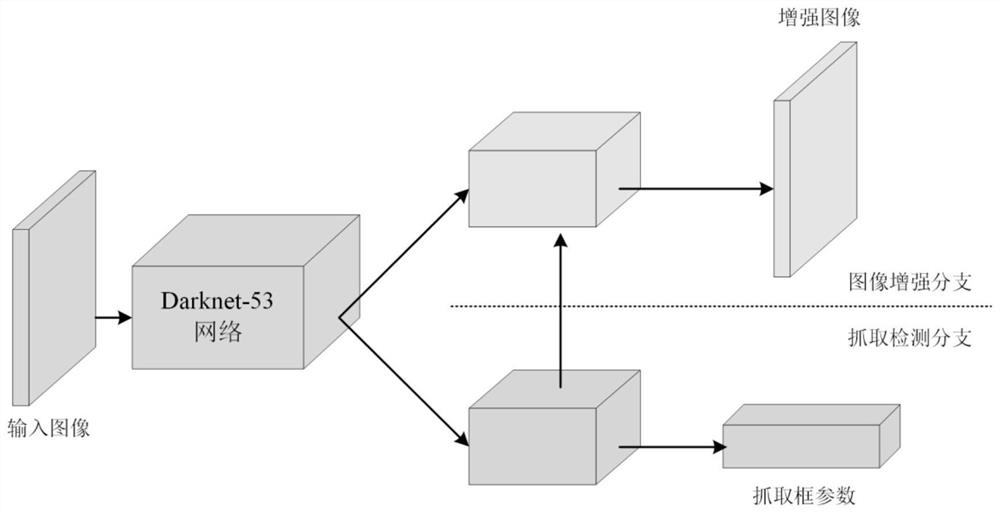

[0048] Figure 1 is a flow chart of the present invention, a robot grasp detection method for low-light environments based on a multi-task shared network, comprising the following steps:

[0049] Step 1: collect images and construct the corresponding data set d. The data set d consists of 4n "low-light image, normal-light image, grasp annotation information" triples. In each triple, the first element is the j-th low-light image of scene i, the second is the normal-illumination image of scene i, and b_i is the set of grasp box annotation parameters for scene i. Here n is the number of scenes in the data set, and j indexes the low-light images taken in each scene. To ensure that the images taken in the low-light environment match those taken under normal lighting strictly, image acquisition is performed as follows, until the complete data set d is obtained;
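The layout of data set d described in Step 1 can be sketched in Python as follows. This is only an illustration under stated assumptions, not the patent's implementation: the class `GraspSample`, the function `build_dataset`, the file-name pattern, and the five-parameter grasp box `(x, y, width, height, angle)` are all hypothetical, since the text says only "annotation parameters". The value of four low-light images per scene is inferred from the stated total of 4n triples over n scenes.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical 5-parameter grasp rectangle (x, y, width, height, angle).
# The source does not specify the annotation format.
GraspBox = Tuple[float, float, float, float, float]

# Assumption: 4n triples over n scenes implies 4 low-light shots per scene.
LOW_LIGHT_PER_SCENE = 4


@dataclass(frozen=True)
class GraspSample:
    scene: int            # scene index i
    shot: int             # low-light shot index j within scene i
    low_light_path: str   # j-th low-light image of scene i
    normal_path: str      # normal-illumination image of scene i
    grasp_box: GraspBox   # annotation b_i, shared by every shot of scene i


def build_dataset(boxes: List[GraspBox]) -> List[GraspSample]:
    """Build one triple per (scene, low-light shot); boxes[i-1] is b_i."""
    samples: List[GraspSample] = []
    for i, box in enumerate(boxes, start=1):
        for j in range(1, LOW_LIGHT_PER_SCENE + 1):
            samples.append(
                GraspSample(
                    scene=i,
                    shot=j,
                    # Hypothetical file layout, for illustration only.
                    low_light_path=f"scene{i:03d}/low_{j}.png",
                    normal_path=f"scene{i:03d}/normal.png",
                    grasp_box=box,
                )
            )
    return samples


if __name__ == "__main__":
    d = build_dataset([
        (120.0, 80.0, 60.0, 20.0, 0.3),
        (200.0, 150.0, 40.0, 25.0, -1.1),
    ])
    print(len(d))  # n = 2 scenes, so 4n = 8 triples
```

Storing the normal-light image and b_i once per scene and repeating them in every triple keeps each low-light image strictly paired with its reference image and annotation, which is the matching requirement the step describes.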

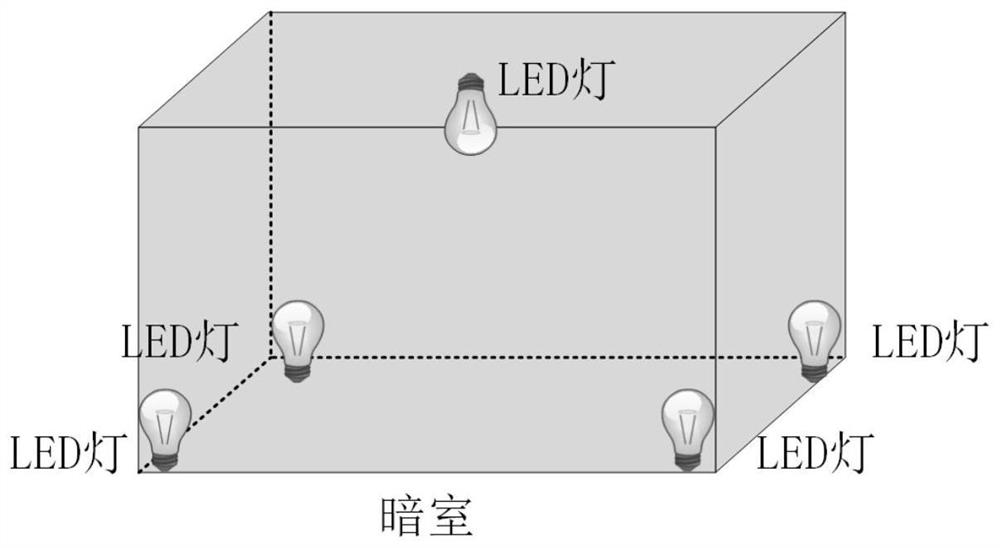

[0050] Step S1.1: Build a darkroom with no light inside, and install dimmable LED bulbs at the bottom ...
