Deep neural network compression method

This deep neural network and network-layer technology, applied in neural learning methods, biological neural network models, and neural architectures, addresses problems such as limited improvement, large DNN model size, and memory and battery-life limitations.

Embodiment Construction

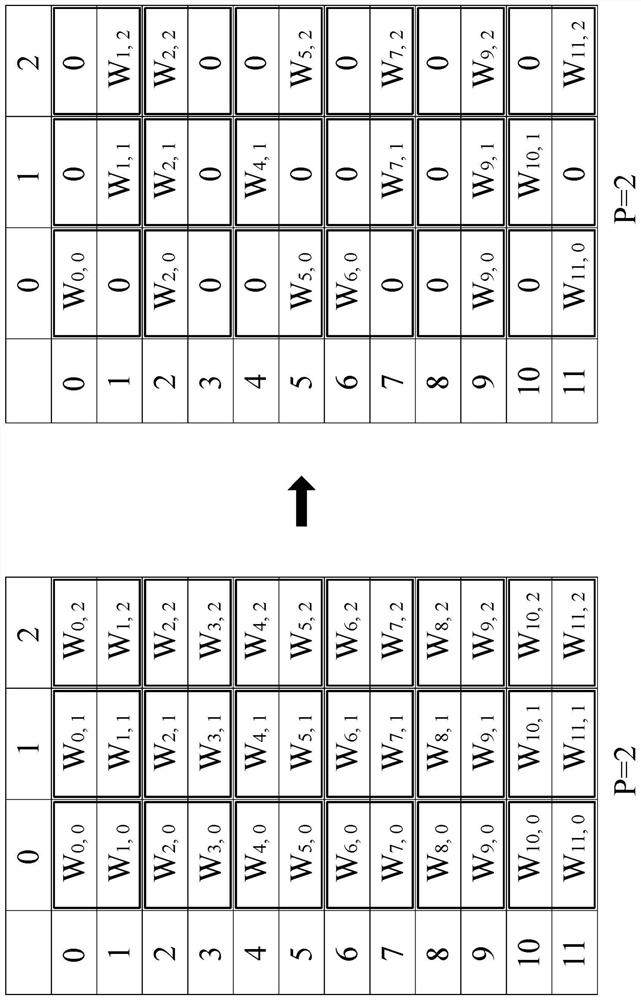

[0059] The embodiments of the present invention are described below with reference to Figures 1A to 10. This description is not intended to limit the embodiments of the present invention; it is one example of the present invention.

[0060] As shown in Figure 7 and Figure 10, a deep neural network compression method according to an embodiment of the present invention, with pruning of optional local conventional weights, includes the following steps. Step 11 (S11): a processor 100 is used to obtain at least one weight of a deep neural network. The weight lies between two adjacently connected network layers: an input layer (11, 21) of the deep neural network and the corresponding output layer. The input value of a node of the input layer (11, 21), multiplied by the corresponding weight value, equals the output value of the node of the output layer. The value of the P parameter is set, and the wei...
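The weight relation in step S11 can be illustrated with a minimal sketch. It covers only the relation between input-node values, weights, and output-node values. The function name `layer_output`, the layer sizes, and all numeric values are hypothetical and do not come from the patent. Summing the products over several input nodes is an assumption based on how fully connected layers are usually computed; the text above states only the single product.

```python
# Hypothetical example: names, sizes, and values are illustrative only.

def layer_output(inputs, weights):
    """Compute output-node values for one pair of adjacent layers.

    weights[i][j] is the weight on the connection from input node i
    to output node j. Each input value is multiplied by its weight,
    and the products arriving at the same output node are summed
    (the summation is an assumption, see the lead-in).
    """
    n_out = len(weights[0])
    outputs = [0.0] * n_out
    for i, x in enumerate(inputs):
        for j in range(n_out):
            outputs[j] += x * weights[i][j]
    return outputs


# Three input nodes connected to two output nodes.
inputs = [1.0, 2.0, 3.0]
weights = [
    [0.5, -1.0],
    [0.0, 2.0],
    [1.0, 0.0],
]
outputs = layer_output(inputs, weights)
print(outputs)
```

Weights equal to zero, such as `weights[1][0]` here, contribute nothing to the output node, which is why removing small or redundant weights can shrink a model without changing such terms.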
