Method and device for driving virtual human in real time, electronic equipment and medium

A technique for driving a virtual human in real time, applied in the field of virtual human processing. It addresses the problems of a long computing process (one hour or several hours) and poor real-time driving performance, with the effect of improving real-time performance and parallel computing capability and reducing the amount of computation and the volume of data transfer.

Examples

Embodiment 1

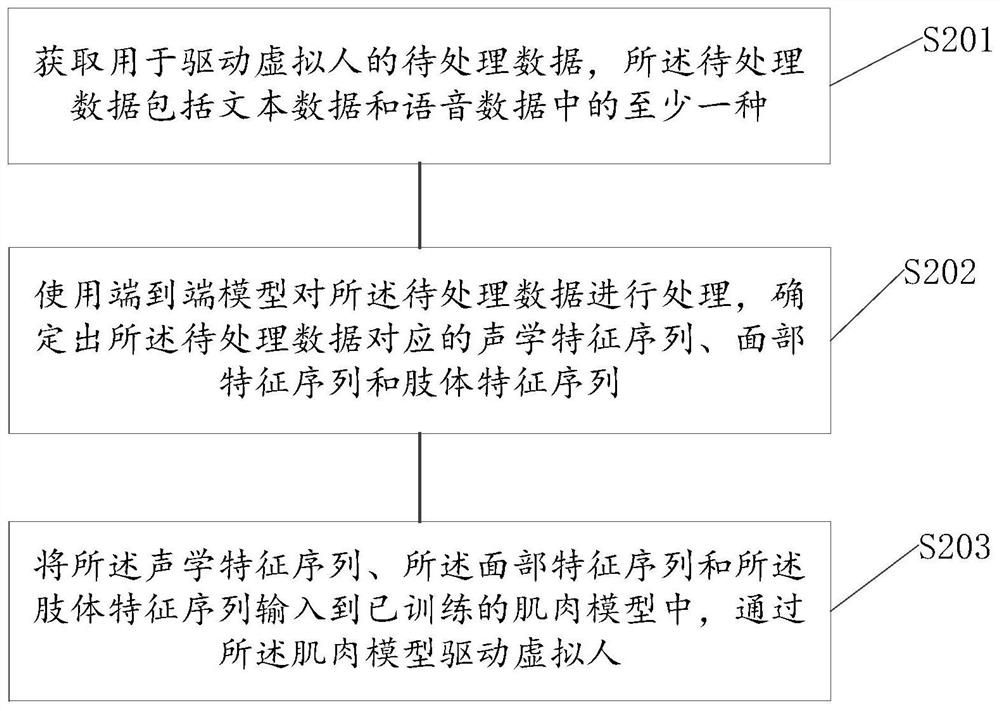

[0090] Refer to Figure 2, which shows a flow chart of the steps of Embodiment 1 of a method for driving a virtual human in real time according to the present invention. The method may specifically include the following steps:

[0091] S201. Obtain the data to be processed for driving the virtual human, where the data to be processed includes at least one of text data and voice data;

[0092] S202. Use an end-to-end model to process the data to be processed, and determine an acoustic feature sequence, a facial feature sequence, and a limb feature sequence corresponding to the data to be processed;

[0093] S203. Input the acoustic feature sequence, the facial feature sequence and the limb feature sequence into the trained muscle model, and drive the virtual human through the muscle model;

[0094] Wherein, step S202 includes:

[0095] Step S2021, acquiring the text feature and duration feature of the data to be processed;

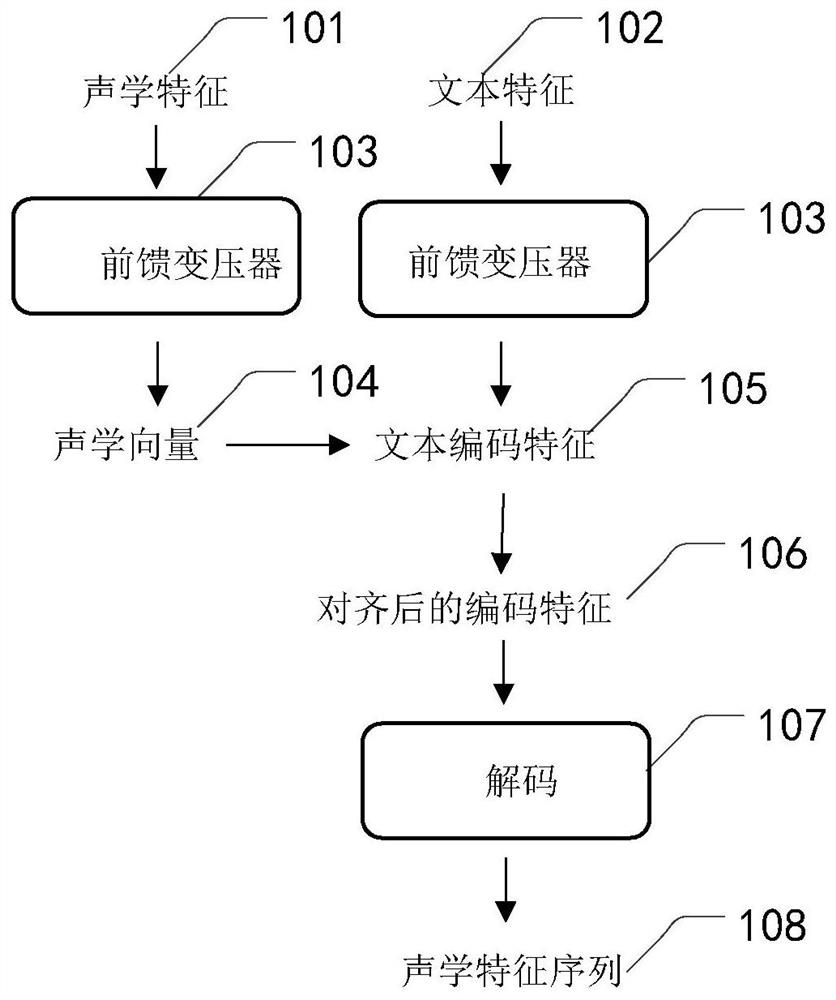

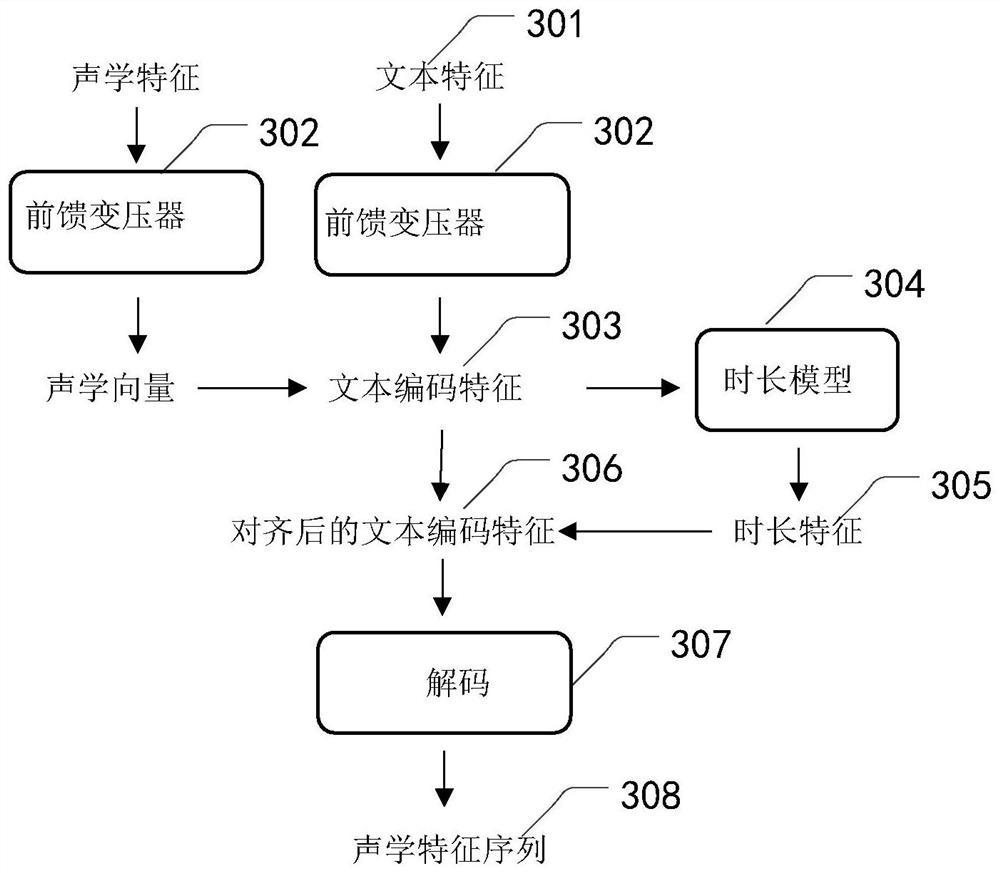

[0096] Step S2022. Determine the acoustic feature sequenc...
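The data flow of steps S201 to S203 can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the names `end_to_end_model`, `muscle_model`, `FeatureSequences` and `frames_per_char` are hypothetical, the "models" are toy functions rather than trained networks, and the way the duration feature is used (repeating each text feature for a fixed number of frames) is only one plausible reading, since the source text is truncated at this point.

```python
from dataclasses import dataclass
from typing import Dict, List

Frame = List[float]


@dataclass
class FeatureSequences:
    """Hypothetical container for the three outputs named in S202."""
    acoustic: List[Frame]
    facial: List[Frame]
    limb: List[Frame]


def end_to_end_model(text: str, frames_per_char: int = 2) -> FeatureSequences:
    """Toy stand-in for S202: text in, three frame-aligned sequences out."""
    # Toy text feature: one number per character (assumption).
    text_features = [ord(c) / 255.0 for c in text]
    # Toy duration feature: a fixed frame count per character (assumption).
    durations = [frames_per_char] * len(text_features)
    # Repeat each text feature for its duration so that all three
    # output sequences share the same frame timeline.
    frames = [f for f, d in zip(text_features, durations) for _ in range(d)]
    return FeatureSequences(
        acoustic=[[f] for f in frames],
        facial=[[f, 1.0 - f] for f in frames],
        limb=[[0.5 * f] for f in frames],
    )


def muscle_model(seqs: FeatureSequences) -> List[Dict[str, Frame]]:
    """Toy stand-in for S203: emit one driving command per frame."""
    return [
        {"audio": a, "face": fa, "limbs": li}
        for a, fa, li in zip(seqs.acoustic, seqs.facial, seqs.limb)
    ]


def drive_virtual_human(text: str) -> List[Dict[str, Frame]]:
    seqs = end_to_end_model(text)  # S202
    return muscle_model(seqs)      # S203


commands = drive_virtual_human("hi")  # S201: text data as input
print(len(commands))  # 2 characters x 2 frames each = 4
```

The point of the sketch is only the shape of the pipeline: a single model call yields three sequences on a common timeline, and the muscle model consumes them frame by frame.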

Embodiment 2

[0117] Refer to Figure 4, which shows a flow chart of the steps of Embodiment 2 of a method for driving a virtual human in real time according to the present invention. The method may specifically include the following steps:

[0118] S401. Obtain the data to be processed for driving the virtual human, where the data to be processed includes at least one of text data and voice data;

[0119] S402. Use an end-to-end model to process the data to be processed, and determine the fusion feature sequence corresponding to the data to be processed, wherein the fusion feature sequence is obtained by fusing the acoustic feature sequence, the facial feature sequence and the limb feature sequence corresponding to the data to be processed;

[0120] S403. Input the fusion feature sequence into the trained muscle model, and drive the virtual human through the muscle model;

[0121] Wherein, step S402 includes:

[0122] Step S4021, acquiring the text feature and duration feature of the data to be proces...
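As an illustration of what fusing the three sequences in S402 could look like, the sketch below concatenates the acoustic, facial and limb features of each frame into one vector. This is an assumption: the source does not state the fusion operation, and frame-wise concatenation is simply one common choice. The function name `fuse_sequences` is hypothetical, and the sequences are assumed to be aligned to the same number of frames.

```python
from typing import List, Sequence

Frame = List[float]


def fuse_sequences(
    acoustic: Sequence[Sequence[float]],
    facial: Sequence[Sequence[float]],
    limb: Sequence[Sequence[float]],
) -> List[Frame]:
    """Frame-wise concatenation of three aligned feature sequences.

    Assumption: "fusion" is modeled as concatenation per frame; the
    source does not specify the actual operation.
    """
    if not (len(acoustic) == len(facial) == len(limb)):
        raise ValueError("feature sequences must have the same number of frames")
    return [list(a) + list(f) + list(li) for a, f, li in zip(acoustic, facial, limb)]


# Two frames, with 2 acoustic, 1 facial and 3 limb features per frame.
acoustic = [[0.1, 0.2], [0.3, 0.4]]
facial = [[1.0], [2.0]]
limb = [[5.0, 6.0, 7.0], [8.0, 9.0, 10.0]]

fused = fuse_sequences(acoustic, facial, limb)
print(fused[0])  # [0.1, 0.2, 1.0, 5.0, 6.0, 7.0]
```

With a single fused sequence, the muscle model in S403 receives one input per frame instead of three separate ones.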
