Method for quantizing PRELU activation function

A technology involving activation functions and data quantization, applied in the field of neural network acceleration, with the effect of reducing inference time.


Detailed Description of the Embodiments

[0042] To make the technical content and advantages of the present invention clearer, the invention is described in further detail below with reference to the accompanying drawings.

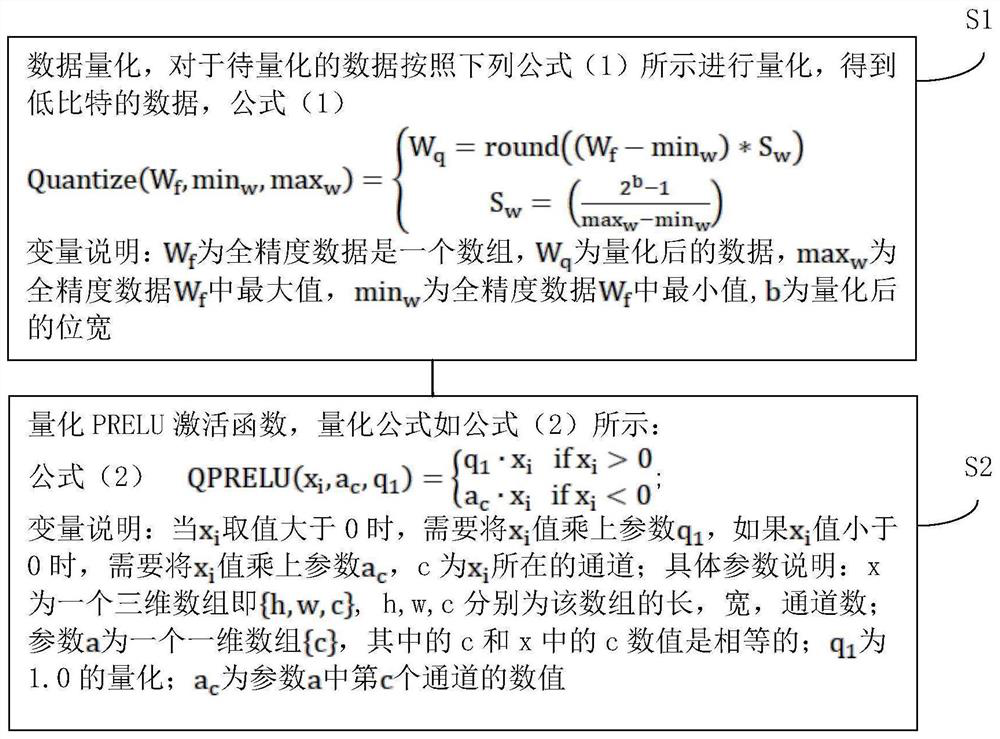

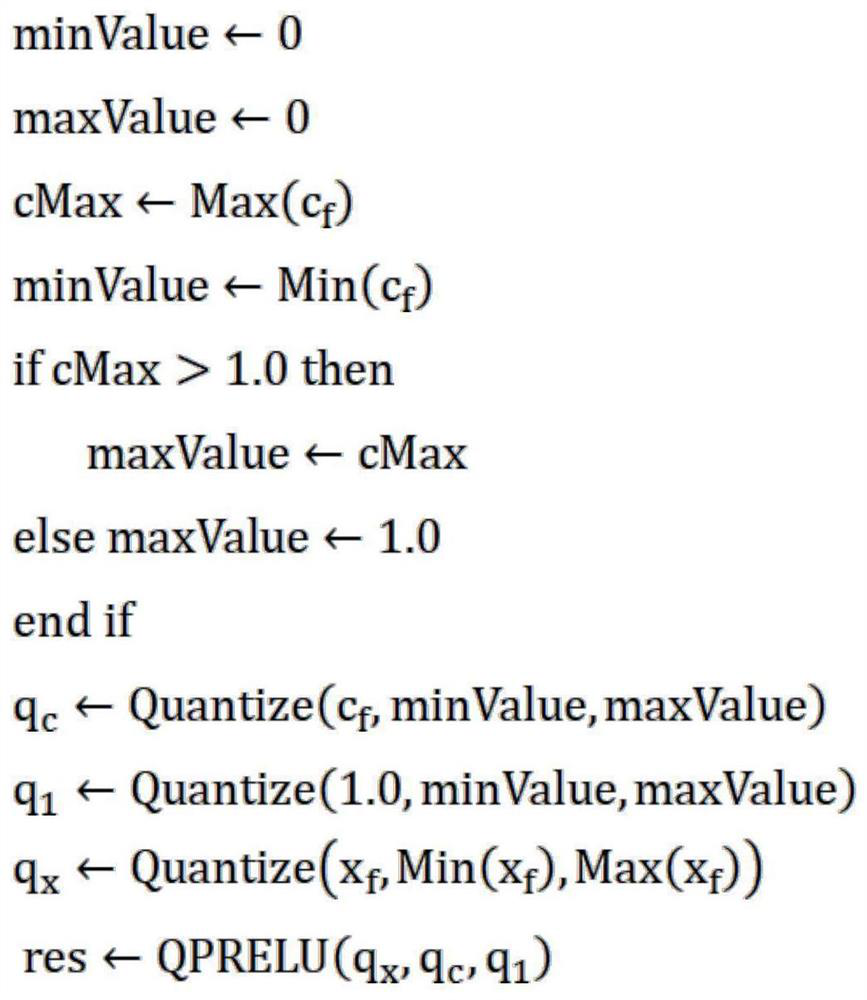

[0043] As shown in Figure 1, the method of the present invention for quantizing the PRELU activation function comprises the following steps:

[0044] S1, data quantization: the data to be quantized are quantized according to formula (1) below to obtain low-bit data:

[0045] Formula (1)

[0046] Variable description: $W_f$ is the full-precision data (an array); $W_q$ is the quantized data; $\max_w$ is the maximum value in the full-precision data $W_f$; $\min_w$ is the minimum value in $W_f$; $b$ is the bit width after quantization.

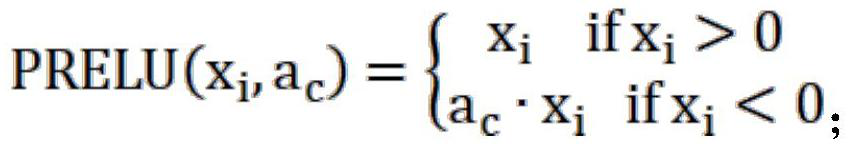
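The body of formula (1) is not reproduced in this excerpt. Given the variables listed for it, a common form is min-max (asymmetric uniform) quantization, $W_q = \mathrm{round}\left(\frac{W_f - \min_w}{\max_w - \min_w}\,(2^b - 1)\right)$. The Python sketch below assumes that form; it may differ from the patent's exact formula (1), and the function names `quantize_minmax` and `dequantize_minmax` are hypothetical.

```python
from typing import List, Tuple


def quantize_minmax(w_f: List[float], b: int) -> Tuple[List[int], float, float]:
    """Map full-precision values W_f onto unsigned b-bit integer codes W_q.

    ASSUMED form (min-max / asymmetric uniform quantization), not
    necessarily the patent's formula (1):
        W_q = round((W_f - min_w) / (max_w - min_w) * (2**b - 1))
    Returns W_q together with min_w and the step size, so the codes
    can be approximately mapped back to full precision.
    """
    if b < 1:
        raise ValueError("bit width b must be >= 1")
    min_w, max_w = min(w_f), max(w_f)
    levels = 2 ** b - 1                  # largest representable code
    if max_w == min_w:                   # constant input: avoid division by zero
        return [0] * len(w_f), min_w, 1.0
    step = (max_w - min_w) / levels      # full-precision width of one code step
    # Python's round() rounds ties to even.
    w_q = [round((x - min_w) / step) for x in w_f]
    return w_q, min_w, step


def dequantize_minmax(w_q: List[int], min_w: float, step: float) -> List[float]:
    """Approximate inverse of quantize_minmax: W_f ~= W_q * step + min_w."""
    return [q * step + min_w for q in w_q]
```

For example, `quantize_minmax([-2.0, -1.0, 0.0, 5.0], 3)` uses a step size of 1.0 and produces the codes `[0, 1, 2, 7]`. Because Python's `round()` rounds ties to even, a hardware implementation that rounds half away from zero can differ by one code on exact ties.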

[0047] S2, PRELU activation function quantization: the PRELU activation function is quantized according to formula (2):

[0048] Formula (2)

Variable description: when the value of $x_i$ is greater than 0, you...
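Formula (2) is likewise not reproduced in this excerpt. PRELU computes $f(x_i) = x_i$ when $x_i > 0$ and $f(x_i) = a\,x_i$ otherwise, where $a$ is a learned slope. The Python sketch below shows one way to evaluate it on quantized data using integer arithmetic only. It assumes (i) signed input codes whose zero point is 0, so the sign test on $x_i$ can be applied directly to the codes, and (ii) the slope stored as a fixed-point integer with `frac_bits` fractional bits. This is an illustrative scheme, not necessarily the patent's formula (2); `quantize_slope` and `prelu_int` are hypothetical names.

```python
from typing import List


def quantize_slope(a: float, frac_bits: int) -> int:
    """Hypothetical helper: store the PRELU slope a as fixed point a_q = round(a * 2**frac_bits)."""
    return round(a * (1 << frac_bits))


def prelu_int(x_q: List[int], a_q: int, frac_bits: int) -> List[int]:
    """Integer-only PRELU on signed codes x_q (zero point ASSUMED to be 0).

    x_q > 0  -> passed through unchanged (same scale as the input)
    x_q <= 0 -> a * x, computed as (a_q * x_q) >> frac_bits, rounded half up
    """
    if frac_bits < 1:
        raise ValueError("frac_bits must be >= 1")
    half = 1 << (frac_bits - 1)          # 0.5 in the fixed-point format
    out = []
    for x in x_q:
        if x > 0:
            out.append(x)
        else:
            # Python's >> on negative ints is an arithmetic (floor) shift,
            # so adding `half` before shifting rounds half up.
            out.append((a_q * x + half) >> frac_bits)
    return out
```

With `a = 0.25` and `frac_bits = 8`, `quantize_slope` returns 64, and `prelu_int([-8, -3, 0, 5], 64, 8)` returns `[-2, -1, 0, 5]`. Keeping the negative branch to one integer multiply and one shift avoids floating-point work at inference time.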

