Human behavior recognition method for human-machine collaborative assembly scenes

A human behavior recognition method for human-machine collaboration, in the field of human behavior recognition, which addresses low recognition accuracy, a tendency toward recognition errors, and low assembly efficiency.

Examples

Embodiment 1

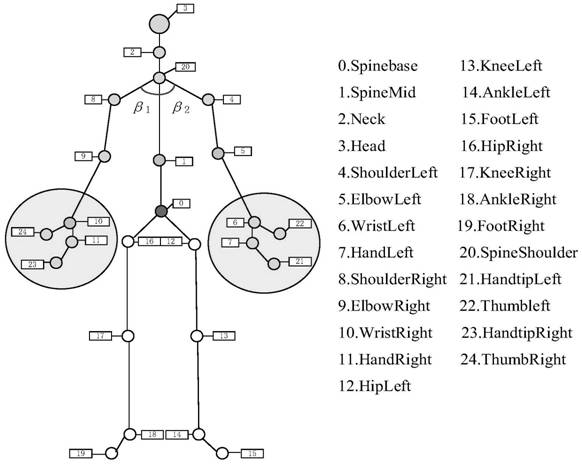

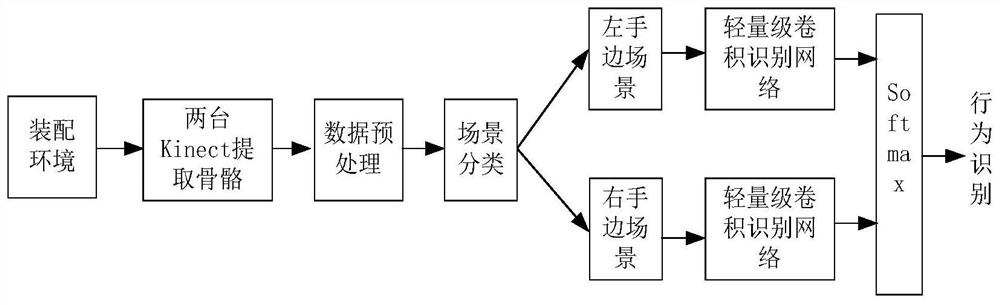

[0035] As shown in Figure 1, a human behavior recognition method for a human-machine collaborative assembly scene includes the following steps:

[0036] Step 1: Set up two somatosensory devices separated by an included angle θ, and obtain from them the coordinate streams of the skeletal joint points produced by the human behavior;
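
The text does not say how each device's stream is stored or how the two streams are synchronized. The sketch below is one possible layout under stated assumptions: each stream is a NumPy array of shape (T, 25, 3), assuming a Kinect v2-style 25-joint skeleton (which matches the spinebase = joint 0 convention in Step 4), and frames from the two devices are paired by nearest timestamp. The names `make_stream` and `pair_frames` and the `max_gap` tolerance are hypothetical.

```python
import numpy as np

NUM_JOINTS = 25  # assumption: Kinect v2-style skeleton, joint 0 = spinebase


def make_stream(timestamps, frames):
    """Bundle one device's joint-coordinate stream (hypothetical helper).

    timestamps: shape (T,) in seconds; frames: shape (T, NUM_JOINTS, 3).
    Untracked joints are assumed to be stored as NaN.
    """
    timestamps = np.asarray(timestamps, dtype=float)
    frames = np.asarray(frames, dtype=float)
    if frames.shape != (len(timestamps), NUM_JOINTS, 3):
        raise ValueError(f"expected ({len(timestamps)}, {NUM_JOINTS}, 3), got {frames.shape}")
    return timestamps, frames


def pair_frames(ts_a, ts_b, max_gap=0.02):
    """Match each frame of device A to the nearest-in-time frame of device B.

    Returns (index_a, index_b) pairs whose time gap is <= max_gap seconds.
    ts_b must be sorted in ascending order.
    """
    if len(ts_b) == 0:
        return []
    pairs = []
    for i, t in enumerate(ts_a):
        j = int(np.searchsorted(ts_b, t))
        # The nearest B frame is on one side or the other of the insertion point.
        candidates = [k for k in (j - 1, j) if 0 <= k < len(ts_b)]
        best = min(candidates, key=lambda k: abs(ts_b[k] - t))
        if abs(ts_b[best] - t) <= max_gap:
            pairs.append((i, best))
    return pairs
```

For example, `pair_frames(np.array([0.0, 0.033, 0.066]), np.array([0.005, 0.040, 0.200]))` keeps the first two A frames and drops the third, whose nearest B frame is more than 20 ms away.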

[0037] Step 2: Screen the joint point coordinate streams for frames in which all skeletal joints are present, and use a motion event segmentation algorithm to locate the start and end positions of each action, yielding the joint point information;
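
The motion event segmentation algorithm itself is not described here, so the sketch below substitutes a simple motion-energy threshold as a stand-in, not the patent's algorithm. It first marks frames whose joints are all tracked (assuming untracked joints are NaN), then takes the first and last frames whose mean joint displacement exceeds a threshold as the action's start and end. The function names and the `threshold` parameter are hypothetical.

```python
import numpy as np


def complete_frames(frames):
    """Boolean mask of frames in which every joint coordinate is finite (tracked)."""
    return np.isfinite(frames).all(axis=(1, 2))


def segment_action(frames, threshold):
    """Locate the start/end frame of one action with a motion-energy threshold.

    Stand-in for the motion event segmentation algorithm: the energy of step k is
    the mean Euclidean displacement of all joints from frame k to frame k + 1.
    Returns inclusive (start, end) frame indices, or None if nothing exceeds threshold.
    """
    # Per-joint displacement between consecutive frames, shape (T - 1, J)
    step = np.linalg.norm(np.diff(frames, axis=0), axis=2)
    energy = step.mean(axis=1)
    active = np.flatnonzero(energy > threshold)
    if active.size == 0:
        return None
    # energy[k] describes the move from frame k to frame k + 1
    return int(active[0]), int(active[-1]) + 1
```

In use, one would filter with `frames[complete_frames(frames)]` and then call `segment_action` on the result. A real segmenter would also need to handle several actions per stream and noise near the threshold, which this sketch ignores.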

[0038] Step 3: Resample the joint point information according to the included angle θ to obtain the joint point coordinates;
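
The text does not define what "resampled according to the included angle θ" involves. One plausible reading, sketched below purely as an assumption, is that device B's optical axis is rotated by θ about the vertical (y) axis relative to device A, so B's joints can be rotated into A's frame and averaged with A's. The rotation sign convention depends on the physical setup. Any fixed translation between the devices becomes a constant offset in the average, and the spinebase normalization in Step 4 cancels that offset. `rotation_y` and `fuse_views` are hypothetical names.

```python
import numpy as np


def rotation_y(theta):
    """Rotation matrix about the vertical (y) axis by theta radians."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, 0.0, s],
                     [0.0, 1.0, 0.0],
                     [-s, 0.0, c]])


def fuse_views(frames_a, frames_b, theta):
    """Express device B's joints in device A's frame, then average the two views.

    Assumes device B is rotated by theta about the vertical axis relative to A.
    frames_a, frames_b: time-aligned arrays of shape (T, J, 3).
    A joint missing (NaN) in one view is taken from the other view alone;
    a joint missing in both views stays NaN (NumPy emits a RuntimeWarning).
    """
    b_in_a = frames_b @ rotation_y(theta).T  # rotate every joint vector
    return np.nanmean(np.stack([frames_a, b_in_a]), axis=0)
```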

[0039] Step 4: Taking the coordinates of spinebase (joint 0) as the origin of a local coordinate system, normalize the coordinates of the other joint points, and then smooth them to obtain the skeleton sequence that constitutes an action;
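
The normalization in Step 4 amounts to subtracting joint 0 from every joint in each frame. The smoothing method is not specified. The sketch below assumes a centred moving average over time with edge padding, so the sequence keeps its original length. `normalize_and_smooth` and its `window` parameter are hypothetical.

```python
import numpy as np

SPINE_BASE = 0  # joint 0 (spinebase), per Step 4


def normalize_and_smooth(frames, window=5):
    """Make joints relative to spinebase, then apply a centred moving average in time.

    frames: shape (T, J, 3). window: positive odd number of frames (assumed method).
    """
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    # Slice keeps the joint axis so the subtraction broadcasts over all joints.
    local = frames - frames[:, SPINE_BASE:SPINE_BASE + 1, :]  # spinebase -> (0, 0, 0)
    half = window // 2
    # Repeat the first/last frame so the output has the same number of frames.
    padded = np.pad(local, ((half, half), (0, 0), (0, 0)), mode="edge")
    kernel = np.ones(window) / window
    return np.apply_along_axis(lambda s: np.convolve(s, kernel, mode="valid"), 0, padded)
```

Because every frame is expressed relative to spinebase, the result no longer depends on where the operator stands relative to the devices, only on body posture.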

[0040] Step 5: Simplify the vectors ...
