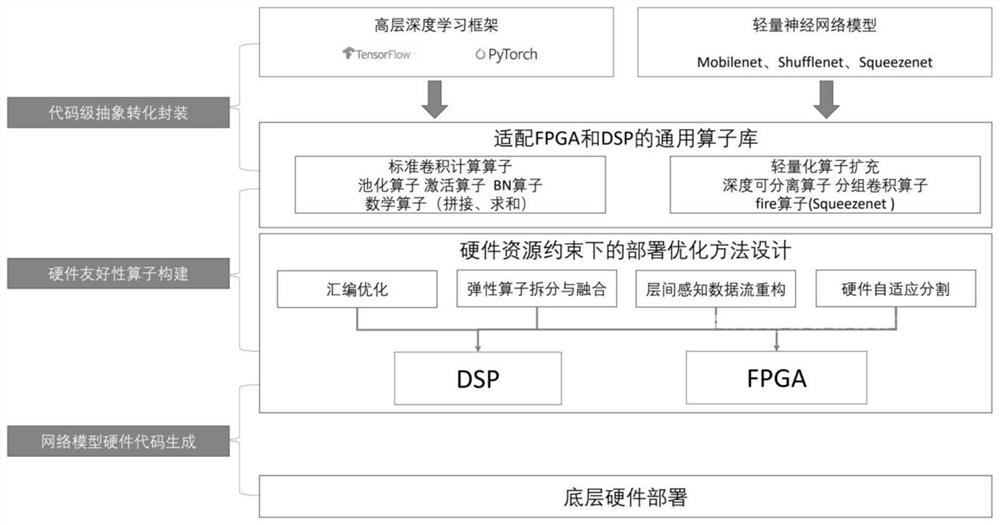

Design method for deploying and optimizing operator library on FPGA and DSP

A design method in the field of operator technology, applicable to software deployment, biological neural network models, neural architectures, and related areas. It addresses problems such as limited hardware storage resources, the difficulty of deploying on edge hardware devices, and the high number of memory accesses incurred by fine-grained operators, and it reduces the time spent on manual code porting.

AI Technical Summary

Problems solved by technology

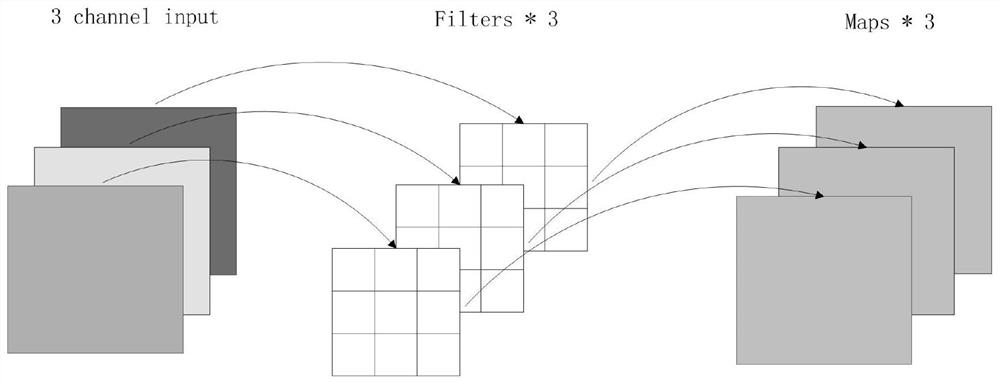

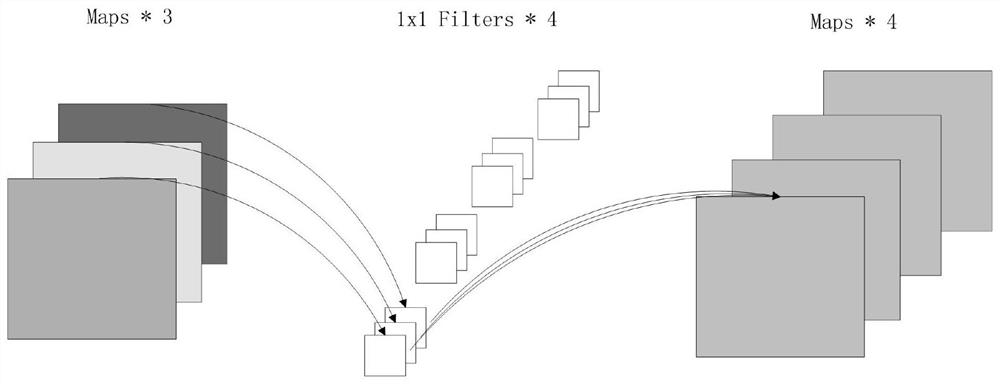

Embodiment Construction

[0038] To make the technical means, inventive features, objectives, and effects of the present invention easy to understand, the invention is further described below with reference to specific embodiments.

[0039] In the description of the present invention, it should be noted that the orientations or positional relationships indicated by terms such as "upper", "lower", "inner", "outer", "front end", "rear end", "both ends", "one end", and "another end" are based on those shown in the drawings. They are used only for convenience in describing the present invention and simplifying the description, and do not indicate or imply that the device or element referred to must have a specific orientation or be constructed and operated in a specific orientation. Therefore, they should not be construed as limiting the invention. In addition, the terms "first" and "second" are used for descriptive purposes only, and should not be understood ...
