Video generation method, device, electronic equipment and storage medium

A video generation technology, applied in the field of video processing, that addresses problems such as the loss of source-domain character feature details and insufficient resolution, and achieves the effect of enhancing temporal consistency.


Examples

Embodiment 1

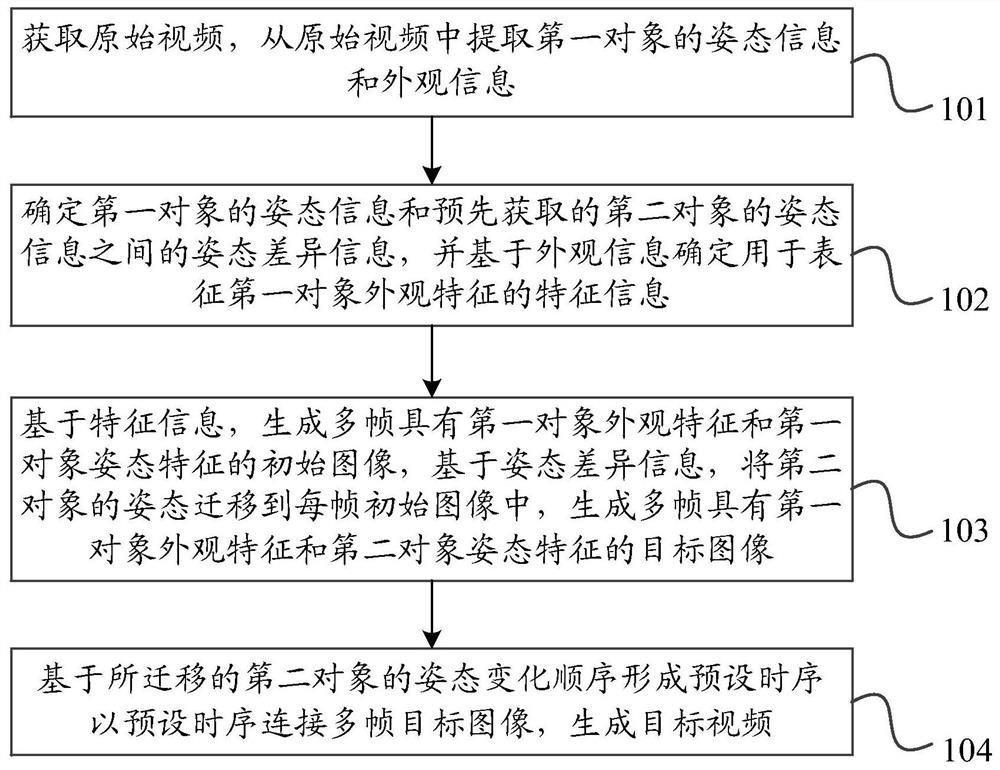

[0097] Embodiment 1. Generation of each frame of video

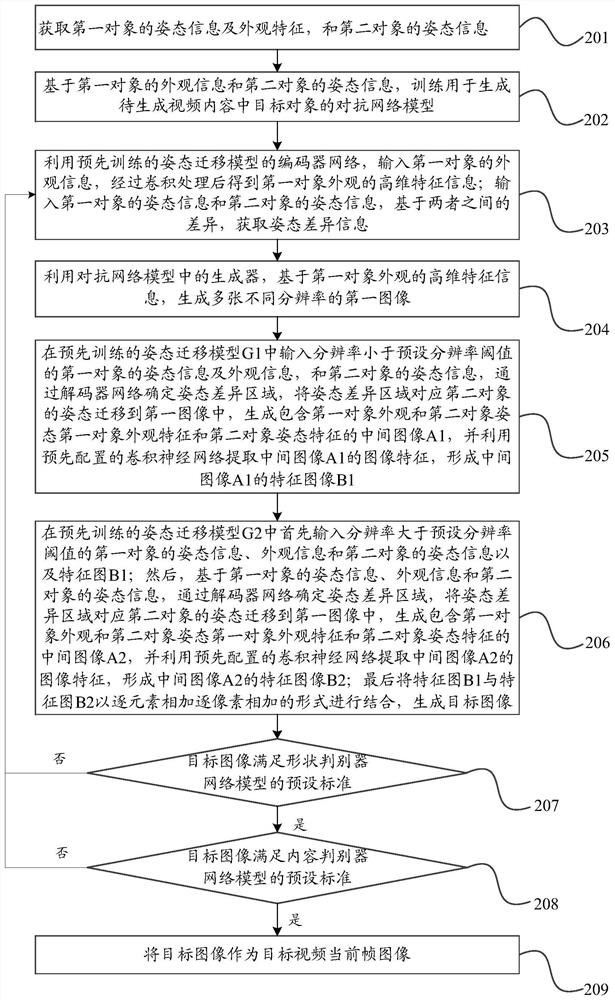

[0098] As shown in Figure 2, in the video generation method provided by the embodiment of the present disclosure, the target image may be generated through the following steps:

[0099] Step 201, acquiring posture information and appearance information of a first object, and posture information of a second object.

[0100] In a specific implementation, a pre-trained key point detection model is used to extract the pose and generate the posture information. First, the key points of the human body in the video are located; then the key points are connected according to the joints of the human body; finally, a human skeleton image containing all of the key points is obtained, which completes the acquisition of the posture information. The located key points include those of the face, the hands, and each joint of the human body. For each key point, its corresponding two-dimension...
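The skeleton-image step can be illustrated with a minimal sketch. It assumes the key point detector has already returned 2D pixel coordinates for each key point; the limb list `LIMBS` and the helpers `draw_line` and `render_skeleton` are hypothetical and do not reproduce the key point layout (face, hands, body joints) of the disclosed model.

```python
import numpy as np

# Hypothetical limb list: pairs of key point indices joined by a "bone".
# A real detector defines its own key point layout and connections.
LIMBS = [(0, 1), (1, 2), (2, 3), (1, 4), (4, 5)]


def draw_line(img: np.ndarray, p0, p1, value: int = 255) -> None:
    """Rasterize a segment by sampling evenly spaced points along it."""
    (x0, y0), (x1, y1) = p0, p1
    steps = int(max(abs(x1 - x0), abs(y1 - y0))) + 1
    xs = np.linspace(x0, x1, steps).round().astype(int)
    ys = np.linspace(y0, y1, steps).round().astype(int)
    h, w = img.shape
    # Drop samples that fall outside the image.
    keep = (xs >= 0) & (xs < w) & (ys >= 0) & (ys < h)
    img[ys[keep], xs[keep]] = value


def render_skeleton(keypoints: np.ndarray, height: int, width: int,
                    limbs=LIMBS) -> np.ndarray:
    """Draw a single-channel skeleton image.

    keypoints: (K, 2) array of (x, y) pixel coordinates; NaN marks an
    undetected key point.
    """
    img = np.zeros((height, width), dtype=np.uint8)
    for a, b in limbs:
        pa, pb = keypoints[a], keypoints[b]
        if np.isnan(pa).any() or np.isnan(pb).any():
            continue  # skip bones whose endpoints were not detected
        draw_line(img, pa, pb)
    return img
```

Bones with a missing endpoint are skipped rather than drawn to a default position, so an occluded joint leaves a gap in the skeleton instead of a spurious line.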

Embodiment 2

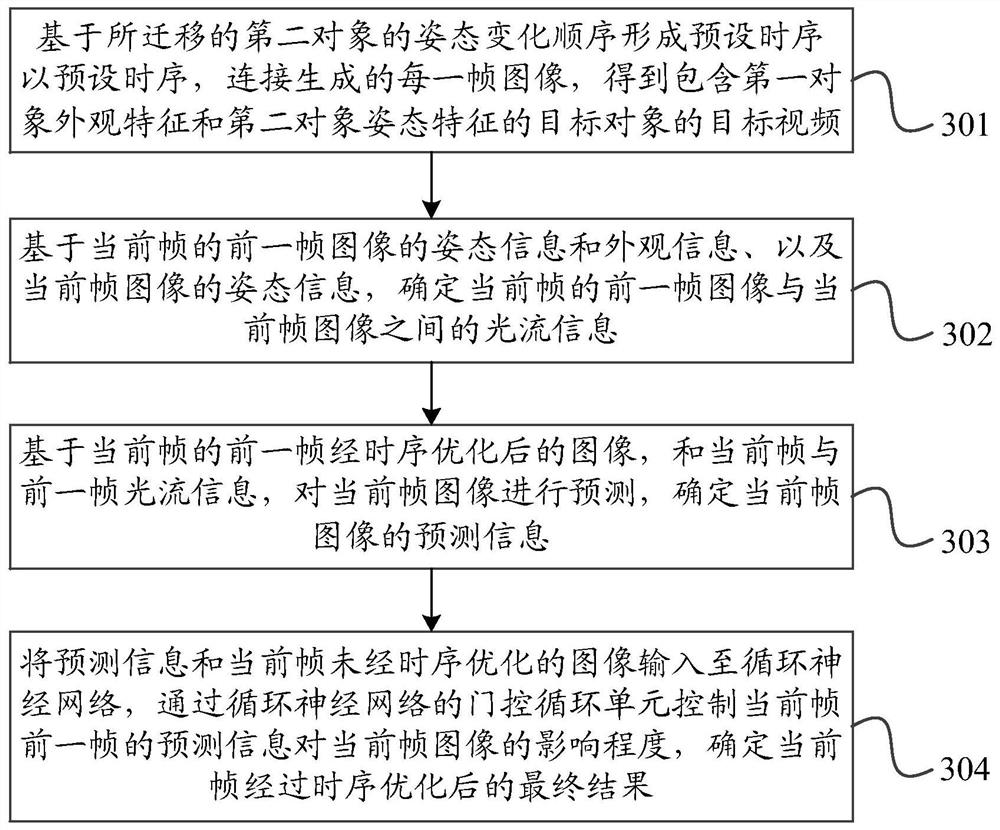

[0119] Embodiment 2. Optimization of Video Timing Information

[0120] As shown in Figure 3, in the video generation method provided by the embodiment of the present disclosure, the video timing information may be optimized through the following steps:

[0121] Step 301, forming a preset time sequence based on the posture change sequence of the second object being transferred, and connecting the frames generated at this preset time sequence to obtain a video of the target object that has the appearance characteristics of the first object and the posture characteristics of the second object.
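Step 301 amounts to ordering the generated frames by the time index of the driving pose sequence and stacking them into one video array. A minimal sketch, assuming each generated frame is a NumPy array keyed by an integer time index and that the preset time sequence is contiguous; `assemble_video` is a hypothetical helper, not part of the disclosure.

```python
import numpy as np


def assemble_video(frames_by_time: dict[int, np.ndarray]) -> np.ndarray:
    """Stack generated frames in the order of the driving pose sequence.

    frames_by_time maps the time index of each pose in the second object's
    posture change sequence to the frame generated for that pose.
    Returns an array of shape (T, *frame_shape).
    """
    if not frames_by_time:
        raise ValueError("no frames to assemble")
    times = sorted(frames_by_time)
    # Assumption: the time sequence is contiguous (t0, t0+1, ..., t0+T-1).
    expected = list(range(times[0], times[0] + len(times)))
    if times != expected:
        raise ValueError(f"time sequence has gaps: {times}")
    shapes = {frames_by_time[t].shape for t in times}
    if len(shapes) != 1:
        raise ValueError(f"frames differ in shape: {shapes}")
    return np.stack([frames_by_time[t] for t in times], axis=0)
```

Rejecting gaps up front makes a missing frame an explicit error instead of a silent jump in the output video.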

[0122] Step 302, determining, based on the posture information and appearance information of the frame preceding the current frame and the posture information of the current frame, the optical flow information between the previous frame and the current frame, wherein the calculation of the optical flow information i...
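The excerpt is truncated before it states how the optical flow is calculated or used. One common way a flow field serves temporal consistency, assumed here rather than taken from the disclosure, is to warp the previous frame toward the current one and measure the remaining difference. The sketch takes the flow field as given; `warp_backward` and `temporal_consistency_error` are hypothetical names.

```python
import numpy as np


def warp_backward(prev_frame: np.ndarray, flow: np.ndarray) -> np.ndarray:
    """Warp prev_frame toward the current frame using a backward flow field.

    flow has shape (H, W, 2); flow[y, x] = (dx, dy) means pixel (x, y) of the
    current frame is taken from (x + dx, y + dy) in the previous frame.
    Uses nearest-neighbour sampling with coordinates clamped to the border.
    """
    h, w = prev_frame.shape[:2]
    ys, xs = np.mgrid[0:h, 0:w]
    src_x = np.clip(np.rint(xs + flow[..., 0]), 0, w - 1).astype(int)
    src_y = np.clip(np.rint(ys + flow[..., 1]), 0, h - 1).astype(int)
    return prev_frame[src_y, src_x]


def temporal_consistency_error(prev_frame: np.ndarray, curr_frame: np.ndarray,
                               flow: np.ndarray) -> float:
    """Mean absolute difference between the current frame and the warped previous frame."""
    warped = warp_backward(prev_frame, flow).astype(np.float64)
    return float(np.mean(np.abs(curr_frame.astype(np.float64) - warped)))
```

Nearest-neighbour sampling keeps the sketch short; a practical implementation would typically use bilinear sampling and mask out occluded pixels before measuring the error.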

© 2025 PatSnap. All rights reserved.