Character video generation method based on a generative adversarial network

The method belongs to network and video technology and is applied in the fields of computer vision and image processing. It addresses the problem that static images cannot meet the needs, and is stated to offer high practical and promotional value, ensured accuracy, and clear logic.

Embodiment Construction

[0040] The technical solution of the present invention is further described below with reference to the accompanying drawings, but is not limited thereto. Any modification or equivalent replacement of the technical solution that does not depart from its spirit and scope falls within the scope of protection of the present invention.

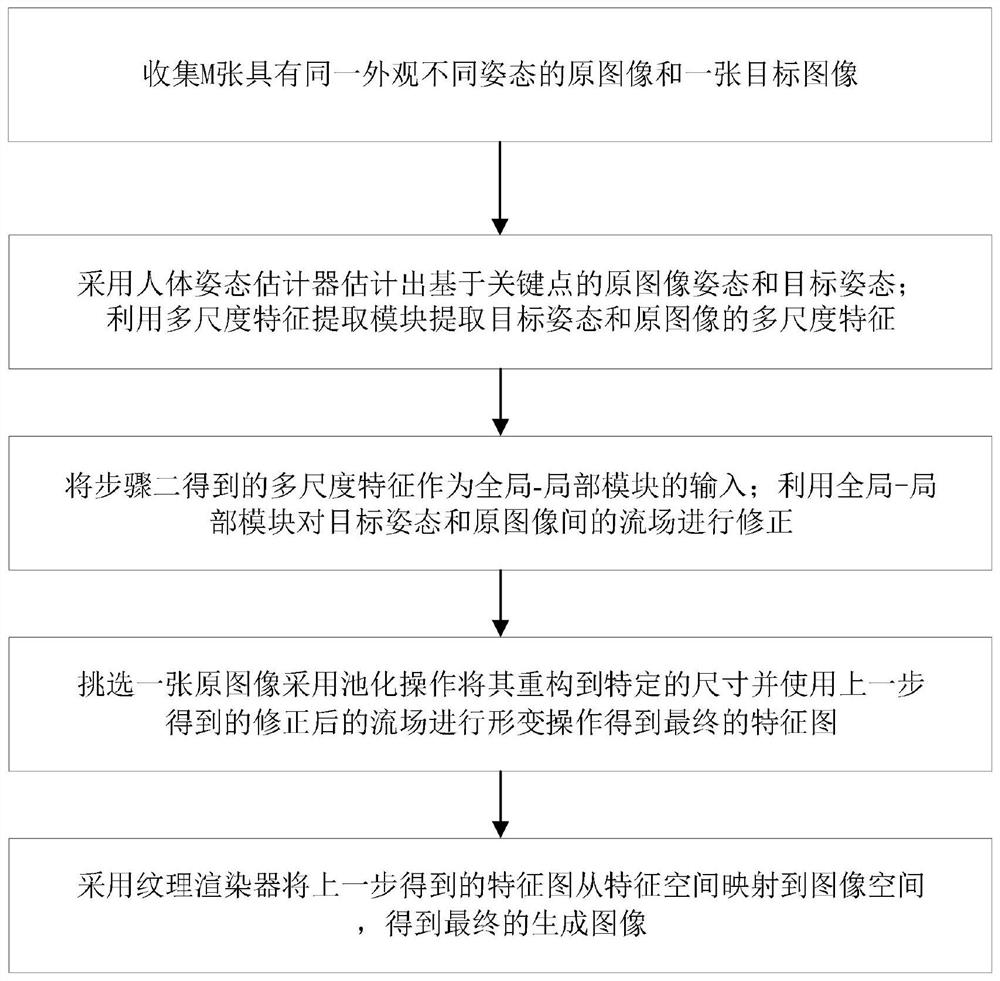

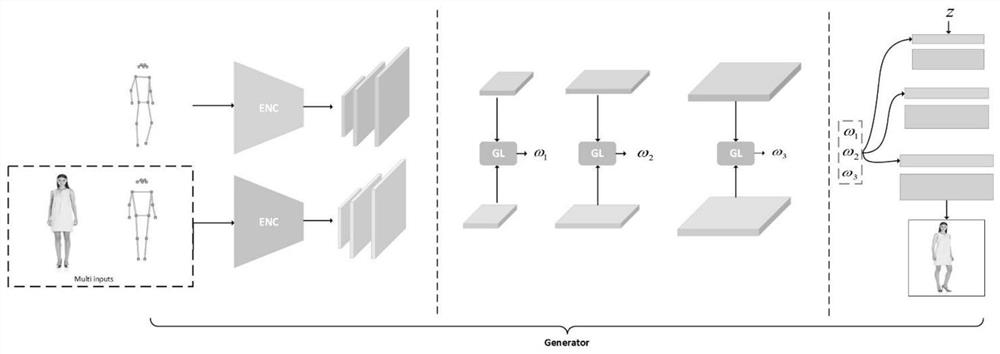

[0041] This embodiment provides a character video generation model based on a generative adversarial network. As shown in Figures 2-5, the model consists of two parts, a generator and a discriminator, where:

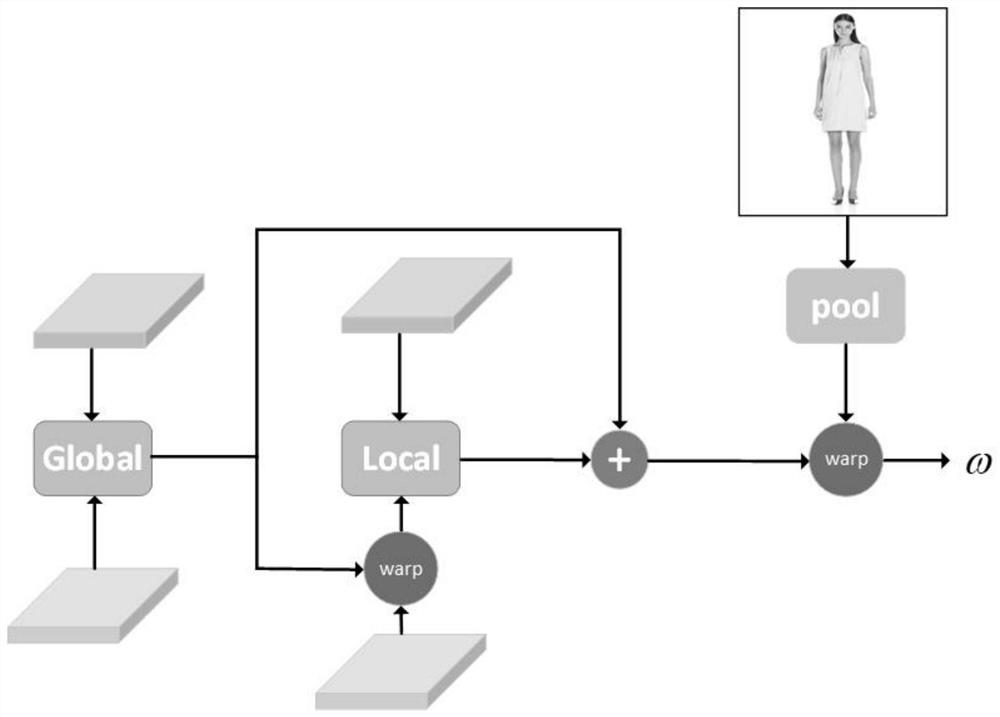

[0042] The generator consists of a multi-scale feature extraction module (a convolutional network with multiple downsampling convolutional layers), a global-local module, and a texture renderer (based on the SPADE network);
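The texture renderer is said to be based on SPADE (spatially-adaptive normalization). As a rough illustration of that general idea only, and not of the patented renderer itself, the sketch below normalizes one feature channel and then applies a per-pixel scale and shift. The function name `spade_modulate` is hypothetical. In a real SPADE layer, `gamma` and `beta` would be predicted by small convolutions from a conditioning map; here they are passed in directly, which is an assumption made to keep the example dependency-free.

```python
import math


def spade_modulate(feature, gamma, beta, eps=1e-5):
    """Normalize one feature channel, then scale and shift it per pixel.

    feature, gamma, beta: 2-D lists (H x W) of floats with equal shape.
    Assumption: gamma and beta are given directly; a real SPADE layer
    predicts them from a conditioning map with convolutions.
    """
    values = [v for row in feature for v in row]
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    std = math.sqrt(var + eps)

    # out[y][x] = gamma[y][x] * (feature[y][x] - mean) / std + beta[y][x]
    return [
        [g * (v - mean) / std + b for v, g, b in zip(f_row, g_row, b_row)]
        for f_row, g_row, b_row in zip(feature, gamma, beta)
    ]


if __name__ == "__main__":
    feature = [[1.0, 3.0], [5.0, 7.0]]
    gamma = [[1.0, 1.0], [1.0, 1.0]]   # uniform scale
    beta = [[0.0, 0.0], [0.0, 0.0]]    # no shift
    print(spade_modulate(feature, gamma, beta))
```

Because the scale and shift vary with pixel position, spatial layout information from the conditioning map is not lost during normalization, which is the usual motivation for this kind of layer.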

[0043] The discriminator is composed of a spatial consistency discriminator and a temporal consistency discriminator. ...
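The source text is truncated here and does not say how the two discriminators receive their inputs. One common arrangement, shown below purely as an assumption, is for a spatial discriminator to judge frames one at a time while a temporal discriminator judges short runs of consecutive frames. The function names and the window length are hypothetical and serve only to illustrate the split.

```python
def spatial_inputs(frames):
    """Return one input per frame, for judging each frame on its own."""
    return [[frame] for frame in frames]


def temporal_inputs(frames, window=3):
    """Return overlapping runs of consecutive frames.

    Assumption: the window length of 3 is illustrative only.
    A clip shorter than the window yields no temporal inputs.
    """
    return [frames[i:i + window] for i in range(len(frames) - window + 1)]


if __name__ == "__main__":
    clip = ["frame0", "frame1", "frame2", "frame3"]  # placeholders for images
    print(spatial_inputs(clip))
    print(temporal_inputs(clip))
```

Under this assumption, the per-frame inputs expose artifacts within a single image, while the windowed inputs expose flicker or discontinuity between neighboring frames.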
