Image description method based on a self-attention mechanism with sample-adaptive semantic guidance

A sample-adaptive image description technique, applied in semantic analysis, neural network learning methods, natural language data processing, and related fields. It addresses problems such as reduced generalization caused by fixed parameters and semantic noise, and achieves good performance, reduced accuracy loss, and strong transferability.

Embodiment Construction

[0071] The following embodiments will describe the present invention in detail in conjunction with the accompanying drawings.

[0072] Embodiments of the present invention include the following steps:

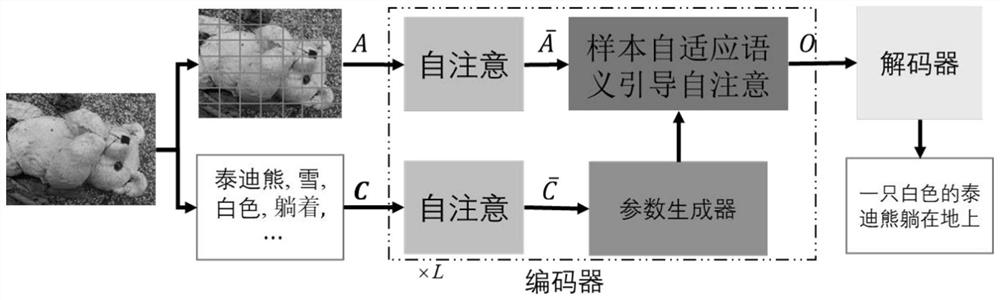

[0073] 1) For each image in the image library, first use a convolutional neural network to extract the corresponding image features A;
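Step 1 does not say how the CNN output is arranged into the features A. A common choice in attention-based captioning is to take the last convolutional feature map and treat each spatial cell as one region vector. The sketch below assumes that layout (a D x H x W map, here a random 2048 x 7 x 7 stand-in for a real backbone's output); `flatten_grid_features` is a hypothetical helper, not part of the described method.

```python
import numpy as np

def flatten_grid_features(feature_map: np.ndarray) -> np.ndarray:
    """Turn a CNN feature map of shape (D, H, W) into N = H*W region
    vectors of shape (N, D), used here as the image features A."""
    d, h, w = feature_map.shape
    # Move the channel axis last, then merge the two spatial axes so that
    # row i*W + j holds the feature vector of spatial cell (i, j).
    return feature_map.transpose(1, 2, 0).reshape(h * w, d)

# Hypothetical stand-in for a CNN backbone output (random, not trained).
rng = np.random.default_rng(0)
feature_map = rng.standard_normal((2048, 7, 7))
A = flatten_grid_features(feature_map)
print(A.shape)  # (49, 2048)
```

Flattening keeps every spatial cell as a separate token, which is what lets the later self-attention steps relate image regions to one another.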

[0074] 2) For each image in the image library, use a semantic concept extractor to extract the semantic concepts C;
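The semantic concept extractor in step 2 is not specified further. One plausible reading, assumed here, is a multi-label classifier that scores every word in a concept vocabulary; the top-k words are kept and looked up in an embedding table to form C. The vocabulary, probabilities, embedding table and `extract_concepts` are all hypothetical.

```python
import numpy as np

def extract_concepts(concept_probs, vocab, embeddings, k=3):
    """Keep the k highest-scoring concepts and return their embeddings.

    concept_probs: (V,) per-concept probabilities from a hypothetical
                   multi-label classifier over the concept vocabulary.
    embeddings:    (V, d) hypothetical word-embedding table.
    Returns the selected words and C with shape (k, d).
    """
    # argsort is ascending: take the last k indices, then reverse them
    # so the concepts come out in descending score order.
    top = np.argsort(concept_probs)[-k:][::-1]
    words = [vocab[i] for i in top]
    return words, embeddings[top]

vocab = ["dog", "grass", "ball", "car", "sky"]
probs = np.array([0.92, 0.75, 0.60, 0.05, 0.30])
rng = np.random.default_rng(0)
emb = rng.standard_normal((len(vocab), 8))
words, C = extract_concepts(probs, vocab, emb, k=3)
print(words, C.shape)  # ['dog', 'grass', 'ball'] (3, 8)
```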

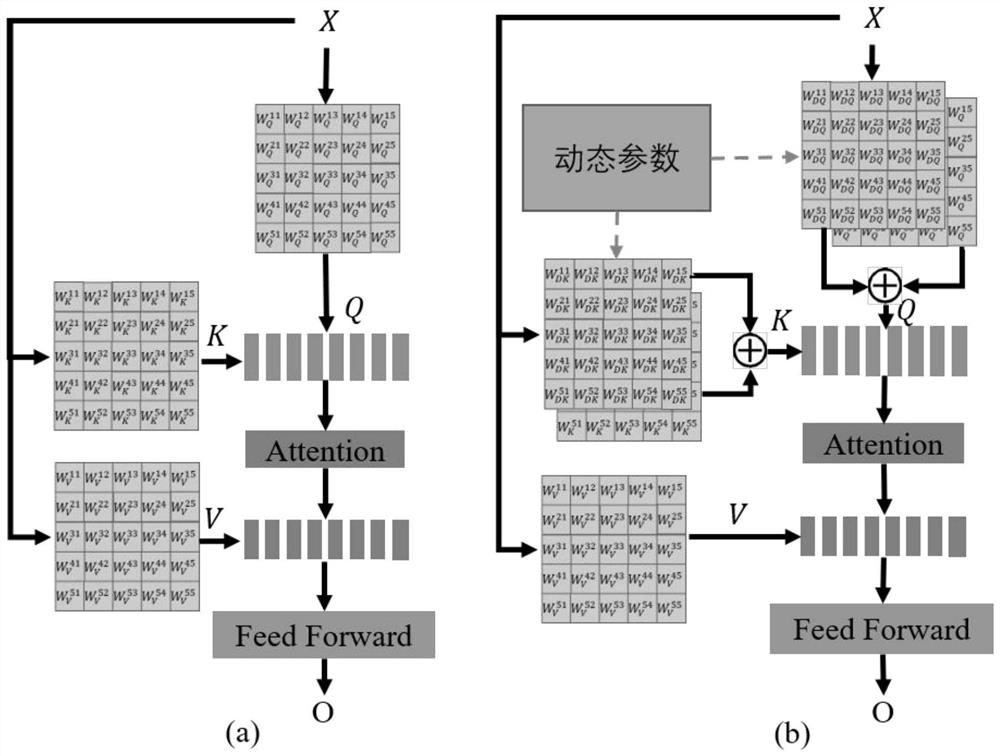

[0075] 3) Feed the image features A and the semantic concepts C into separate self-attention networks that further encode them, yielding the corresponding hidden image features and hidden semantic concept features;
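Step 3 encodes A and C with separate self-attention networks. The following is a minimal single-head sketch, assuming one layer with an input projection to a shared width and a residual connection; the text does not give the layer count, head count or normalization. `H_A` and `H_C` are placeholder names for the two hidden feature sets (their original symbols are not recoverable here), and all weights are random rather than trained.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

class SelfAttentionEncoder:
    """One single-head self-attention layer with a residual connection.
    Weights are random placeholders; a trained model would learn them."""

    def __init__(self, d_in, d_model, seed):
        rng = np.random.default_rng(seed)
        # Input projection to the shared model width.
        self.W_in = rng.standard_normal((d_in, d_model)) / np.sqrt(d_in)
        s = 1.0 / np.sqrt(d_model)
        self.W_q, self.W_k, self.W_v = (
            rng.standard_normal((d_model, d_model)) * s for _ in range(3)
        )

    def __call__(self, X):
        X = X @ self.W_in                                  # (N, d_model)
        Q, K, V = X @ self.W_q, X @ self.W_k, X @ self.W_v
        attn = softmax(Q @ K.T / np.sqrt(Q.shape[-1]))     # (N, N), rows sum to 1
        return X + attn @ V                                # residual connection

rng = np.random.default_rng(0)
A = rng.standard_normal((49, 2048))  # image features from step 1
C = rng.standard_normal((3, 300))    # semantic concept embeddings from step 2
image_encoder = SelfAttentionEncoder(2048, 512, seed=1)
concept_encoder = SelfAttentionEncoder(300, 512, seed=2)
H_A, H_C = image_encoder(A), concept_encoder(C)
print(H_A.shape, H_C.shape)  # (49, 512) (3, 512)
```

Using two independent encoders lets each modality learn its own projections while still mapping both into the same width, which the later steps need when concept features drive attention over image features.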

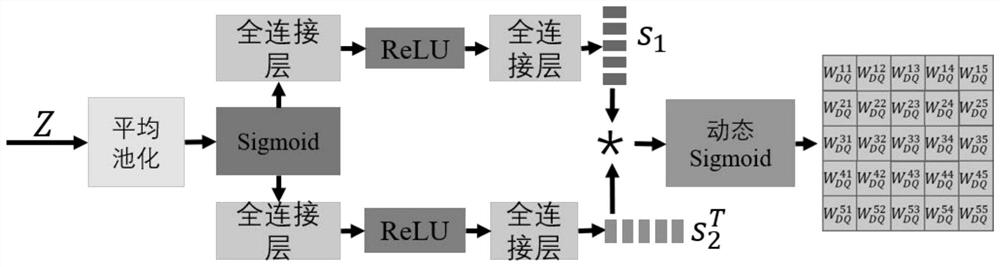

[0076] 4) Send the aforementioned hidden semantic concept features to the parameter generation network to generate the parameters $W_{DQ}$ and $W_{DK}$ of the self-attention network;
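Step 4 is what makes the attention sample-adaptive: the query and key weights are produced from each image's own concept features instead of being fixed after training. The sketch assumes the simplest possible generator, mean pooling followed by one linear map per matrix; the actual network architecture is not given. `ParameterGenerator`, `G_q`, `G_k` and `d_k` are hypothetical, and `W_DQ`, `W_DK` stand for the generated parameters named in the text.

```python
import numpy as np

class ParameterGenerator:
    """Hypothetical parameter-generation network: mean-pools the hidden
    concept features and linearly maps the pooled vector to the entries
    of W_DQ and W_DK, so each image gets its own attention weights."""

    def __init__(self, d_model, d_k, seed=0):
        rng = np.random.default_rng(seed)
        s = 1.0 / np.sqrt(d_model)
        self.d_model, self.d_k = d_model, d_k
        self.G_q = rng.standard_normal((d_model, d_model * d_k)) * s
        self.G_k = rng.standard_normal((d_model, d_model * d_k)) * s

    def __call__(self, H_C):
        g = H_C.mean(axis=0)                   # (d_model,) summary; any concept count works
        shape = (self.d_model, self.d_k)
        W_DQ = (g @ self.G_q).reshape(shape)   # (d_model, d_k)
        W_DK = (g @ self.G_k).reshape(shape)   # (d_model, d_k)
        return W_DQ, W_DK

rng = np.random.default_rng(1)
gen = ParameterGenerator(d_model=64, d_k=16)
H_C1 = rng.standard_normal((3, 64))  # hidden concept features of one image
H_C2 = rng.standard_normal((5, 64))  # hidden concept features of another image
W_DQ1, W_DK1 = gen(H_C1)
W_DQ2, _ = gen(H_C2)
print(W_DQ1.shape, W_DK1.shape)   # (64, 16) (64, 16)
print(np.allclose(W_DQ1, W_DQ2))  # False: the weights depend on the sample
```

Only the generator's own weights (`G_q`, `G_k`) would be trained; the attention weights themselves change from image to image.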

[0077] 5) Input the aforementioned hidden image features into the generated self-attention network to obtain the semantically guided image features O;
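Step 5 applies the generated matrices to the hidden image features. The sketch assumes `W_DQ` and `W_DK` act as the query and key projections of ordinary scaled dot-product attention and, because only these two matrices are described as generated, uses the hidden image features themselves as the values. The matrices below are random stand-ins for a generator's output, and `semantically_guided_attention` is a hypothetical name.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def semantically_guided_attention(H_A, W_DQ, W_DK):
    """Self-attention over hidden image features whose query/key
    projections are supplied per sample.

    Assumption: the values are the hidden image features themselves."""
    Q = H_A @ W_DQ                                   # (N, d_k)
    K = H_A @ W_DK                                   # (N, d_k)
    attn = softmax(Q @ K.T / np.sqrt(Q.shape[-1]))   # (N, N)
    O = attn @ H_A                                   # (N, d_model)
    return O, attn

rng = np.random.default_rng(0)
H_A = rng.standard_normal((49, 64))          # hidden image features
W_DQ = rng.standard_normal((64, 16)) * 0.125  # stand-in generated query weights
W_DK = rng.standard_normal((64, 16)) * 0.125  # stand-in generated key weights
O, attn = semantically_guided_attention(H_A, W_DQ, W_DK)
print(O.shape)                             # (49, 64)
print(np.allclose(attn.sum(axis=1), 1.0))  # True
```

Each row of `attn` sums to 1, so each row of O is a weighted average of the hidden image features, with the weighting decided by the concept-dependent query and key projections.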

[0078] 6) In...