Special personnel emotion recognition method and system based on multi-modal data fusion

A technology in the field of emotion recognition for special personnel. It addresses the lack of an intelligent emotion recognition system for special personnel, and has the effects of facilitating cross-layer transmission, reducing the number of parameters, and mitigating the vanishing-gradient problem.


Examples

Embodiment 1

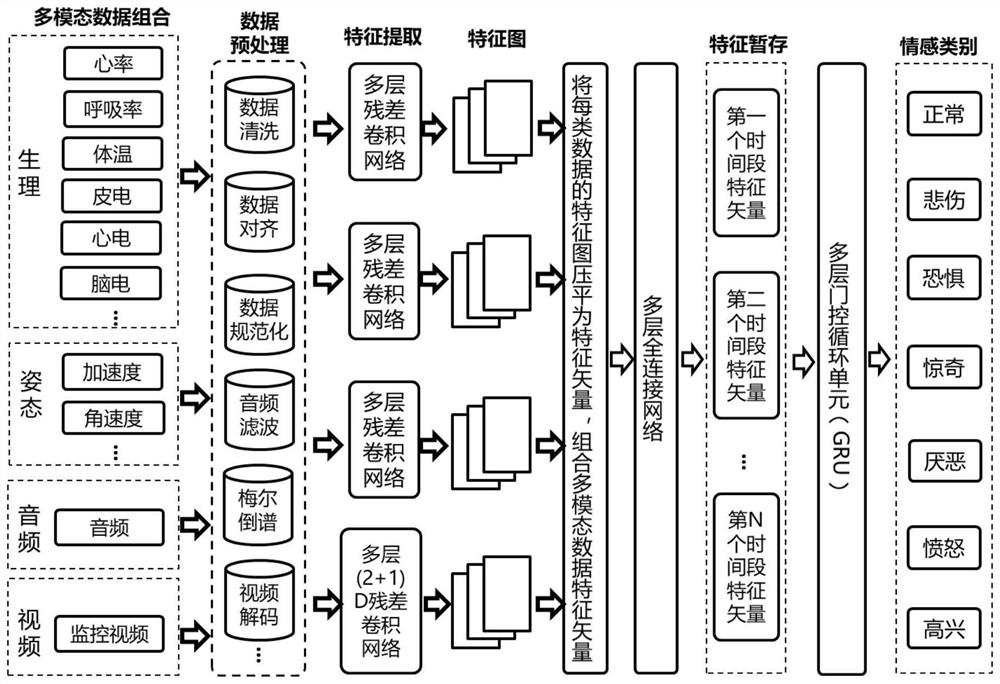

[0071] An emotion recognition method for special personnel based on multi-modal data fusion. The method uses deep learning to mine deep semantic features from the data and to realize cross-modal fusion, and outputs the emotion category of the special personnel in probabilistic form. A deep learning network is constructed to achieve hybrid fusion of the multi-modal data and accurate emotion recognition. As shown in Figure 1, the method includes the following steps:

[0072] (1) Preprocess the acquired physiological parameters, posture parameters, audio, and video of the special personnel, and extract the corresponding spatio-temporal feature vectors of the physiological parameters, posture parameters, audio, and video;

[0073] The physiological parameters and posture parameters of the special personnel are collected through wearable devices over a period of time (for example, 3 seconds). The physiological parameters include heart rate, respiration rate, body temperature, electrodermal activity, ECG, and EEG; posture param...
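The collection step above describes fixed-length acquisition windows (for example, 3 seconds). As a minimal sketch, assuming the wearable streams arrive as one continuous array whose channels already share a single sampling rate, the recording could be cut into windows as follows. The function name `segment_windows`, the non-overlapping layout, and discarding the incomplete tail are illustrative assumptions, not details given in the patent.

```python
import numpy as np


def segment_windows(signal: np.ndarray, fs: float, window_s: float = 3.0) -> np.ndarray:
    """Split a (samples, channels) recording into non-overlapping windows.

    Assumes all channels share the sampling rate fs (Hz). Returns an array of
    shape (num_windows, samples_per_window, channels); trailing samples that
    do not fill a whole window are dropped.
    """
    samples_per_window = int(round(fs * window_s))
    if samples_per_window <= 0:
        raise ValueError("window must contain at least one sample")
    num_windows = signal.shape[0] // samples_per_window
    trimmed = signal[: num_windows * samples_per_window]
    return trimmed.reshape(num_windows, samples_per_window, signal.shape[1])
```

With 10 s of data at 100 Hz (1000 samples), the 3-second default yields three 300-sample windows, and the last 100 samples are discarded.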

Embodiment 2

[0077] The emotion recognition method for special personnel based on multimodal data fusion according to Embodiment 1, differing in the following respects:

[0078] In step (1), preprocessing the acquired physiological parameters, posture parameters, audio, and video of the special personnel means: cleaning the acquired physiological and posture parameter data, filtering the audio, and decoding the video; then normalizing and aligning the physiological parameters, posture parameters, audio, and video acquired within the same time period.
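The preprocessing chain in [0078] (cleaning, normalization, alignment) can be illustrated with NumPy. This is a hedged sketch: the patent does not state the cleaning rule, the normalization formula, or the alignment method, so filling missing (NaN) readings by interpolation, z-score normalization, and linear resampling onto a shared timestamp grid are assumed stand-ins. Audio filtering and video decoding are omitted.

```python
import numpy as np


def clean(values: np.ndarray) -> np.ndarray:
    """Fill missing (NaN) readings by linear interpolation (assumed cleaning rule)."""
    out = np.asarray(values, dtype=float).copy()
    missing = np.isnan(out)
    if missing.all():
        raise ValueError("stream contains no valid samples")
    idx = np.arange(out.size)
    out[missing] = np.interp(idx[missing], idx[~missing], out[~missing])
    return out


def zscore(values: np.ndarray) -> np.ndarray:
    """Normalize one channel to zero mean and unit variance (assumed normalization)."""
    centered = values - values.mean()
    std = values.std()
    return centered / std if std > 0 else centered


def align(t_src: np.ndarray, values: np.ndarray, t_ref: np.ndarray) -> np.ndarray:
    """Resample a stream onto reference timestamps by linear interpolation.

    np.interp holds the end values constant outside the source time range.
    """
    return np.interp(t_ref, t_src, values)


# Example: a 1 Hz heart-rate stream with one dropout, aligned to a 4 Hz grid.
t_hr = np.arange(0.0, 3.0, 1.0)
hr = np.array([72.0, np.nan, 76.0])
t_ref = np.arange(0.0, 3.0, 0.25)
hr_aligned = zscore(align(t_hr, clean(hr), t_ref))
```

Passing every modality through `align` with the same `t_ref` gives one value per modality at each time step of the period, which is the property the alignment step needs.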

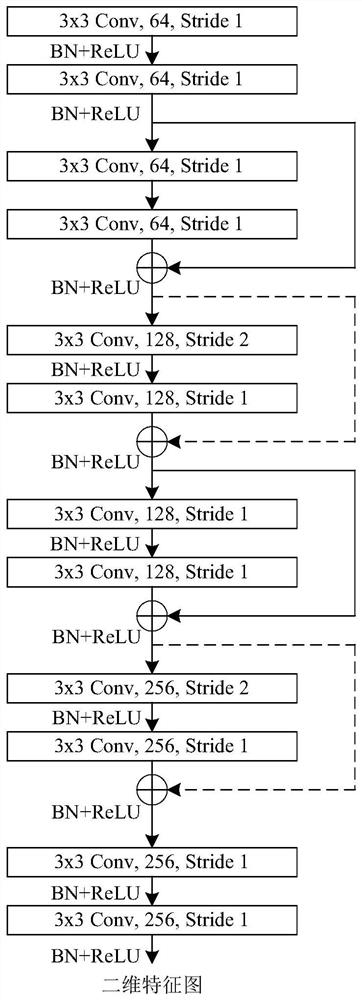

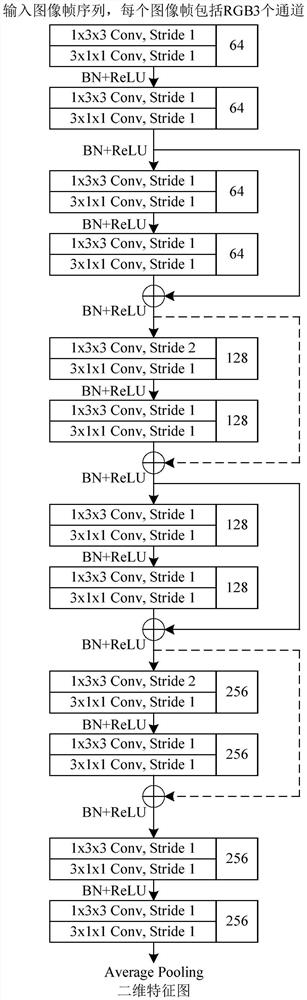

[0079] In step (1), the spatio-temporal feature vector of the physiological parameters is obtained as follows: the physiological parameters collected at each sampling instant are concatenated into a vector; if the sampling frequencies are inconsistent, the highest sampling frequency is ...
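The per-sampling-instant concatenation in [0079] can be sketched as follows, assuming every channel has already been brought onto one common time grid. The source text is truncated where it explains how mismatched sampling frequencies are handled, so that rule is not reproduced here; the function simply rejects channels of unequal length. The channel names and their order are hypothetical, and the multichannel ECG and EEG signals are left out to keep the example short.

```python
import numpy as np

# Hypothetical channel order; the patent names these parameters but fixes no order.
CHANNELS = ("heart_rate", "breathing_rate", "body_temperature", "skin_conductance")


def physio_feature_matrix(readings: dict[str, np.ndarray]) -> np.ndarray:
    """Concatenate the readings taken at each sampling instant into one vector.

    Rows are sampling instants and columns follow CHANNELS, so row t is the
    spliced vector for time t. All channels must share one time grid.
    """
    columns = [np.asarray(readings[name], dtype=float) for name in CHANNELS]
    if len({col.size for col in columns}) != 1:
        raise ValueError("channels have different lengths; resample them first")
    return np.stack(columns, axis=1)
```

Stacking the per-instant vectors over time keeps both axes of the "spatio-temporal" feature: columns index the parameters, rows index time.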

Embodiment 3

[0093] The emotion recognition method for special personnel based on multimodal data fusion according to Embodiment 1 or 2, differing in the following respects:

[0094] Step (2) is implemented as follows:

[0095] The feature maps of the physiological parameters, posture parameters, audio, and video are flattened into feature vectors, and all feature vectors are then concatenated into a global feature vector, realizing feature-level fusion;
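Feature-level fusion as described in [0095] (flatten each modality's feature map, then concatenate) reduces to a few NumPy calls. The modality keys and their fixed order are assumptions; what matters is that the same order is used for every sample, so that each position in the global feature vector always refers to the same feature.

```python
import numpy as np

# Hypothetical fixed modality order used for every sample.
MODALITIES = ("physiological", "posture", "audio", "video")


def fuse_features(feature_maps: dict[str, np.ndarray]) -> np.ndarray:
    """Flatten each modality's feature map and concatenate into one global vector."""
    return np.concatenate([np.ravel(feature_maps[name]) for name in MODALITIES])
```

For instance, feature maps of shapes (4, 8), (3, 5), (16,), and (2, 4, 4) give a global feature vector of 32 + 15 + 16 + 32 = 95 elements.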

[0096] A multi-layer fully connected network is used to extract the joint feature vector, and the feature vectors within this time period are temporarily stored. As shown in Figure 5, this specifically means: the global feature vector is input into the multi-layer fully connected network, in which every neuron in each layer is connected to all neurons in the next layer with a certain weight, and the value of each neuron in each layer is th...
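The fully connected stage in [0096] can be sketched as a plain NumPy forward pass. Because the source text is truncated where it defines each neuron's value, this sketch assumes the common form of a weighted sum plus bias followed by a ReLU activation. The layer sizes, He-style random initialization, the number of emotion categories (5), the buffer length, and reading the joint feature vector from the last hidden layer are all hypothetical. The softmax output only illustrates the probabilistic output mentioned in [0071]; the patent's actual classification stage may differ.

```python
import numpy as np
from collections import deque


def relu(x: np.ndarray) -> np.ndarray:
    return np.maximum(x, 0.0)


def softmax(z: np.ndarray) -> np.ndarray:
    # Subtract the max for numerical stability before exponentiating.
    e = np.exp(z - z.max())
    return e / e.sum()


class FullyConnectedHead:
    """Multi-layer fully connected network (hypothetical sizes and activation)."""

    def __init__(self, layer_sizes: list[int], rng: np.random.Generator):
        pairs = zip(layer_sizes[:-1], layer_sizes[1:])
        # Every neuron in one layer connects to all neurons in the next via a weight matrix.
        self.weights = [rng.normal(0.0, np.sqrt(2.0 / m), size=(m, n)) for m, n in pairs]
        self.biases = [np.zeros(n) for n in layer_sizes[1:]]

    def forward(self, x: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
        h = x
        for w, b in zip(self.weights[:-1], self.biases[:-1]):
            h = relu(h @ w + b)  # weighted sum plus bias, then ReLU (assumed)
        joint = h  # joint feature vector taken from the last hidden layer
        probs = softmax(joint @ self.weights[-1] + self.biases[-1])
        return joint, probs


# Temporary store for the joint feature vectors of the current period
# (maxlen is a hypothetical number of stored vectors).
joint_buffer: deque = deque(maxlen=3)

rng = np.random.default_rng(0)
head = FullyConnectedHead([95, 64, 32, 5], rng)
joint, probs = head.forward(rng.normal(size=95))
joint_buffer.append(joint)
```

A bounded `deque` drops the oldest vector automatically once the period is full, which is one simple way to realize the "temporarily stored" behavior.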
