Real environment facial expression recognition method based on three-dimensional face feature reconstruction and image deep learning

The method applies three-dimensional face feature reconstruction in the field of image processing. It aims to improve facial expression recognition in real environments and to raise recognition accuracy.

Embodiment Construction

[0020] The technical solution of the present invention will be further described in detail below in conjunction with the accompanying drawings.

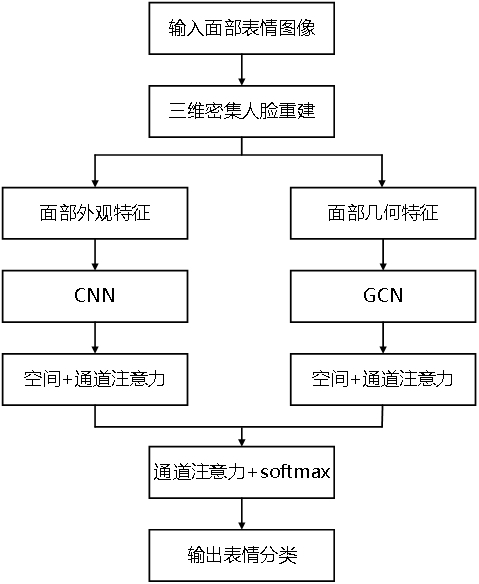

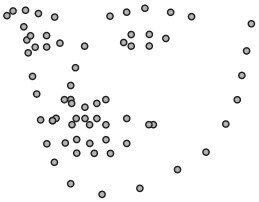

[0021] Step 1: Input the facial expression image captured in the real environment into the three-dimensional dense face reconstruction network, which outputs the facial appearance features and the facial geometric features. The facial appearance feature, shown in Figure 2, is the reconstructed appearance obtained after the multi-pose face image is transformed into a frontal face; to facilitate feature extraction, the present invention stores it as a smooth two-dimensional image. The facial geometric feature, shown in Figure 3, consists of the three-dimensional coordinate information of 68 facial key points; the facial geometric feature is the three-dimensional coordinate information of several key points of the ...
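To make the two outputs of Step 1 concrete, the following is a minimal sketch of how they might be held in memory. It is not the reconstruction network of the present invention. The names `Step1Output` and `flatten_geometry` are hypothetical, and flattening the key points into a single vector is an assumption made only for illustration. The one figure taken from the description is the count of 68 key points, each with three coordinates.

```python
from dataclasses import dataclass
from typing import List, Tuple

NUM_KEYPOINTS = 68  # number of facial key points stated in Step 1

Point3D = Tuple[float, float, float]


@dataclass
class Step1Output:
    """Hypothetical container for the two outputs described in Step 1."""

    # Frontalised appearance feature stored as a 2D image:
    # a list of rows, each row a list of pixel intensities.
    appearance: List[List[float]]
    # Geometric feature: 68 key points, each an (x, y, z) coordinate.
    geometry: List[Point3D]

    def __post_init__(self) -> None:
        if len(self.geometry) != NUM_KEYPOINTS:
            raise ValueError(
                f"expected {NUM_KEYPOINTS} key points, got {len(self.geometry)}"
            )
        if any(len(point) != 3 for point in self.geometry):
            raise ValueError("every key point needs exactly three coordinates")
        if not self.appearance:
            raise ValueError("appearance image must have at least one row")
        width = len(self.appearance[0])
        if any(len(row) != width for row in self.appearance):
            raise ValueError("appearance image rows must have equal length")


def flatten_geometry(geometry: List[Point3D]) -> List[float]:
    """Flatten key points into one vector (an illustrative assumption)."""
    return [coord for point in geometry for coord in point]
```

Under these assumptions, a valid geometric feature flattens to 68 × 3 = 204 values, and an input with the wrong number of key points is rejected before any later stage sees it.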
