Multi-source lane line fusion method and device, vehicle and storage medium

A multi-source lane line fusion technique, applied in the computer field, that addresses the problem that the stability and accuracy of lane line detection cannot be guaranteed, and thereby improves both.


Examples

Embodiment 1

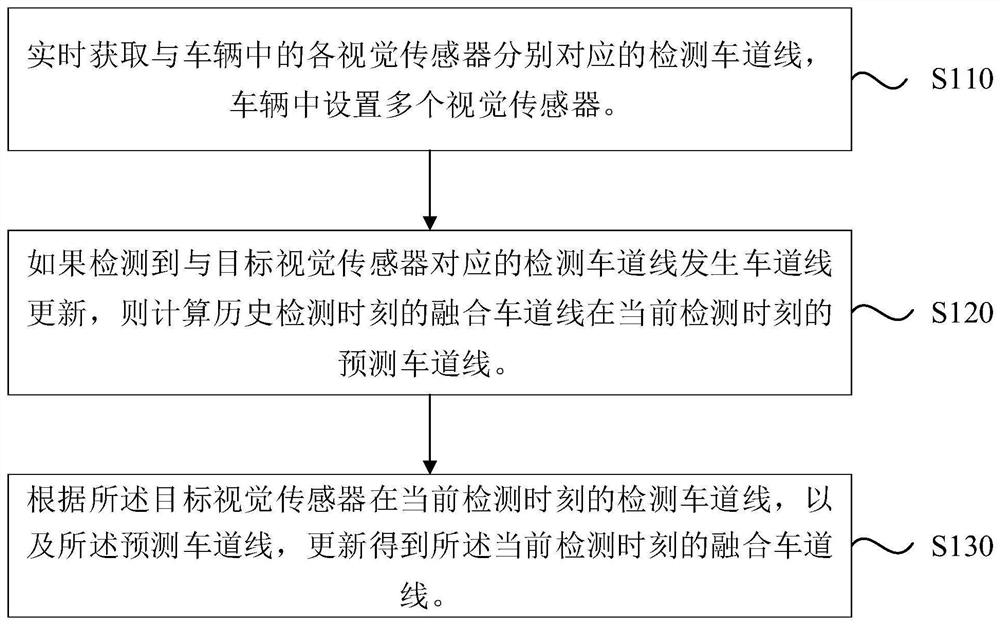

[0030] Figure 1a is a flowchart of a multi-source lane line fusion method provided by Embodiment 1 of the present invention. This embodiment applies to a vehicle equipped with multiple visual sensors that fuses the detected lane lines corresponding to each visual sensor. The method can be executed by a multi-source lane line fusion device, which can be implemented in software and/or hardware and can generally be integrated in the vehicle's on-board unit (the "vehicle-machine end"), typically that of a vehicle with an automatic driving function.

[0031] Correspondingly, the method specifically includes the following steps:

[0032] S110. Obtain, in real time, the detected lane lines corresponding to each visual sensor in the vehicle, where the vehicle is equipped with multiple visual sensors.

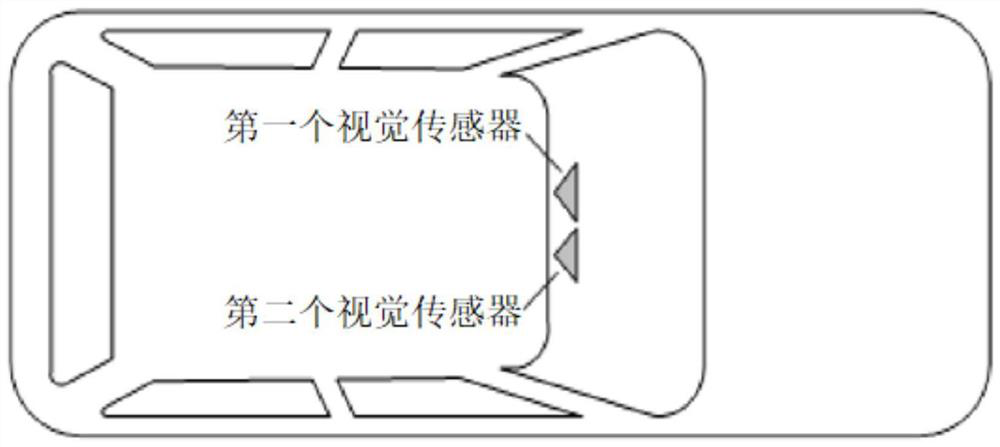

[0033] Here, the visual sensor may be able to capture thousands of pixels of light from an e...

Embodiment 2

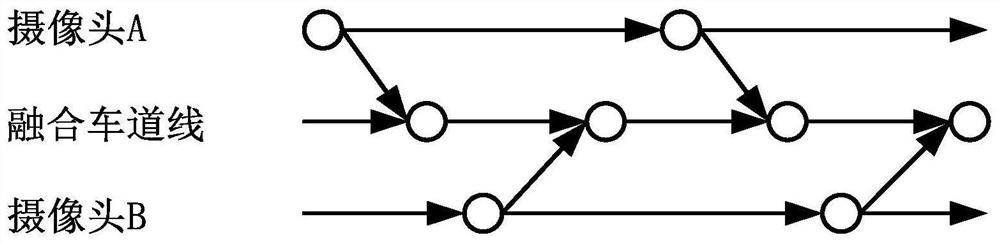

[0058] Figure 2a is a flowchart of another multi-source lane line fusion method provided by Embodiment 2 of the present invention. This embodiment is optimized on the basis of the above embodiments. In this embodiment, Kalman filtering is used to compute, from the fused lane line at the historical detection time, the predicted lane line at the current detection time, and then to update that prediction to obtain the fused lane line at the current detection time.
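The predict-then-update cycle described in this paragraph can be sketched as follows. This is a minimal illustration under stated assumptions, not the patent's implementation: it assumes a lane line is stored as cubic polynomial coefficients, that each coefficient is filtered independently (a diagonal covariance), and that prediction uses an identity motion model, whereas step S230 of the method refers to vehicle state information. The names `LaneLineState`, `predict`, `update`, `process_var` and `meas_var` are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class LaneLineState:
    """Fused lane line: polynomial coefficients plus a variance per coefficient."""
    coeffs: List[float]     # assumed model: y = c0 + c1*x + c2*x^2 + c3*x^3
    variances: List[float]  # diagonal of the state covariance (assumption)


def predict(state: LaneLineState, process_var: float) -> LaneLineState:
    """Prediction step with an identity motion model (an assumption).

    The coefficients are carried over unchanged and the uncertainty grows
    by the process noise variance.
    """
    return LaneLineState(
        coeffs=list(state.coeffs),
        variances=[p + process_var for p in state.variances],
    )


def update(pred: LaneLineState, measured: List[float], meas_var: float) -> LaneLineState:
    """Measurement update, treating each coefficient as observed directly (H = 1)."""
    coeffs, variances = [], []
    for x, p, z in zip(pred.coeffs, pred.variances, measured):
        gain = p / (p + meas_var)            # scalar Kalman gain
        coeffs.append(x + gain * (z - x))    # blend prediction with detection
        variances.append((1.0 - gain) * p)   # uncertainty shrinks after the update
    return LaneLineState(coeffs, variances)


# Fused lane line from the historical detection time (illustrative values).
fused_prev = LaneLineState([1.8, 0.0, 0.0, 0.0], [0.5] * 4)
predicted = predict(fused_prev, process_var=0.5)
# New detection from the target visual sensor at the current detection time.
fused_now = update(predicted, [2.0, 0.0, 0.0, 0.0], meas_var=1.0)
```

With these illustrative numbers the predicted variance and the measurement variance are equal, so the gain is 0.5 and the fused lateral offset lands halfway between the prediction and the detection.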

[0059] Correspondingly, the method specifically includes the following steps:

[0060] S210. Obtain, in real time, the detected lane lines corresponding to each visual sensor in the vehicle, where the vehicle is equipped with multiple visual sensors.

[0061] S220. Determine whether the detected lane line corresponding to the target visual sensor has been updated: if yes, execute S230; otherwise, return to S210.

[0062] S230. According to the vehicle state information at the current detect...
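The control flow of S210 and S220 (acquire, check for an update, then either fuse or go back to acquiring) can be sketched as below. This is only an illustration: the source does not say how an update is detected, so comparing per-sensor timestamps is an assumption, and `Detection`, `UpdateWatcher`, `step` and the `fuse` callback are hypothetical names. The fusion itself is left to the callback.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Detection:
    sensor_id: str
    timestamp: float     # detection time reported by the sensor (assumed field)
    coeffs: List[float]  # hypothetical lane line representation


class UpdateWatcher:
    """Remembers the last timestamp seen per sensor to spot fresh detections."""

    def __init__(self) -> None:
        self._last_seen: Dict[str, float] = {}

    def poll(self, latest: Dict[str, Detection]) -> Optional[Detection]:
        """Return one detection newer than the last seen from its sensor, else None."""
        for sensor_id, det in latest.items():
            if det.timestamp > self._last_seen.get(sensor_id, float("-inf")):
                self._last_seen[sensor_id] = det.timestamp
                return det  # this sensor plays the role of the target visual sensor
        return None


def step(watcher: UpdateWatcher,
         latest: Dict[str, Detection],
         fuse: Callable[[Detection], None]) -> bool:
    """One pass of S210/S220; calls fuse (S230 onward) only on an update."""
    det = watcher.poll(latest)
    if det is None:
        return False  # nothing updated: the caller returns to S210
    fuse(det)
    return True


watcher = UpdateWatcher()
fused_log: List[Detection] = []
frame = {
    "front": Detection("front", 0.10, [1.8, 0.0]),
    "rear": Detection("rear", 0.05, [1.7, 0.0]),
}
first = step(watcher, frame, fused_log.append)   # "front" is new
second = step(watcher, frame, fused_log.append)  # "rear" is new
third = step(watcher, frame, fused_log.append)   # nothing has changed
```

Handling one updated sensor per pass keeps each fusion step tied to a single detection time, which matches the one-target-sensor wording of S220.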

Embodiment 3

[0133] Figure 3a is a flowchart of another multi-source lane line fusion method provided by Embodiment 3 of the present invention. This embodiment is optimized on the basis of the above embodiments. In this embodiment, after the fused lane line at the current detection time is obtained by updating according to the predicted lane line and the lane line detected by the target visual sensor at the current detection time, the method further includes: according to the fused lane lines at the current detection time, screening out the target lane lines to be used as a driving decision reference during the vehicle's automatic driving.

[0134] Correspondingly, the method specifically includes the following steps:

[0135] S310. Obtain, in real time, the detected lane lines corresponding to each visual sensor in the vehicle, where the vehicle is equipped with multiple visual sensors.

[0136] S320. If it is detected that the detected lane line corresponding t...
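As an illustration of the screening idea in this embodiment, the sketch below picks the fused lane lines closest to the vehicle on each side as the target lane lines. The screening criterion is not given in the text available here, so this rule is purely an assumption, as are the function name `screen_ego_lane` and the convention that each fused lane line is summarized by its lateral offset in metres at the vehicle position, positive to the left.

```python
from typing import Dict, List, Optional


def screen_ego_lane(lateral_offsets: List[float]) -> Dict[str, Optional[float]]:
    """Pick the nearest fused lane line on each side of the vehicle.

    lateral_offsets: offset of each fused lane line at the vehicle position,
    positive to the left and negative to the right (assumed convention).
    """
    left = [c for c in lateral_offsets if c > 0]
    right = [c for c in lateral_offsets if c <= 0]
    return {
        "left": min(left, default=None),    # smallest positive offset
        "right": max(right, default=None),  # negative offset closest to zero
    }


# Four fused lane lines: two on each side of the vehicle (illustrative values).
targets = screen_ego_lane([5.3, 1.8, -1.7, -5.2])
# Only right-hand lines are available, so no left boundary is selected.
right_only = screen_ego_lane([-1.6, -5.0])
```

A real screening step would likely also weigh line quality or length; this sketch covers only the geometric selection.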


© 2025 PatSnap. All rights reserved.