Distributed system for large-scale deep learning inference

The invention relates to distributed systems and deep learning technology and is applied in the field of inference methods. It addresses the problems of poor system fault tolerance, a high degree of intrusion into the host computer's business, and long processing times.

Detailed Description of Embodiments

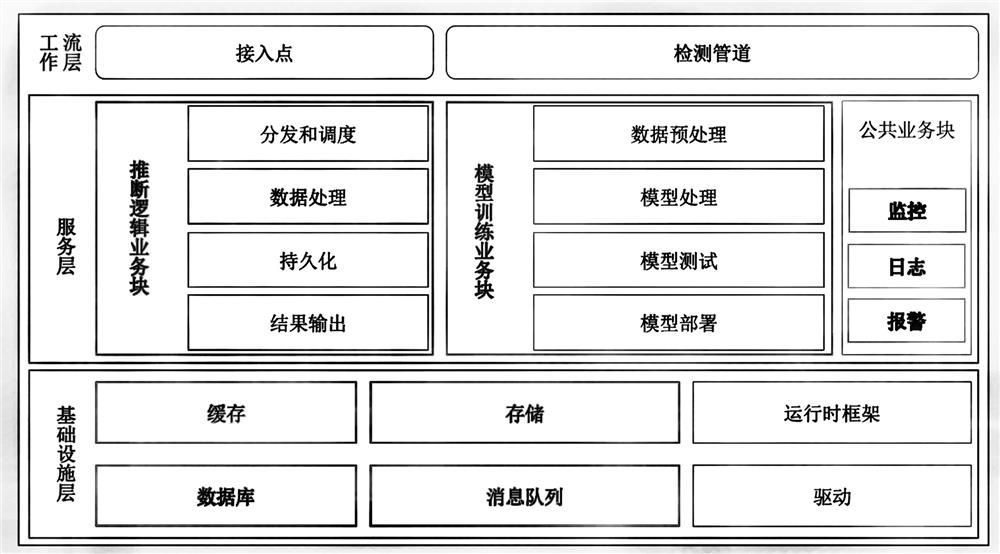

[0052] As shown in Figure 1 and Figure 2, in this embodiment the present invention comprises a service layer, a workflow layer, and an infrastructure layer;

[0053] Service layer: comprises an inference logic business block, a model training business block, and a public business block. The inference logic business block handles the business logic of system inference and judgment. The public business block handles the logic shared by the inference logic business block and the model training business block;
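The division of the service layer into an inference block, a training block, and a public block holding their shared logic can be illustrated with a minimal Python sketch. It assumes, purely for illustration, that the shared logic is input preprocessing (min-max scaling) and that "judgment" means comparing a model score to a threshold; the class names, methods, threshold, and toy model are all hypothetical and are not taken from the patent, which does not describe the blocks' internals.

```python
from typing import Callable, List, Tuple

Sample = List[float]


class PublicBusinessBlock:
    """Hypothetical common logic shared by the inference and training blocks."""

    def preprocess(self, sample: Sample) -> Sample:
        # Min-max scale features into [0, 1]; an assumed example of shared logic.
        lo, hi = min(sample), max(sample)
        if hi == lo:
            return [0.0] * len(sample)
        return [(x - lo) / (hi - lo) for x in sample]


class InferenceLogicBlock:
    """Hypothetical block holding inference and judgment logic."""

    def __init__(self, common: PublicBusinessBlock,
                 model: Callable[[Sample], float], threshold: float = 0.5):
        self.common = common
        self.model = model
        self.threshold = threshold  # assumed decision threshold

    def judge(self, sample: Sample) -> bool:
        # Run the model on preprocessed input and turn its score into a decision.
        score = self.model(self.common.preprocess(sample))
        return score >= self.threshold


class ModelTrainingBlock:
    """Hypothetical block that collects training data via the same shared logic."""

    def __init__(self, common: PublicBusinessBlock):
        self.common = common
        self.dataset: List[Tuple[Sample, int]] = []

    def add_sample(self, sample: Sample, label: int) -> None:
        # Reusing the shared preprocessing keeps training and inference inputs consistent.
        self.dataset.append((self.common.preprocess(sample), label))


def mean_model(xs: Sample) -> float:
    # Stand-in "model": the mean of the preprocessed features.
    return sum(xs) / len(xs)


common = PublicBusinessBlock()
inference = InferenceLogicBlock(common, mean_model)
training = ModelTrainingBlock(common)
print(inference.judge([2.0, 4.0, 10.0]))  # prints False (mean of [0.0, 0.25, 1.0] < 0.5)
training.add_sample([2.0, 4.0, 10.0], label=1)
print(training.dataset)  # prints [([0.0, 0.25, 1.0], 1)]
```

Routing both blocks through one shared object is one way to realize the "common logic" idea: a change to preprocessing applies to training and inference at once, so the two cannot drift apart.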

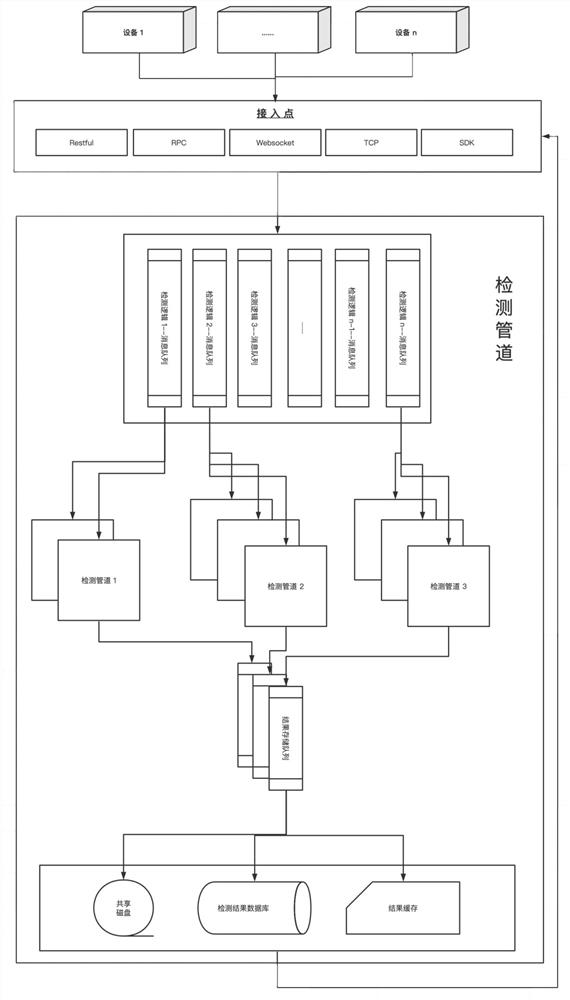

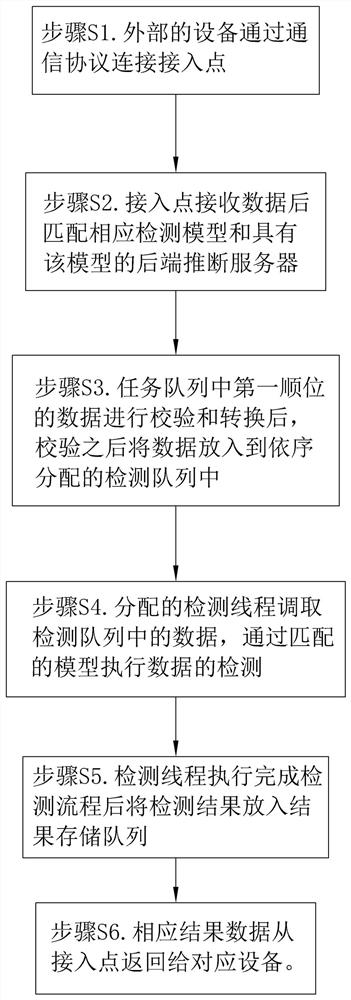

[0054] Workflow layer: comprises several access points and several detection pipelines. The access points provide the access ports for the access protocols required by the equipment, and these access ports are network ports. The detection pipelines comprise several back-end inference servers; a back-end inference server is a computing device, each back-end inference server is assigned one or more detection threads, and the back-end inference server executes the incoming dat...
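The relationship between a detection pipeline, its back-end inference servers, and their detection threads can be sketched in Python as follows. This is a minimal in-process illustration under stated assumptions: each back-end inference server (a separate computing device in the patent) is simulated by an object whose detection threads run locally; work is distributed through a shared FIFO queue, which is an assumed scheme since paragraph [0054] is truncated before describing how incoming data is executed; a hypothetical `submit()` method stands in for an access point's network port; and `toy_detect` replaces a real model. All class, method, and server names are hypothetical.

```python
import queue
import threading
from typing import Any, Callable, List, Tuple


class BackendInferenceServer:
    """Hypothetical stand-in for one back-end inference server."""

    def __init__(self, name: str, num_threads: int, detect: Callable[[Any], Any]):
        self.name = name
        self.num_threads = num_threads  # one or more detection threads per server
        self.detect = detect            # assumed per-item detection function


class DetectionPipeline:
    """Hypothetical pipeline whose detection threads pull from one shared queue."""

    _STOP = object()  # sentinel telling a detection thread to exit

    def __init__(self, servers: List[BackendInferenceServer]):
        self.servers = servers
        self.inbox: "queue.Queue[Any]" = queue.Queue()
        self.results: List[Tuple[str, Any]] = []
        self._lock = threading.Lock()
        self._threads: List[threading.Thread] = []

    def _worker(self, server: BackendInferenceServer) -> None:
        # Detection thread loop: take an item, run the server's detector, record it.
        while True:
            item = self.inbox.get()
            if item is self._STOP:
                break
            out = server.detect(item)
            with self._lock:
                self.results.append((server.name, out))

    def start(self) -> None:
        # Spawn the configured number of detection threads for every server.
        for server in self.servers:
            for i in range(server.num_threads):
                t = threading.Thread(target=self._worker, args=(server,),
                                     name=f"{server.name}-det{i}", daemon=True)
                t.start()
                self._threads.append(t)

    def submit(self, item: Any) -> None:
        # Stand-in for data arriving through an access point's network port.
        self.inbox.put(item)

    def stop(self) -> None:
        # Sentinels queue behind all submitted items, so every item is processed
        # before the threads exit; one sentinel per thread, then wait for all.
        for _ in self._threads:
            self.inbox.put(self._STOP)
        for t in self._threads:
            t.join()


def toy_detect(x: int) -> bool:
    return x % 2 == 0  # stand-in "detection": flag even frame ids


pipeline = DetectionPipeline([
    BackendInferenceServer("server-a", num_threads=2, detect=toy_detect),
    BackendInferenceServer("server-b", num_threads=1, detect=toy_detect),
])
pipeline.start()
for frame_id in range(6):
    pipeline.submit(frame_id)
pipeline.stop()
print(sorted(out for _, out in pipeline.results))  # prints [False, False, False, True, True, True]
```

Which server handles a given item depends on thread scheduling, so only the multiset of results is deterministic. A shared queue lets a server with more detection threads absorb proportionally more work, which is one plausible reading of assigning "one or more detection threads" per server.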
