Cloud computing application memory management method based on real-time content prediction and historical resource occupation

A memory management technology based on historical resource occupancy, applied in the field of cloud computing application memory management

Detailed Description of the Embodiments

[0022] The present invention will be described in detail below in conjunction with the accompanying drawings:

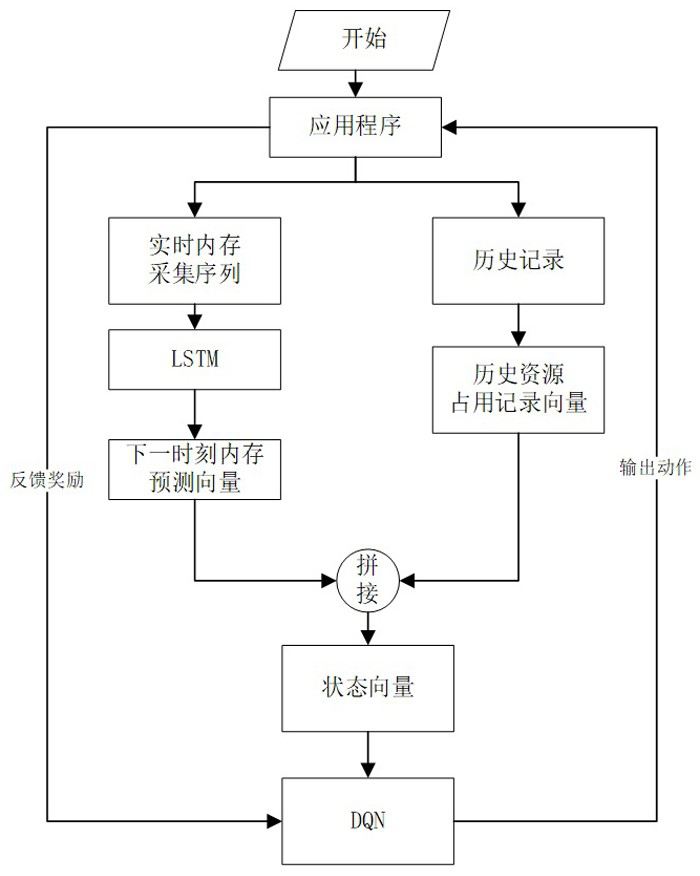

[0023] As shown in Figure 1, the present invention proposes a cloud computing application memory management method based on real-time content prediction and historical resource occupancy, comprising the following steps:

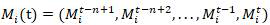

[0024] a) For an application in a given cloud computing environment, set checkpoints at a fixed time interval T and log the application's memory usage at each of the past n checkpoints. The memory usage record of the application at the t-th checkpoint (hereinafter referred to as time t) is:

[0025]

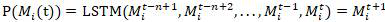
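Step a) amounts to keeping a bounded history of memory usage per application. The following is a minimal sketch, assuming memory usage is read through a caller-supplied function (the hypothetical `read_memory_mb`) and that only the most recent n records are retained. The class name `CheckpointLog` is illustrative and not taken from the patent, and the scheduling that fires a checkpoint every T seconds is left out.

```python
from collections import deque
from typing import Callable, List


class CheckpointLog:
    """Hypothetical helper: memory usage of one application over its last n checkpoints."""

    def __init__(self, n: int, interval_t: float) -> None:
        if n <= 0:
            raise ValueError("n must be positive")
        self.n = n
        self.interval_t = interval_t  # checkpoint interval T (seconds is an assumed unit)
        # A bounded deque silently discards the oldest record once n records are stored.
        self._records: deque = deque(maxlen=n)

    def checkpoint(self, read_memory_mb: Callable[[], float]) -> None:
        """Take one checkpoint; a scheduler would call this every interval_t seconds."""
        self._records.append(float(read_memory_mb()))

    def history(self) -> List[float]:
        """Records ordered oldest to newest; the last entry is the record at time t."""
        return list(self._records)
```

For example, with n = 3 and five checkpoints, only the last three readings survive:

```python
samples = iter([512.0, 530.5, 548.0, 601.2, 590.0])
log = CheckpointLog(n=3, interval_t=60.0)
for _ in range(5):
    log.checkpoint(lambda: next(samples))
print(log.history())  # [548.0, 601.2, 590.0]
```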

[0026] b) Using a long short-term memory (LSTM) network (a known algorithm), take the application's memory usage record at time t as the input and output the predicted memory usage of the application at time t+1:

[0027]
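To make the data flow of step b) concrete, the sketch below runs a single-layer LSTM forward over the recorded history. Each record x_t updates the hidden state h and cell state c through the standard input, forget, candidate and output gates, and a linear read-out of the final hidden state gives the prediction for t+1. The patent only states that a known LSTM is used; the class name `TinyLSTM`, the hidden size, the random seed, and the normalization of usage by a memory capacity are all assumptions, and the weights are random and untrained.

```python
import math
import random
from typing import List, Tuple


def _sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


class TinyLSTM:
    """Hypothetical single-layer LSTM with scalar input and scalar output (untrained)."""

    GATES = ("i", "f", "g", "o")  # input, forget, candidate, output

    def __init__(self, hidden: int = 4, seed: int = 0) -> None:
        rng = random.Random(seed)

        def rand() -> float:
            return rng.uniform(-0.5, 0.5)

        self.hidden = hidden
        # Per gate: input weights W[k], recurrent weights U[k][j], bias b[k]
        self.W = {g: [rand() for _ in range(hidden)] for g in self.GATES}
        self.U = {g: [[rand() for _ in range(hidden)] for _ in range(hidden)] for g in self.GATES}
        self.b = {g: [0.0] * hidden for g in self.GATES}
        # Linear read-out from the hidden state to the predicted (normalized) usage
        self.w_out = [rand() for _ in range(hidden)]
        self.b_out = 0.0

    def step(self, x: float, h: List[float], c: List[float]) -> Tuple[List[float], List[float]]:
        """One time step: consume x_t and the previous (h, c), return the new (h, c)."""
        pre = {
            g: [self.W[g][k] * x
                + sum(self.U[g][k][j] * h[j] for j in range(self.hidden))
                + self.b[g][k]
                for k in range(self.hidden)]
            for g in self.GATES
        }
        i = [_sigmoid(z) for z in pre["i"]]
        f = [_sigmoid(z) for z in pre["f"]]
        cand = [math.tanh(z) for z in pre["g"]]
        o = [_sigmoid(z) for z in pre["o"]]
        c_new = [f[k] * c[k] + i[k] * cand[k] for k in range(self.hidden)]
        h_new = [o[k] * math.tanh(c_new[k]) for k in range(self.hidden)]
        return h_new, c_new

    def predict_next(self, usage: List[float]) -> float:
        """Feed normalized records up to time t; return the normalized prediction for t+1."""
        h = [0.0] * self.hidden
        c = [0.0] * self.hidden
        for x in usage:
            h, c = self.step(x, h, c)
        return sum(w * hk for w, hk in zip(self.w_out, h)) + self.b_out
```

A call such as `TinyLSTM(hidden=4, seed=42).predict_next([m / 1024.0 for m in history]) * 1024.0` maps the result back to megabytes, but the number is meaningless until the weights are trained on logged history (for example with a framework LSTM such as PyTorch's `torch.nn.LSTM` and a mean-squared-error loss).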

[0028] In each subsequent iterative operation, at a given time t, based on the memory usage records of the previous n chec...
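Assuming that each iteration works from a window of the previous n checkpoint records, the history can be turned into (window, next record) pairs, which serve both as training samples for the predictor and as a way to score it. The sketch below is illustrative only: `make_windows`, `rolling_mae`, and the pluggable `predict` callable are hypothetical names, and any predictor (such as the LSTM of step b) can be passed in.

```python
from statistics import fmean
from typing import Callable, List, Sequence, Tuple

# A predictor maps the previous n records to an estimate of the next one.
Predictor = Callable[[Sequence[float]], float]


def make_windows(records: Sequence[float], n: int) -> List[Tuple[List[float], float]]:
    """Pair every run of n consecutive records with the record that follows it."""
    if n <= 0:
        raise ValueError("n must be positive")
    return [(list(records[t - n:t]), records[t]) for t in range(n, len(records))]


def rolling_mae(records: Sequence[float], n: int, predict: Predictor) -> float:
    """Mean absolute error when each record is predicted from the previous n records."""
    pairs = make_windows(records, n)
    if not pairs:
        raise ValueError("need more than n records")
    return fmean(abs(predict(window) - actual) for window, actual in pairs)
```

For instance, `make_windows([1, 2, 3, 4, 5], 3)` yields `[([1, 2, 3], 4), ([2, 3, 4], 5)]`, and scoring a naive "repeat the last value" baseline on a steadily growing series `[100.0, 110.0, 120.0, 130.0, 140.0]` with n = 2 gives an error of 10.0 per step.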
