Transformer model processing method, readable storage medium and equipment

A model processing method and storage medium technology, applied in voice analysis, voice recognition, instruments, and related fields, that addresses the large parameter count of Transformer models and achieves a reduction in the number of parameters.

Examples

Embodiment 1

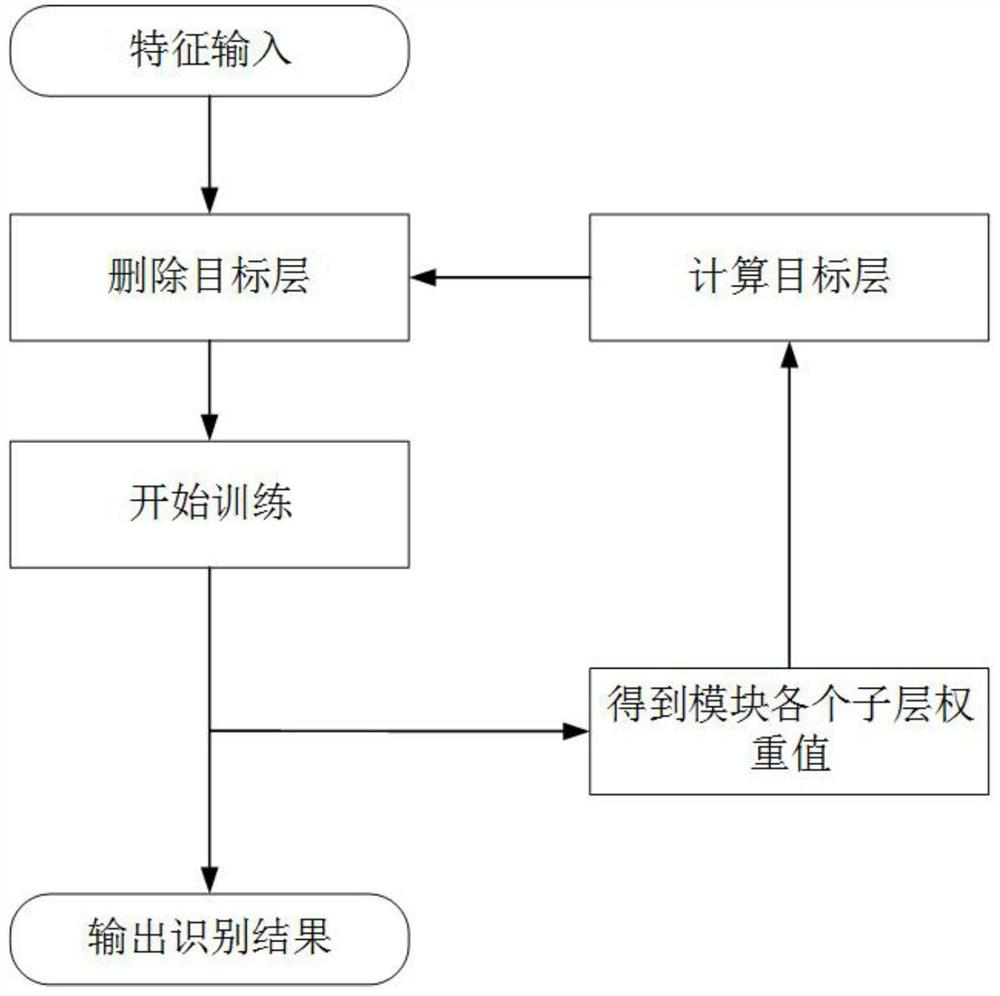

[0029] This embodiment provides a Transformer model processing method, as shown in Figure 1, including the following steps:

[0030] S1. During training, a target layer is computed from the sub-layer weights of the Transformer model, and that target layer is either deleted or retained in the next round of training.

[0031] Further, step S1 includes:

[0032] S11. For the two sub-layers of the Encoder structure and the last two sub-layers of the Decoder structure of the Transformer model, matrix operations are performed on the weights associated with the sub-layer outputs;

[0033] The weight associated with a sub-layer output is the weight nearest to that output, $W_i$. Let $u_1$ and $u_2$ be matrices whose entries are all 1, and perform the matrix operation: $n_i = u_1 W_i u_2$.

[0034] S12. Select the minimum value among the results of the matrix operation, take the layer where the minimum value is located as the target layer, obtain a separate target layer for each sub-layer, and delete the target layer in th...
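Steps S11 and S12 can be illustrated with a minimal sketch. It is not the patent's implementation: the shapes of the all-ones matrices are not stated in the source, so the sketch assumes a 1 x p row for u_1 and a q x 1 column for u_2, under which n_i is simply the sum of all entries of W_i. The helper names (`matmul`, `layer_score`, `select_target_layer`) and the toy weights are hypothetical, and the raw (not absolute) entries are summed because the source does not say otherwise.

```python
from typing import Dict, List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product of a (r x k) and b (k x c)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def layer_score(weight: Matrix) -> float:
    """Compute n_i = u_1 W_i u_2 with all-ones u_1 and u_2."""
    p, q = len(weight), len(weight[0])
    u1 = [[1.0] * p]                # assumed shape: 1 x p row of ones
    u2 = [[1.0] for _ in range(q)]  # assumed shape: q x 1 column of ones
    return matmul(matmul(u1, weight), u2)[0][0]


def select_target_layer(weights_by_layer: Dict[int, Matrix]) -> int:
    """Return the index of the layer whose score n_i is smallest (step S12)."""
    scores = {i: layer_score(w) for i, w in weights_by_layer.items()}
    return min(scores, key=scores.get)


# Hypothetical toy weights: one sub-layer type, three layers.
toy_weights = {
    0: [[0.5, 0.2], [0.1, 0.4]],
    1: [[0.1, 0.0], [0.05, 0.05]],
    2: [[0.9, 0.3], [0.2, 0.6]],
}
target = select_target_layer(toy_weights)
```

In this toy case the layer with the smallest entry sum is selected as the target layer. Per S12, the same selection would be repeated separately for each sub-layer type, so different sub-layers may yield different target layers.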

Embodiment 2

[0043] This embodiment provides a computer-readable storage medium in which at least one instruction, at least one program segment, a code set, or an instruction set is stored. The instruction, program segment, code set, or instruction set is loaded and executed by a processor to implement the Transformer model processing method of Embodiment 1.

[0044] Optionally, the computer-readable storage medium may include a read-only memory (ROM, Read Only Memory), a random access memory (RAM, Random Access Memory), a solid-state drive (SSD, Solid State Drives), an optical disc, and the like. The random access memory may include a resistive random access memory (ReRAM, Resistance Random Access Memory) and a dynamic random access memory (DRAM, Dynamic Random Access Memory).

Embodiment 3

[0046] This embodiment provides a device, which may be a computer device or a mobile terminal device such as a mobile phone or a tablet computer. The device includes a processor and a memory; program code is stored in the memory, and the processor executes the program code to carry out the Transformer model processing method of Embodiment 1.

[0047] Those skilled in the art should be aware that, in one or more of the foregoing examples, the functions described in the embodiments of the present application may be implemented in hardware, software, firmware, or any combination thereof. When implemented in software, the functions may be stored on, or transmitted as, one or more instructions or code on a computer-readable medium. Computer-readable media include both computer storage media and communication media, the latter including any medium that facilitates transfer of a computer program from one place to another. A storage medium may be any available medium that can be accessed by a ge...
