Method for quantitatively improving model precision through low-bit mixing precision

A technology of model accuracy and precision, applied in the field of image processing, can solve the problems of difficult to guarantee the structural accuracy of mobilenet models, and difficult to achieve full-precision accuracy.

- Summary

- Abstract

- Description

- Claims

- Application Information

AI Technical Summary

Problems solved by technology

Method used

Image

Examples

Embodiment Construction

[0053] In order to understand the technical content and advantages of the present invention more clearly, the present invention will now be further described in detail with reference to the accompanying drawings.

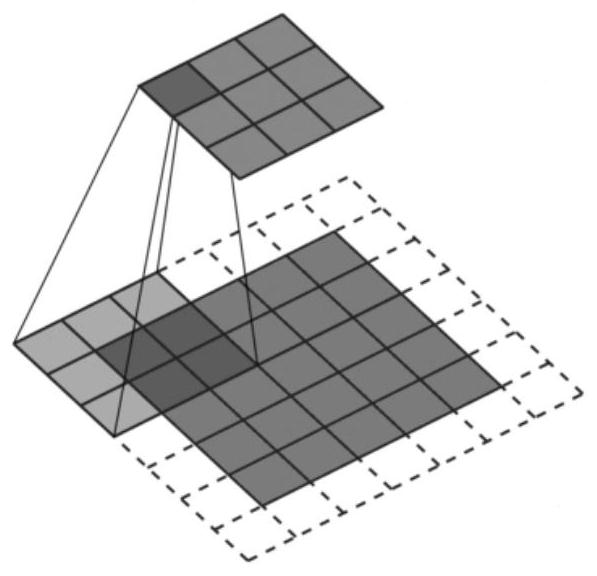

[0054] Through the calculation and analysis of the model channel, this method ensures that the int16-bit condition of the model does not exceed the boundary, and maximizes the quantization bit width and precision of each layer; Note: The model int16-bit value range is (-32768 to 32767) is In order to speed up the model inference during inference on the inference side, the value is limited within the range of int16; if the value range exceeds, the result of the model inference will be abnormal.

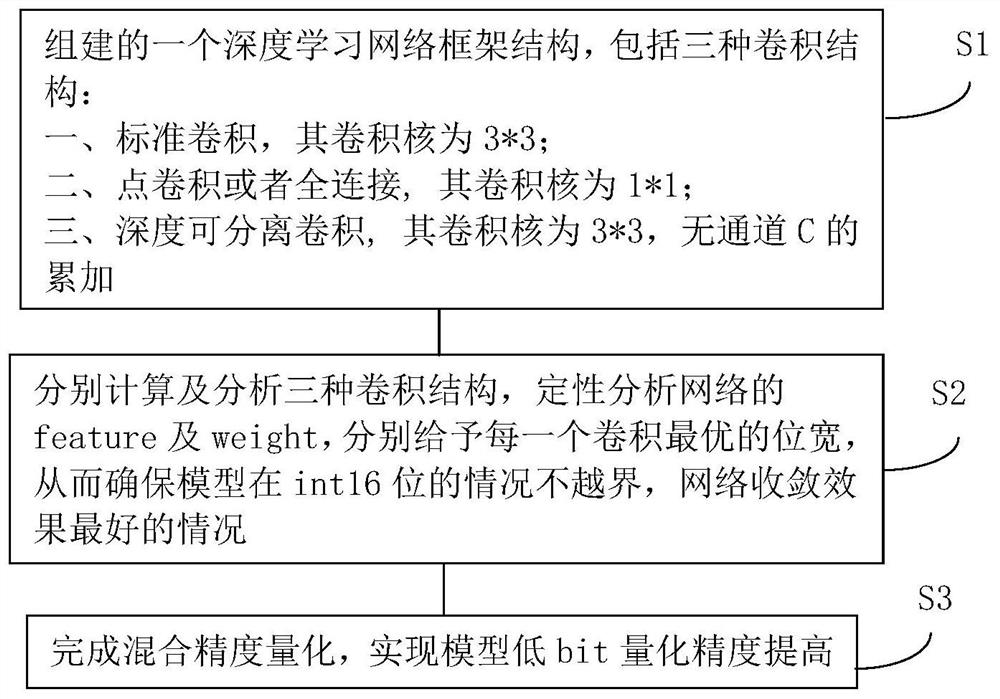

[0055] like figure 2 As shown, the present invention relates to a low-bit mixed-precision quantization method for improving model accuracy, and the method further includes the following steps:

[0056] S1, a deep learning network framework structure is formed, including th...

PUM

Login to View More

Login to View More Abstract

Description

Claims

Application Information

Login to View More

Login to View More - R&D

- Intellectual Property

- Life Sciences

- Materials

- Tech Scout

- Unparalleled Data Quality

- Higher Quality Content

- 60% Fewer Hallucinations

Browse by: Latest US Patents, China's latest patents, Technical Efficacy Thesaurus, Application Domain, Technology Topic, Popular Technical Reports.

© 2025 PatSnap. All rights reserved.Legal|Privacy policy|Modern Slavery Act Transparency Statement|Sitemap|About US| Contact US: help@patsnap.com