36 results about "Mixed precision" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

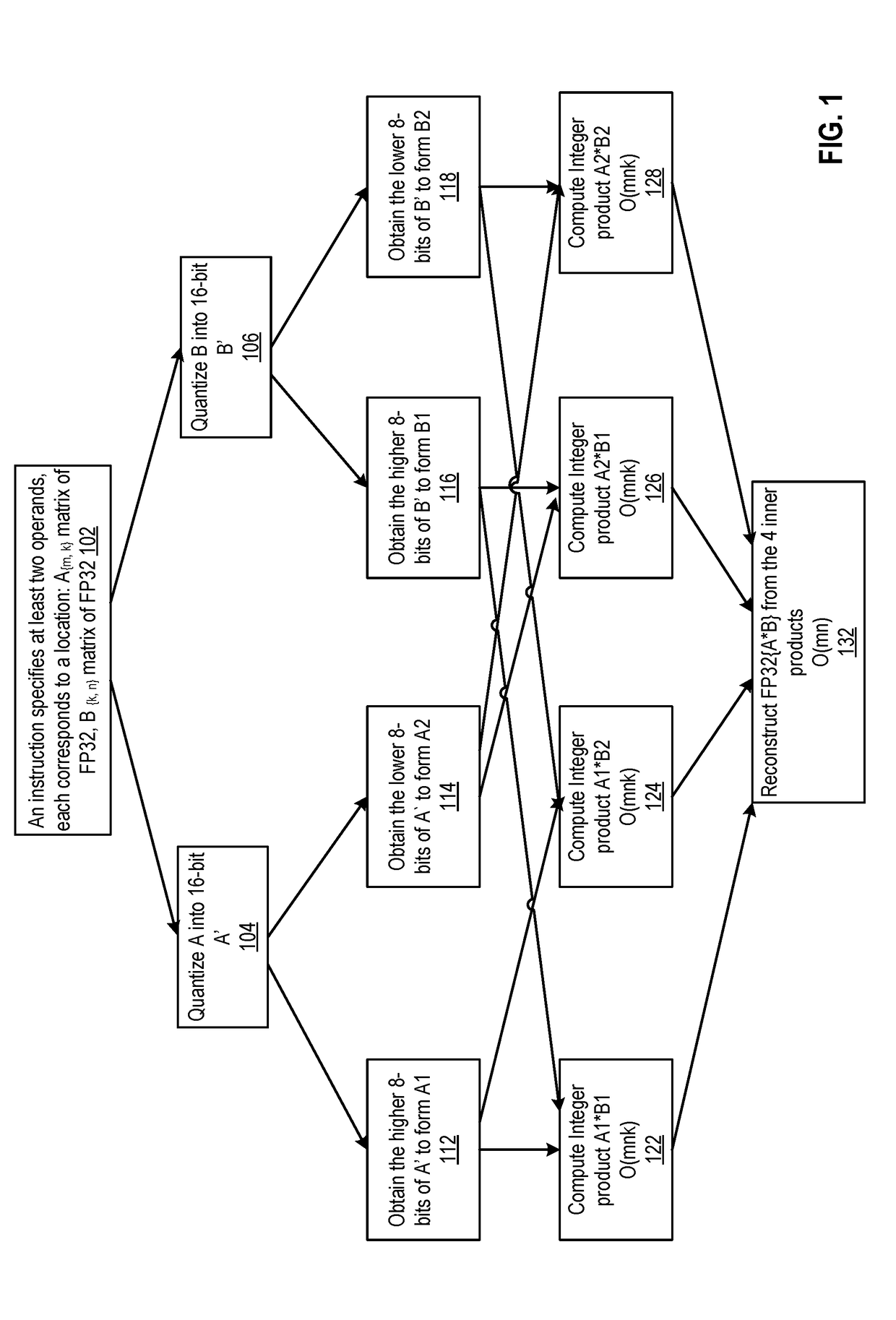

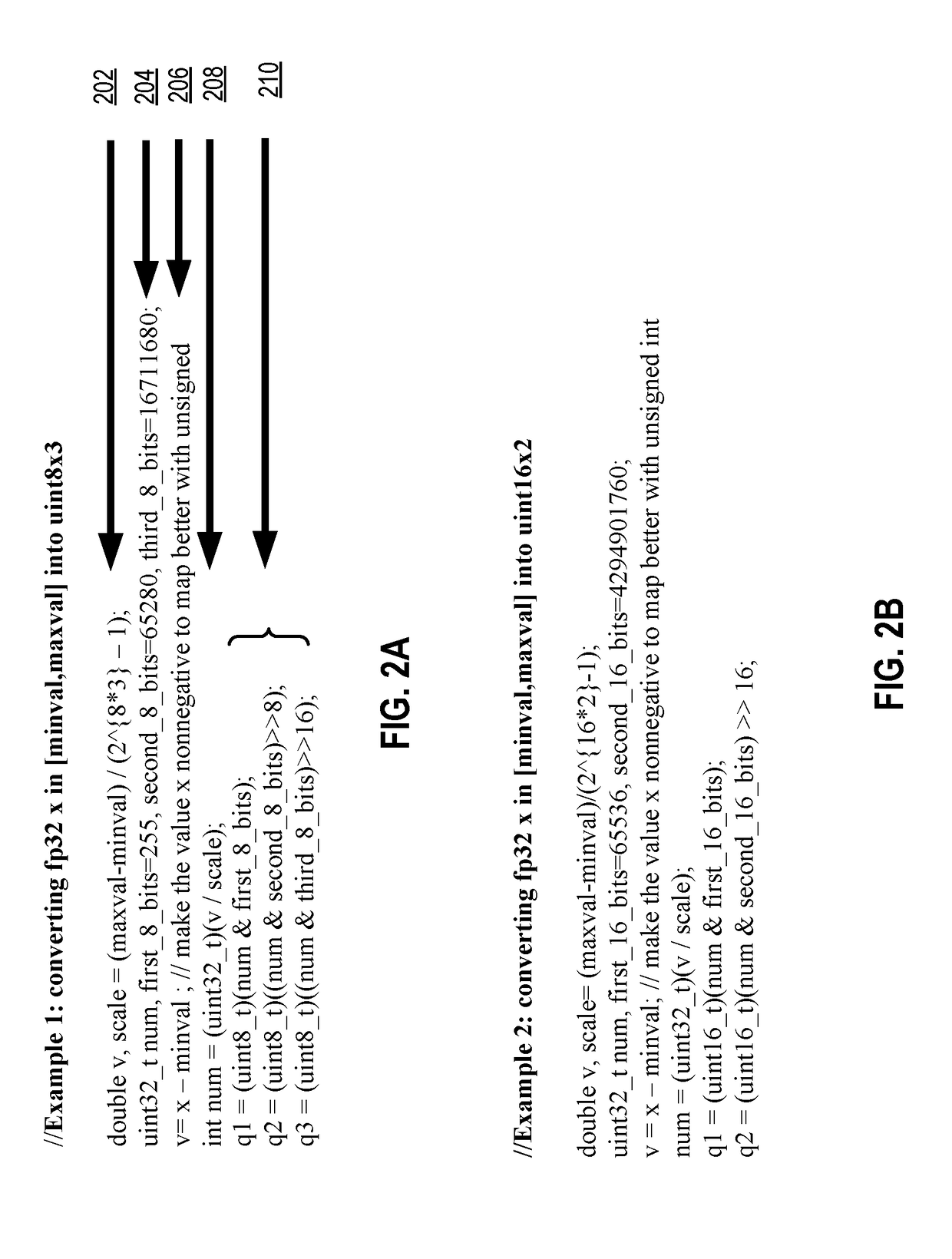

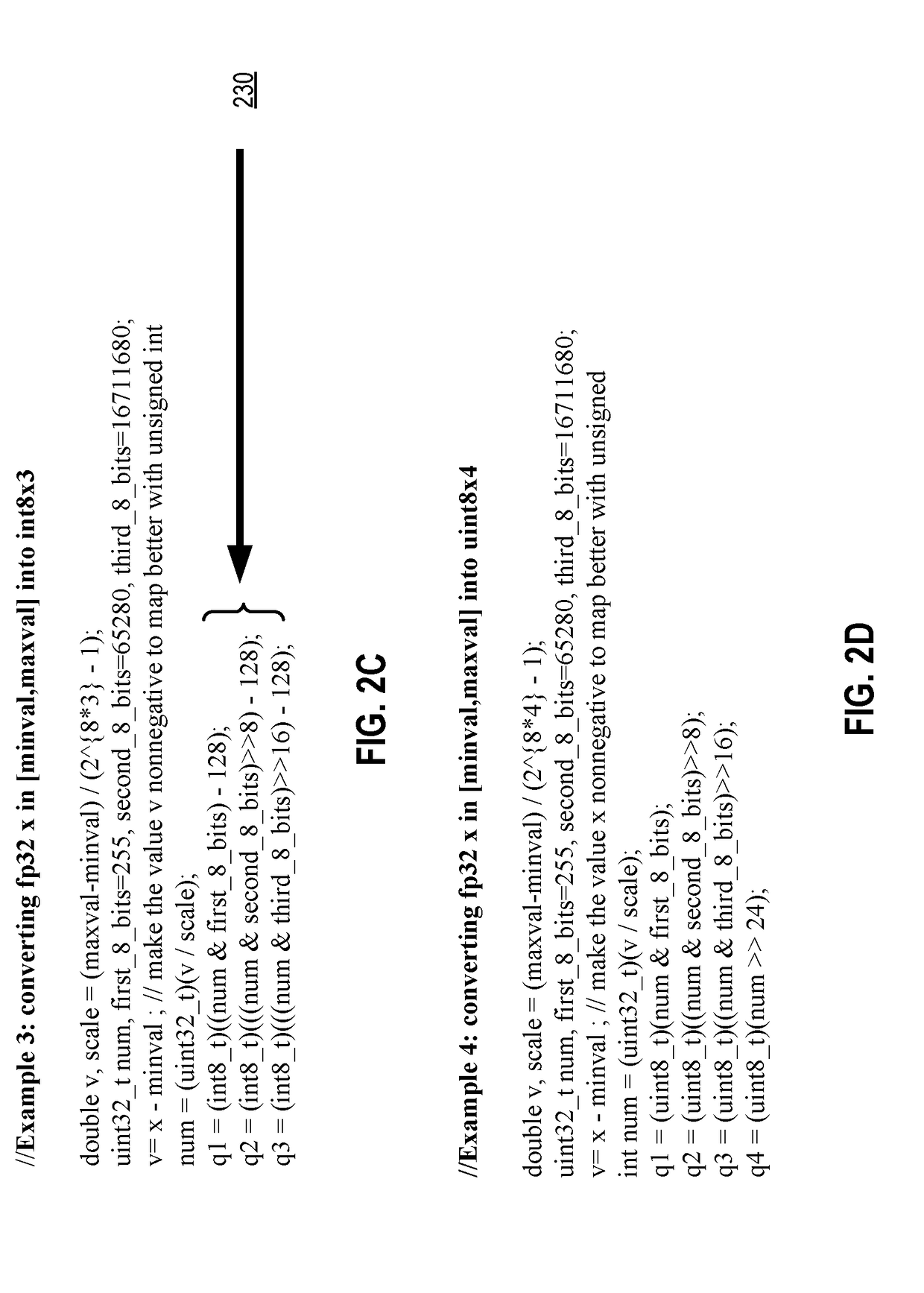

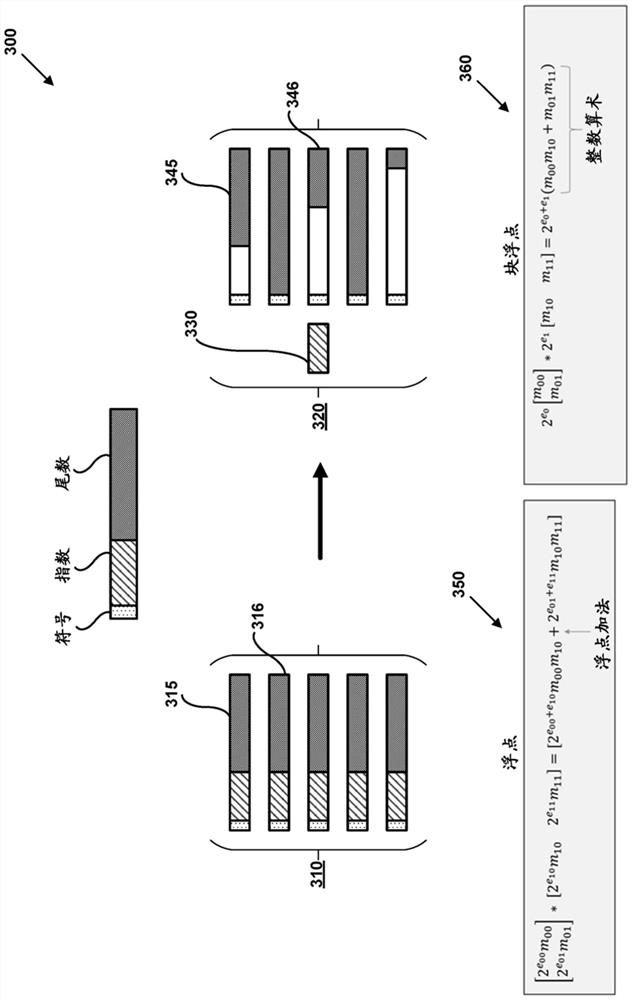

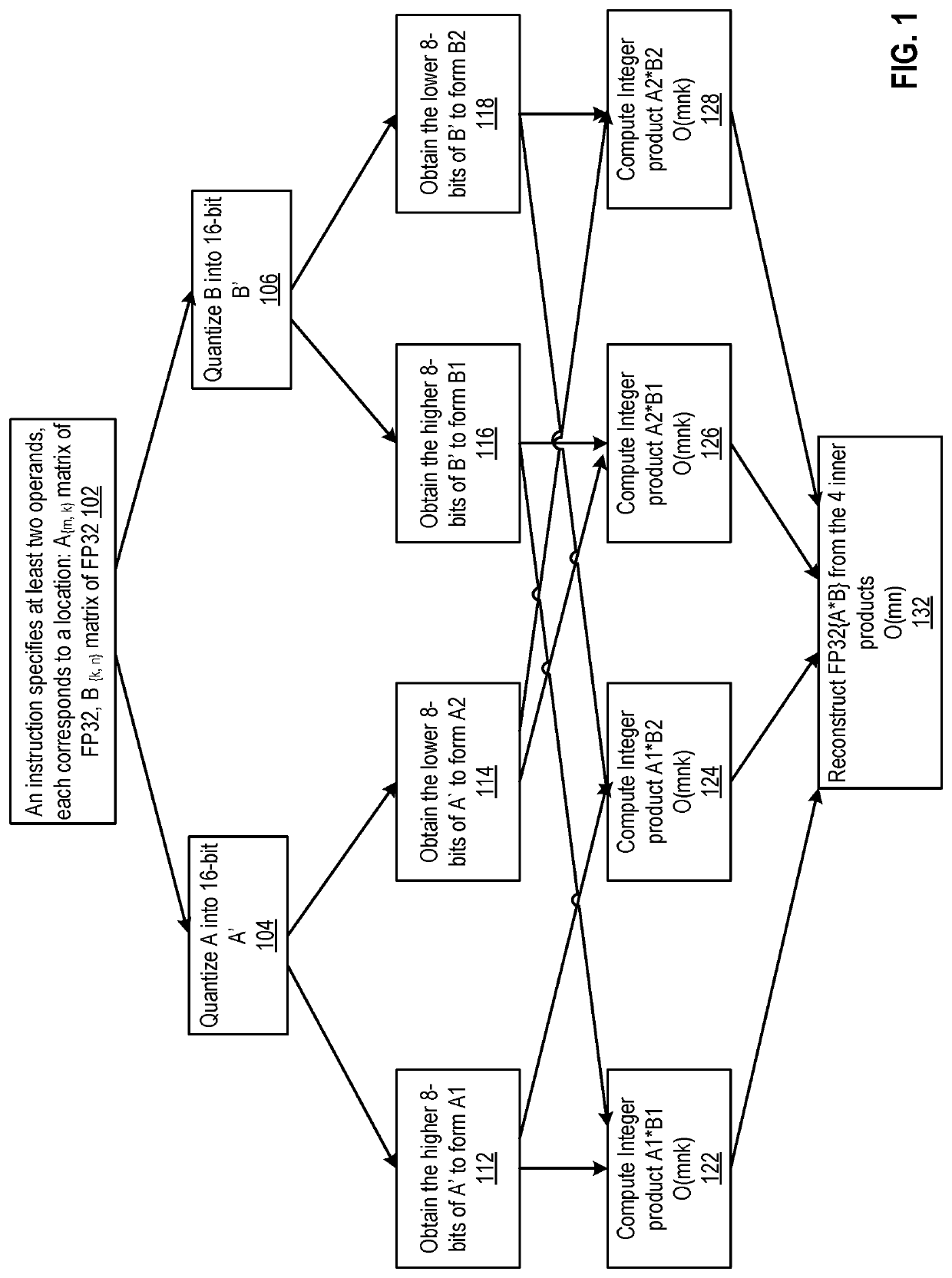

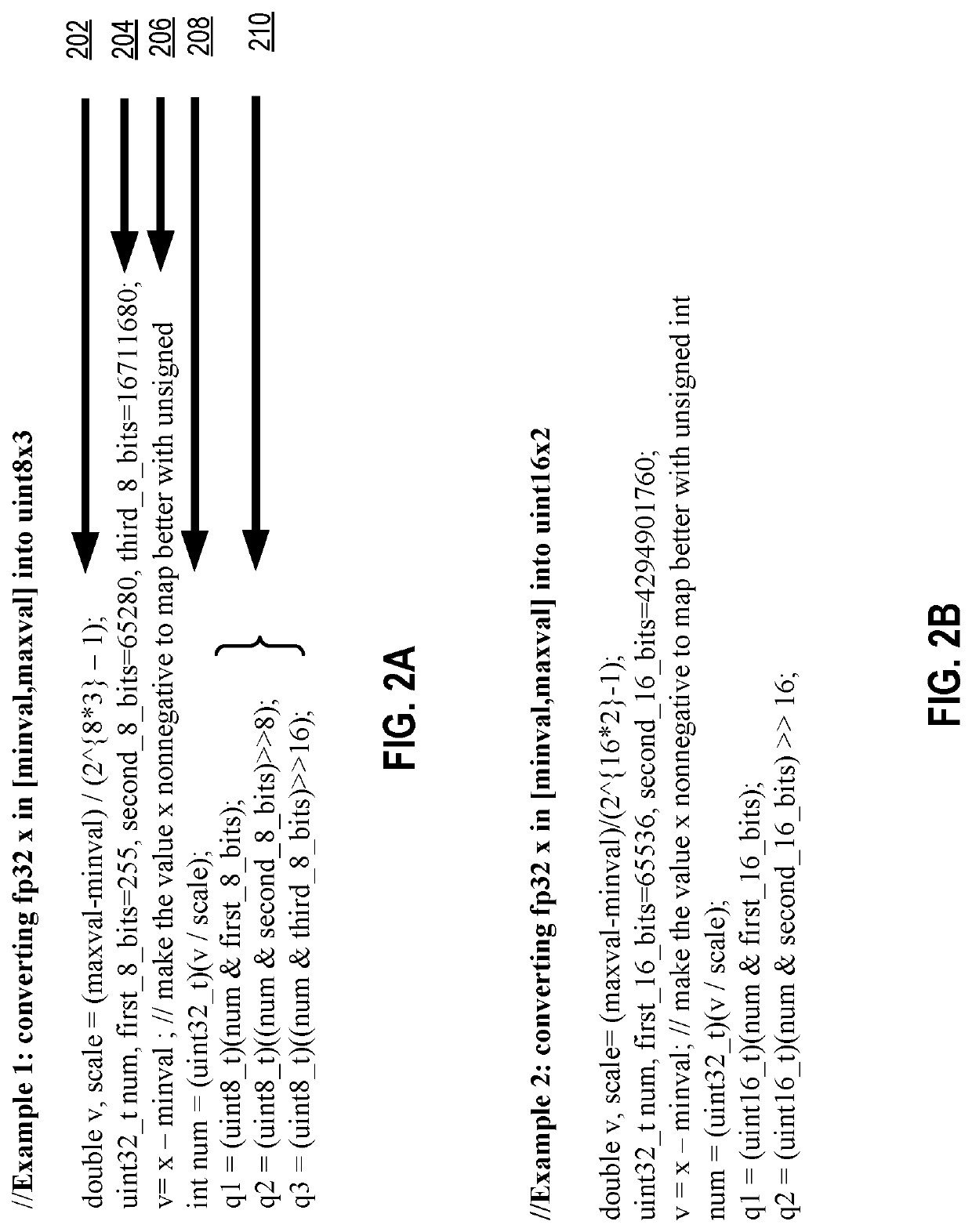

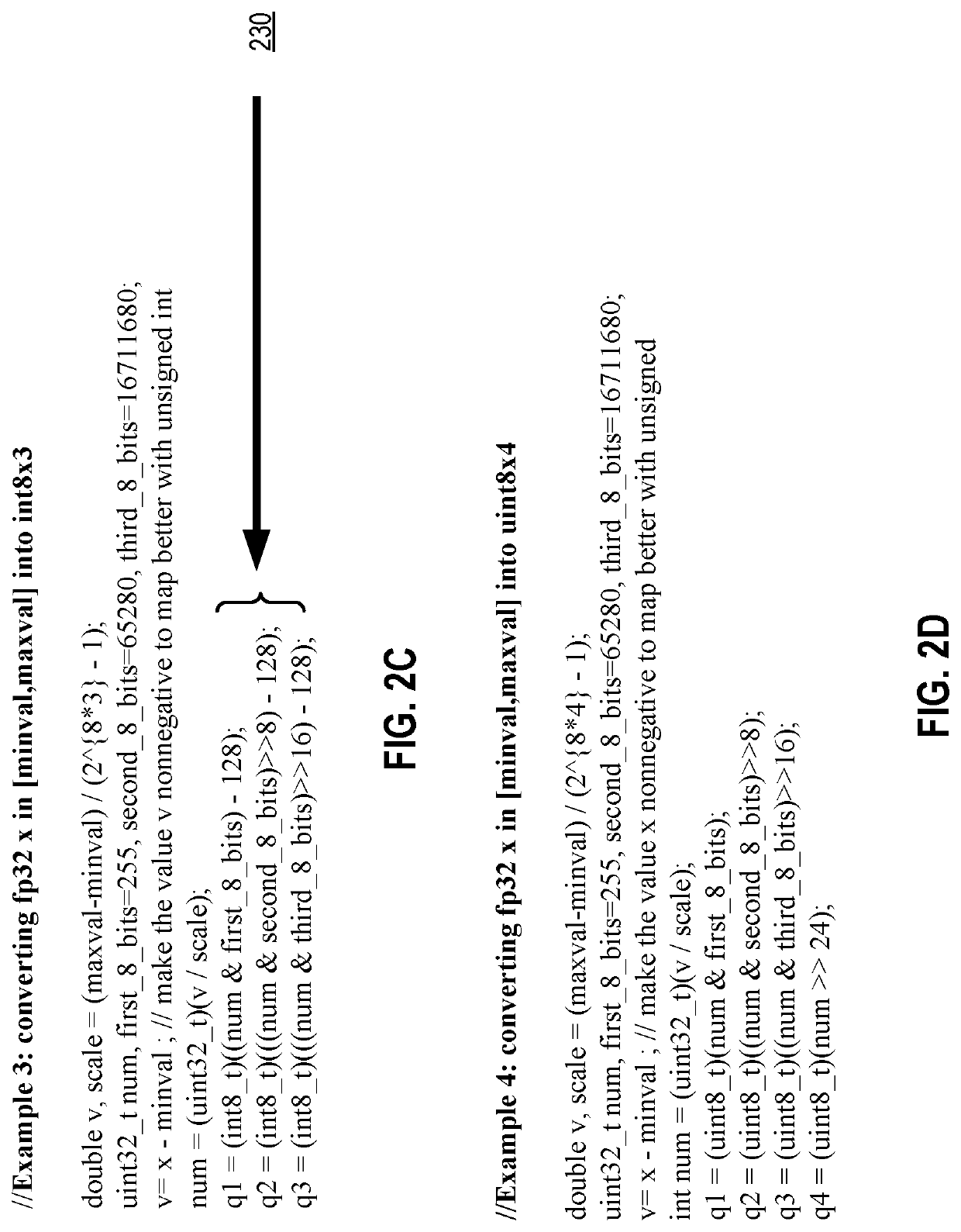

Computer processor for higher precision computations using a mixed-precision decomposition of operations

Embodiments detailed herein relate to arithmetic operations on floating-point values. An exemplary processor includes decoding circuitry to decode an instruction that specifies the locations of a plurality of operands whose values are in a floating-point format. The exemplary processor further includes execution circuitry to execute the decoded instruction, where the execution includes: converting the value of each operand into a plurality of lower-precision values, with an exponent stored for each operand; performing arithmetic operations among the lower-precision values converted from the operands' values; and generating a floating-point value by converting the result of those arithmetic operations into the floating-point format, then storing that floating-point value.

Owner:INTEL CORP
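
As an illustration of the decomposition idea, the Python sketch below splits each float32-representable operand into a sign, one stored exponent and three 8-bit chunks, multiplies the chunks pairwise as small integers, and converts the accumulated result back to floating point. The chunk width, the chunk count and all function names are assumptions chosen for illustration; the patent does not specify them, and real hardware would use its own lower-precision format rather than Python integers.

```python
import math
import struct

CHUNK_BITS = 8  # assumed width of each lower-precision piece
N_CHUNKS = 3    # 3 x 8 = 24 bits, enough for a float32 significand


def to_float32(x: float) -> float:
    """Round x to IEEE 754 single precision."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def decompose(x: float) -> tuple[int, int, list[int]]:
    """Return (sign, exponent, chunks) with |x| == sum(c << 8*i) * 2**(exponent - 24).

    Exact for float32-representable x; lower bits of wider inputs are truncated.
    """
    if x == 0.0:
        return 1, 0, [0] * N_CHUNKS
    _, exponent = math.frexp(x)  # the one exponent stored per operand
    width = CHUNK_BITS * N_CHUNKS
    significand = int(abs(x) * 2.0 ** (width - exponent))  # integer below 2**24
    mask = (1 << CHUNK_BITS) - 1
    chunks = [(significand >> (CHUNK_BITS * i)) & mask for i in range(N_CHUNKS)]
    return (-1 if x < 0 else 1), exponent, chunks  # chunks: least significant first


def decomposed_multiply(a: float, b: float) -> float:
    """Multiply using only 8x8-bit chunk products, then rebuild a float."""
    sa, ea, ca = decompose(a)
    sb, eb, cb = decompose(b)
    total = 0
    for i, x in enumerate(ca):
        for j, y in enumerate(cb):
            total += (x * y) << (CHUNK_BITS * (i + j))  # lower-precision partial products
    width = CHUNK_BITS * N_CHUNKS
    return sa * sb * math.ldexp(total, ea + eb - 2 * width)
```

For float32 inputs every chunk product is exact and the 48-bit total fits in a double, so the result equals the exact product; `to_float32` would then round it to the single-precision output format.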

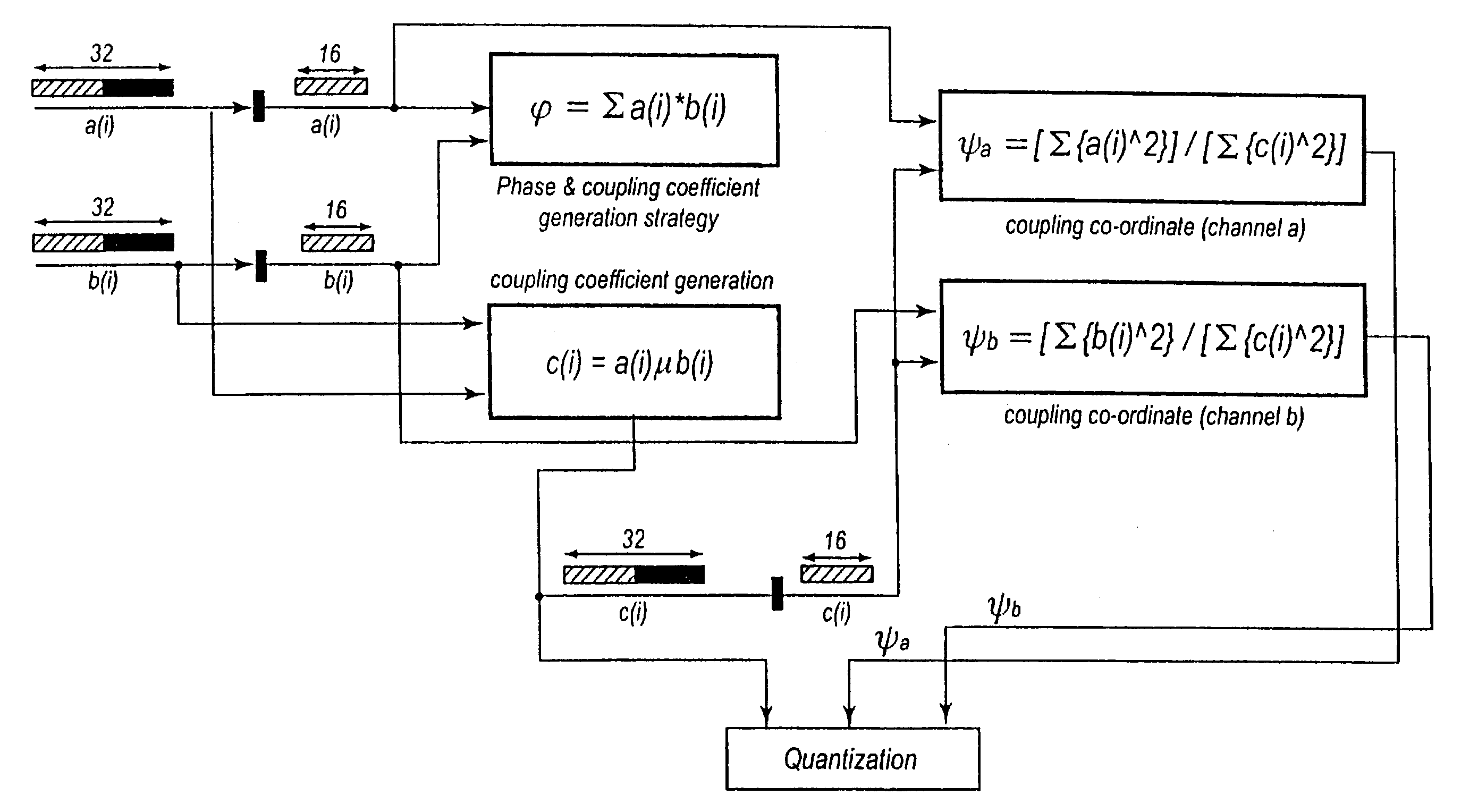

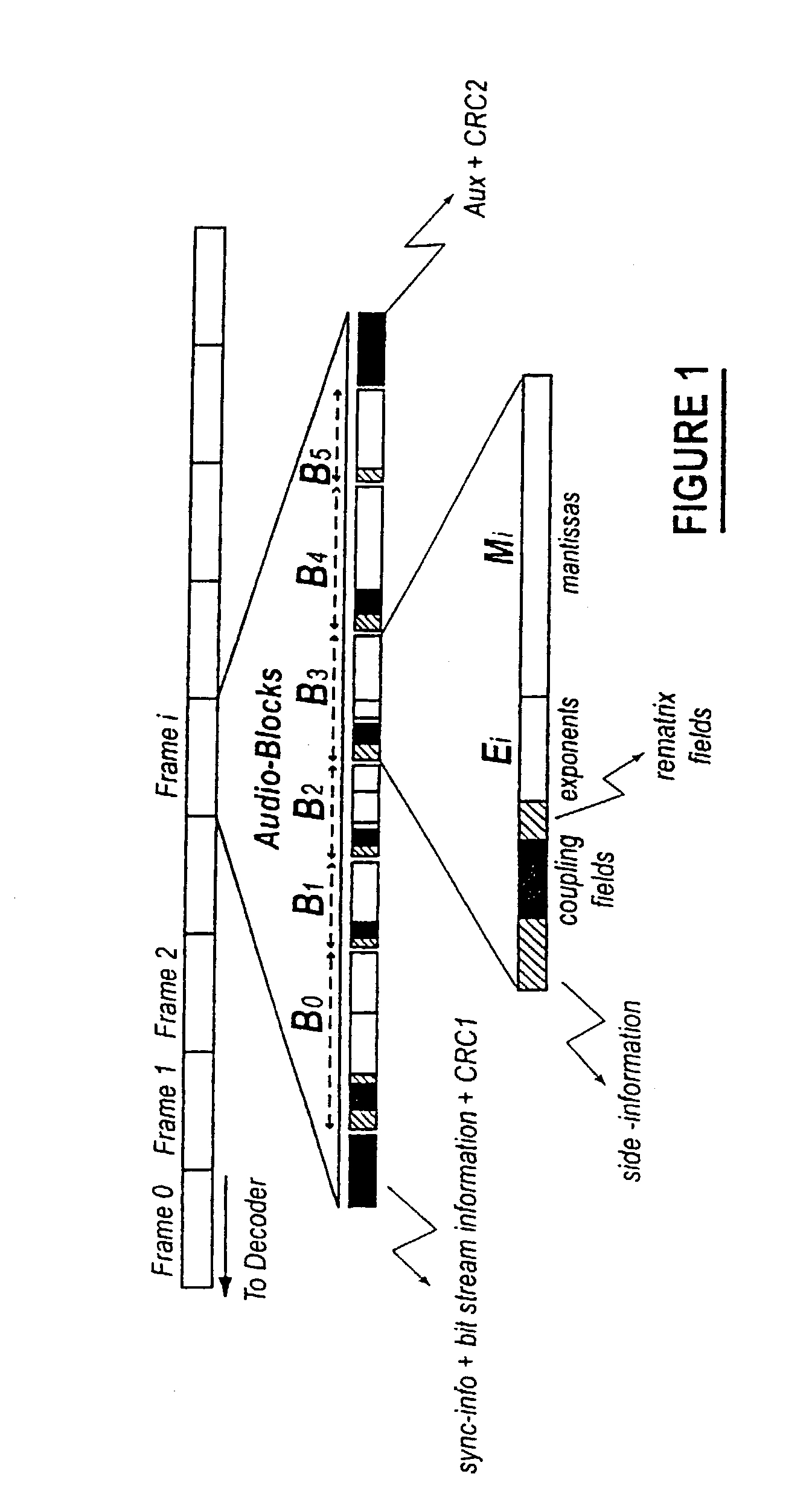

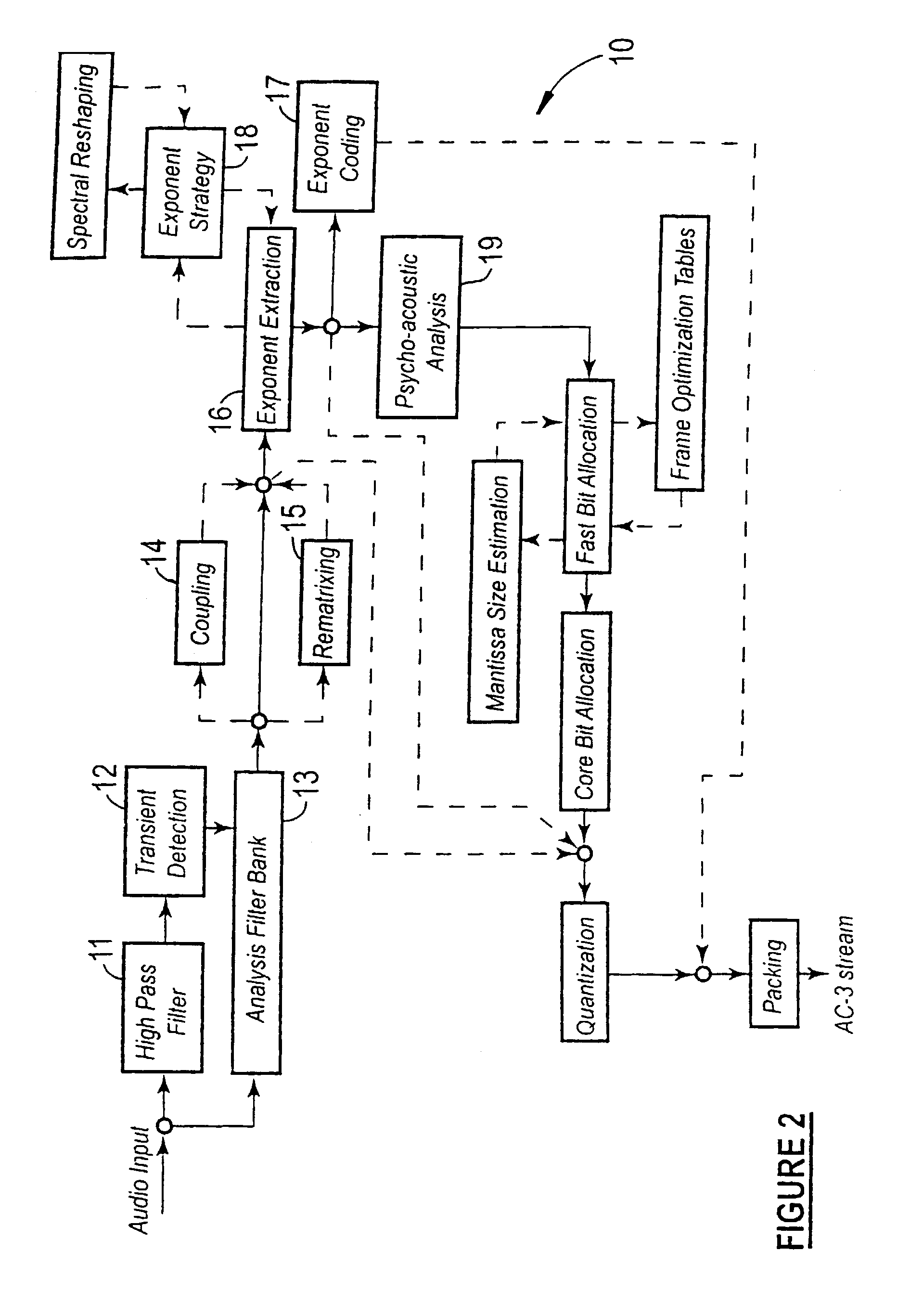

Channel coupling for an AC-3 encoder

Status: Inactive | Publication No.: US7096240B1 | Tags: None is suitable for purpose; Digital data processing details; Speech analysis; Channel coupling; 16-bit

Channel coupling for an AC-3 encoder, using mixed precision computations and 16-bit coupling coefficient calculations for channels with 32-bit frequency coefficients.

Owner:STMICROELECTRONICS ASIA PACIFIC PTE

Implementing mixed-precision floating-point operations in a programmable integrated circuit device

Status: Active | Publication No.: US8706790B1 | Tags: Reduced resource; Not consume significant resource; Computations using contact-making devices; Operand; Floating point

Owner:ALTERA CORP

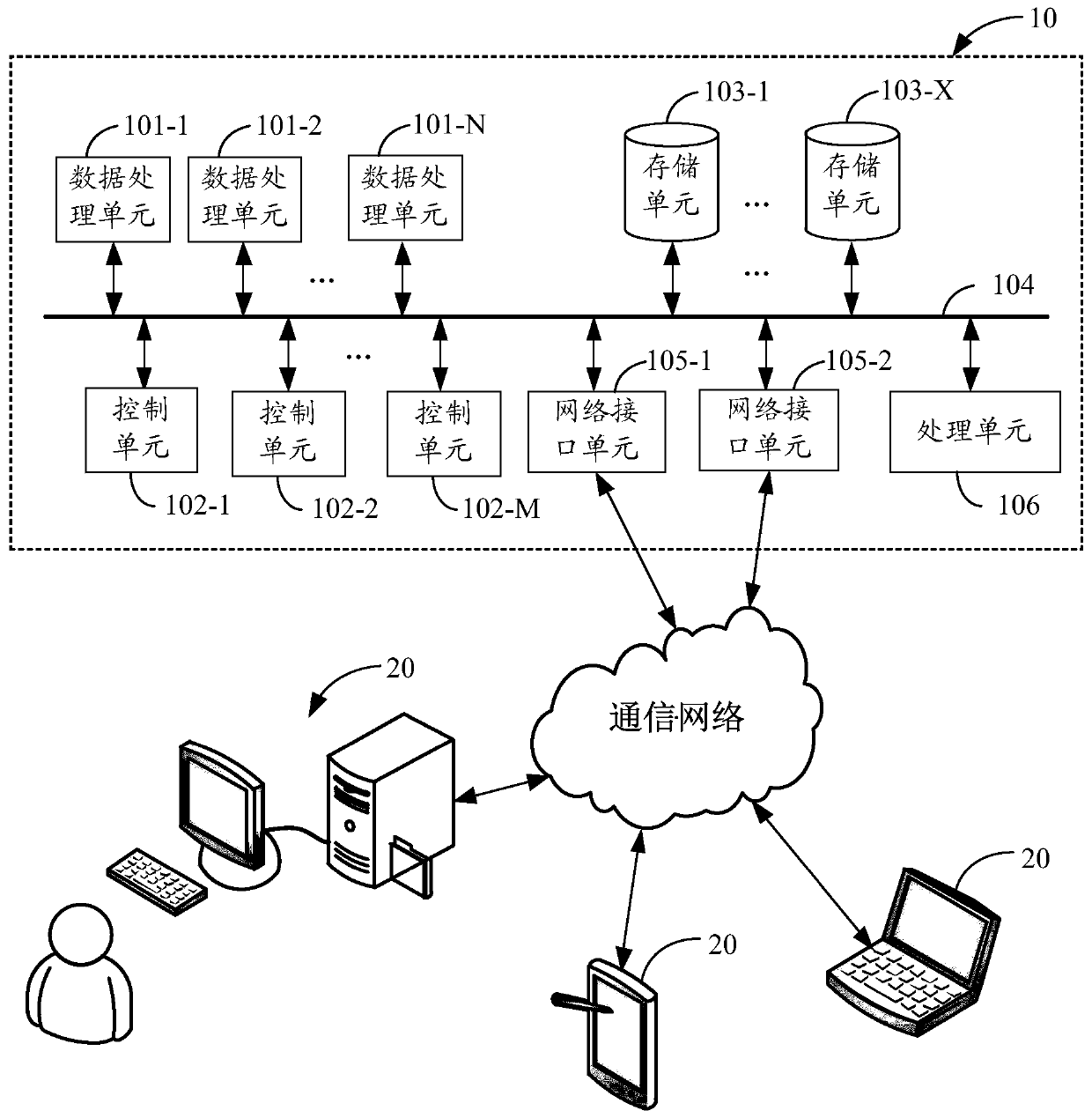

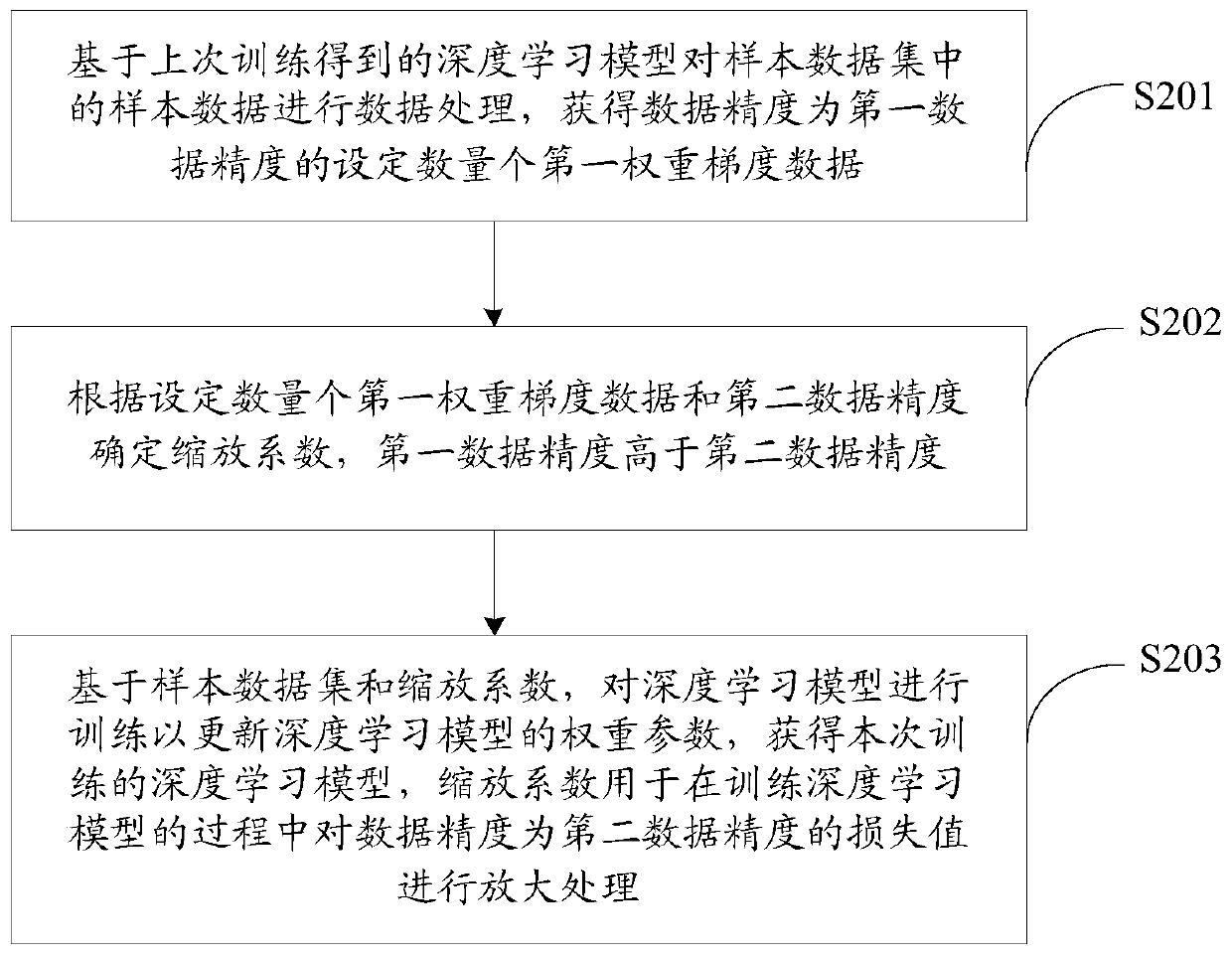

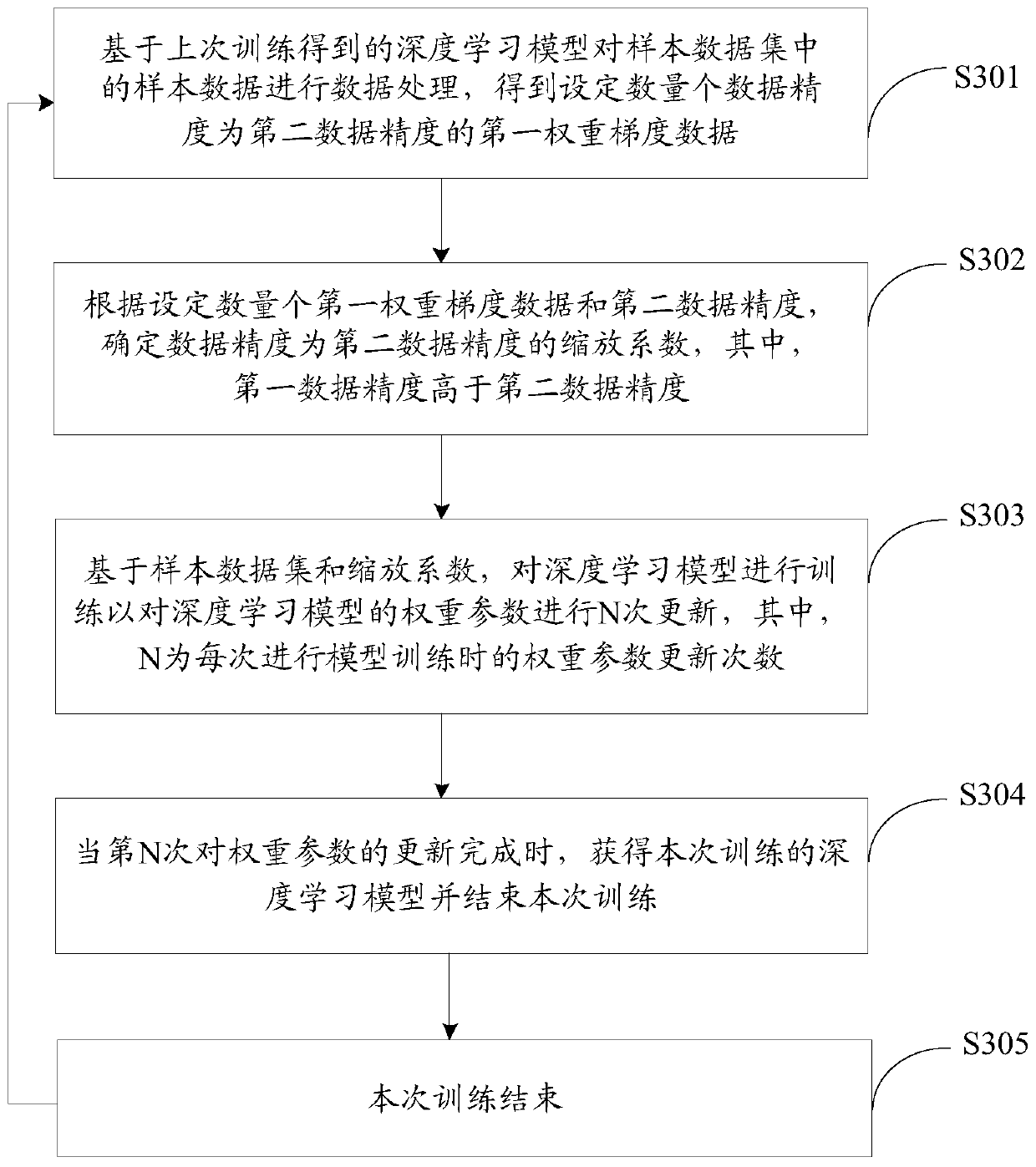

Deep learning model training method, device and system based on mixed precision

Status: Pending | Publication No.: CN110163368A | Tags: Guaranteed derivative results; Guaranteed accuracy; Neural architectures; Neural learning methods; Data set; Mixed precision

The invention discloses a deep learning model training method, device and system based on mixed precision. The method comprises: processing the sample data in a sample data set with the deep learning model obtained in the previous training round, to obtain a set number of first weight gradient data whose data precision is a first data precision; determining a scaling coefficient for a second data precision from the set number of first weight gradient data and the second data precision, the first data precision being higher than the second; and training the deep learning model on the sample data set using the scaling coefficient, updating its weight parameters to obtain the model for the current training round. During training, the scaling coefficient amplifies the loss value at the second data precision, which improves both training efficiency and training precision.

Owner:TENCENT TECH (SHENZHEN) CO LTD
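
The role of the scaling coefficient can be seen in a small stdlib-only Python sketch. FP16 storage is emulated with the `struct` module's half-precision `'e'` format, and the scale is chosen as the largest power of two that keeps the peak higher-precision gradient below the FP16 maximum. Since multiplying the loss by a constant multiplies every gradient by the same constant, the sketch applies the scale to the gradients directly. The power-of-two rule and the function names are assumptions for illustration, not the patent's exact procedure.

```python
import math
import struct

FP16_MAX = 65504.0  # largest finite IEEE 754 half-precision value


def to_fp16(x: float) -> float:
    """Round x to the nearest half-precision value (OverflowError if too large)."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def choose_scale(grads_hi: list[float], fp16_max: float = FP16_MAX) -> float:
    """Largest power-of-two scale keeping every higher-precision gradient finite in FP16.

    Assumption: the scale is derived from the peak gradient magnitude; the patent
    only says it is determined from the gradients and the lower precision.
    """
    peak = max(abs(g) for g in grads_hi)
    if peak == 0.0:
        return 1.0
    k = math.floor(math.log2(fp16_max / peak))
    while peak * 2.0 ** k > fp16_max:  # guard against log2 rounding up
        k -= 1
    return 2.0 ** k


def fp16_roundtrip(grads_hi: list[float], scale: float) -> list[float]:
    """Scale, store in FP16, then unscale in higher precision before the weight update."""
    return [to_fp16(g * scale) / scale for g in grads_hi]
```

With gradients `[1e-8, 2e-3]`, storing `1e-8` directly in FP16 underflows to zero, whereas after scaling by `choose_scale` both values survive the FP16 round trip to within FP16's relative precision.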

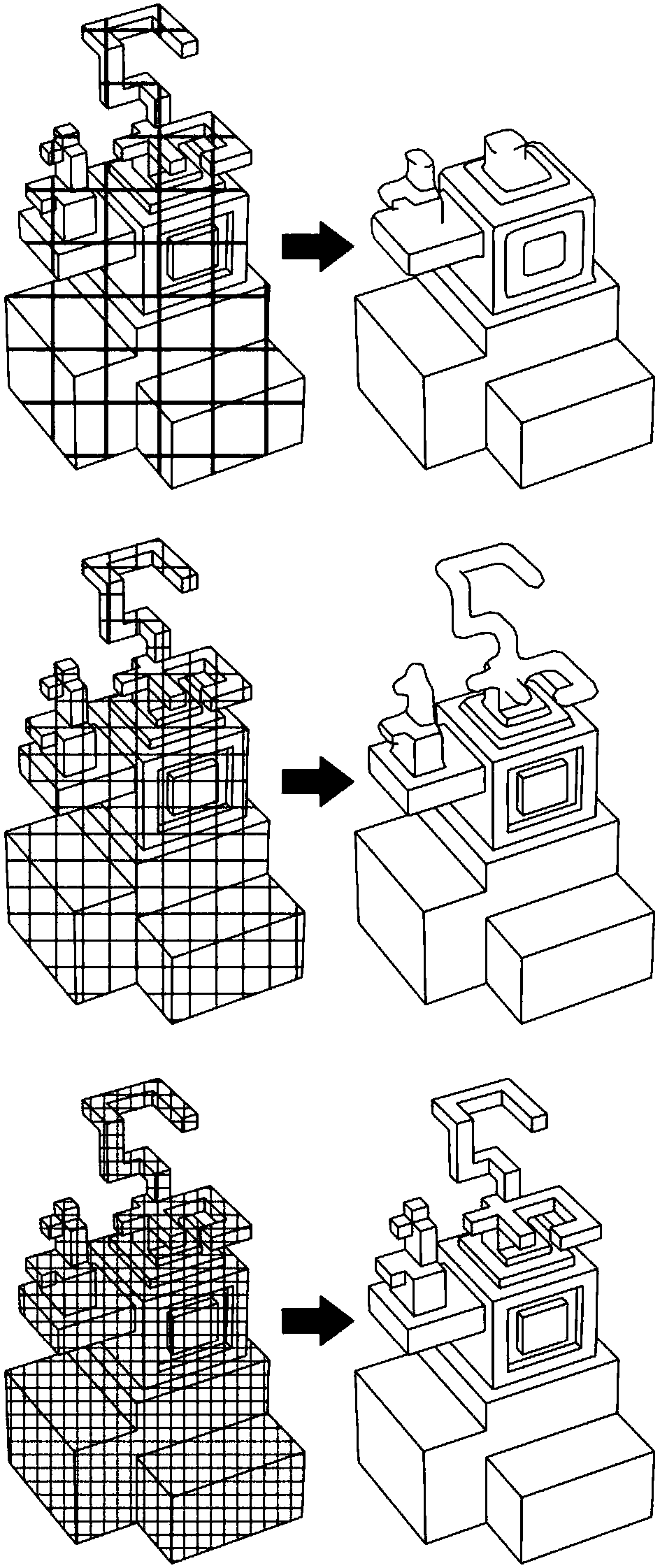

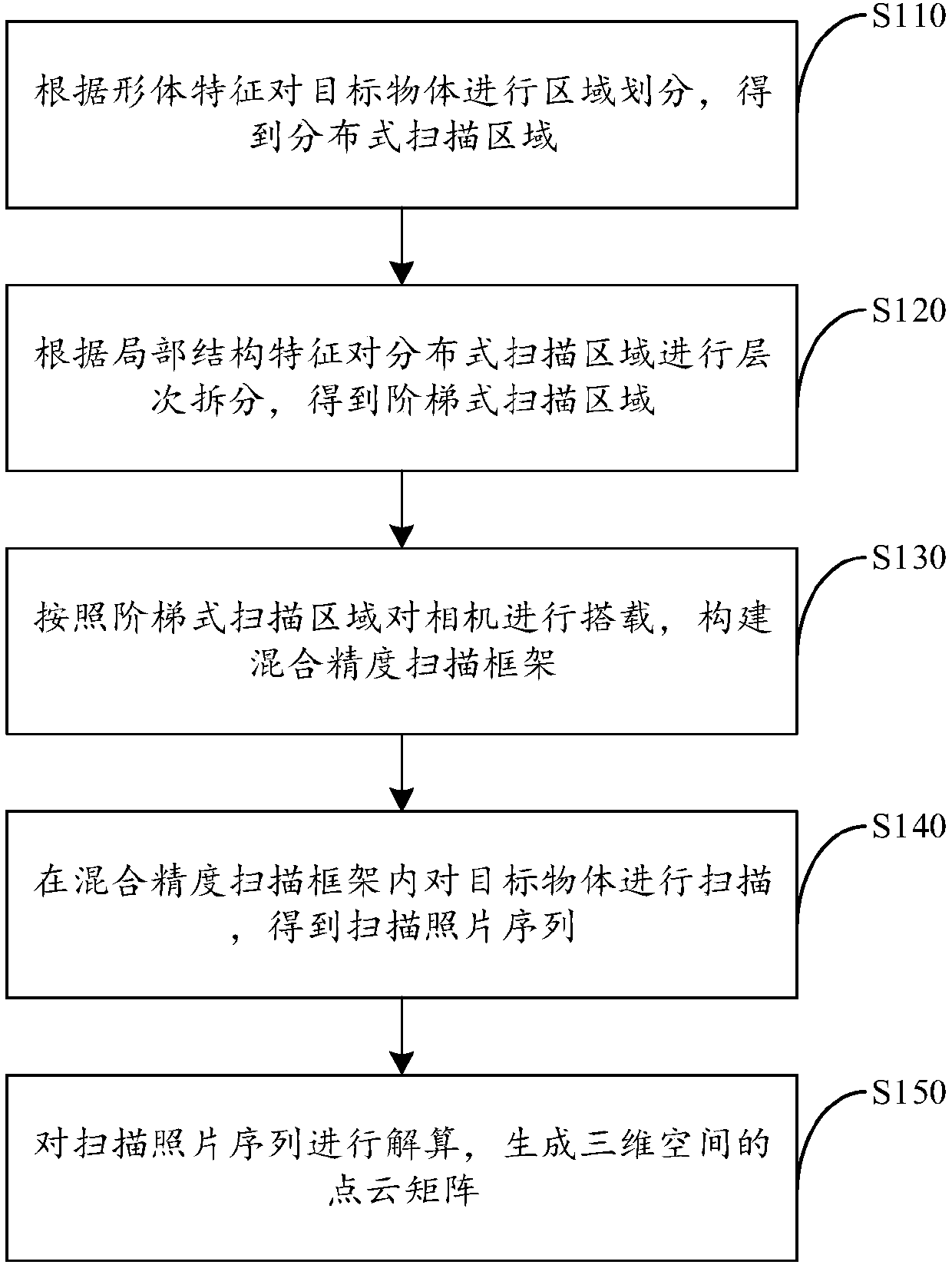

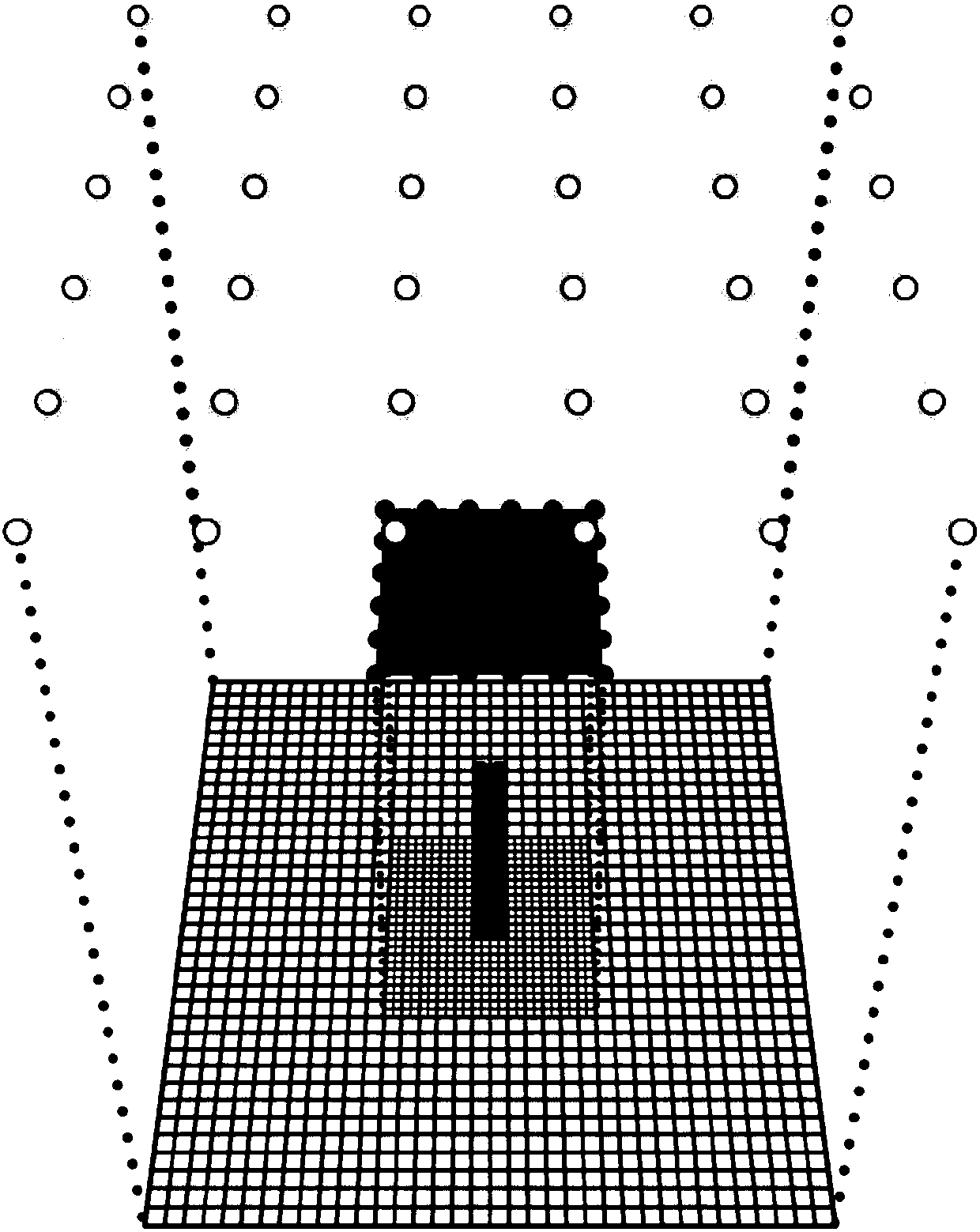

Mixed-precision scanning method and system

Status: Inactive | Publication No.: CN107767414A | Tags: Low cost; Improve scanning efficiency; Details involving processing steps; Image enhancement; Body shape; Point cloud

The invention provides a mixed-precision scanning method and system in the field of three-dimensional information technology. The method includes: dividing a target object into regions according to its body-shape features to obtain distributed scanning areas; hierarchically splitting the distributed scanning areas according to local structural features to obtain stepped scanning areas; mounting cameras according to the stepped scanning areas to construct a mixed-precision scanning framework; scanning the target object within that framework to obtain a sequence of scan photographs; and computing on the photograph sequence to generate a point-cloud matrix in three-dimensional space. The method can reduce costs and improve scanning efficiency.

Owner:林嘉恒

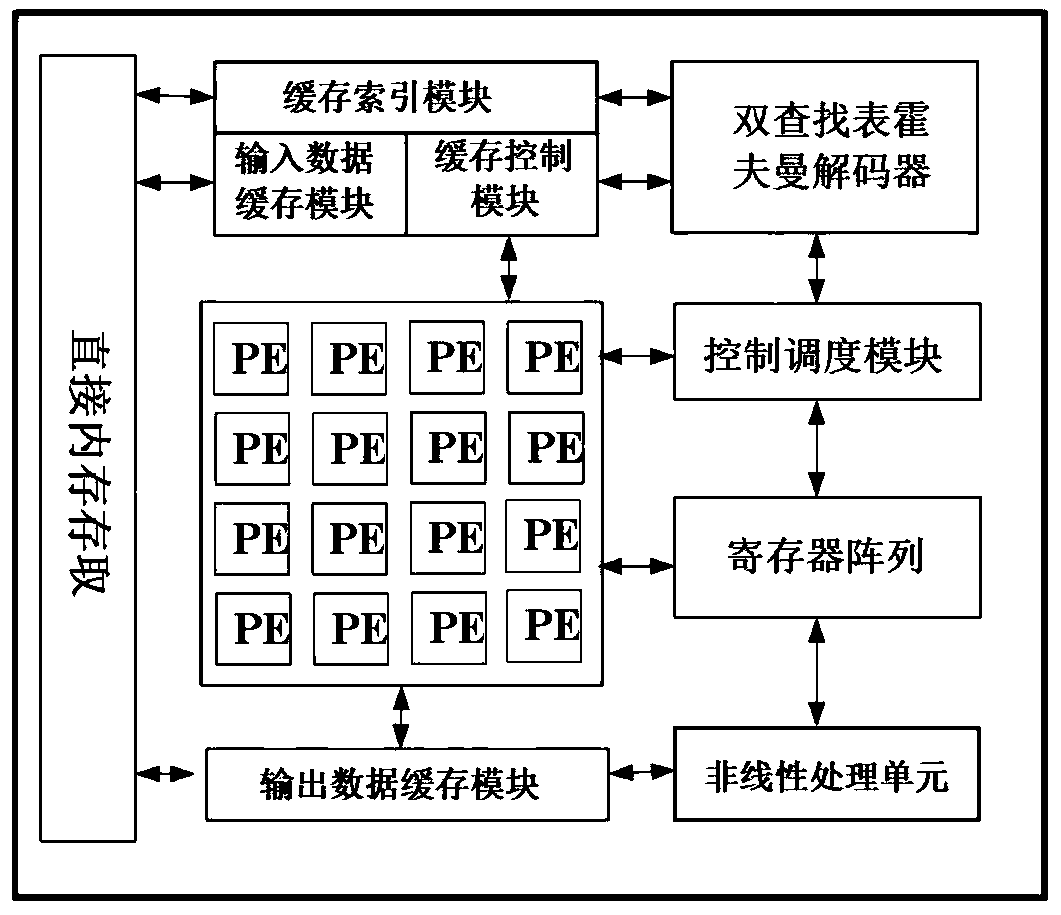

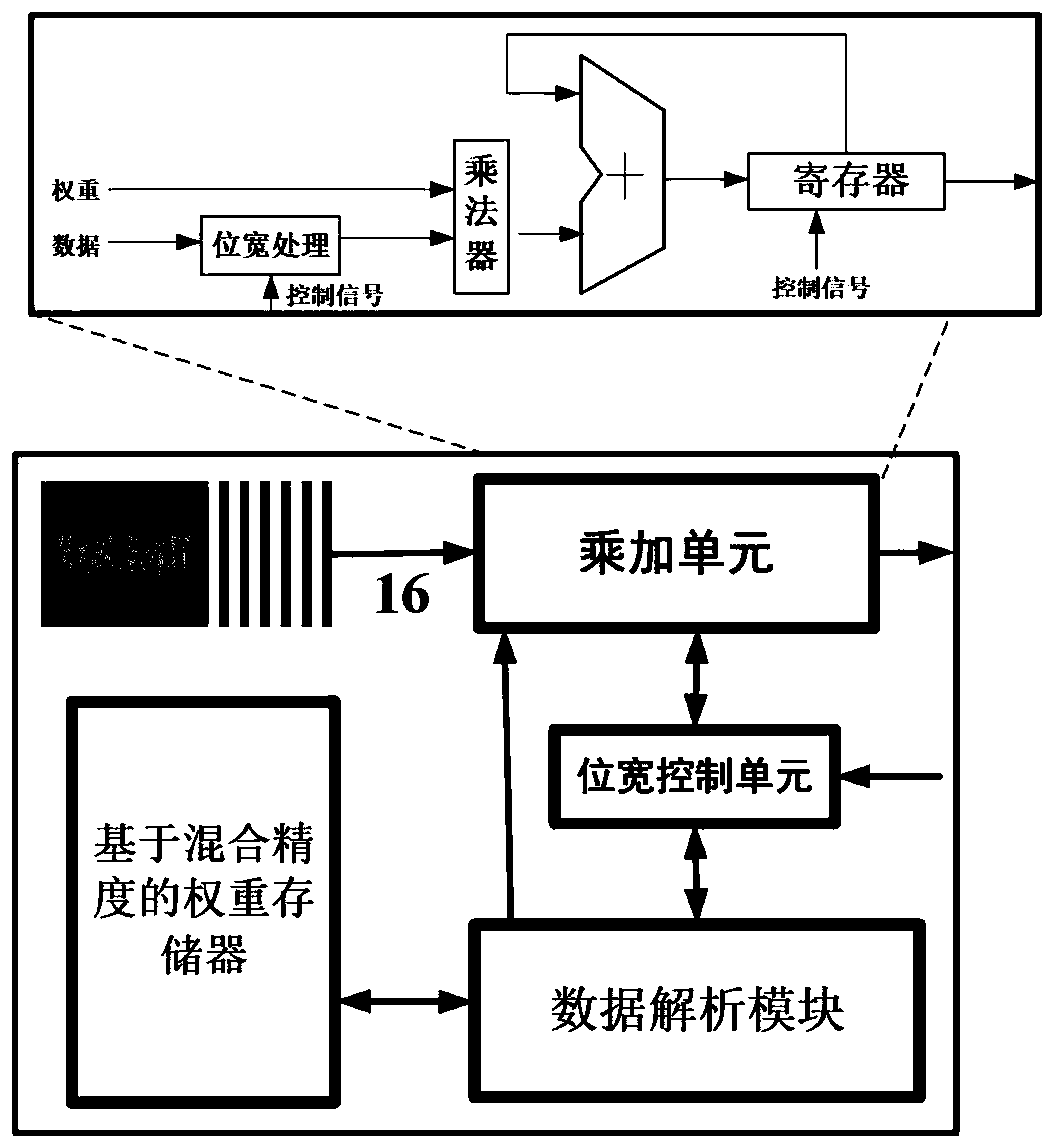

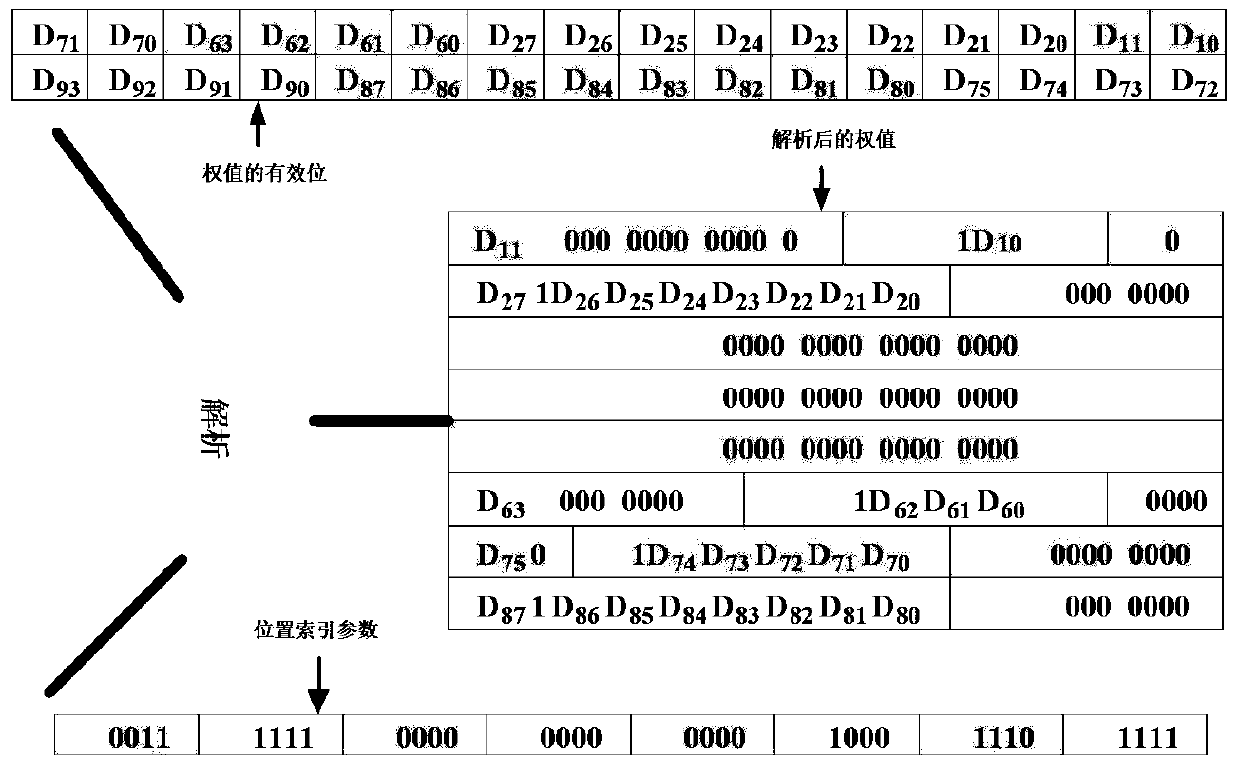

Deep neural network accelerator based on hybrid precision storage

Status: Inactive | Publication No.: CN110766155A | Tags: Small amount of calculation; Accuracy adjustable; Neural learning methods; Data stream; Huffman decoding

The invention discloses a deep neural network accelerator based on hybrid-precision storage, in the technical field of computing, calculating and counting. The accelerator comprises an on-chip cache module, a control module, a bit-width-controllable batch multiply-add calculation module, a nonlinear calculation module, a register array, and a Huffman decoding module based on dual lookup tables. The significant-bit and sign-bit parameters of each weight are stored in the same memory, which enables mixed-precision data storage and parsing as well as multiply-add operations between mixed-precision data and weights. Through mixed-precision data storage and parsing together with dual-lookup-table Huffman decoding, data and weights are compressed and stored at different precisions, data streams are reduced, and low-power data scheduling for deep neural networks is achieved.

Owner:SOUTHEAST UNIV

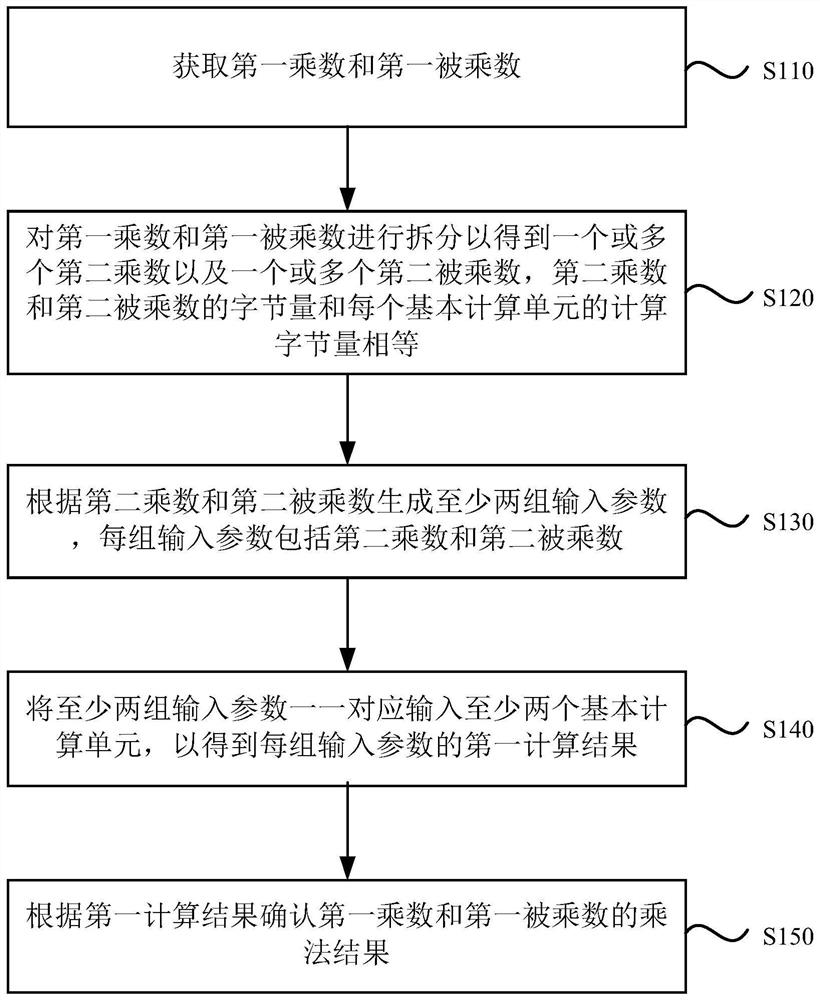

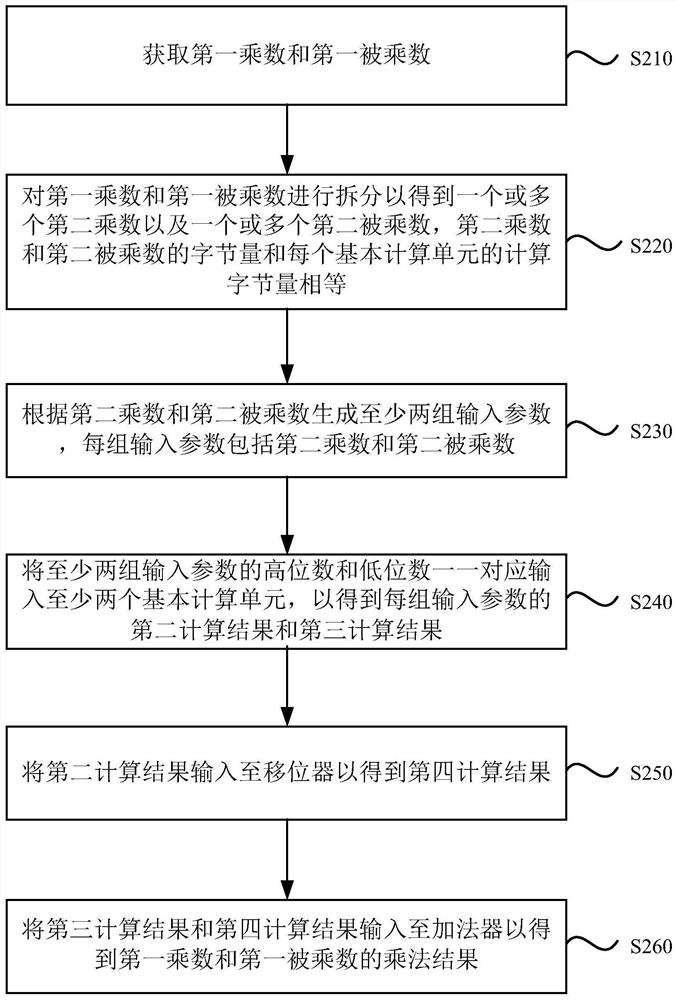

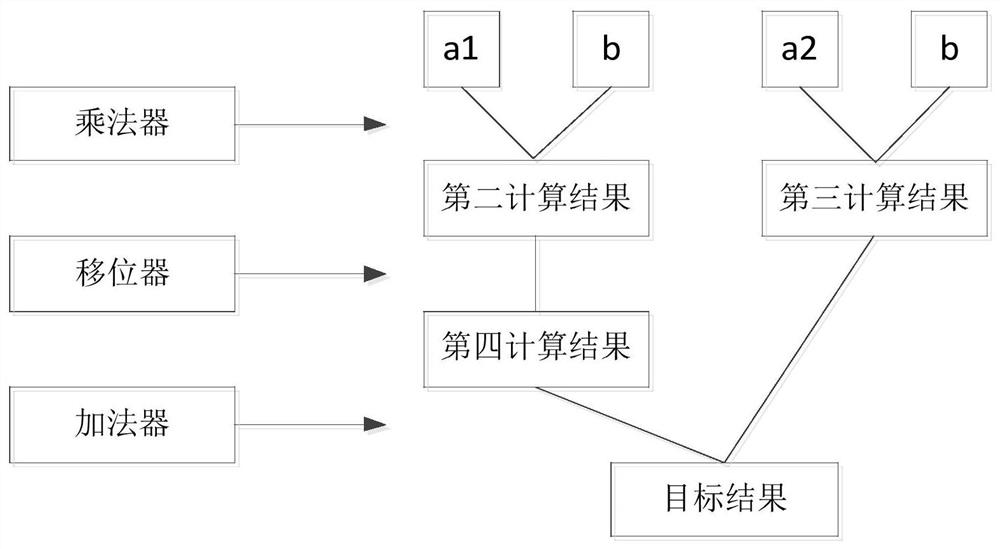

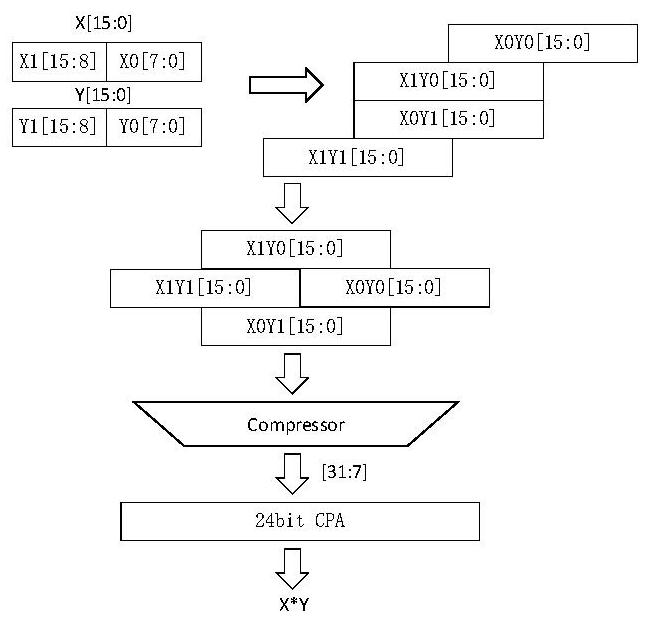

Hybrid-precision space-time multiplexing multiplier based on NAS search, and control method therefor

Status: Pending | Publication No.: CN111966327A | Tags: Solve Utilization; Solve the request; Digital data processing details; Data stream; Binary multiplier

The embodiment of the invention discloses a hybrid-precision space-time multiplexing multiplier based on NAS search, and a control method for the multiplier. The method comprises: splitting a first multiplier and a first multiplicand into one or more second multipliers and one or more second multiplicands, whose byte widths equal the byte width computed by each basic calculation unit; generating at least two groups of input parameters from the second multipliers and second multiplicands, each group containing a second multiplier and a second multiplicand; feeding the groups, one-to-one, into at least two basic calculation units to obtain a first calculation result for each group; and determining the target result for the first multiplier and first multiplicand from those first calculation results. The hybrid serial-parallel design across time and space supports hybrid-precision fixed-point multiply-accumulate computation, so the data stream is deeply optimized: the multiplier runs faster than a traditional multiplier while requiring less area and energy.

Owner:SOUTH UNIVERSITY OF SCIENCE AND TECHNOLOGY OF CHINA
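
The split-and-recombine step can be illustrated with unsigned integers: each operand is cut into bytes, every byte pair is handed to a stand-in for one 8x8-bit basic calculation unit, and the partial results are shifted by their combined byte position and summed. The byte-sized unit, the unsigned-only operands and the names below are assumptions for illustration; the NAS-based scheduling across time and space is not modeled.

```python
def split_bytes(value: int, n_bytes: int) -> list[int]:
    """Split a non-negative integer into n_bytes bytes, least significant first."""
    return [(value >> (8 * i)) & 0xFF for i in range(n_bytes)]


def basic_unit(a: int, b: int) -> int:
    """Stand-in for one 8x8-bit basic calculation unit."""
    assert 0 <= a <= 0xFF and 0 <= b <= 0xFF
    return a * b


def split_multiply(multiplier: int, multiplicand: int, n_bytes: int = 2) -> int:
    """Multiply two unsigned n_bytes-wide integers using only 8x8-bit units."""
    a_parts = split_bytes(multiplier, n_bytes)
    b_parts = split_bytes(multiplicand, n_bytes)
    # Each (i, j) pair is one group of input parameters for one basic unit;
    # in hardware the groups could run in parallel (space) or in sequence (time).
    groups = [(i + j, a, b) for i, a in enumerate(a_parts) for j, b in enumerate(b_parts)]
    return sum(basic_unit(a, b) << (8 * shift) for shift, a, b in groups)
```

For example, `split_multiply(0xABCD, 0x1234)` computes the 16x16-bit product from four 8x8-bit unit results.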

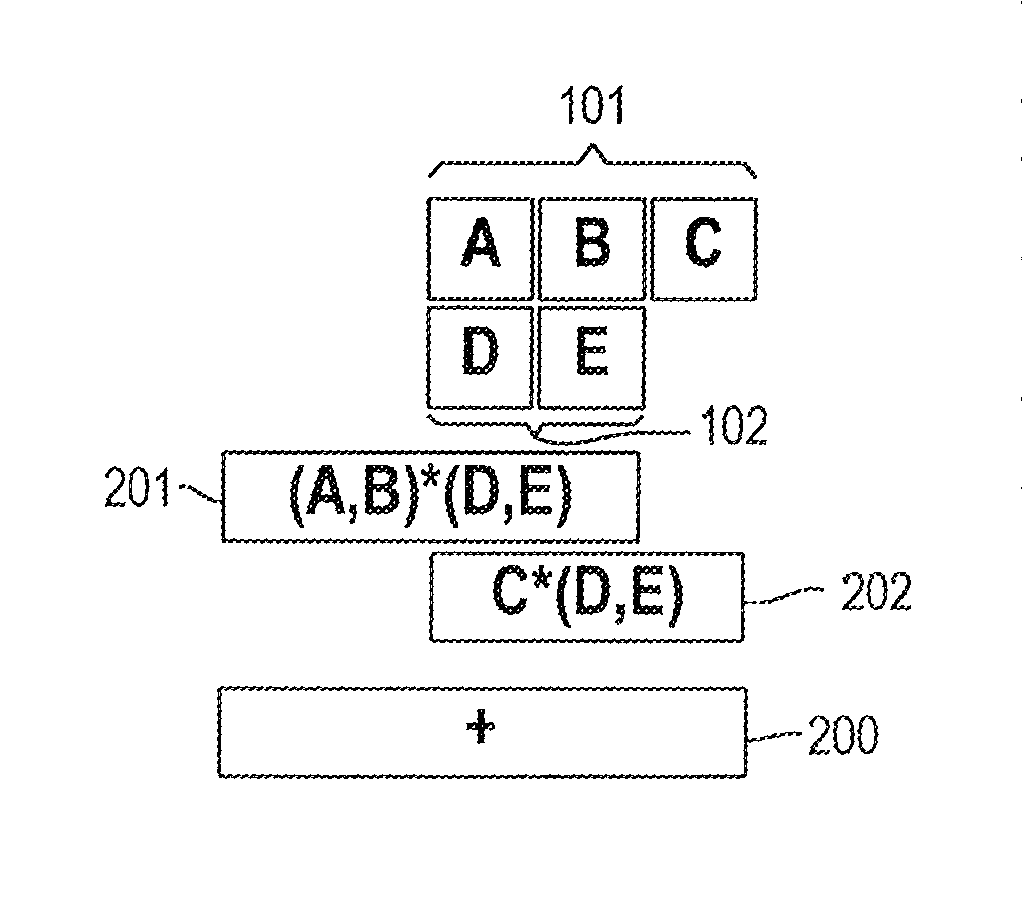

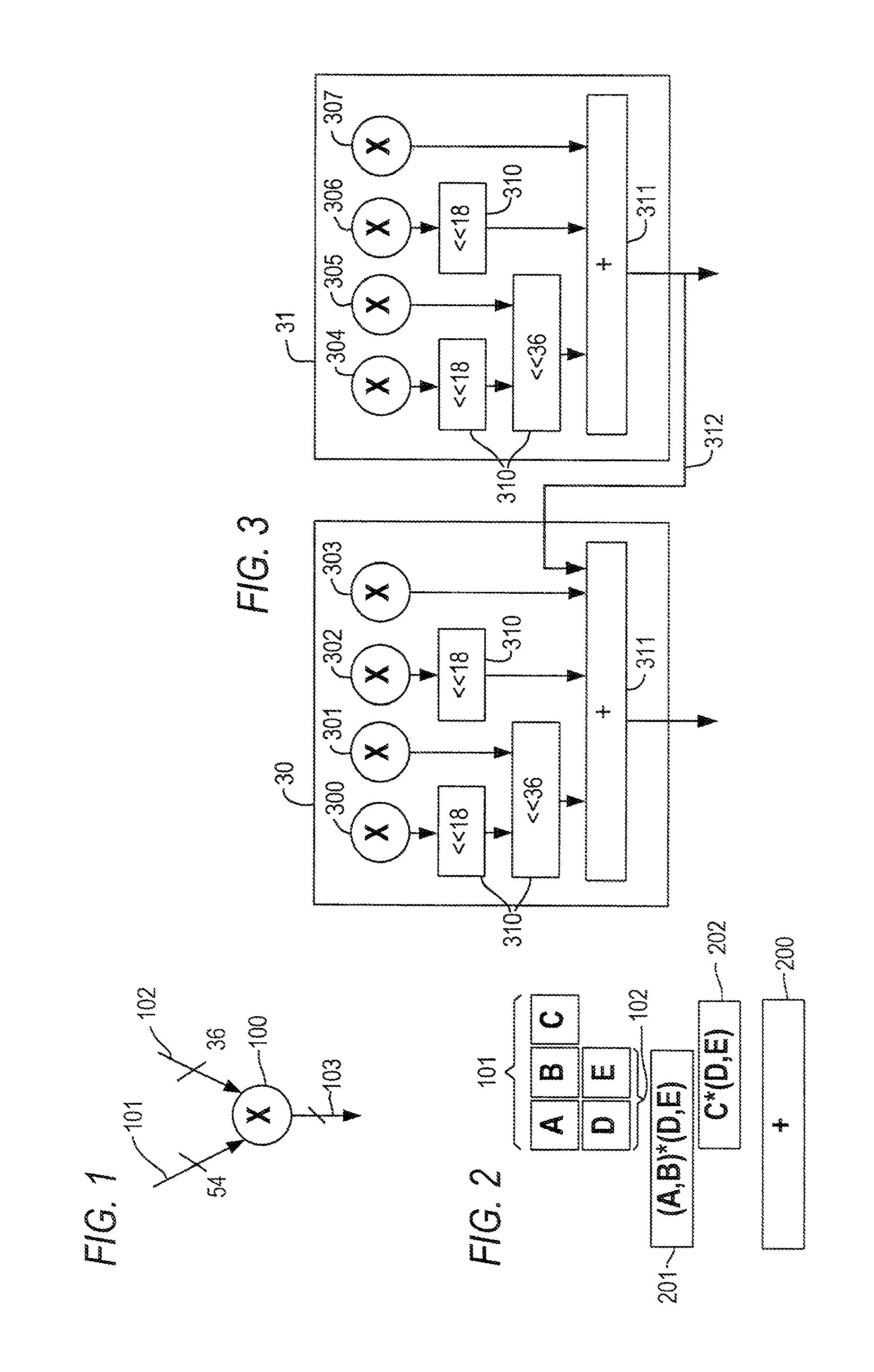

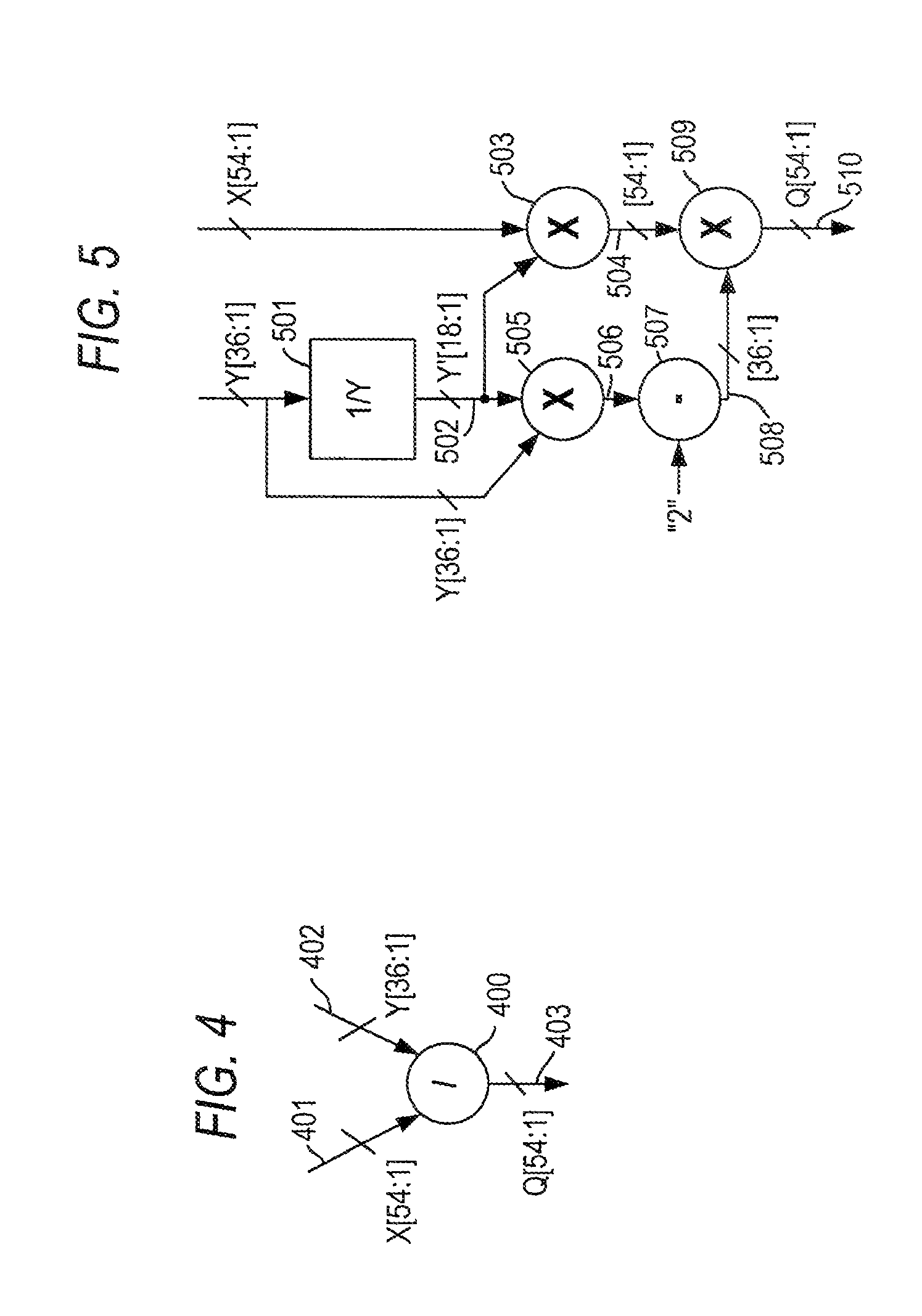

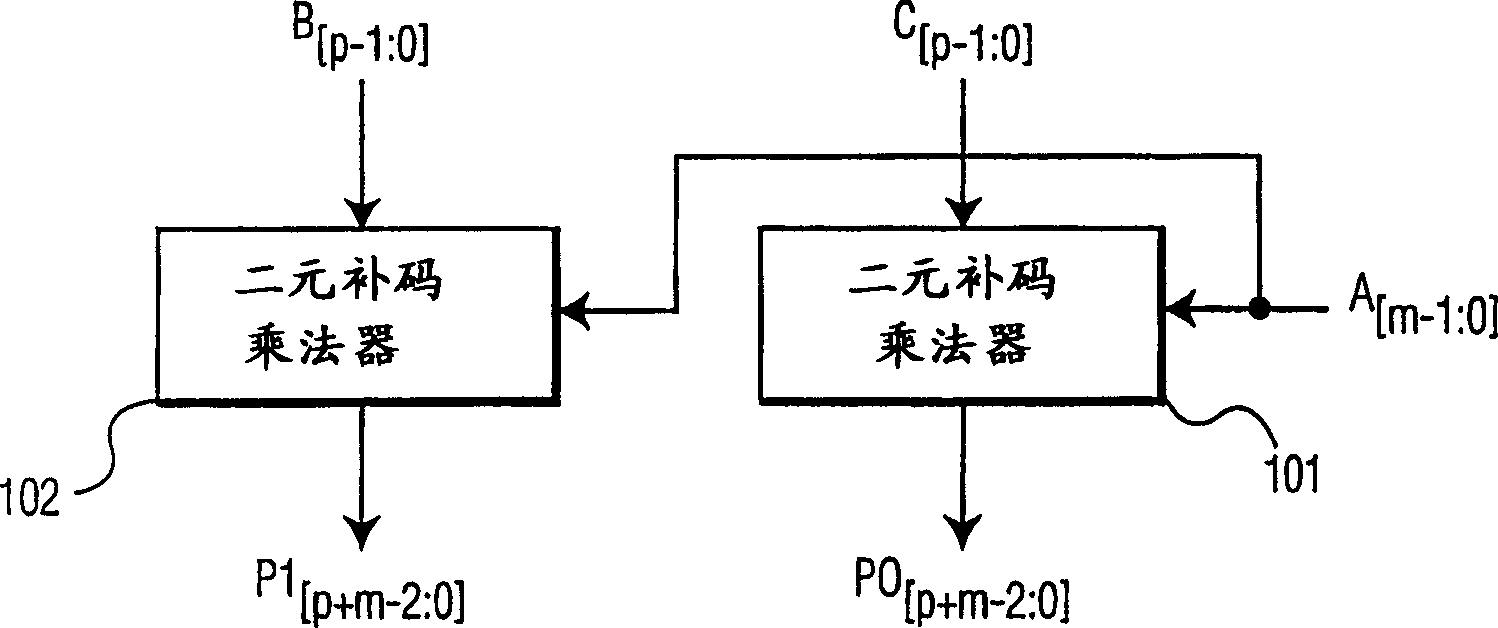

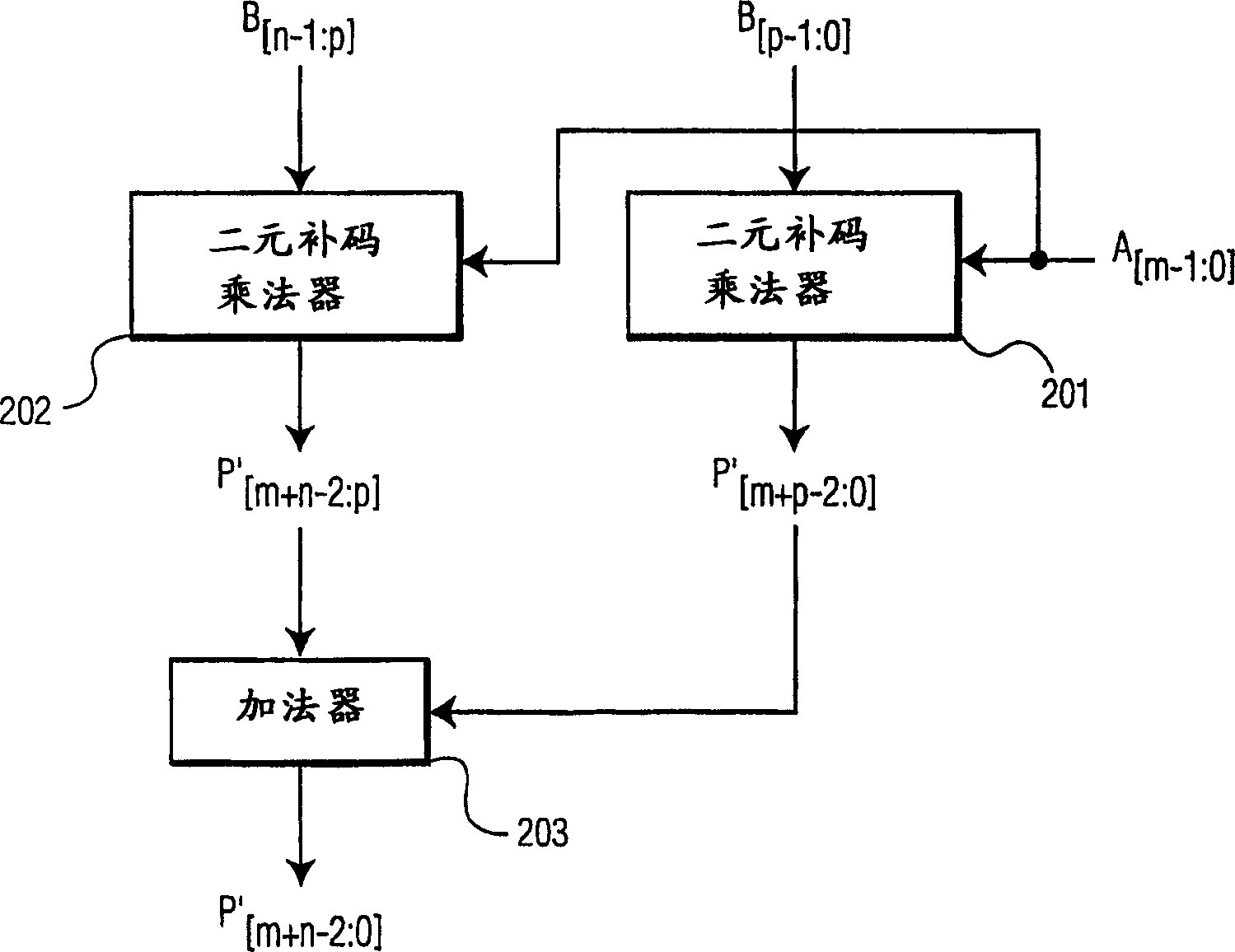

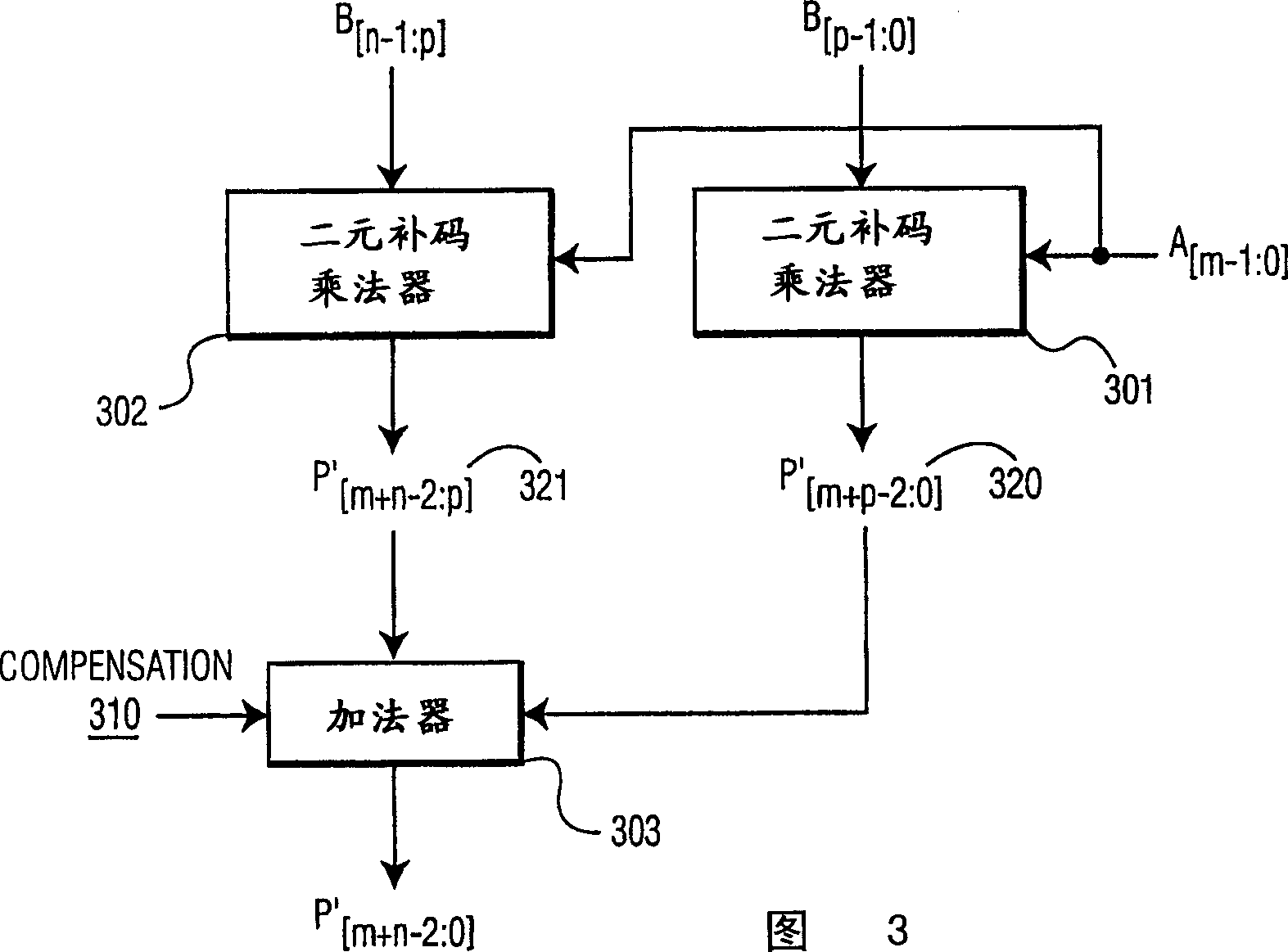

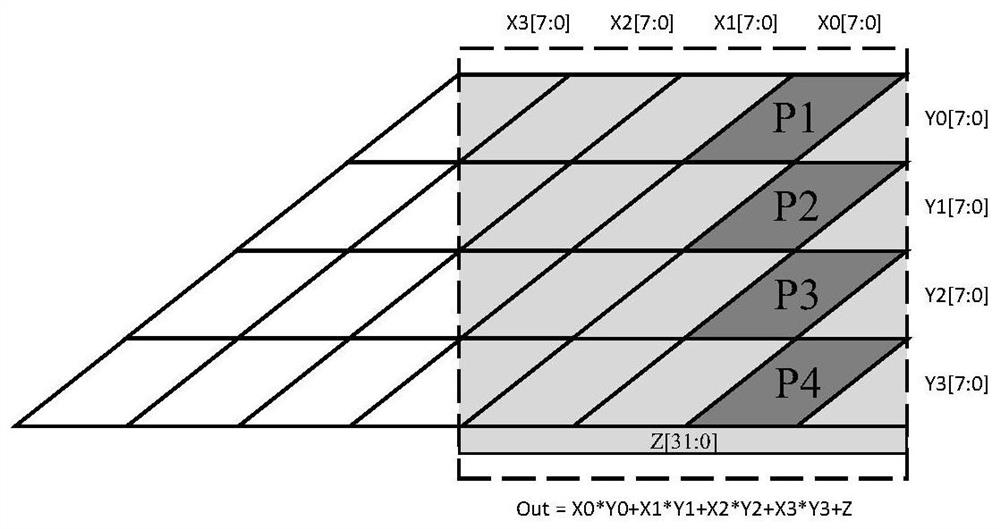

Splittable multiplier for efficient mixed-precision DSP

A method and architecture for achieving efficient sub-word parallelism in multiplication resources is presented. In a preferred embodiment, a dual two's complement multiplier is presented, in which an n-bit operand B can be split and each portion of B multiplied by another operand A in parallel. The intermediate products are combined in an adder with a compensation vector that corrects any false negative sign on the two's complement sub-product from the multiplier handling the least significant (lower) p bits of the split operand B, i.e. B[p-1:0], where p = n/2. The compensation vector C is derived from operands A and B using a simple circuit. The technique extends easily to three or more parallel multipliers, across which an n-bit operand D can be split and multiplied by operand A in parallel; the compensation vector C' is derived from operands D and A analogously to the dual two's complement multiplier embodiment.

Owner:KONINKLIJKE PHILIPS ELECTRONICS NV
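
The sign-correction idea can be checked in a few lines of Python. The sketch splits an n-bit two's complement operand B at p = n/2, multiplies both halves by A as if each were a two's complement number (as two parallel sub-multipliers would), and adds a compensation term. Here the compensation is taken to be A·2^p whenever bit p-1 of B is set, because that is exactly how much a signed reading of B[p-1:0] under-counts; this derivation and the helper names are assumptions, not the circuit described in the patent.

```python
def to_signed(value: int, bits: int) -> int:
    """Interpret the low `bits` bits of value as a two's complement number."""
    value &= (1 << bits) - 1
    return value - (1 << bits) if value >> (bits - 1) else value


def split_signed_multiply(a: int, b: int, n: int) -> int:
    """Compute a * b for an n-bit two's complement b from two half-width signed sub-products."""
    p = n // 2
    b_bits = b & ((1 << n) - 1)
    b_hi = to_signed(b_bits >> p, n - p)  # upper part B[n-1:p], genuinely signed
    b_lo_raw = b_bits & ((1 << p) - 1)    # B[p-1:0], really an unsigned field
    b_lo = to_signed(b_lo_raw, p)         # what a two's complement sub-multiplier sees
    sub_hi = a * b_hi                     # the two sub-products can run in parallel
    sub_lo = a * b_lo
    # If bit p-1 is set, sub_lo carries a false negative sign:
    # b_lo == b_lo_raw - 2**p, so a * 2**p must be added back.
    compensation = (a << p) if (b_lo_raw >> (p - 1)) & 1 else 0
    return (sub_hi << p) + sub_lo + compensation
```

An exhaustive check over all 8-bit signed pairs (n = 8, p = 4) confirms that the shifted high sub-product, the low sub-product and the compensation term sum to the full product.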

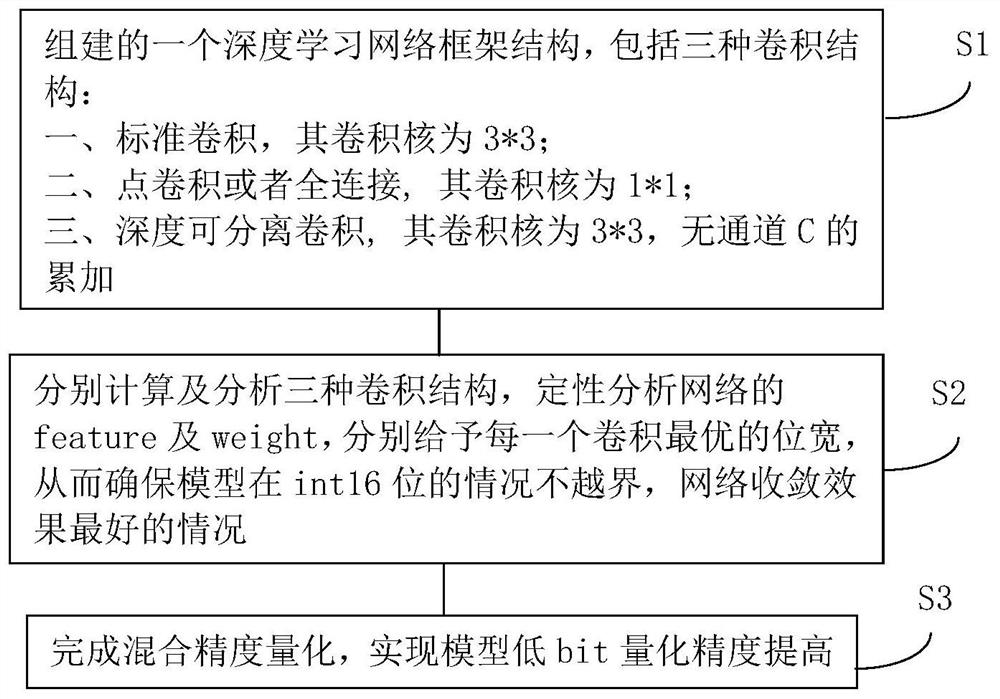

Method for improving model quantization precision through low-bit mixed precision

Status: Pending | Publication No.: CN114692818A | Tags: High precision; Make sure reasoning doesn't cross boundaries; Neural architectures; Neural learning methods; Algorithm; Network structure

The invention provides a method for improving model quantization precision through low-bit mixed precision. The method qualitatively analyzes the model's network structure and, by computing and analyzing the model's channels, ensures that inference does not exceed its numeric bounds; network model precision is then improved through a mixed-precision configuration. By configuring the mixed-precision mode, the model's precision in the low-bit setting is kept the same as with 8-bit and full precision. Mixed-precision quantization is performed after qualitatively analyzing the network's features and weights, so that the model's low-bit quantization precision is higher.

Owner:合肥君正科技有限公司

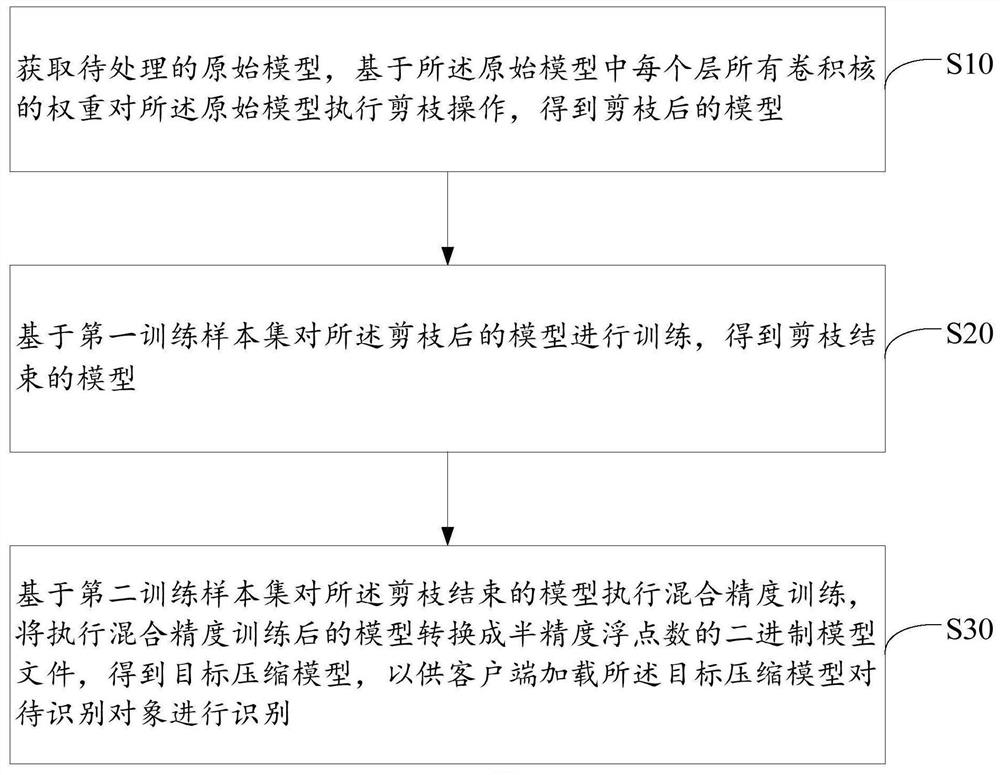

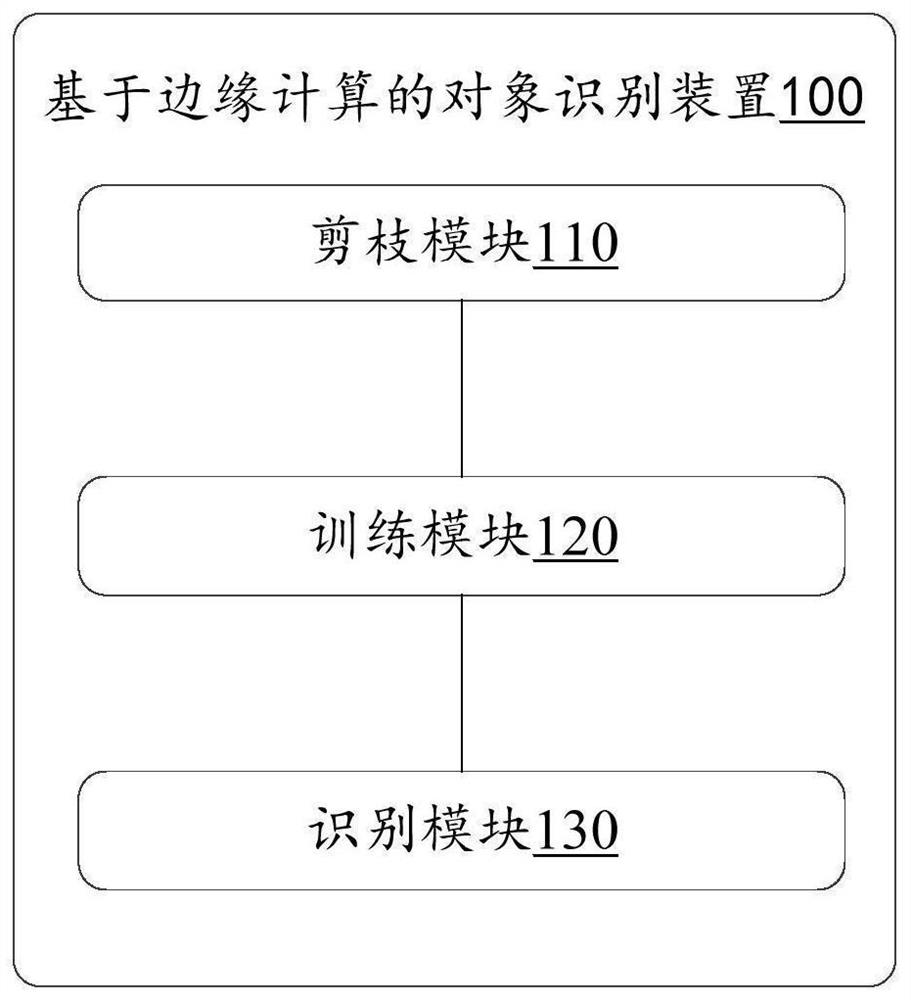

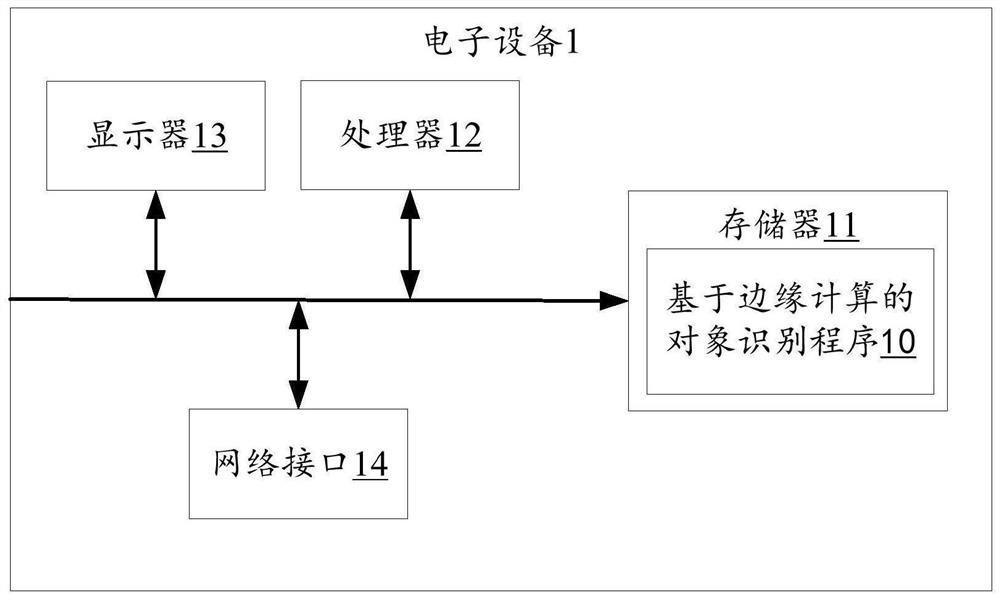

Object recognition method, device, equipment and medium based on edge computing

Status: Pending | Publication No.: CN113688796A | Tags: Avoid the risk of downtime; Reduce redundant information; Character and pattern recognition; Neural architectures; Pattern recognition; Algorithm

The invention relates to the technical field of artificial intelligence and provides an object recognition method, device, equipment and medium based on edge computing. The method comprises: obtaining an original model to be processed; pruning the original model based on the weights of all convolution kernels in each of its layers to obtain a pruned model; training the pruned model on a first training sample set; obtaining a second training sample set and performing mixed-precision training of the pruned model on it; and converting the trained model into a binary model file of half-precision floating-point numbers to obtain a target compression model, which a client loads to recognize objects. The invention can improve the timeliness of model recognition and reduce the risk of server downtime. It also relates to blockchain technology: the target compression model can be stored in a node of a blockchain.

Owner:CHINA PING AN PROPERTY INSURANCE CO LTD
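
A minimal sketch of the first and last stages (weight-based kernel pruning and export to half precision) is shown below. It assumes the pruning criterion is the L1 norm of each convolution kernel's weights and that a fixed fraction of kernels per layer is kept; the source only says pruning is based on the kernel weights. The training and mixed-precision fine-tuning in between are omitted, and the function names are hypothetical.

```python
import struct


def l1_norm(kernel: list[float]) -> float:
    """Sum of absolute weights of one (flattened) convolution kernel."""
    return sum(abs(w) for w in kernel)


def prune_layer(kernels: list[list[float]], keep_ratio: float) -> list[list[float]]:
    """Keep the kernels with the largest L1 norms, preserving their original order."""
    n_keep = max(1, round(len(kernels) * keep_ratio))
    ranked = sorted(range(len(kernels)), key=lambda i: l1_norm(kernels[i]), reverse=True)
    return [kernels[i] for i in sorted(ranked[:n_keep])]


def to_half_bytes(weights: list[float]) -> bytes:
    """Serialize weights as little-endian IEEE 754 half-precision values."""
    return struct.pack(f"<{len(weights)}e", *weights)
```

Keeping the surviving kernels in their original order leaves the layer's channel layout consistent for the following layer.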

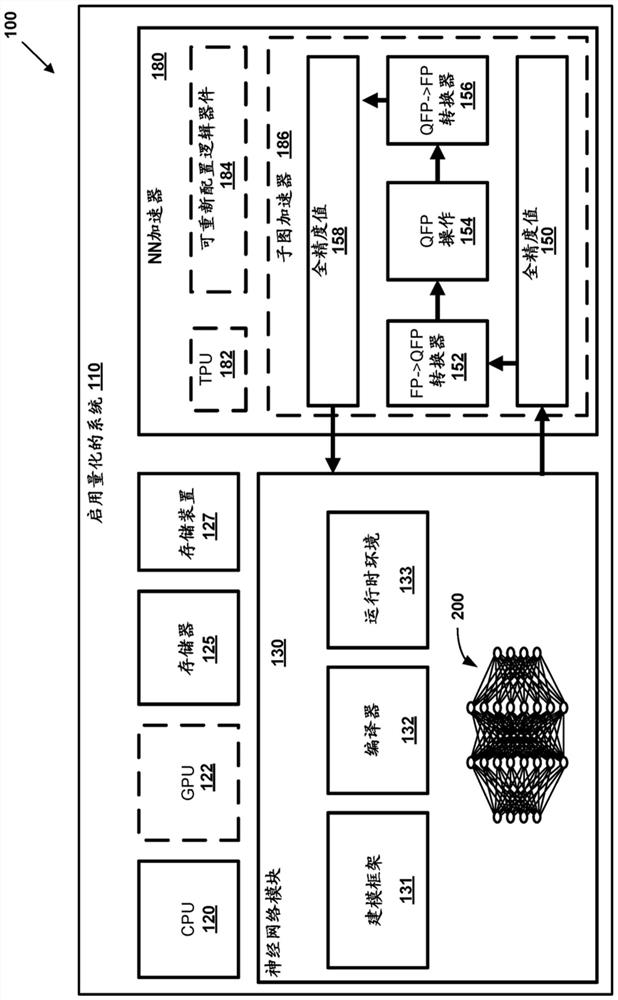

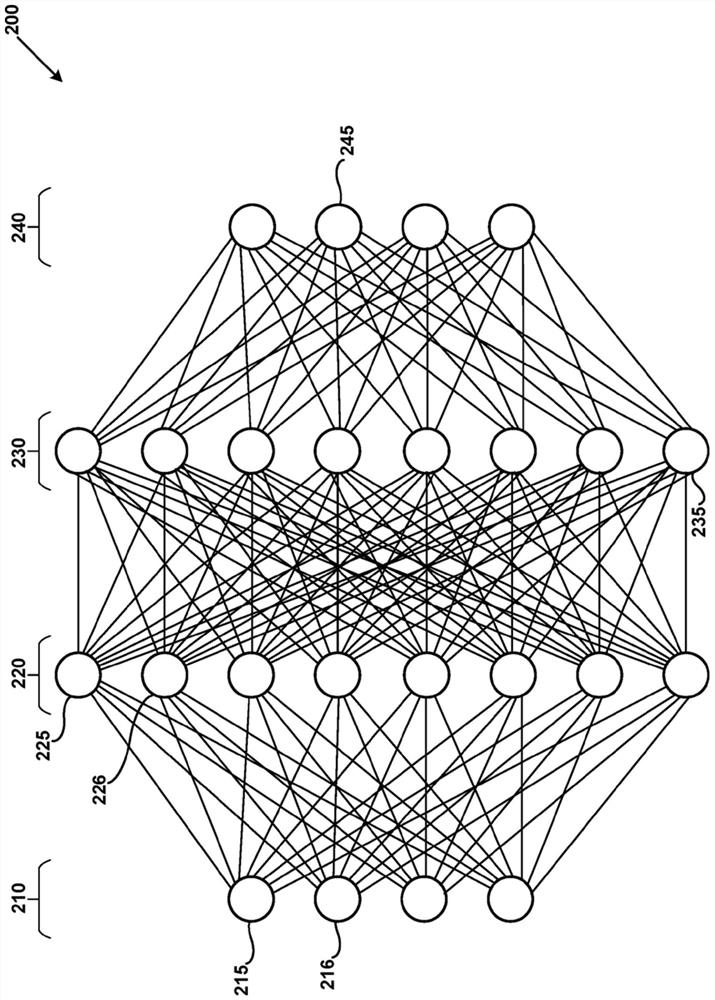

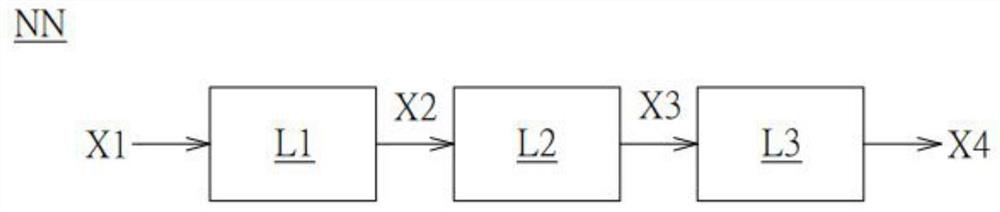

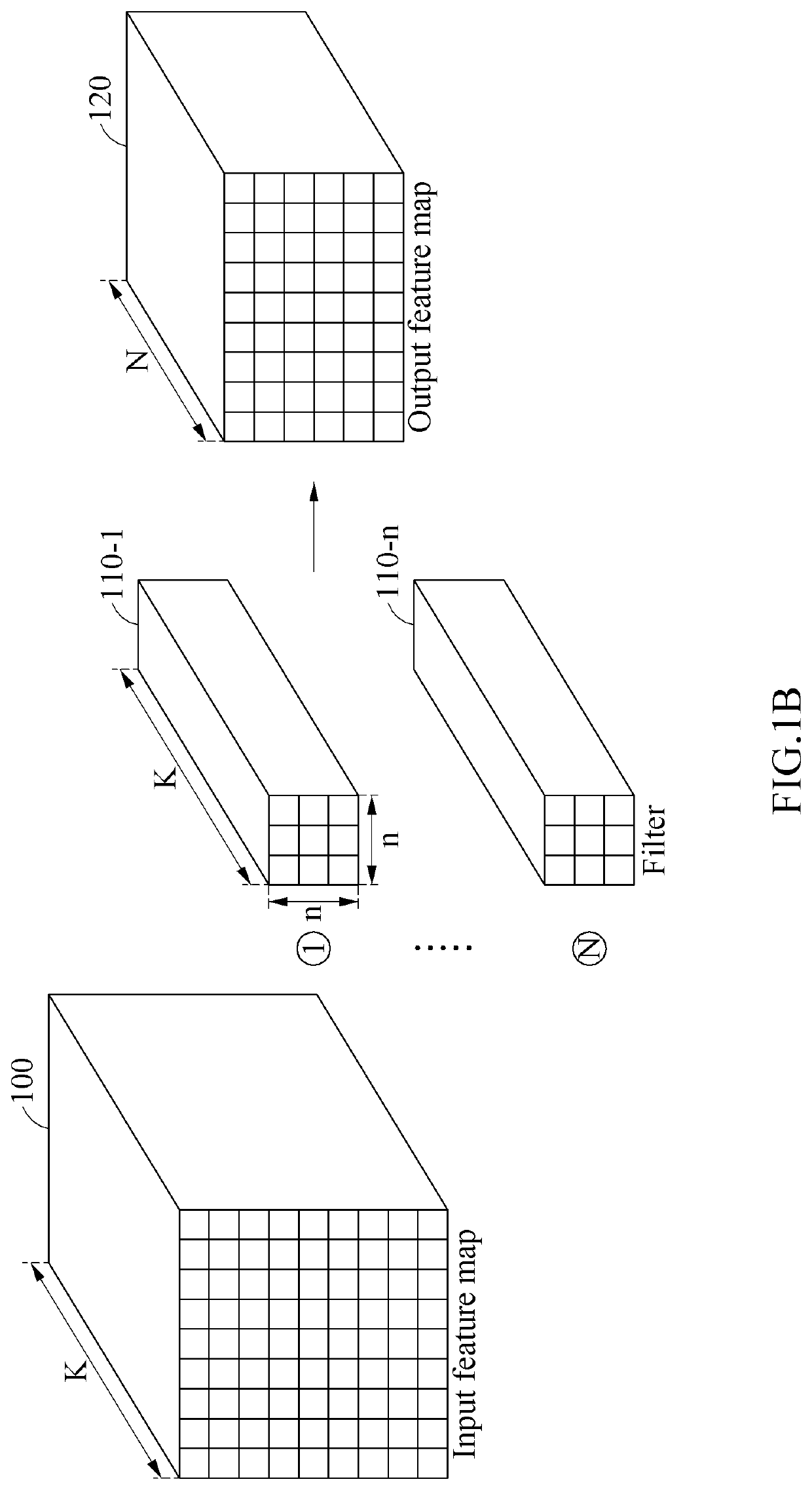

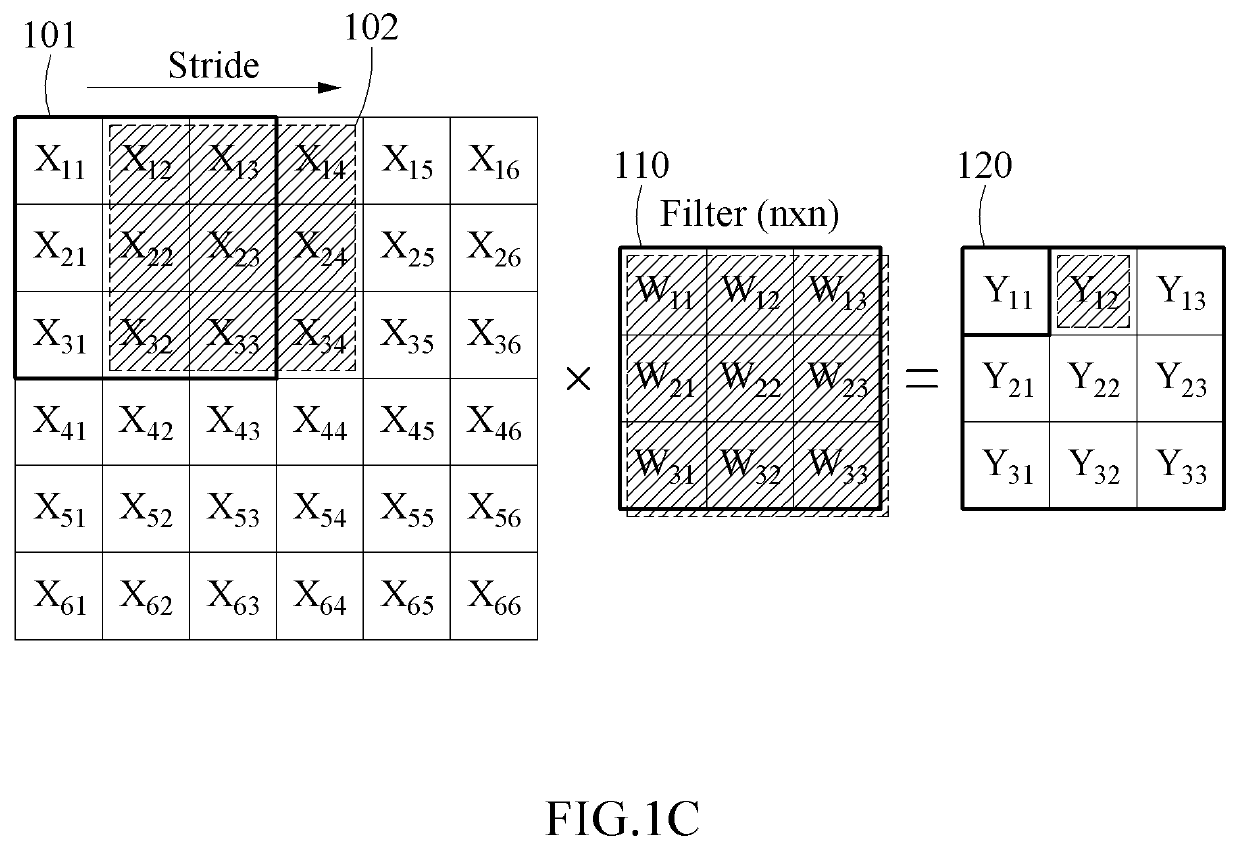

Training neural network accelerators using mixed precision data formats

Technology for training a neural network accelerator using mixed-precision data formats is disclosed. In one example of the disclosed technology, a neural network accelerator is configured to accelerate a given layer of a multi-layer neural network. An input tensor for the given layer can be converted from a normal-precision floating-point format to a quantized-precision floating-point format, such as block floating-point. A tensor operation can be performed using the converted input tensor. The result of the tensor operation can be converted from the block floating-point format back to the normal-precision floating-point format, and the converted result can be used to generate an output tensor of the layer in normal-precision floating-point format.

Owner:MICROSOFT TECH LICENSING LLC
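
To make the quantized-precision step concrete, the Python sketch below uses a simple block floating-point scheme: one shared exponent per vector and signed integer mantissas, a dot product computed on the integer mantissas, and a single rescale back to ordinary floating point. The 8-bit mantissa width, the round-and-clip rule and the function names are assumptions; the patent does not prescribe this particular format.

```python
import math


def to_bfp(values: list[float], mantissa_bits: int = 8) -> tuple[int, list[int]]:
    """Quantize a block to one shared exponent plus signed integer mantissas."""
    peak = max(abs(v) for v in values)
    if peak == 0.0:
        return 0, [0] * len(values)
    shared_exp = math.frexp(peak)[1]  # peak < 2**shared_exp
    scale = bfp_scale(shared_exp, mantissa_bits)
    limit = 2 ** (mantissa_bits - 1) - 1
    return shared_exp, [max(-limit, min(limit, round(v / scale))) for v in values]


def bfp_scale(shared_exp: int, mantissa_bits: int = 8) -> float:
    """Value of one mantissa unit for a given shared exponent."""
    return 2.0 ** (shared_exp - (mantissa_bits - 1))


def bfp_dot(x: list[float], w: list[float], mantissa_bits: int = 8) -> float:
    """Dot product: integer multiply-accumulate in BFP, then convert back to normal precision."""
    ex, mx = to_bfp(x, mantissa_bits)
    ew, mw = to_bfp(w, mantissa_bits)
    acc = sum(a * b for a, b in zip(mx, mw))  # exact integer accumulation
    return acc * bfp_scale(ex, mantissa_bits) * bfp_scale(ew, mantissa_bits)
```

Because all elements of a block share one exponent, the inner loop is pure integer arithmetic, which is what makes such formats cheap in an accelerator; small elements of a block with a large peak lose precision, which is the accuracy cost traded for that speed.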

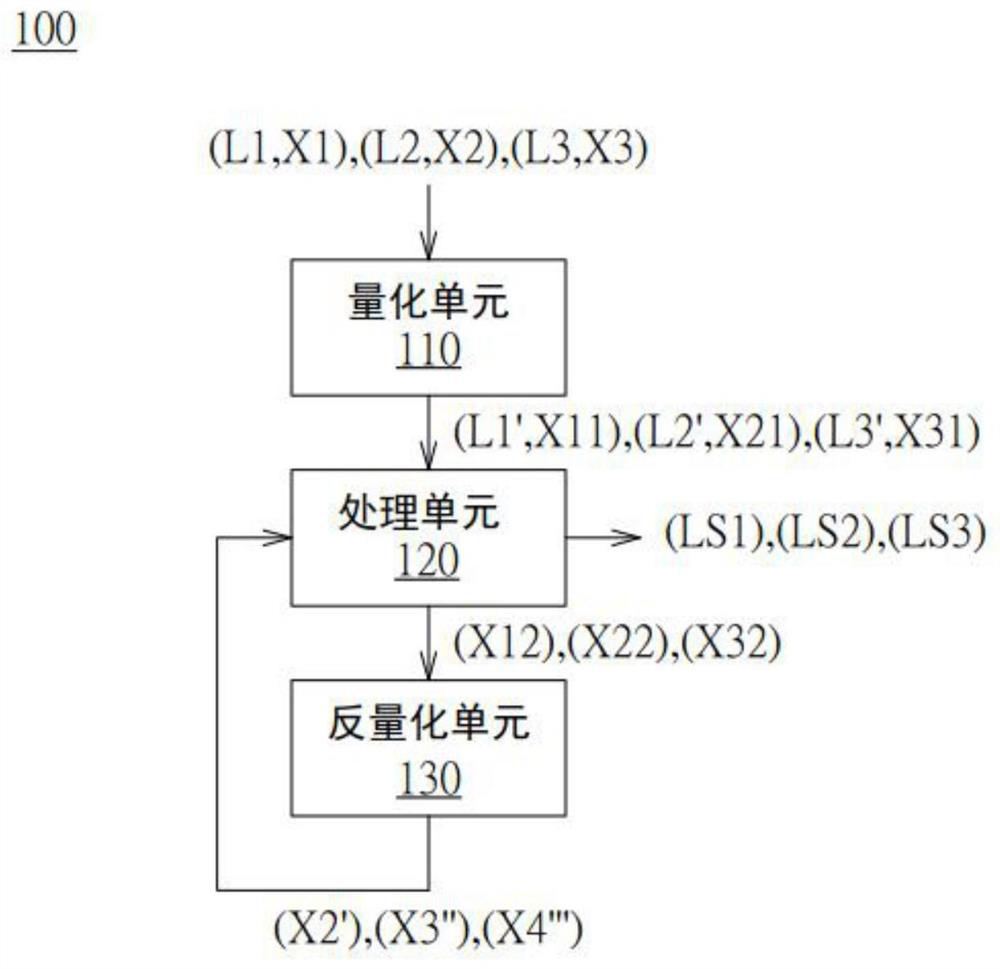

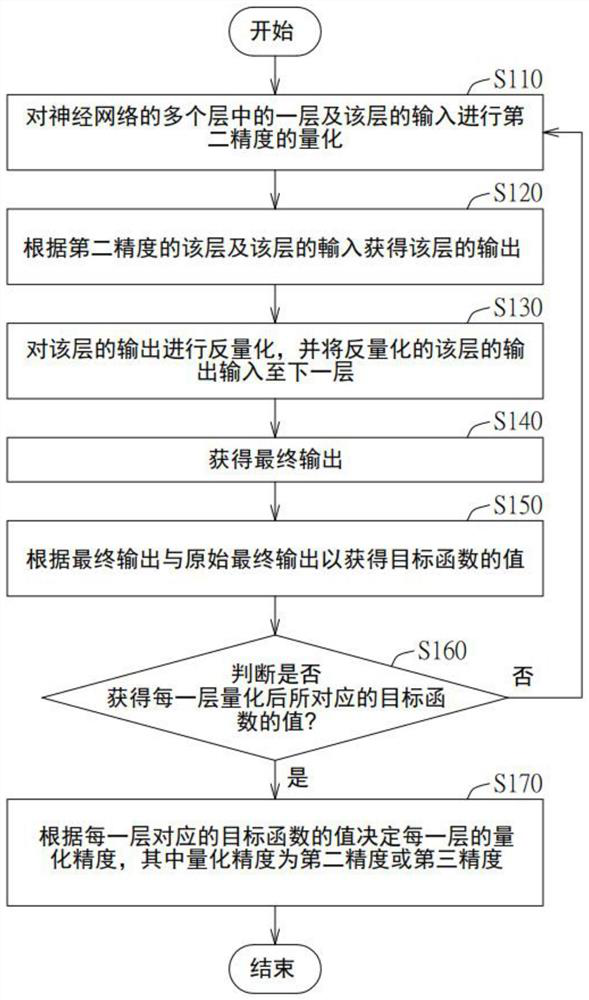

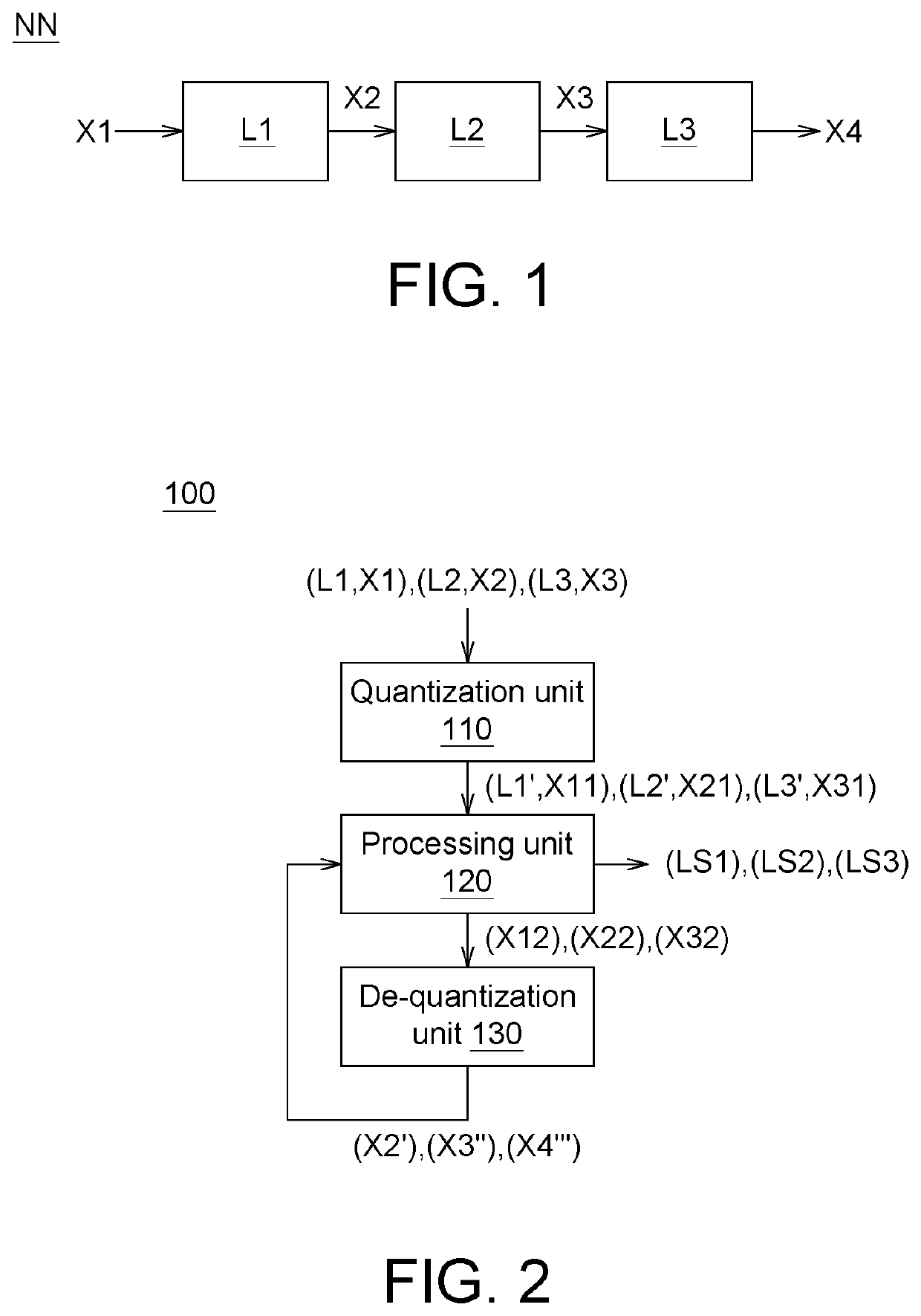

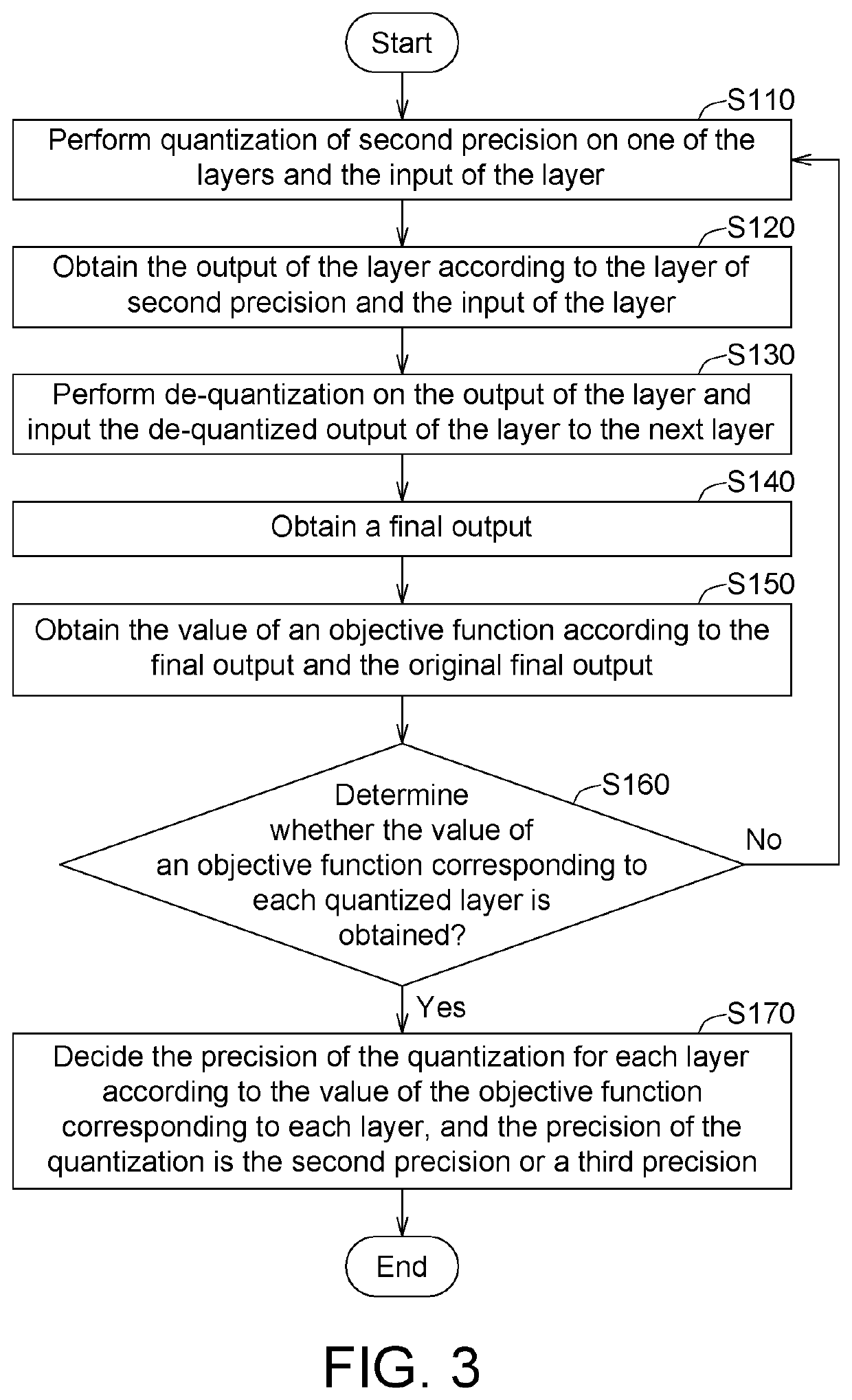

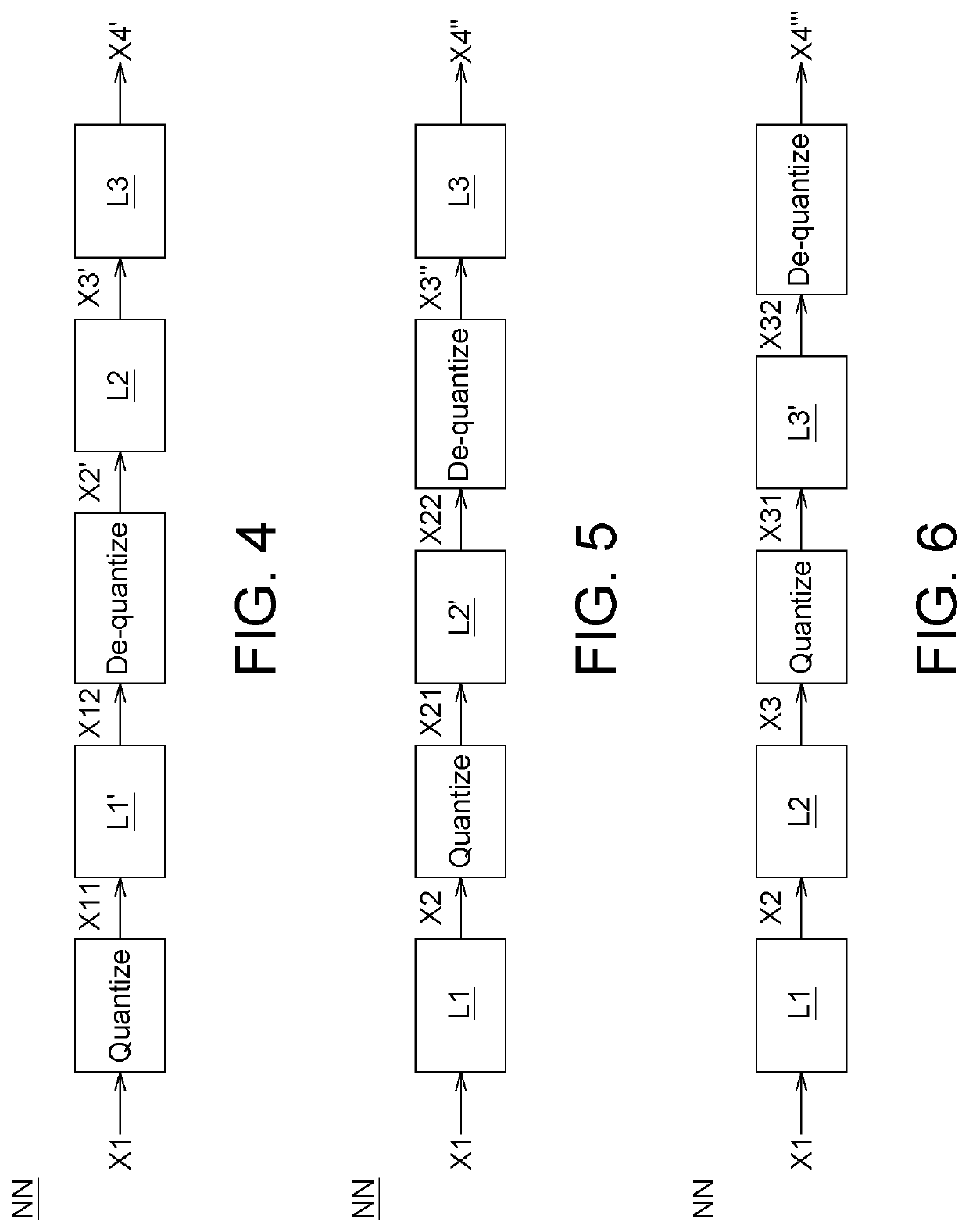

Mixed-precision quantization method for a neural network

Status: Pending | Publication No.: CN114492721A | Tags: Determine the quantization precision; Neural architectures; Physical realisation; Algorithm; Inverse quantization

A mixed-precision quantization method for a neural network that has a first precision and includes a plurality of layers and an original final output. The method comprises the following steps: quantizing one of the layers, together with its input, to a second precision; obtaining the output of the layer from the second-precision layer and its input; de-quantizing the output of the layer and feeding the de-quantized output to the next layer; obtaining a final output; computing the value of an objective function from the final output and the original final output; repeating these steps until the objective-function value corresponding to each layer has been obtained; and determining the quantization precision of each layer from its objective-function value, the quantization precision being the first, second, third or fourth precision.

Owner:BEIJING JINGSHI INTELLIGENT TECH CO LTD

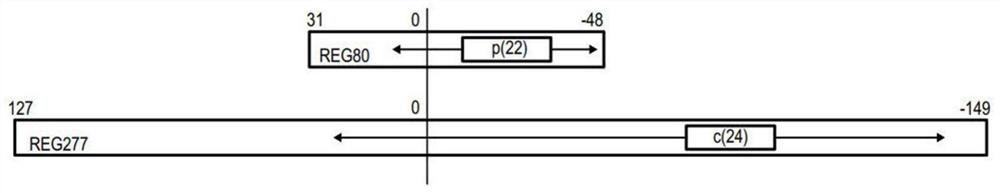

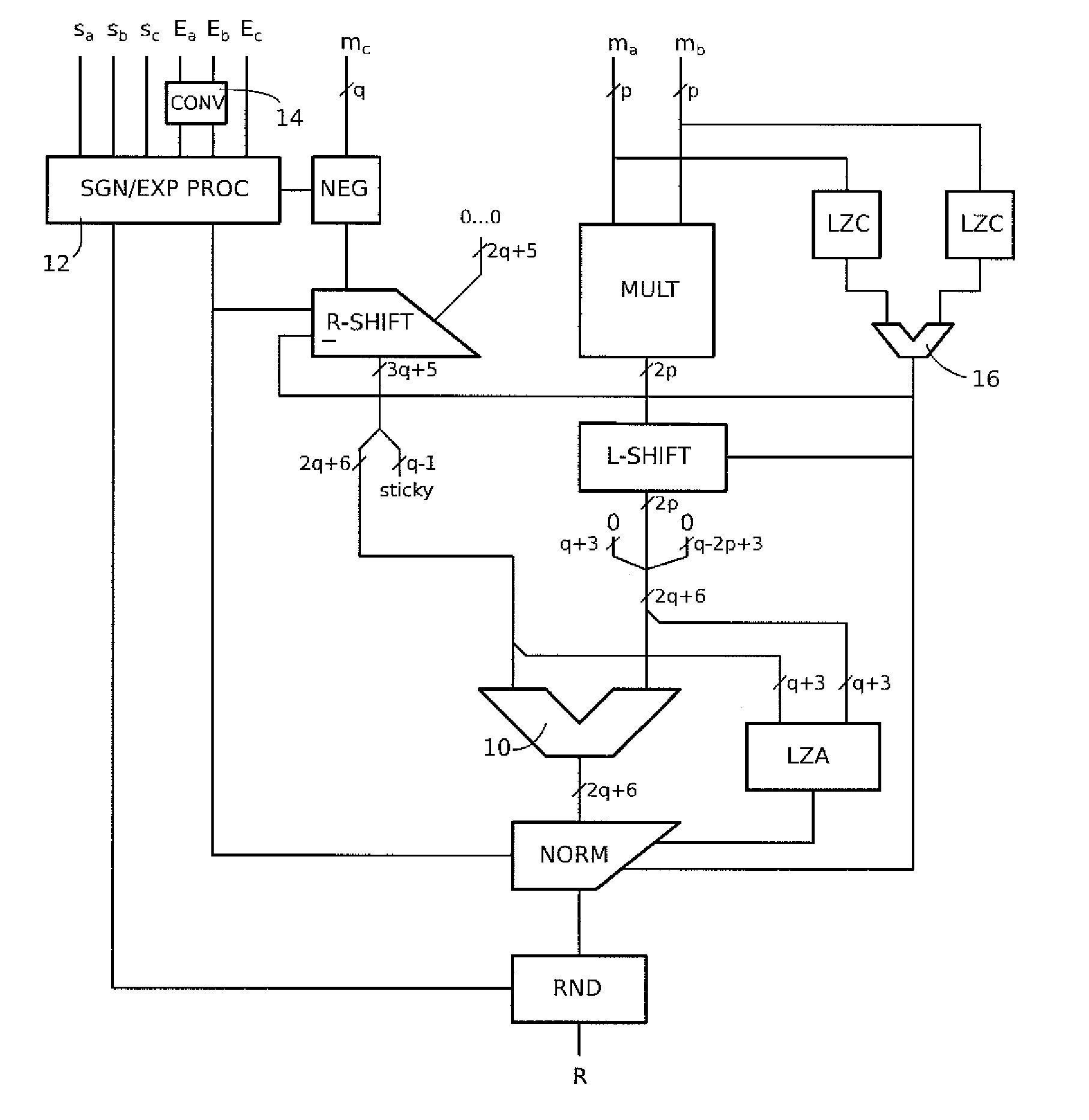

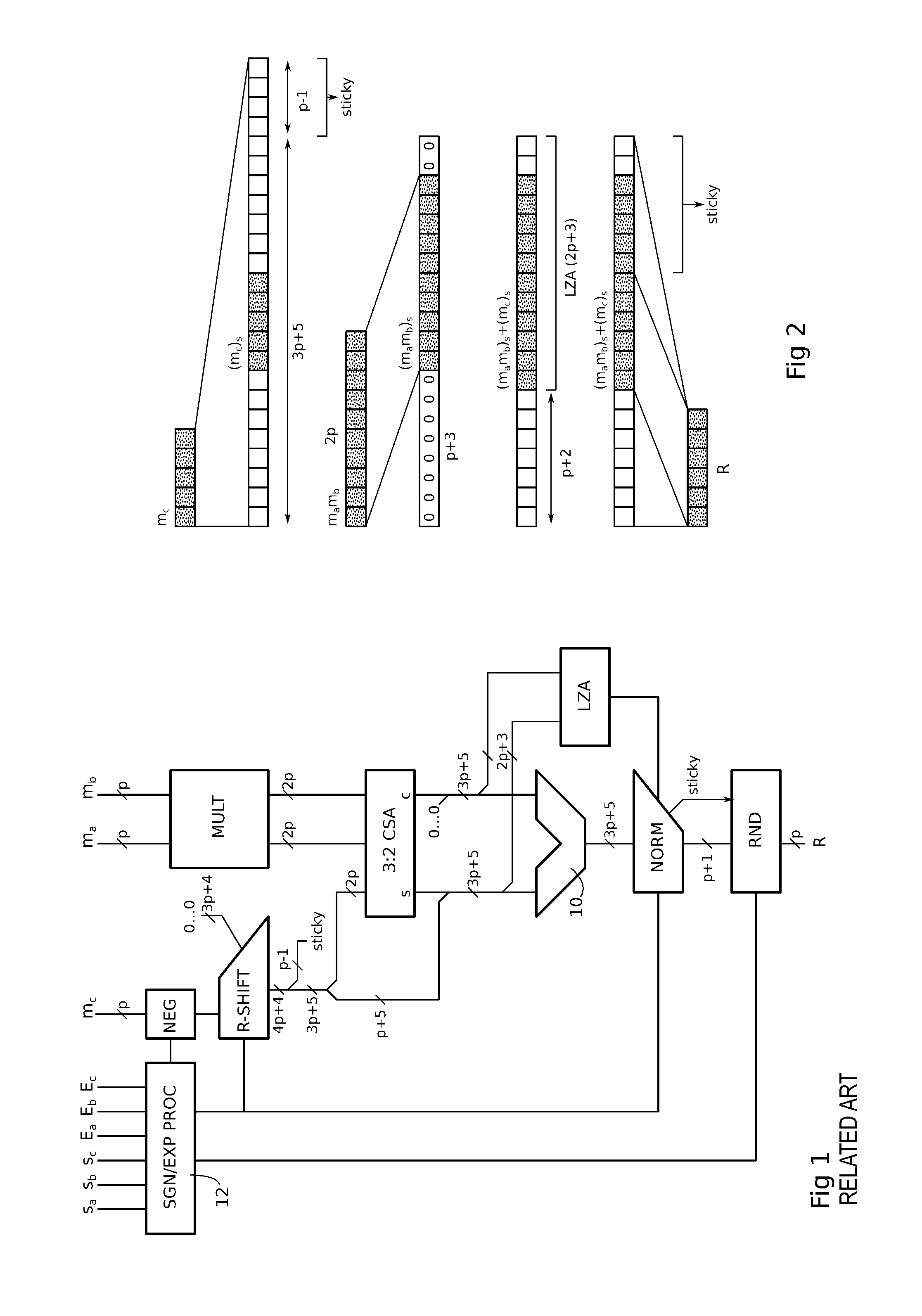

Fused multiply-add operator with correctly rounded mixed-precision floating-point numbers

A fused multiply-add hardware operator comprises: a multiplier receiving two multiplicands as floating-point numbers encoded in a first precision format; an alignment circuit associated with the multiplier, configured to convert the result of the multiplication into a first fixed-point number; and an adder configured to add the first fixed-point number and an addition operand. The addition operand is a floating-point number encoded in a second precision format, and the operator comprises an alignment circuit associated with the addition operand, configured to convert it into a second fixed-point number whose dynamic range is reduced relative to that of the addition operand, and whose number of bits equals that of the first fixed-point number extended on both sides by at least the mantissa size of the addition operand. The adder adds the first and second fixed-point numbers without loss.

Owner:KALRAY

Neural network operation method and device

Status: Pending | Publication No.: US20220269950A1 | Tags: Increase the number of; Computation using non-contact making devices; Neural architectures; Engineering; Artificial intelligence

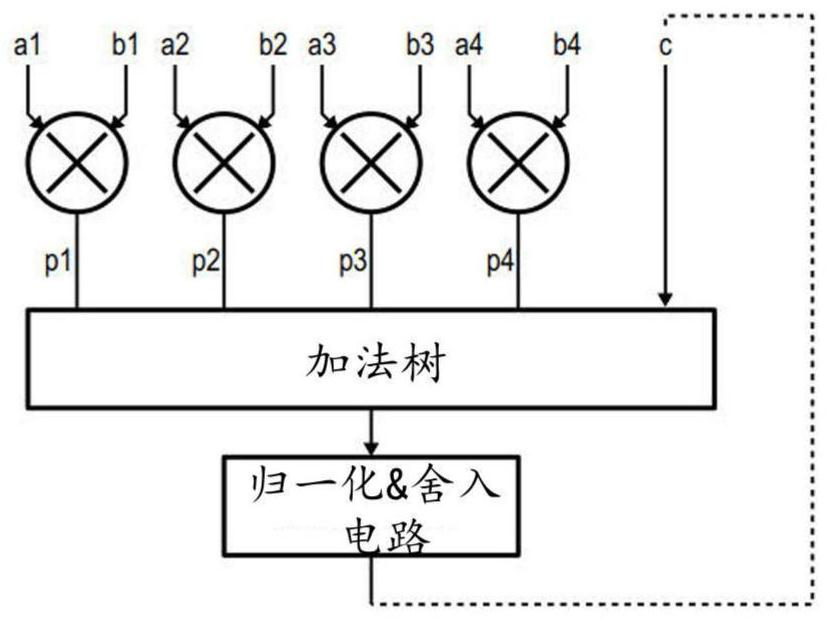

A neural network operation device includes an input feature map buffer to store an input feature map, a weight buffer to store a weight, an operator including an adder tree unit to perform an operation between the input feature map and the weight by a unit of a reference bit length, and a controller to map the input feature map and the weight to the operator to provide one or both of a mixed precision operation and data parallelism.

Owner:SAMSUNG ELECTRONICS CO LTD

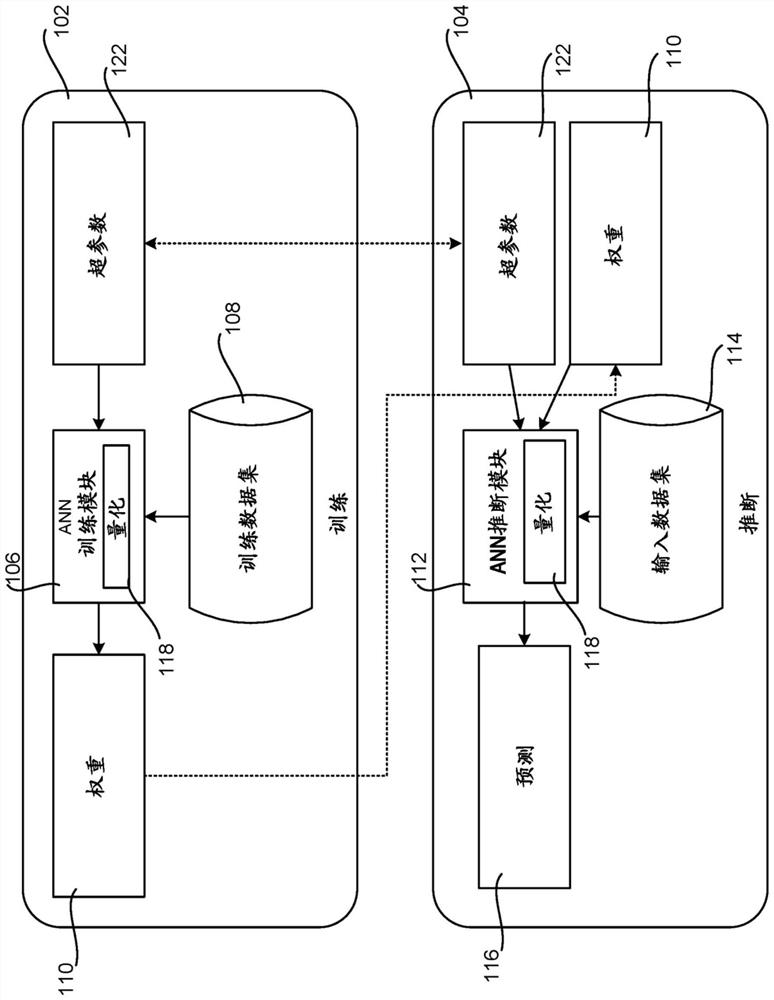

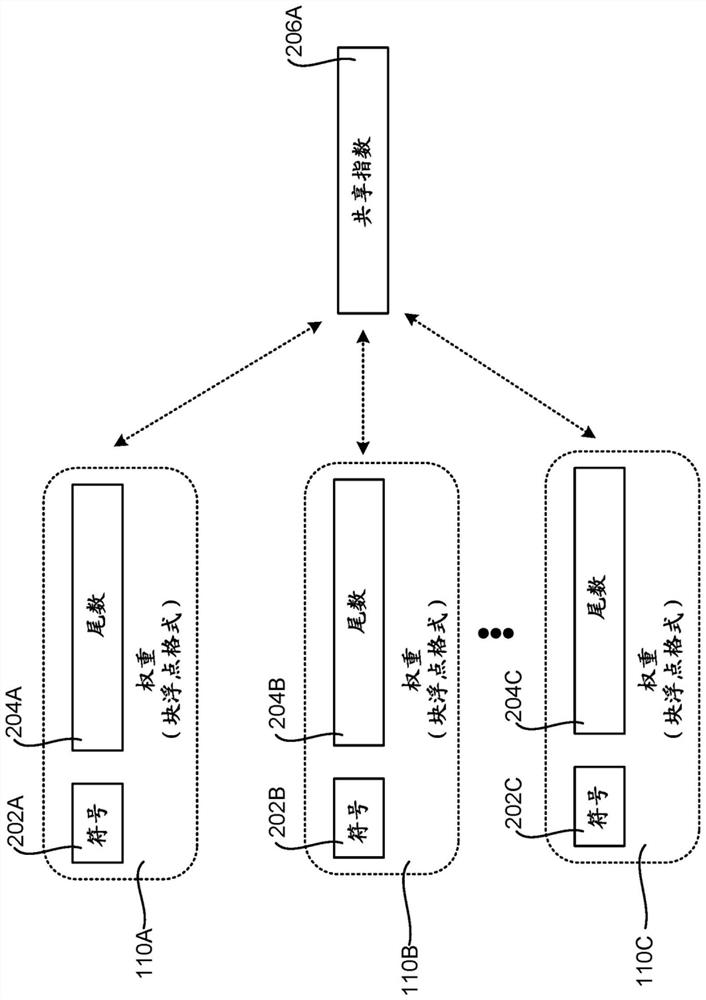

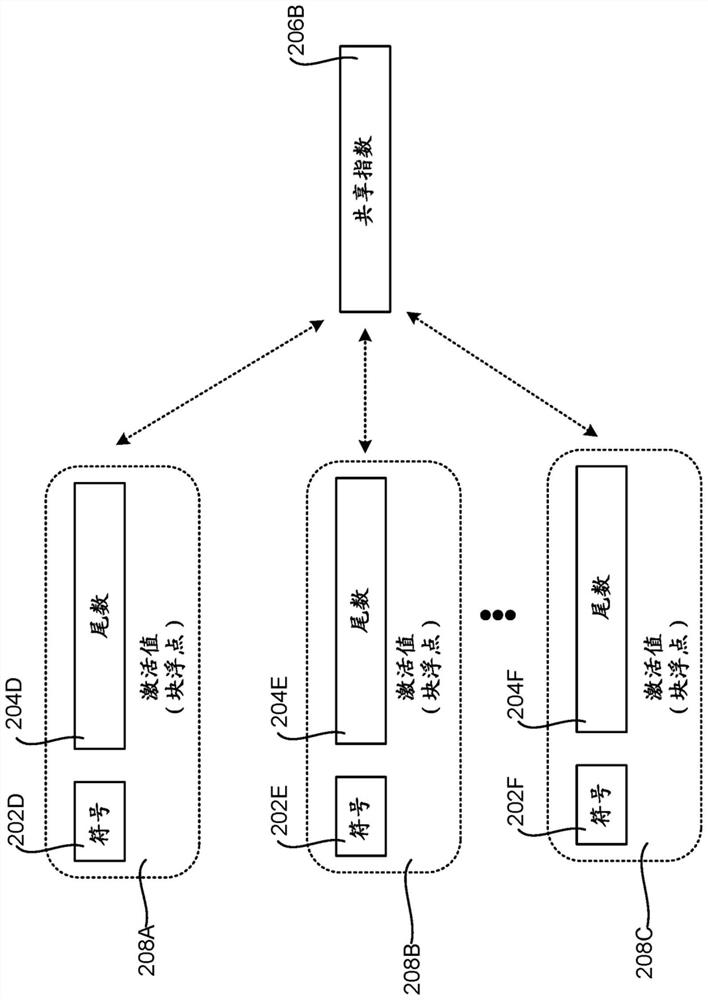

Mixed precision training of an artificial neural network

Status: Pending | Publication No.: CN113632106A | Tags: High precision; Reduce storage resources; Neural architectures; Physical realisation; Simulation; Term memory

Using mixed-precision values when training an artificial neural network (ANN) can increase performance while reducing cost. Certain portions and/or steps of an ANN may be selected to use higher- or lower-precision values during training. Additionally or alternatively, early phases of training may be accurate enough at lower precision to refine the ANN model quickly, while higher precision may be used to increase accuracy in later steps and epochs. Similarly, different gates of a long short-term memory (LSTM) may be supplied with values of different precisions.

Owner:MICROSOFT TECH LICENSING LLC

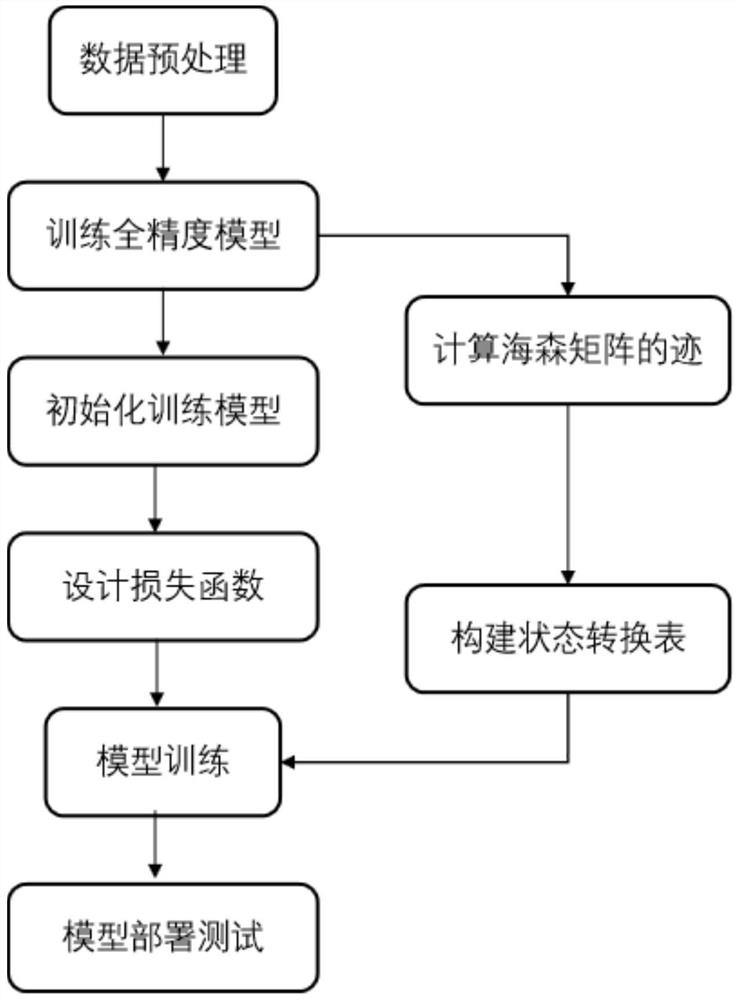

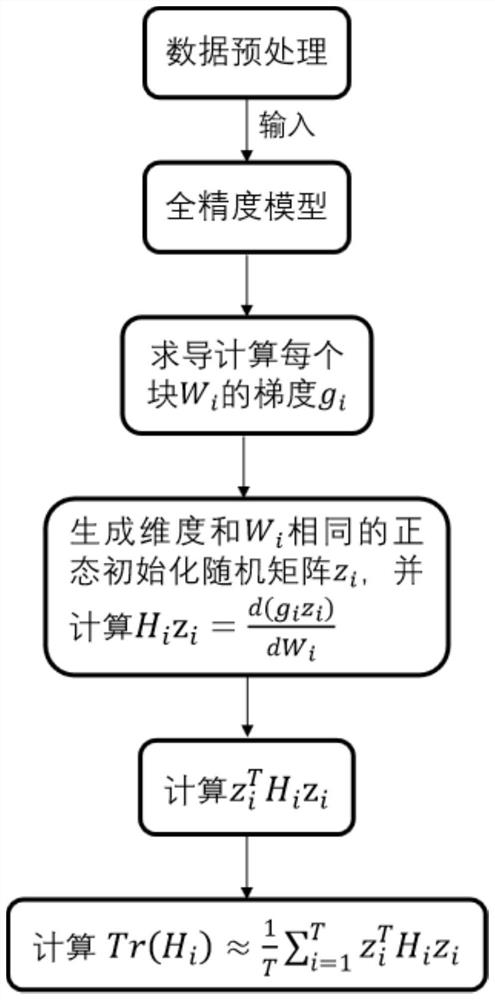

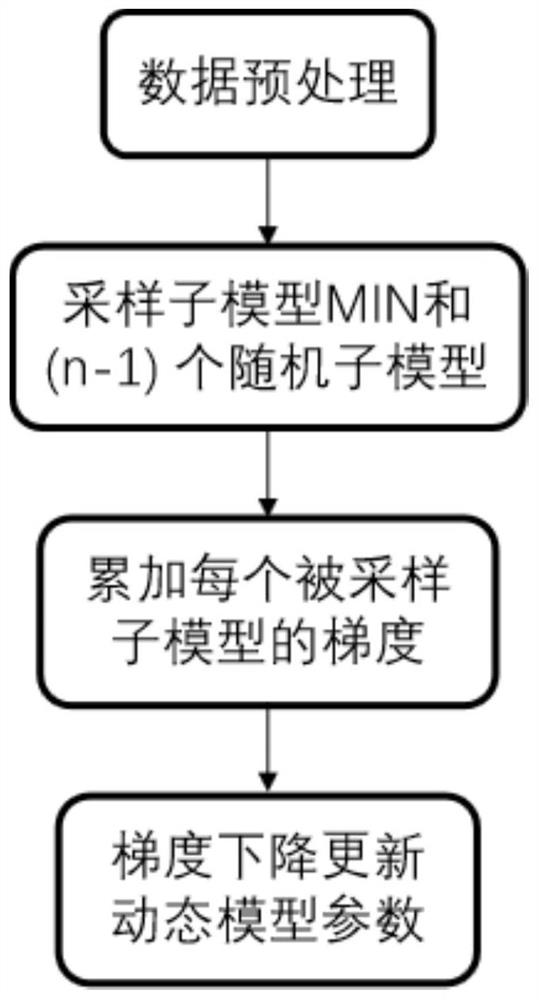

Dynamic mixed-precision model construction method and system

Status: Inactive | Publication No.: CN113076663A | Tags: Improved Adaptive Quantization Function; High precision; Design optimisation/simulation; Special data processing applications; Algorithm; Self adaptive

The invention discloses a dynamic mixed-precision model construction method and system relating to deep neural network technology. To address the limited number of switchable states and the precision problems of the prior art, the method constructs a mixed-precision state conversion table from the average trace of the Hessian matrix of the parameters of different blocks in a full-precision model and from an optional parameter precision table S; randomly samples several mixed-precision sub-models in each training iteration and quantizes them with an improved quantization function to obtain a mixed-precision model; and forms a mixed-bit deployment state table according to actual deployment requirements for deployment. Using the average Hessian trace and random sampling reduces the search space and the computation required for training. The improved quantization function can be migrated directly between different quantization bit widths with small quantization error, so the mixed-precision model can be deployed adaptively in lower-bit scenarios while also achieving higher precision in higher-bit scenarios.

Owner:SOUTH CHINA UNIV OF TECH
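
Two ingredients of this scheme can be sketched in Python: a Hutchinson-style estimate of a block's Hessian trace (the mean of v^T H v over random ±1 vectors v), divided by the block size to give an average trace, and a ranking that gives the higher bit widths of a precision table S to the more sensitive blocks. The estimator, the rank-to-precision rule and the explicit toy Hessian are assumptions for illustration; a real model would obtain Hessian-vector products through automatic differentiation, and the patent's state conversion table is not reproduced here.

```python
import random


def hutchinson_trace(hvp, dim: int, samples: int = 64, seed: int = 0) -> float:
    """Estimate trace(H) as the mean of v^T H v over random +/-1 vectors v."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(samples):
        v = [rng.choice((-1.0, 1.0)) for _ in range(dim)]
        hv = hvp(v)
        total += sum(a * b for a, b in zip(v, hv))
    return total / samples


def matrix_hvp(h: list[list[float]]):
    """Hessian-vector product for an explicit (toy) Hessian matrix."""
    return lambda v: [sum(r * x for r, x in zip(row, v)) for row in h]


def average_trace(h: list[list[float]], samples: int = 64) -> float:
    """Estimated Hessian trace divided by the number of parameters in the block."""
    return hutchinson_trace(matrix_hvp(h), len(h), samples) / len(h)


def assign_bits(avg_traces: list[float], precision_table: list[int]) -> list[int]:
    """Give higher bit widths from the table to blocks with larger average trace."""
    order = sorted(range(len(avg_traces)), key=lambda i: avg_traces[i], reverse=True)
    table = sorted(precision_table, reverse=True)
    bits = [0] * len(avg_traces)
    for rank, block in enumerate(order):
        bits[block] = table[rank * len(table) // len(order)]
    return bits
```

For a diagonal Hessian the estimate is exact, since every ±1 probe returns the sum of the diagonal; with the table S = [2, 4, 8], blocks with average traces [0.1, 5.0, 1.0] would be assigned [2, 8, 4] bits.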

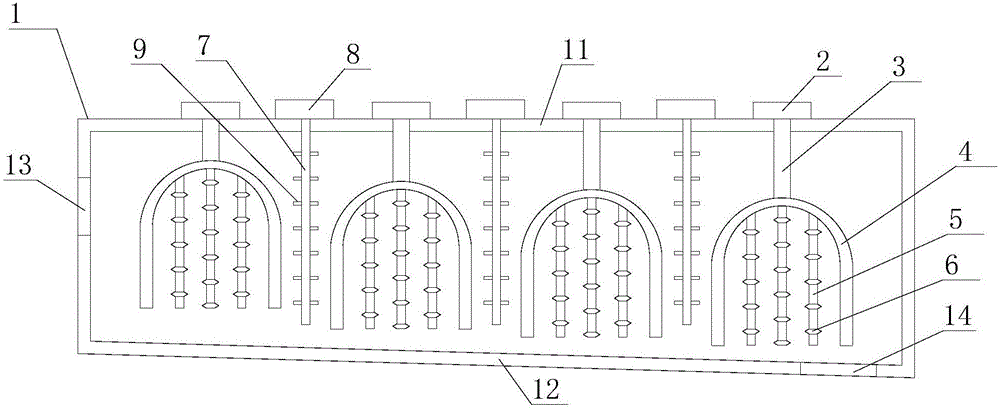

Continuous mixing equipment for poultry feed

Status: Inactive | Publication No.: CN106799179A | Tags: Stir well; Stirring and mixing with high precision; Feeding-stuff; Rotary stirring mixers; Engineering; Continuous mixing

The invention provides continuous mixing equipment for poultry feed. The equipment comprises a stirring tank and a plurality of stirring devices. A feed inlet and a discharge outlet are formed in the stirring tank, and the stirring devices are arranged inside the tank with a gap between adjacent devices. Each stirring device consists of an inverted-U-shaped frame, a motor and a vertical rotating shaft; a plurality of mutually parallel stirring bars are spaced along the inner side of the frame. The motor is mounted on the top plate of the stirring tank and drives the rotating shaft, and the inverted-U-shaped frame is connected to the shaft and rotates with it. The feed is stirred, crushed and mixed by the frame and the stirring bars, so it is mixed evenly, with high mixing precision, high mixing speed and high product quality.

Owner:宿松县华图生物科技有限公司

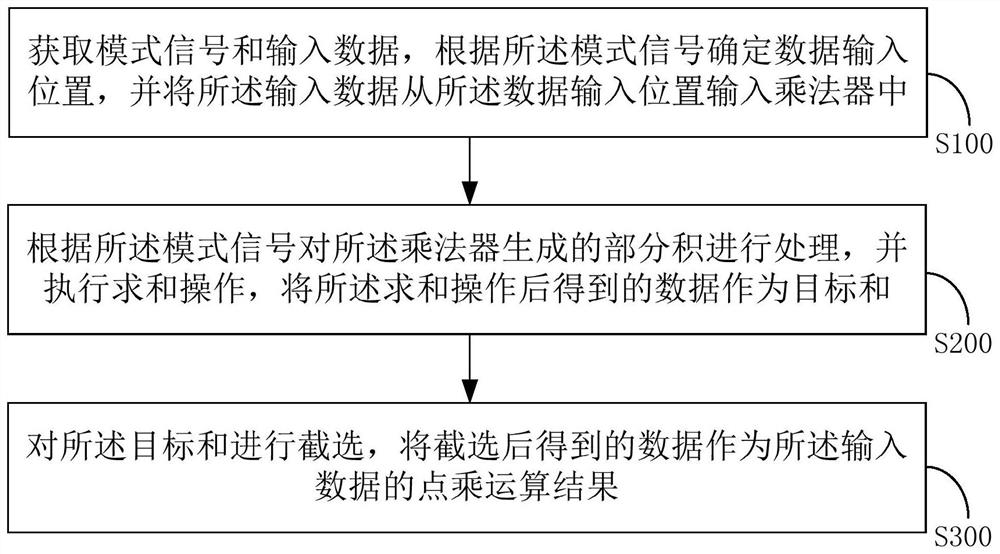

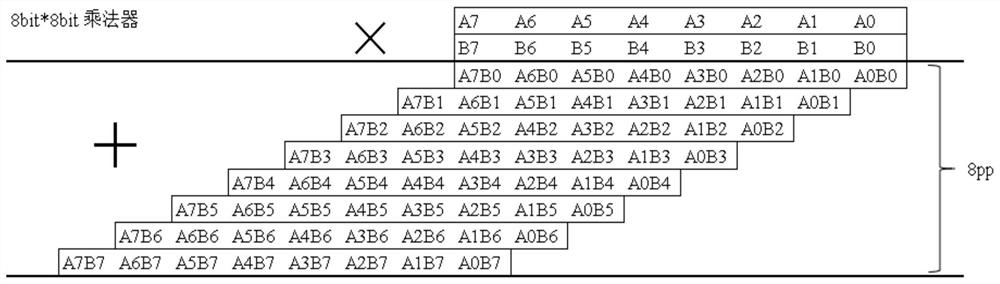

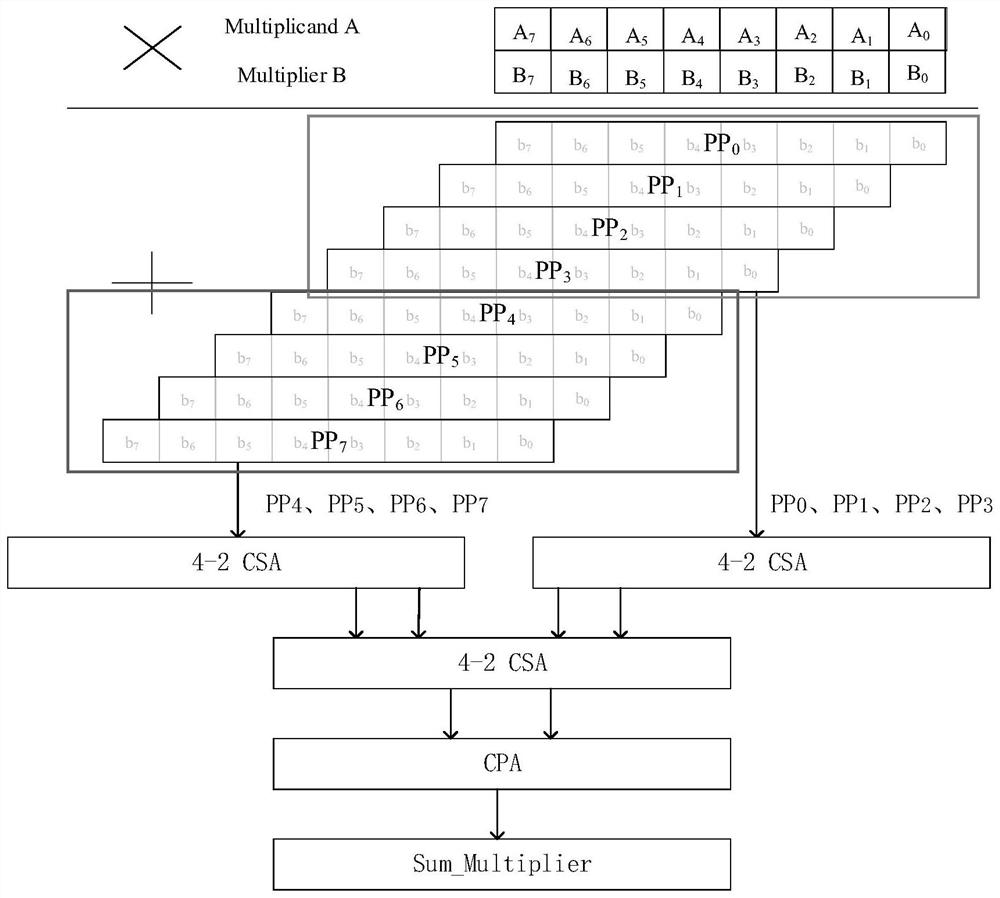

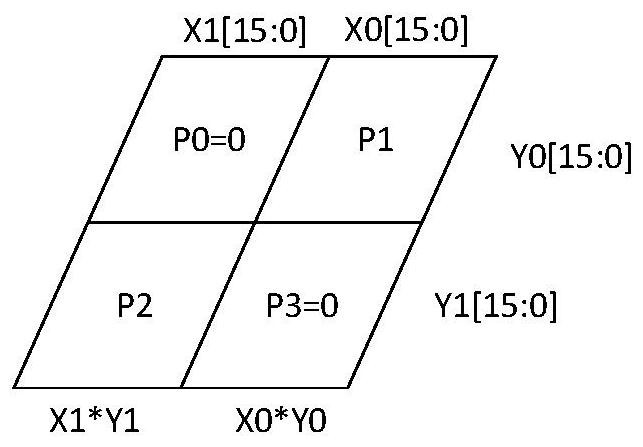

Fixed-point multiplication and addition operation unit and method suitable for mixed precision neural network

Status: Active | Publication No.: CN113010148A | Tags: Solve problems such as excessive overhead and redundant idle resources; Digital data processing details; Biological neural network models; Binary multiplier; Algorithm

The invention discloses a fixed-point multiply-add operation unit and method for mixed-precision neural networks. The method comprises: feeding input data of different precisions into a multiplier at different positions; controlling the multiplier, according to a mode signal, to mask the partial products of a designated region and then output the partial-product generation part; and summing the output partial products in the manner corresponding to each precision, thereby performing mixed-precision dot-product operations. With this invention, the dot-product operations of a mixed-precision neural network can be performed by a single multiplier, which solves the prior-art problems of excessive hardware overhead and redundant idle resources caused by needing several processing units of different precisions to handle mixed-precision operations.

Owner:SOUTH UNIVERSITY OF SCIENCE AND TECHNOLOGY OF CHINA
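
The masking idea can be shown on an 8x8-bit unsigned array multiplier that doubles as two independent 4x4-bit multipliers. In the 4-bit mode the partial-product bits that mix the low nibble of one operand with the high nibble of the other are suppressed, so the low byte of the sum holds the low-nibble product and the high byte holds the high-nibble product. The mode names, the unsigned operands and the nibble split are assumptions for illustration; the patent's actual region layout and summation scheme may differ.

```python
def masked_multiply(a: int, b: int, mode: str = "int8"):
    """Sum an 8x8 partial-product array, masking cross regions in 'int4x2' mode."""
    if mode not in ("int8", "int4x2"):
        raise ValueError(f"unknown mode: {mode}")
    total = 0
    for i in range(8):          # bit i of b selects partial-product row i
        for j in range(8):      # bit j of a within that row
            if mode == "int4x2" and (i < 4) != (j < 4):
                continue        # shield partial products that mix low and high nibbles
            bit = ((a >> j) & 1) & ((b >> i) & 1)
            total += bit << (i + j)
    if mode == "int8":
        return total
    # Low-nibble product is at most 15 * 15 = 225, so it never spills into the high byte.
    return total & 0xFF, total >> 8  # (a_lo * b_lo, a_hi * b_hi)
```

The same array therefore yields one 8-bit product or two 4-bit products, selected only by which partial-product regions are shielded.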

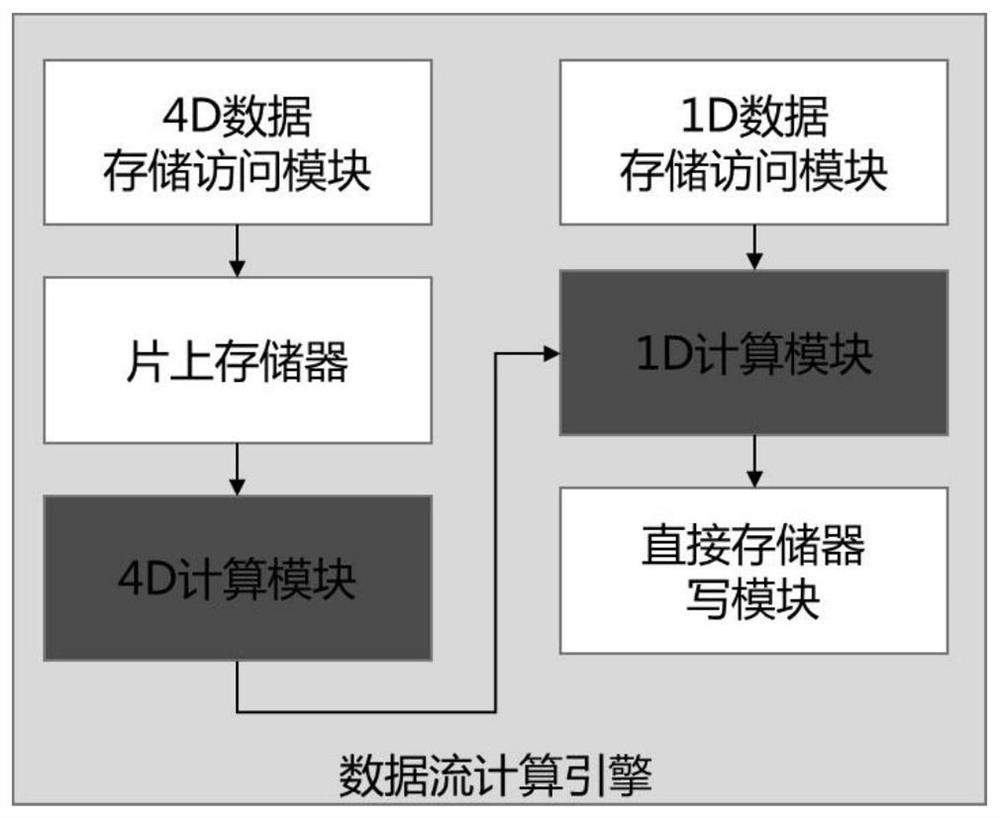

Hybrid precision arithmetic unit applied to reconfigurable array driven by data flow

Status: Pending | Publication No.: CN114047903A | Tags: Reduce the number; Reduce the number of memory accesses; Digital data processing details; Physical realisation; Data stream; Cell design

The invention discloses a hybrid-precision arithmetic unit for a reconfigurable array driven by data flow, in the field of arithmetic unit design. The arithmetic unit supports hybrid precision and multiple working modes. Compared with existing arithmetic units, it is an energy-efficient fixed-point unit that supports multiple specifications and hybrid precision for general compute-intensive applications, with working modes selected as required. This low-power, low-overhead hybrid-precision arithmetic unit, combined with a suitable data-flow scheduling scheme, solves the problems of low resource utilization and precision loss that arise when the fixed-specification computing units of a coarse-grained reconfigurable array run neural-network workloads in low-precision modes, and thereby substantially improves the reconfigurable array's performance on neural-network applications.

Owner:SHANGHAI JIAO TONG UNIV

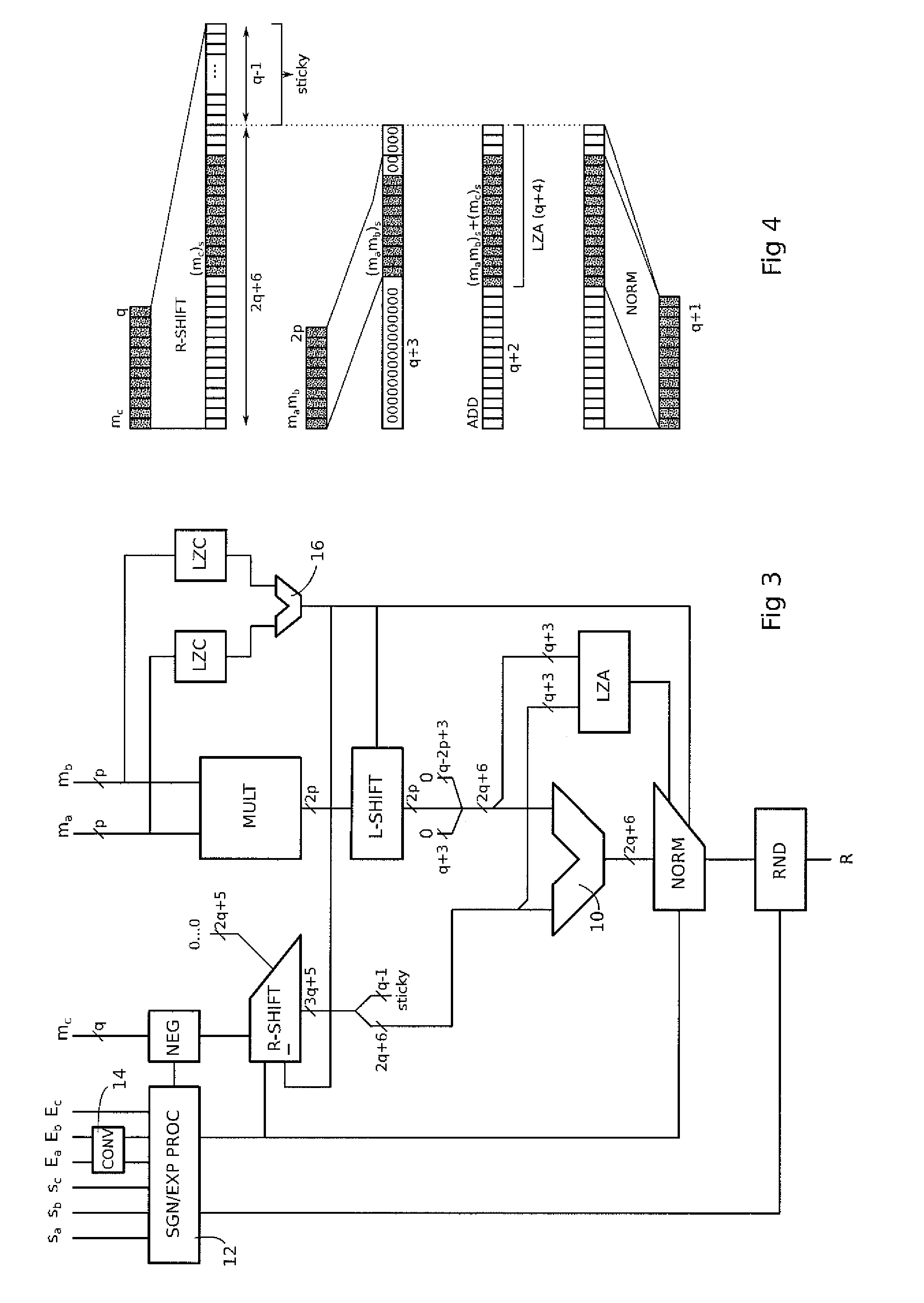

Mixed precision fused multiply-add operator

Status: Active | Publication No.: US9367287B2 | Tags: Less complex; Computation using denominational number representation; Binary multiplier; Floating point

A circuit is provided for calculating the fused sum of an addend and the product of two multiplication operands, where the addend and multiplication operands are binary floating-point numbers represented in a standardized format as a mantissa and an exponent. The multiplication operands are in a lower-precision format than the addend, with q > 2p, where p and q are the mantissa sizes of the multiplication-operand and addend precision formats, respectively. The circuit includes a p-bit multiplier receiving the mantissas of the multiplication operands; a shift circuit that aligns the mantissa of the addend with the product output by the multiplier, based on the exponents of the addend and the multiplication operands; and an adder that processes q-bit mantissas and receives the aligned addend mantissa and the product, with the adder's product input lines padded on the right with zeros to form a q-bit mantissa.

Owner:KALRAY
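
The q > 2p condition is what makes the product exact. Python can illustrate it with binary16 multiplicands (p = 11) and a binary64 addend (q = 53): the product of two binary16 values has at most 22 significant bits, so computing it in binary64 loses nothing, and the only rounding is the final addition, which therefore yields the correctly rounded fused result. The sketch compares this against exact rational arithmetic from `fractions`; the function names are illustrative, and Python floats stand in for the hardware datapath.

```python
import struct
from fractions import Fraction


def to_fp16(x: float) -> float:
    """Round x to the nearest IEEE 754 binary16 value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def mixed_fma(a16: float, b16: float, c64: float) -> float:
    """a16 * b16 + c64 with binary16 multiplicands and a binary64 addend."""
    product = a16 * b16  # exact: at most 2 * 11 = 22 significant bits, well under 53
    return product + c64  # the single rounding step


def exact_fma(a: float, b: float, c: float) -> float:
    """Reference: exact rational result, rounded once to binary64."""
    return float(Fraction(a) * Fraction(b) + Fraction(c))
```

For example, with a = b = 1 + 2^-10 and c = -1, `mixed_fma` returns 2^-9 + 2^-20 exactly, whereas rounding the product to binary16 before the addition would leave only 2^-9.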

Mixed-precision quantization method for neural network

A mixed-precision quantization method for a neural network is provided. The neural network has a first precision and includes several layers and an original final output. For a particular layer, the layer and its input are quantized to a second precision. An output of the particular layer is obtained from the second-precision layer and the input. The output of the particular layer is de-quantized, and the de-quantized output is fed to the next layer to obtain a final output. A value of an objective function is obtained from the final output and the original final output. These steps are repeated until the objective-function value of each layer is obtained. The quantization precision of each layer is then decided from its objective-function value, and is one of the first to fourth precisions.

Owner:BEIJING JINGSHI INTELLIGENT TECH CO LTD
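
The per-layer search can be sketched on a tiny fully connected ReLU network in plain Python. One layer and its input at a time are quantized to a symmetric grid and immediately de-quantized (computing with de-quantized values stands in for integer compute followed by de-quantization), the rest of the network runs unchanged, and the mean squared error against the original final output serves as the objective. The candidate bit widths (4, 8, 16), the tolerance rule for picking a precision and all names are assumptions; `None` stands for keeping the original (first) precision.

```python
def fake_quant(values, bits):
    """Quantize to a symmetric `bits`-bit grid and immediately de-quantize."""
    peak = max((abs(v) for v in values), default=0.0)
    if peak == 0.0:
        return list(values)
    step = peak / (2 ** (bits - 1) - 1)
    return [round(v / step) * step for v in values]


def quant_matrix(w, bits):
    """Per-tensor fake quantization of a weight matrix."""
    cols = len(w[0])
    flat = fake_quant([v for row in w for v in row], bits)
    return [flat[r * cols:(r + 1) * cols] for r in range(len(w))]


def forward(layers, x, quant_layer=None, bits=None):
    """Run a ReLU MLP; optionally quantize one layer and its input to `bits` bits."""
    for idx, w in enumerate(layers):
        if idx == quant_layer:
            w, x = quant_matrix(w, bits), fake_quant(x, bits)
        x = [max(0.0, sum(wi * xi for wi, xi in zip(row, x))) for row in w]
    return x


def objective(layers, x, layer_idx, bits):
    """Mean squared error between the final output and the original final output."""
    ref = forward(layers, x)
    out = forward(layers, x, layer_idx, bits)
    return sum((o - r) ** 2 for o, r in zip(out, ref)) / len(ref)


def choose_precisions(layers, x, candidates=(4, 8, 16), tol=1e-4):
    """Per layer, pick the lowest candidate bit width within tol; None keeps the original precision."""
    chosen = []
    for idx in range(len(layers)):
        ok = [b for b in sorted(candidates) if objective(layers, x, idx, b) <= tol]
        chosen.append(ok[0] if ok else None)
    return chosen
```

Because each layer is probed in isolation, the cost is one forward pass per layer per candidate, which is what keeps this kind of search cheap compared with trying every combination of precisions.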

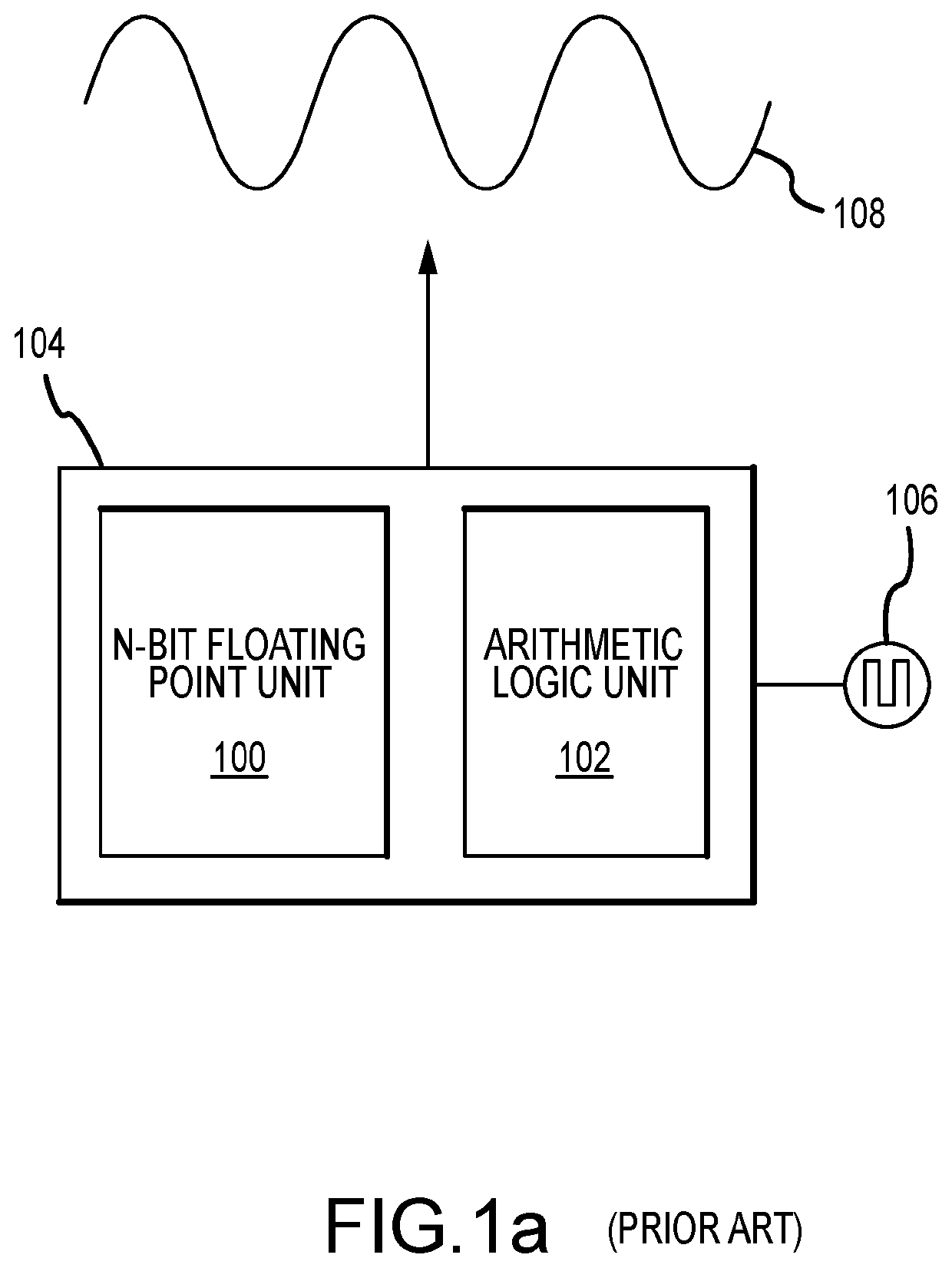

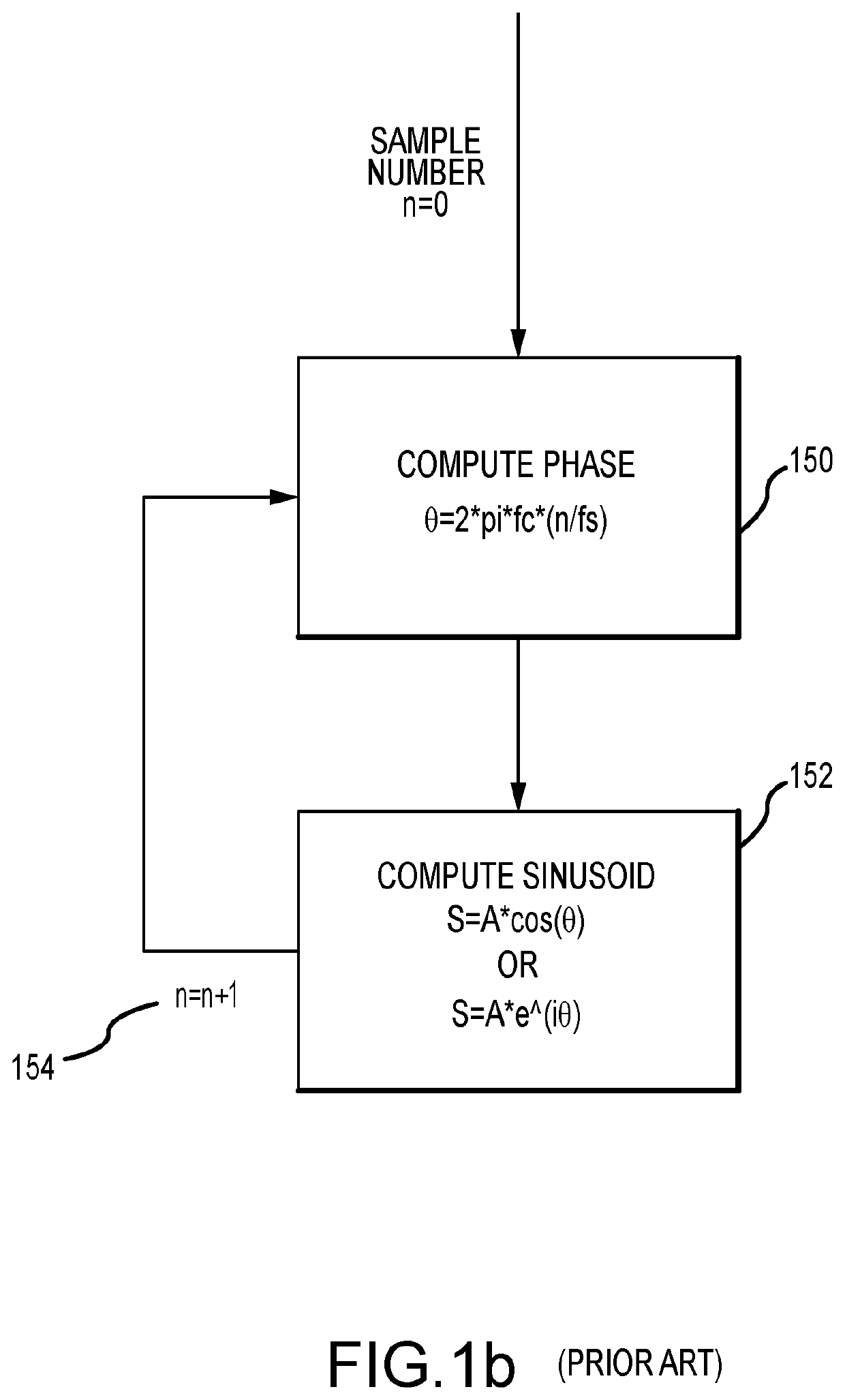

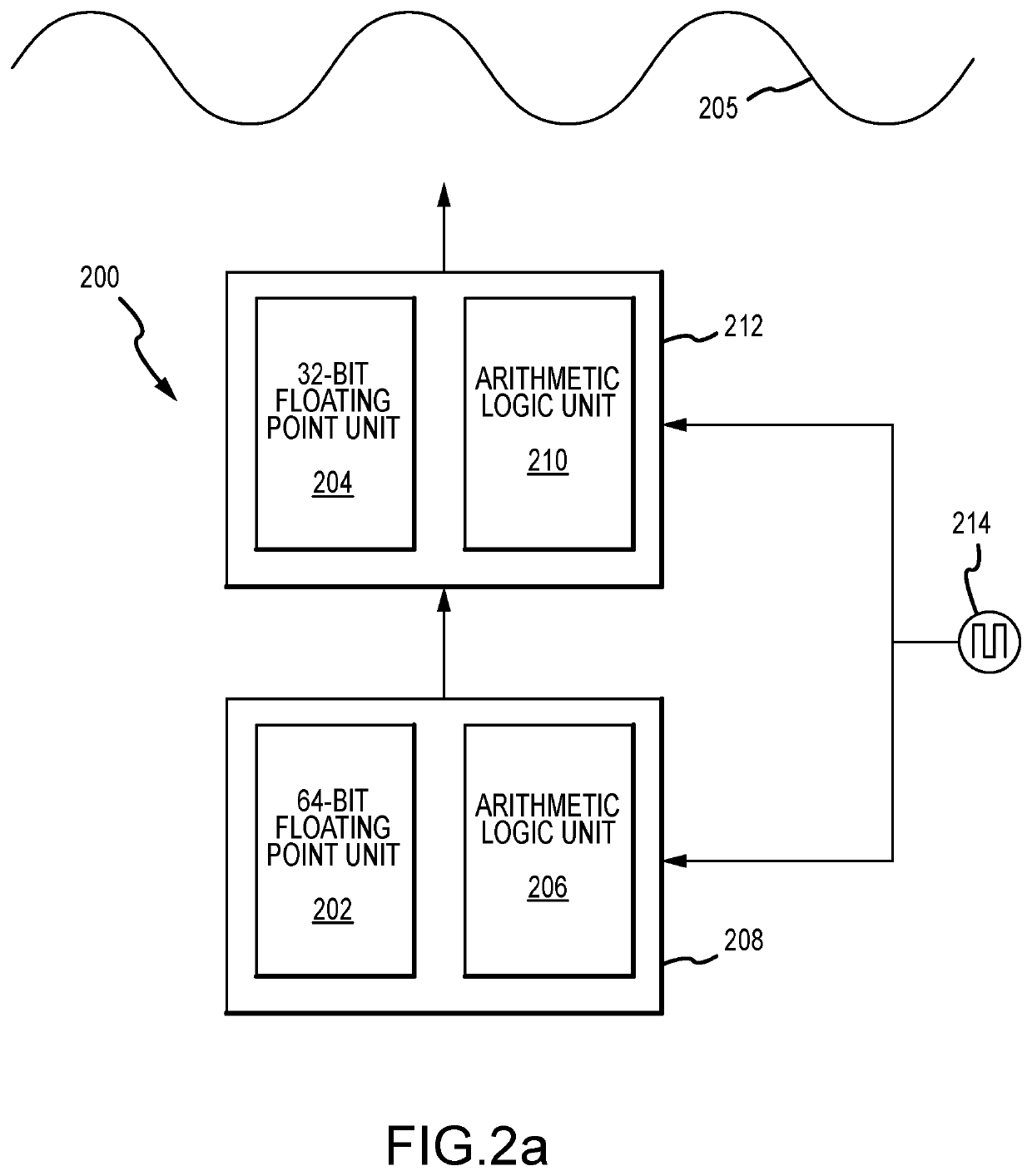

Computationally efficient mixed precision floating point waveform generation

Status: Active | Publication No.: US20200409660A1 | Tags: Pointing accurately; Reducing generally unacceptable loss; Interprogram communication; Concurrent instruction execution; Machine epsilon; Single-precision floating-point format

Computationally efficient mixed-precision floating-point waveform generation takes advantage of the high speed of generating waveforms with single-precision floating-point numbers, while reducing the generally unacceptable precision loss of pure single-precision floating point, and can generate any waveform that repeats every 2π. The approach computes a reference phase in double precision as the phase modulo 2π, and then computes offsets from that reference in single precision. The double-precision reference phase is recomputed as needed, depending on how quickly the phase grows and how large a machine epsilon is desired.

Owner:RAYTHEON CO
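
The reference-plus-offset scheme can be sketched in Python, emulating single precision with the `struct` module's `'f'` format. The sketch generates a sine wave far into a long run, keeps a double-precision reference phase reduced modulo 2π that is refreshed every `refresh_every` samples, and adds a single-precision offset; a pure single-precision version is included for comparison. The refresh interval, the choice of sine as the periodic waveform and the function names are assumptions for illustration.

```python
import math
import struct

TWO_PI = 2.0 * math.pi


def f32(x: float) -> float:
    """Round to IEEE 754 single precision (emulates float32 storage)."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def mixed_precision_sine(step: float, start: int, count: int, refresh_every: int = 1024) -> list[float]:
    """Samples sin(step * n): double-precision reference phase plus single-precision offsets."""
    out = []
    ref_index, ref_phase = start, 0.0
    for n in range(start, start + count):
        if (n - start) % refresh_every == 0:
            ref_index = n
            ref_phase = math.fmod(step * n, TWO_PI)  # double precision, reduced modulo 2*pi
        offset = f32(f32(step) * f32(n - ref_index))  # single-precision offset from the reference
        phase = f32(f32(ref_phase) + offset)          # small single-precision phase
        out.append(f32(math.sin(phase)))
    return out


def single_precision_sine(step: float, start: int, count: int) -> list[float]:
    """Pure single-precision phase accumulation, for comparison."""
    return [f32(math.sin(f32(f32(step) * f32(n)))) for n in range(start, start + count)]
```

For a 1 kHz tone sampled at 48 kHz starting at sample 10,000,000, the pure single-precision phase is near 1.3 × 10^6 rad, where float32 values are spaced 0.125 rad apart; the mixed version only ever handles phases below roughly 2π plus the offset span, so its error stays far smaller.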

Computer processor for higher precision computations using a mixed-precision decomposition of operations

Status: Active | Publication No.: US20210089303A1 | Tags: Instruction analysis; Computation using non-contact making devices; Engineering; Floating point

Owner:INTEL CORP

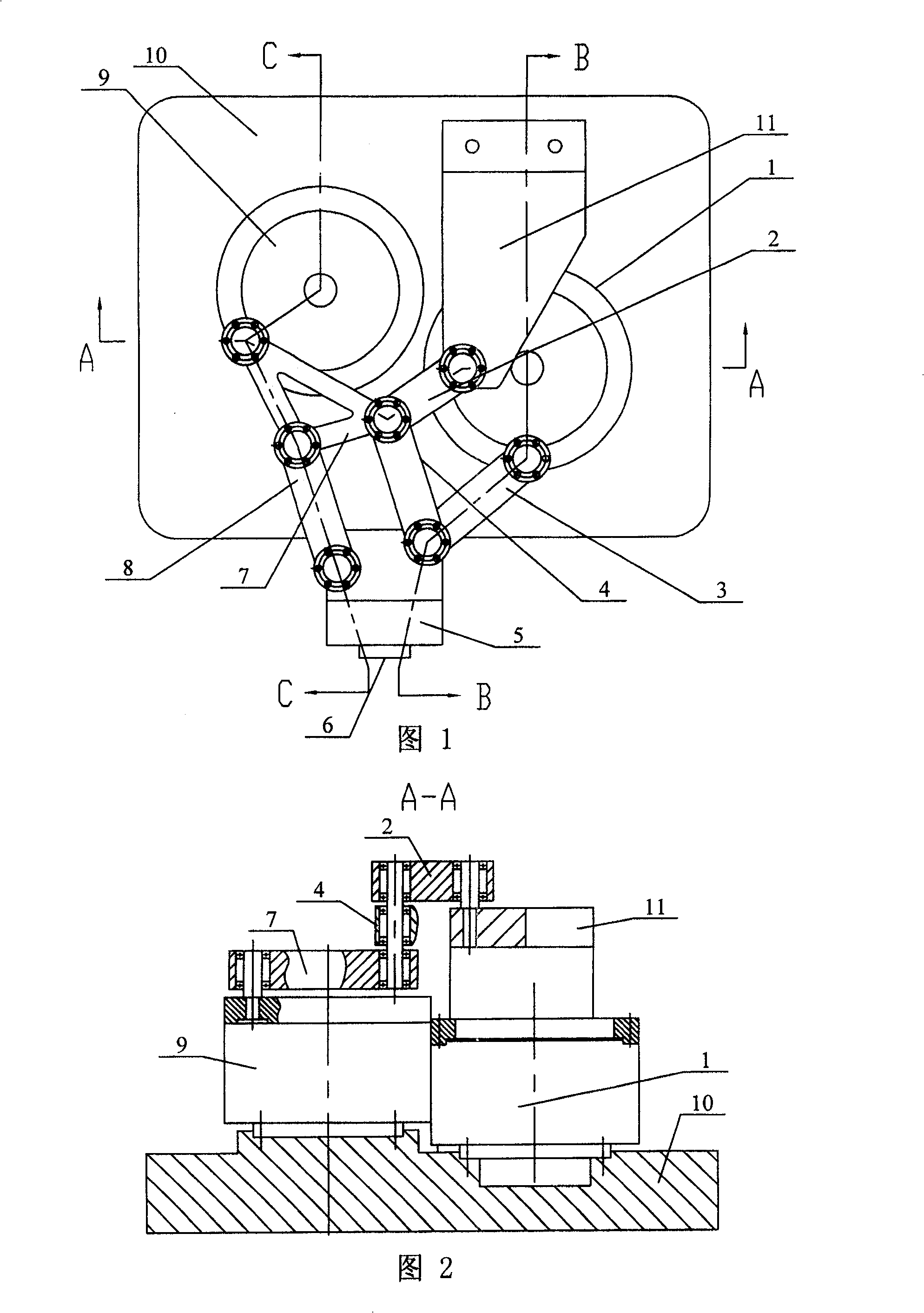

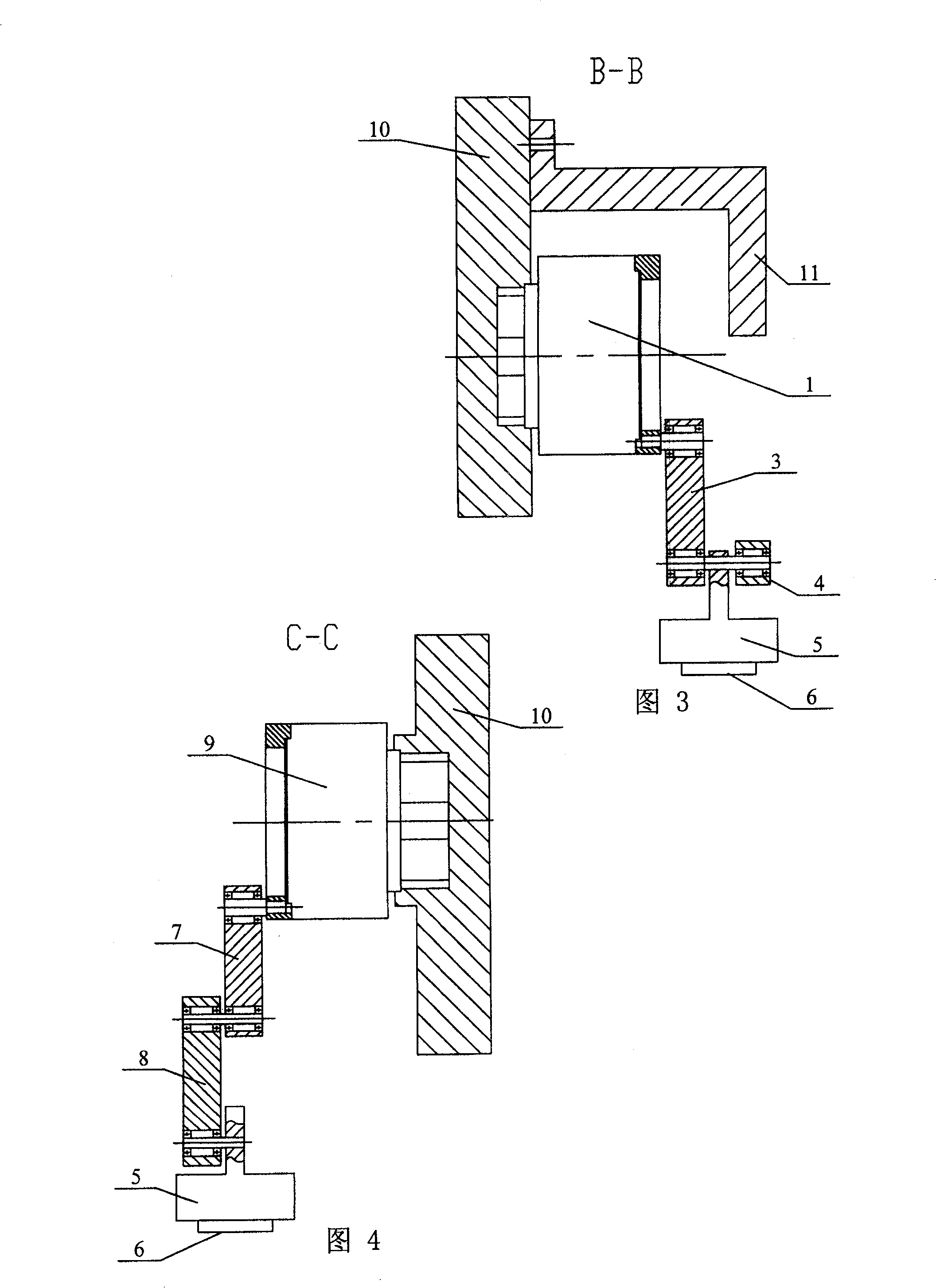

Direct-drive three-degree-of-freedom serial-parallel hybrid precision positioning mechanism

Status: Inactive | Publication No.: CN100478133C | Tags: Compact structure; High positioning accuracy; Instrumental components; Metal working apparatus; Marine engineering; Computer module

The positioning mechanism comprises the following parts and connections: a bent plate is fixed to one side of the machine base, and the bases of the No. 1 and No. 2 motors are fixed to the machine base; one end of the No. 1 connecting rod is hinged to the bent plate, and its other end is connected to one end of each of the No. 3 and No. 4 connecting rods; one end of the No. 2 connecting rod is hinged to the output end of the No. 1 motor, and the other ends of the No. 3 and No. 2 connecting rods are hinged to the output module, from top to bottom respectively. The invention also includes a No. 5 connecting rod and further connections. A linear motion module is fixed to the output module. Advantages: compact structure, high positioning accuracy and good dynamic performance.

Owner:HARBIN INST OF TECH

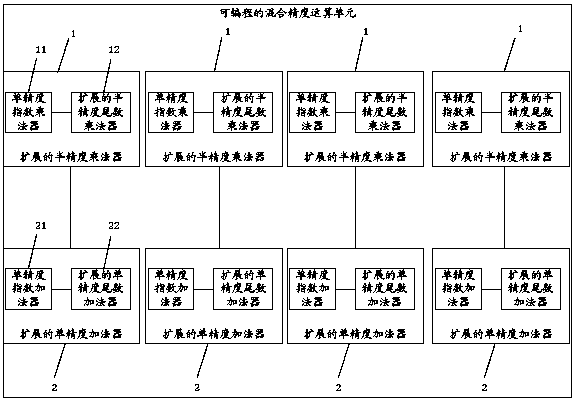

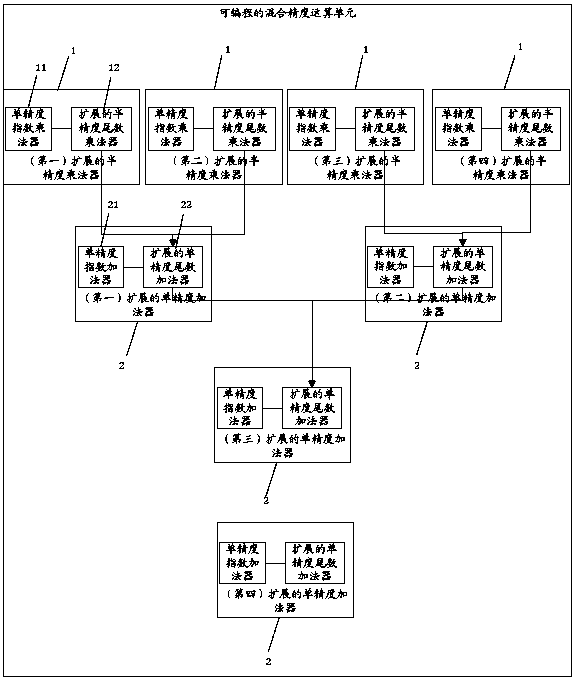

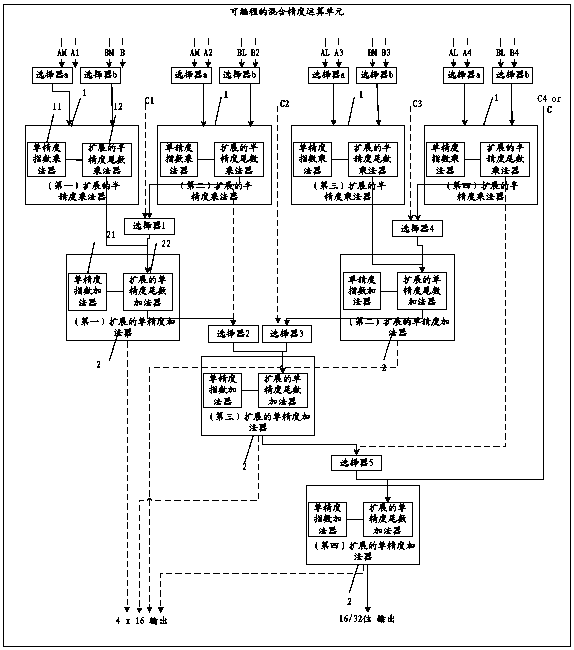

Programmable mixed-precision arithmetic unit

Status: Active | Publication No.: CN109634558B | Tags: Improve energy efficiency ratio; Computation using non-contact making devices; High energy; Floating point

The invention provides a programmable mixed-precision arithmetic unit that supports floating-point or fixed-point multiplication and/or addition at various precisions. It can perform several low-precision operations concurrently or combine its resources into a single high-precision operation, which gives it a relatively high energy-efficiency ratio.

Owner:上海燧原科技有限公司

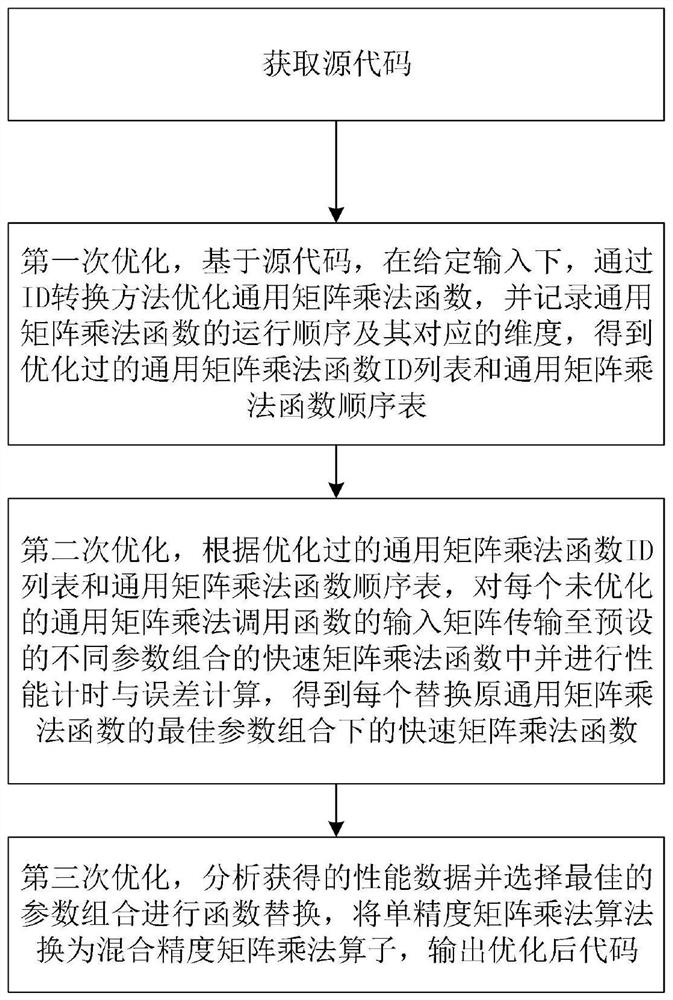

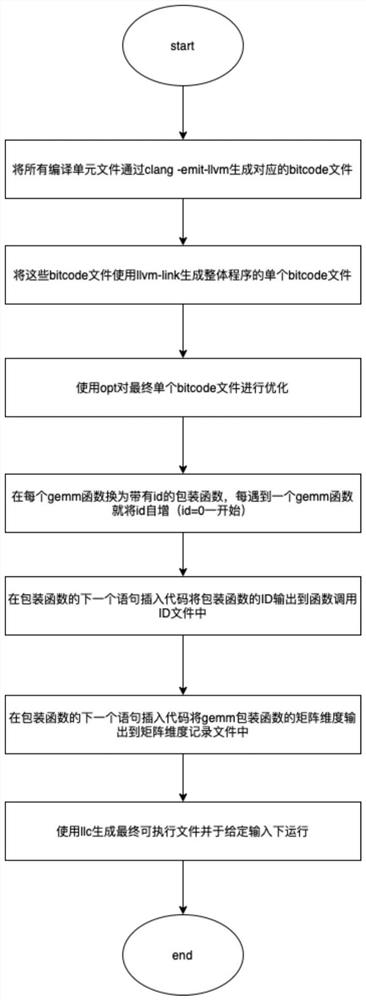

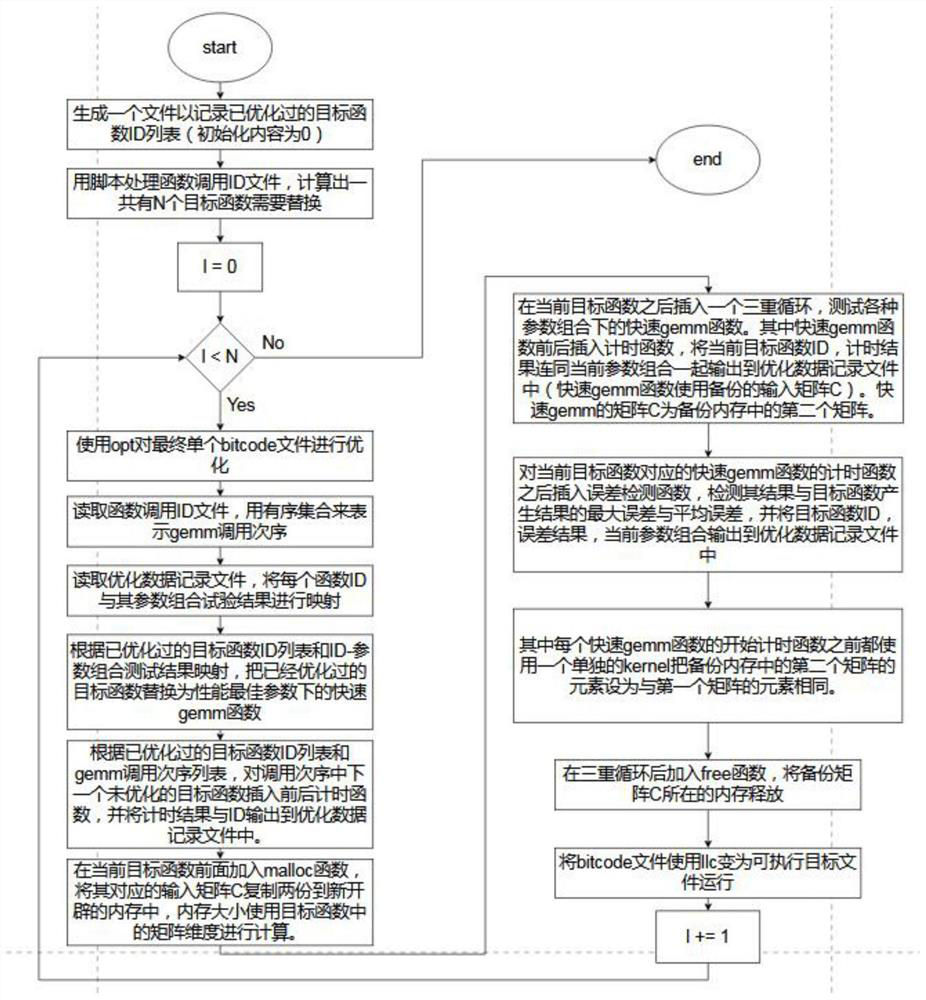

Automatic optimization method for error-controllable mixed-precision operators

Status: Pending | Publication No.: CN114217817A | Tags: Full use of mixed-precision computing capabilities; Improve the efficiency of multiplication operations; Code refactoring; Complex mathematical operations; General matrix; Algorithm

The invention discloses an automatic optimization method for error-controllable mixed-precision operators, comprising three optimization stages. First, for a given input, the general matrix multiplication (GEMM) functions are optimized by an ID (identity) conversion method, and their call sequence and corresponding dimensions are recorded. Second, using the resulting list of optimized GEMM function IDs and the GEMM call-sequence list, the input of each non-optimized GEMM call is passed to preset fast matrix multiplication functions with different parameter combinations, whose performance is timed and whose error is calculated. Third, the collected data are analyzed, single-precision matrix multiplications are converted into mixed-precision matrix multiplication operators, and optimized code is output. The method helps mixed-precision GEMM operators improve performance in more complex and broader high-performance computing programs, and can be widely applied in the compiler field.

Owner:SUN YAT SEN UNIV
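
The error-controlled selection in the second and third stages can be sketched as follows: run each candidate kernel on the recorded input, measure its relative error against a higher-precision reference GEMM, and keep the cheapest candidate within tolerance. In this Python sketch reduced precision is emulated by rounding the inputs with `struct`, accumulation stays in double precision, and candidates are ranked by precision rather than by measured time (timing emulated kernels in Python would say nothing about real hardware). The candidate names, the tolerance and the Frobenius-norm error metric are assumptions; the ID conversion and code-generation steps are not modeled.

```python
import math
import struct


def to_fp16(x: float) -> float:
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_fp32(x: float) -> float:
    return struct.unpack("<f", struct.pack("<f", x))[0]


def gemm(a, b, round_input=lambda v: v):
    """C = A @ B with inputs rounded by `round_input`; accumulation stays in double."""
    a_r = [[round_input(v) for v in row] for row in a]
    b_r = [[round_input(v) for v in row] for row in b]
    inner, cols = len(b_r), len(b_r[0])
    return [[sum(row[k] * b_r[k][j] for k in range(inner)) for j in range(cols)] for row in a_r]


def rel_error(c, ref) -> float:
    """Relative Frobenius-norm error of c against ref."""
    num = sum((x - y) ** 2 for rc, rr in zip(c, ref) for x, y in zip(rc, rr))
    den = sum(y ** 2 for rr in ref for y in rr)
    if den == 0.0:
        return 0.0 if num == 0.0 else math.inf
    return math.sqrt(num / den)


# Hypothetical candidate kernels, cheapest first.
CANDIDATES = [
    ("fp16-inputs", to_fp16),
    ("fp32-inputs", to_fp32),
    ("reference", lambda v: v),
]


def select_kernel(a, b, tol: float) -> str:
    """Return the cheapest candidate whose error against the reference is within tol."""
    ref = gemm(a, b)
    for name, rnd in CANDIDATES:
        if rel_error(gemm(a, b, rnd), ref) <= tol:
            return name
    return CANDIDATES[-1][0]
```

A loose tolerance lets the half-precision kernel through, while a zero tolerance falls back to the reference kernel, which is the knob that makes the conversion error-controllable.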

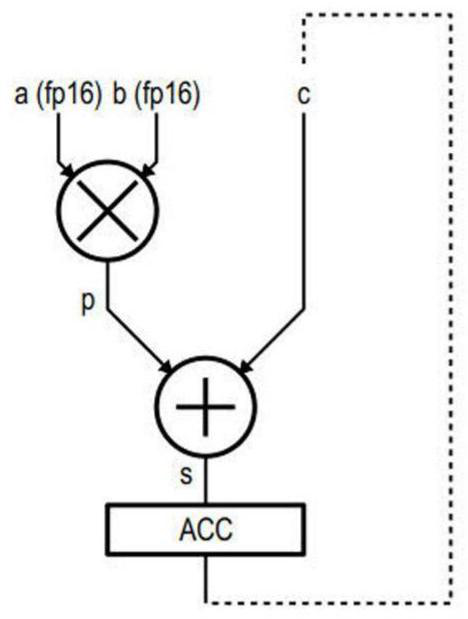

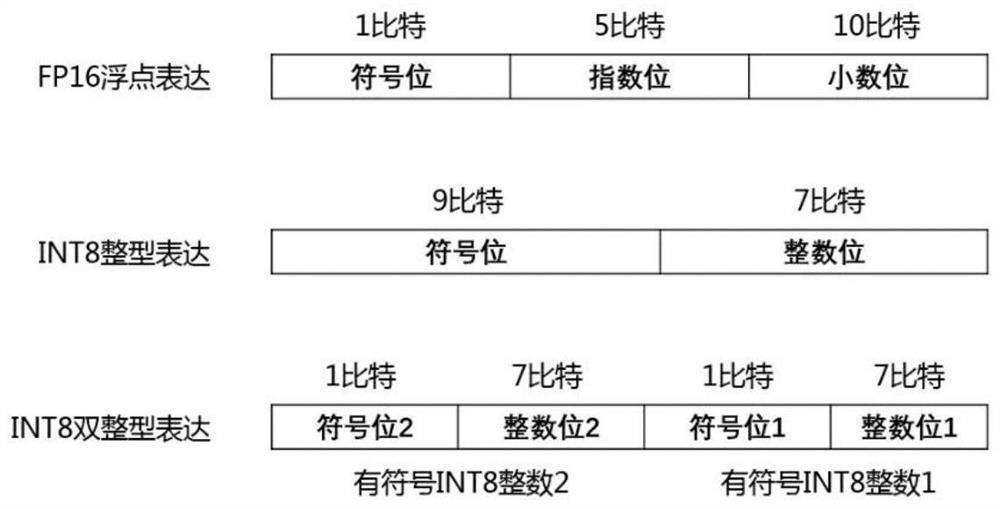

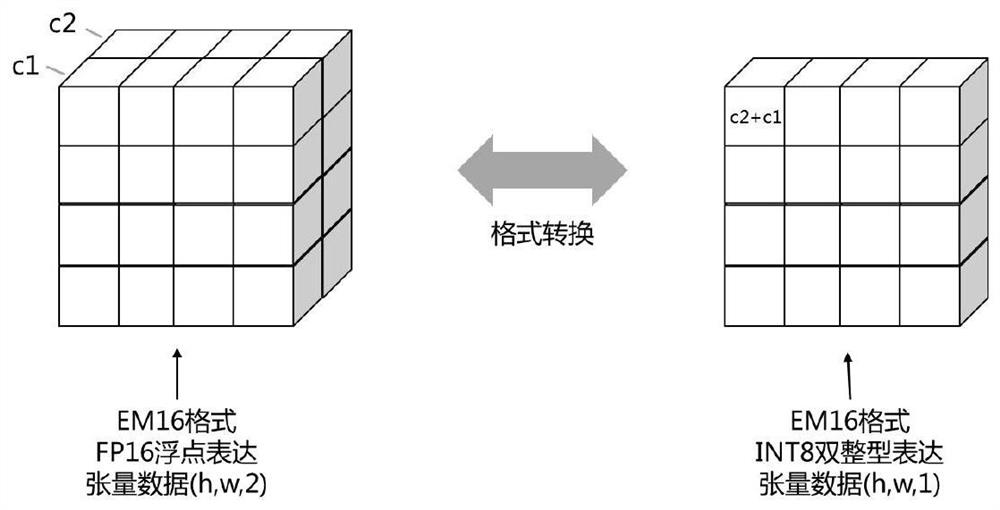

Hybrid precision arithmetic unit for FP16 floating point data and INT8 integer data operation

Status: Active | Publication No.: CN112860218A | Tags: Accuracy owns; Good arithmetic; Digital data processing details; Energy efficient computing; Algorithm; Computation process

The invention discloses a hybrid-precision arithmetic unit for operations on FP16 floating-point data and INT8 integer data. The unit comprises a precision conversion module and an arithmetic unit, with two input data and one, two or four output data. Input and output data are expressed in an EM16 format, a 16-bit representation that covers FP16 floating-point, INT8 integer and INT8 double-integer expressions. The two inputs are, respectively, feature data and parameter data in neural network computation. The precision conversion module converts the feature data between the EM16 expressions according to external configuration information, and the arithmetic unit performs addition or multiplication between two data that are both FP16 or both INT8, also according to external configuration information. Because FP16 and INT8 data can be mixed during computation, the hybrid-precision calculation can combine the precision of FP16 with the speed of INT8 in neural network tasks.

Owner:厦门壹普智慧科技有限公司

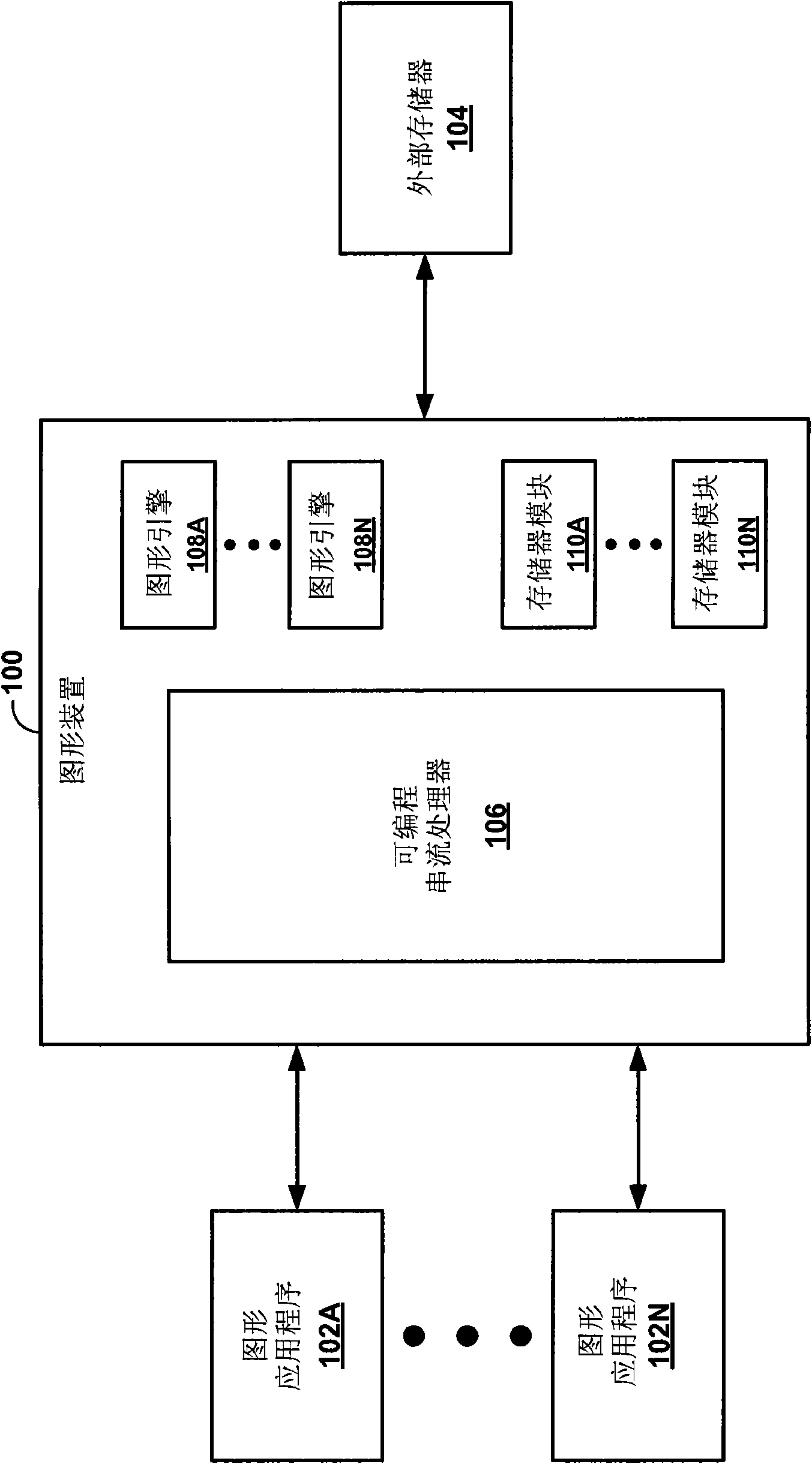

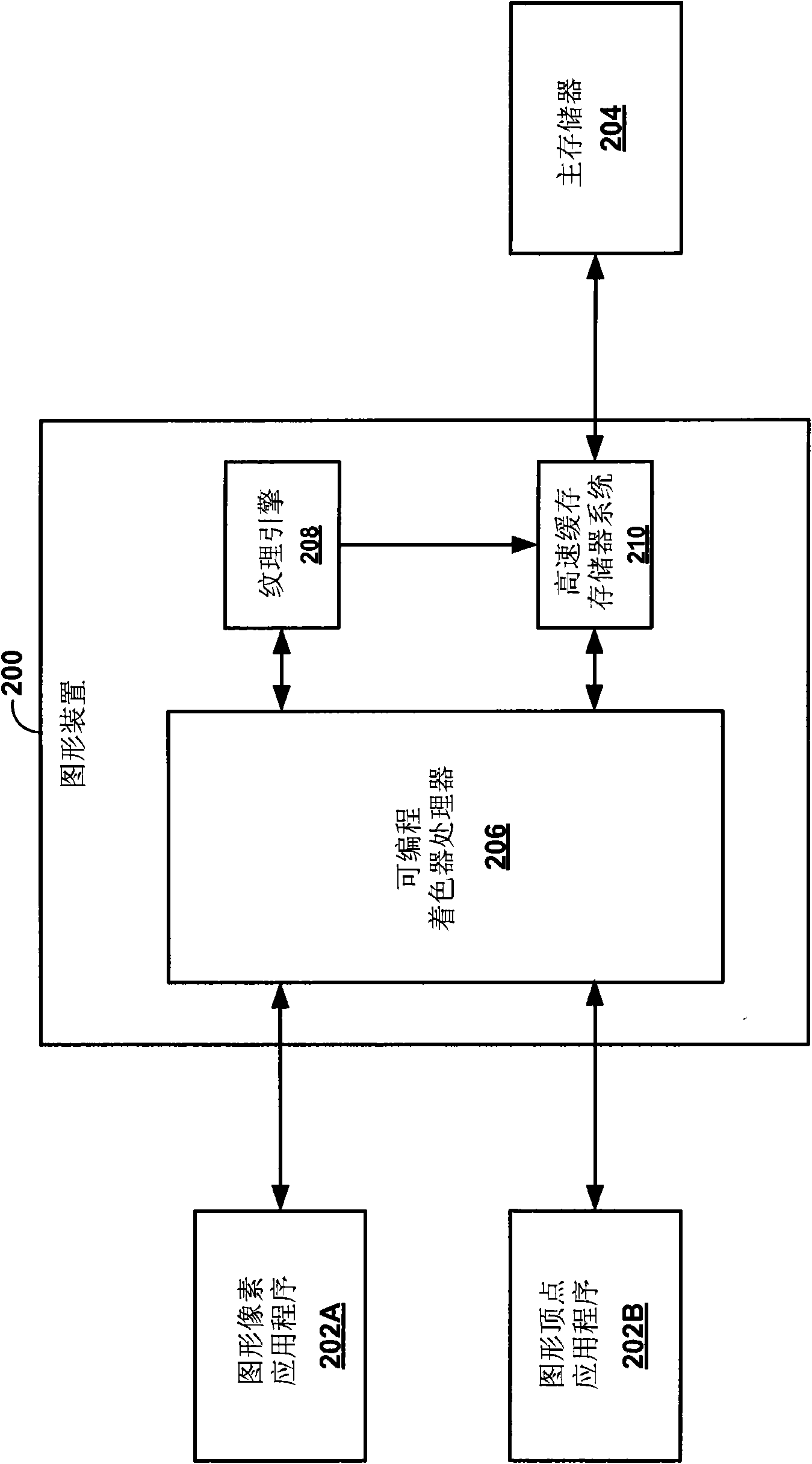

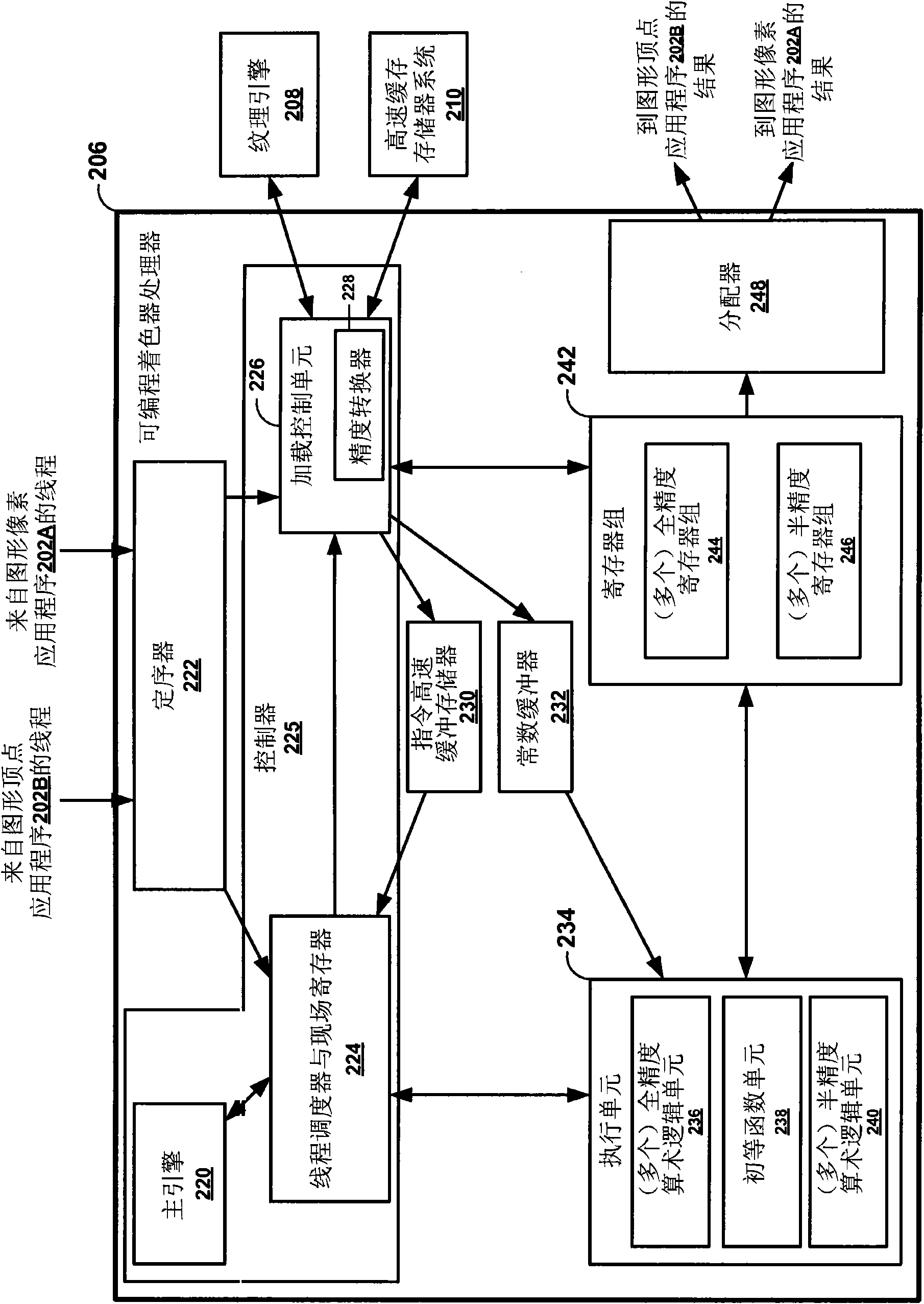

Programmable streaming processor with mixed precision instruction execution

Status: Active | Publication No.: CN102016926B | Tags: Increase flexibility; Reduce or eliminate precision gains; Program control; Memory systems; Parallel computing; Execution unit

The disclosure relates to a programmable streaming processor that is capable of executing mixed-precision (e.g., full-precision, half-precision) instructions using different execution units. The various execution units are each capable of using graphics data to execute instructions at a particular precision level. An exemplary programmable shader processor includes a controller and multiple execution units. The controller is configured to receive an instruction for execution and to receive an indication of a data precision for execution of the instruction. The controller is also configured to receive a separate conversion instruction that, when executed, converts graphics data associated with the instruction to the indicated data precision. When operable, the controller selects one of the execution units based on the indicated data precision. The controller then causes the selected execution unit to execute the instruction with the indicated data precision using the graphics data associated with the instruction.

Owner:QUALCOMM INC

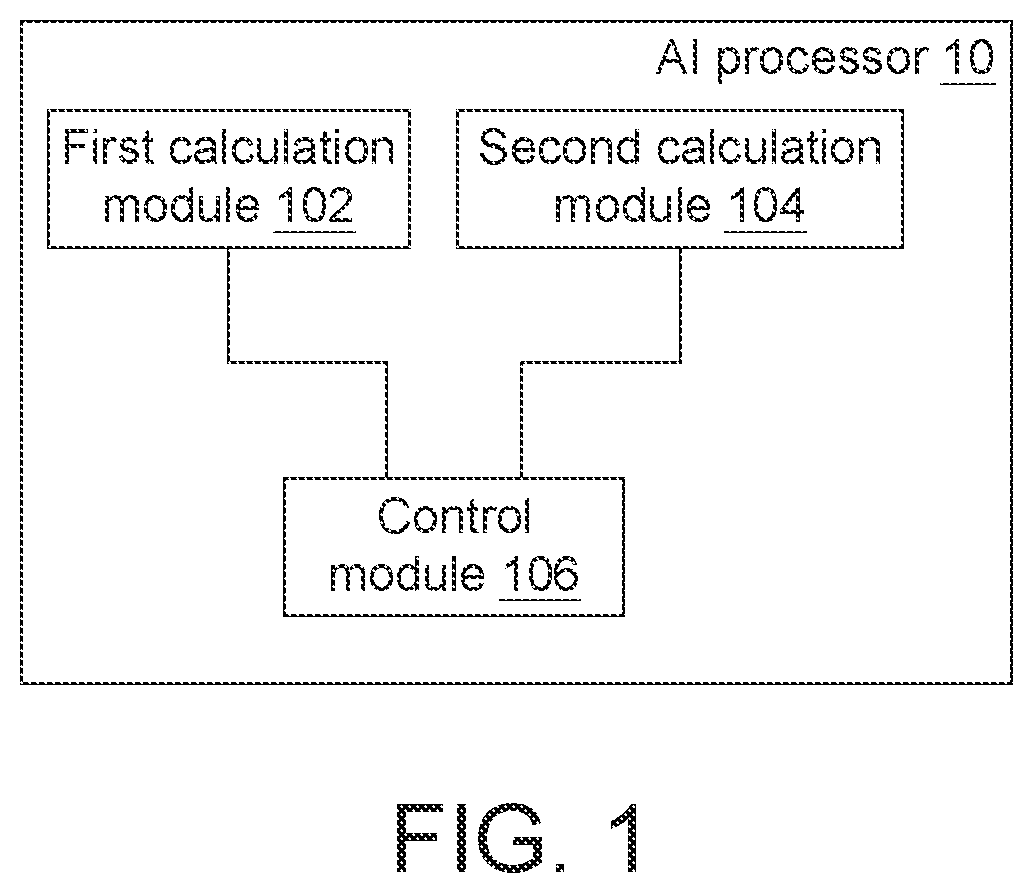

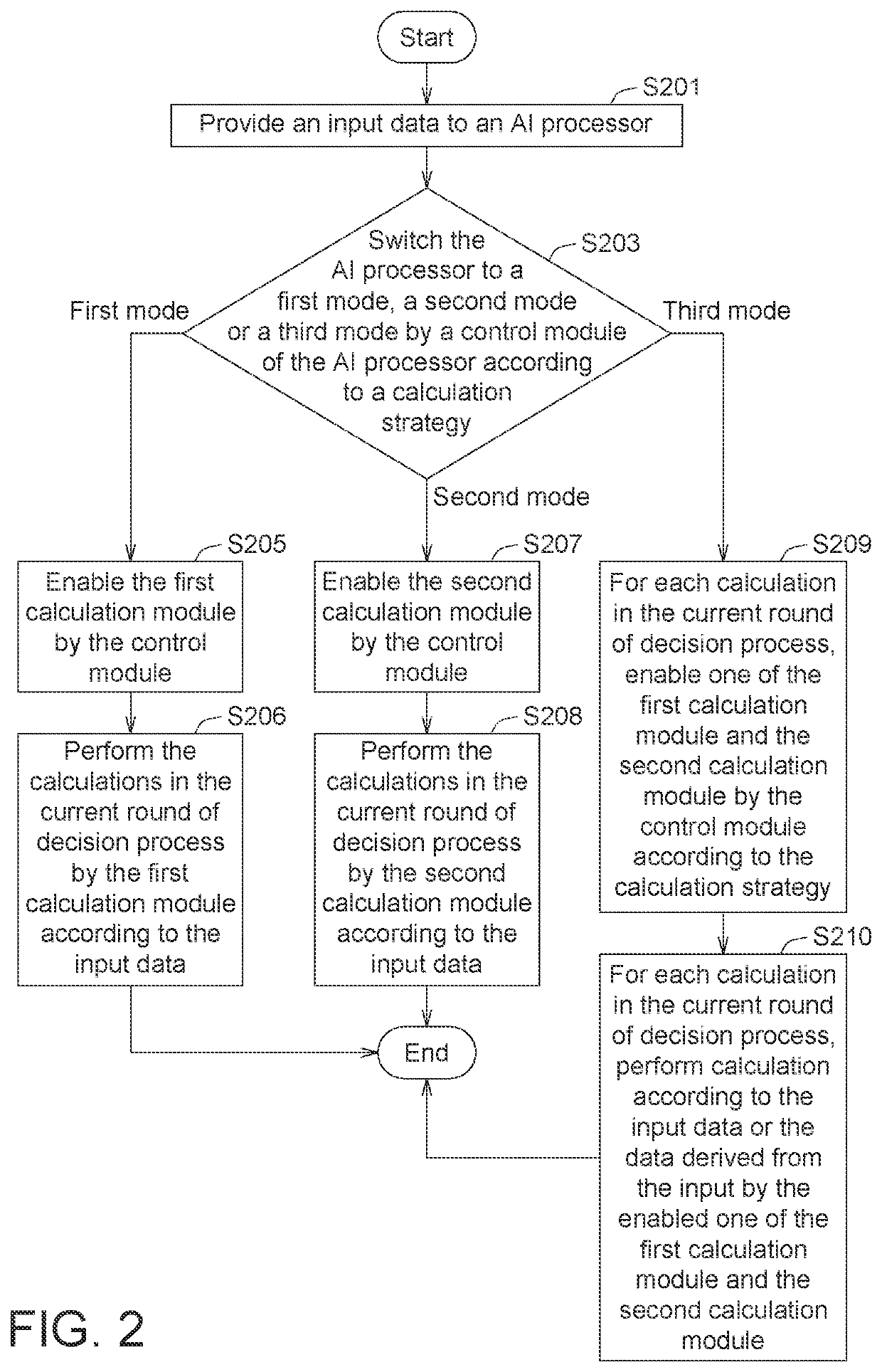

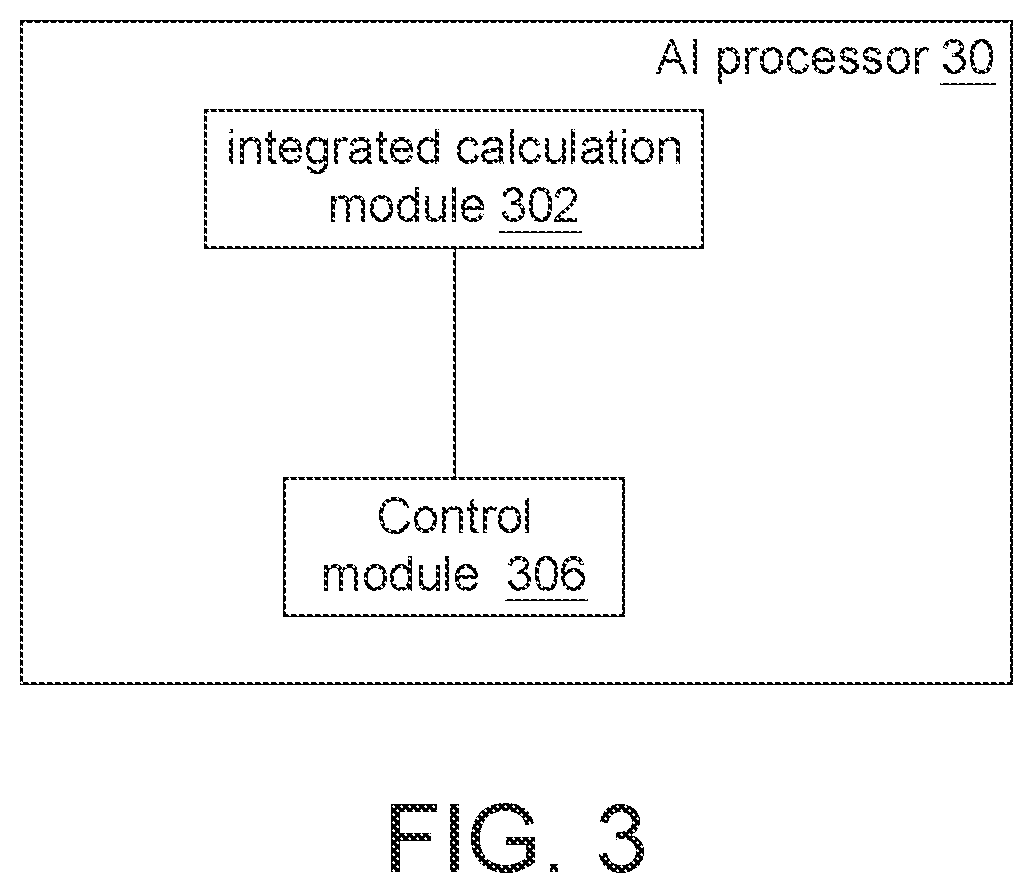

Mixed-precision AI processor and operating method thereof

A mixed-precision artificial intelligence (AI) processor and an operating method thereof are provided. The AI processor includes a first calculation module, a second calculation module and a control module. The first calculation module is configured to perform calculation based on the data with a first format. The second calculation module is configured to perform calculation based on the data with a second format different from the first format. The control module is coupled to the first calculation module and the second calculation module to select one of the first calculation module or the second calculation module to perform calculation based on an input data according to a calculation strategy.

Owner:BEIJING JINGSHI INTELLIGENT TECH CO LTD

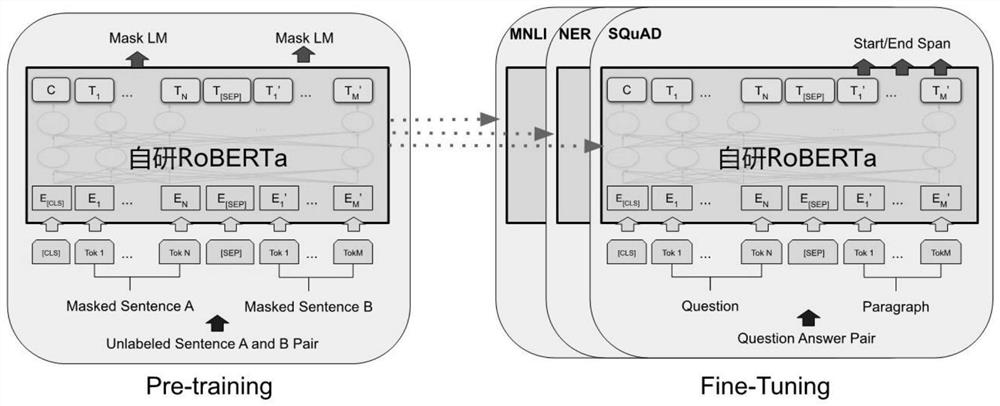

Automatic analysis and assessment method for the sentiment tendency of Internet information

Status: Pending | Publication No.: CN113535899A | Tags: Improve generalization ability; Improve accuracy; Semantic analysis; Text database querying; The Internet; Human–computer interaction

The invention discloses a method for automatically analyzing and assessing the sentiment tendency of Internet information, in the technical field of language sentiment analysis. The method pre-trains a RoBERTa model on general corpora and fine-tunes it on downstream tasks; deep learning training uses mixed precision across multiple machines and multiple GPUs; and after hyperparameter search and training are complete, the model is deployed behind an interface to perform the automatic analysis and assessment. The method addresses problems of traditional public-opinion sentiment analysis: low accuracy, poor model generalization, and weak performance on complex Chinese contexts such as obscure or ambiguous expressions.

Owner:西安康奈网络科技有限公司

© 2025 PatSnap. All rights reserved.