Deep neural network accelerator based on hybrid precision storage

The invention relates to deep neural network and accelerator technology in the fields of calculating, counting, and computing. It addresses three problems: operating power consumption limits the deployment of neural network accelerators, the advantages of neural networks are not fully exploited, and system stability remains to be verified. It achieves compressed storage at reduced precision.


Detailed Description of the Embodiments

[0015] The present invention is further illustrated below with specific examples. These examples only illustrate the present invention and are not intended to limit its protection scope. After reading the present invention, those skilled in the art may make modifications in various equivalent forms, and all such modifications fall within the scope defined by the appended claims of this application.

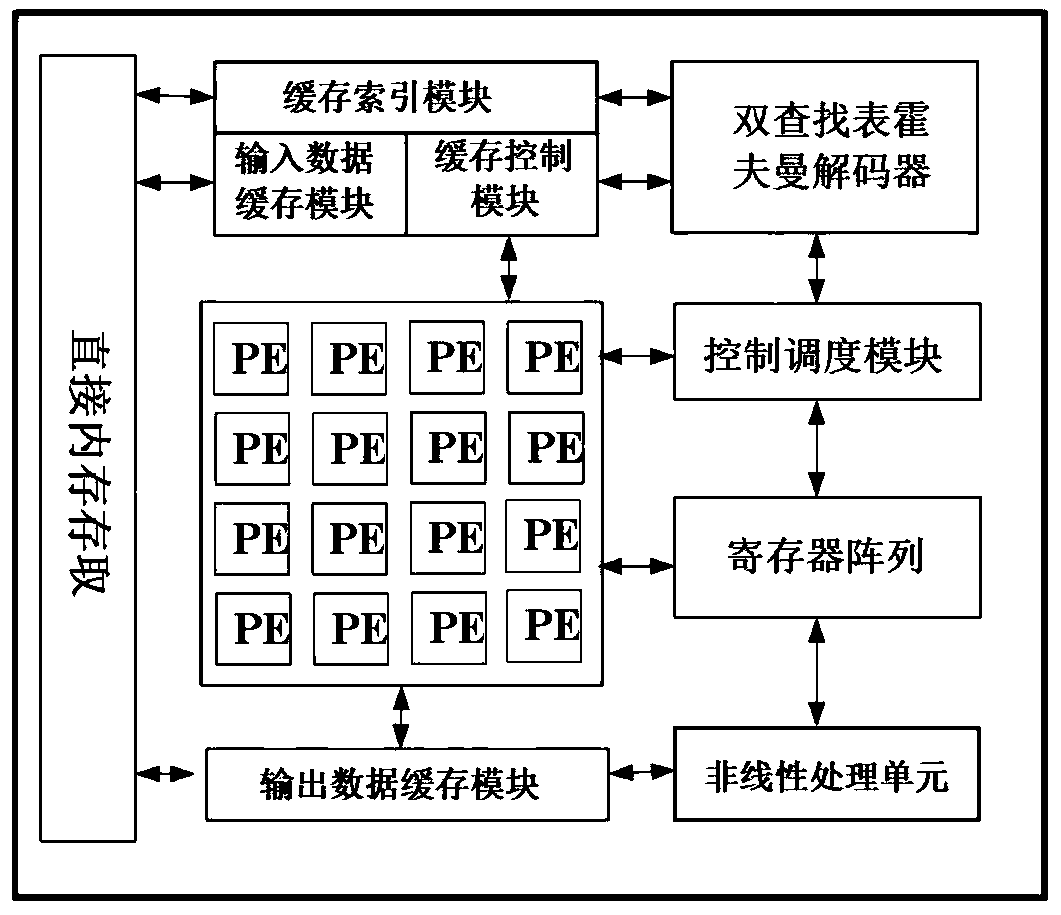

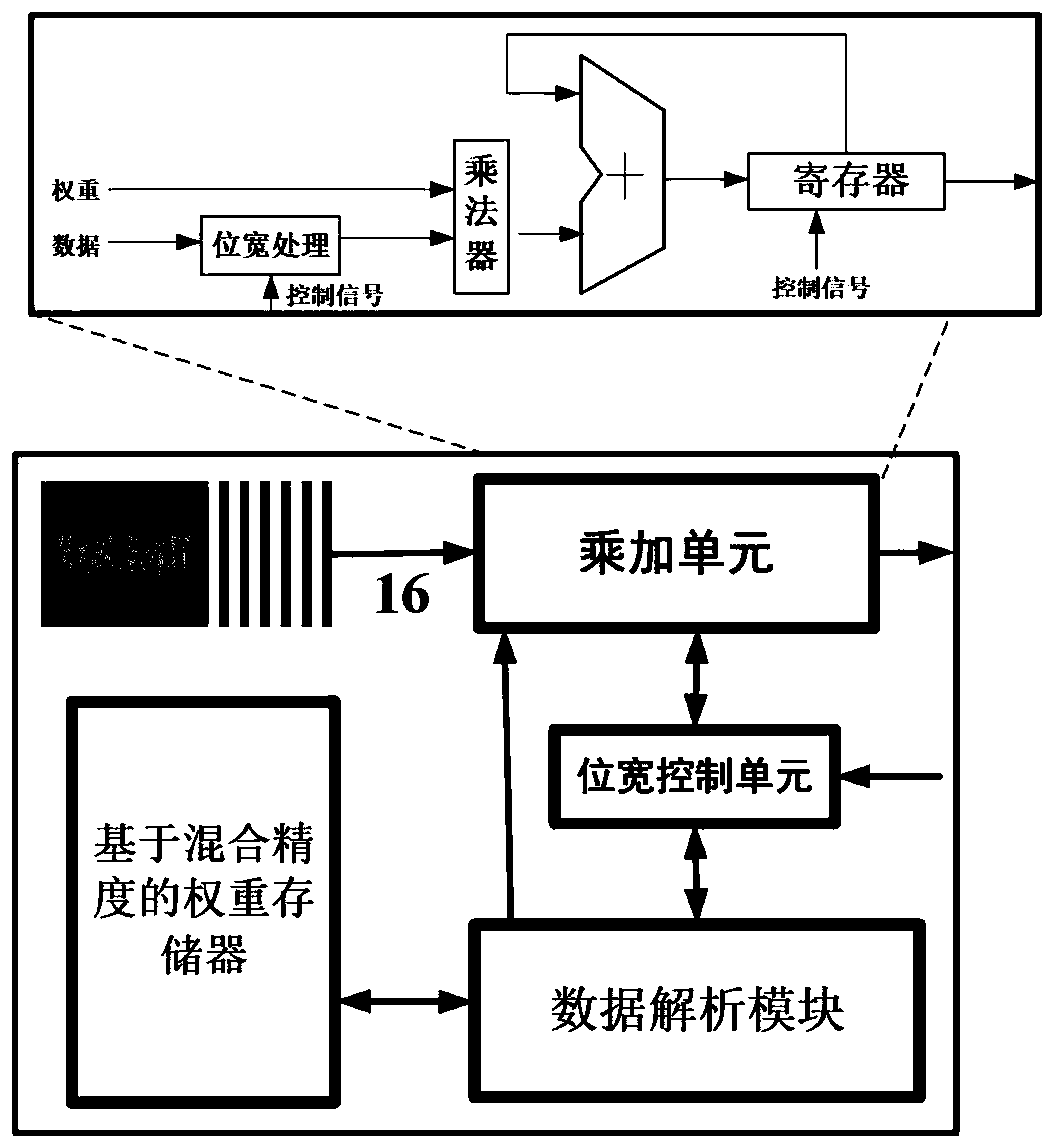

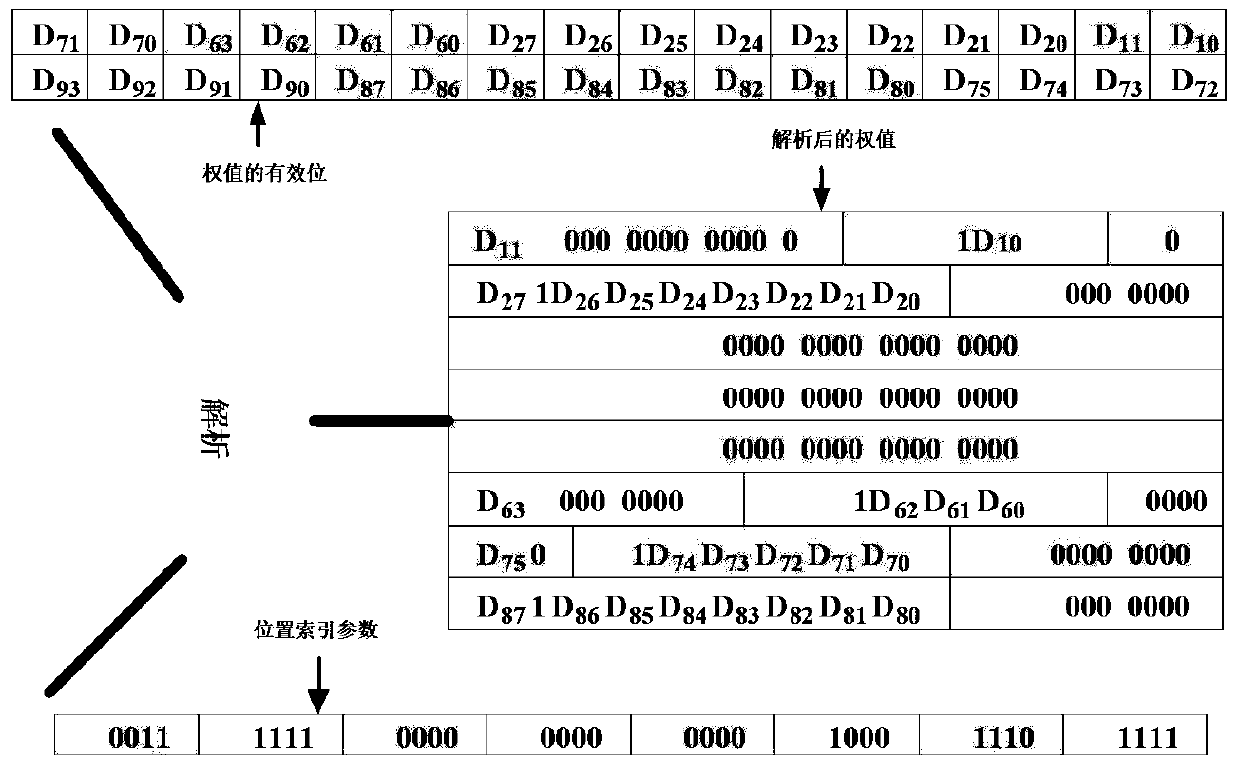

[0016] Figure 1 shows the overall architecture of the deep neural network accelerator based on mixed-precision storage in the present invention. In operation, the accelerator receives weights that have been trained and compressed offline. Under the control and scheduling of the control module, it decodes the weights of different precisions and performs the computations of the fully connected layer and the activation layer. The deep neural network accelerator based on mixed-precision storage includes 4 on-chip cache m...
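A minimal Python sketch can illustrate the flow in [0016]: decode weights of different precisions, compute a fully connected layer, then apply an activation. The excerpt does not specify the weight encoding, so the format here is entirely an assumption. Each stored weight carries a hypothetical one-bit precision flag. Low-precision weights are 4-bit two's-complement codes on a coarse step, and high-precision weights are 8-bit codes on a fine step. ReLU stands in for the unspecified activation function. The names `PackedWeight`, `decode`, `fully_connected`, `relu`, and `layer` are illustrative only and do not describe the actual hardware.

```python
from dataclasses import dataclass
from typing import List, Sequence

# Hypothetical storage formats (assumptions, not taken from the patent):
#   low precision : 4-bit two's-complement code, coarse step (shifted left by 4)
#   high precision: 8-bit two's-complement code, fine step (used as-is)
LOW_BITS, HIGH_BITS = 4, 8
LOW_SHIFT = HIGH_BITS - LOW_BITS  # aligns both formats to one integer scale


@dataclass(frozen=True)
class PackedWeight:
    high_precision: bool  # hypothetical per-weight precision flag
    code: int             # raw unsigned bit pattern read from the weight cache


def sign_extend(code: int, bits: int) -> int:
    """Interpret the low `bits` bits of `code` as a two's-complement integer."""
    code &= (1 << bits) - 1
    sign = 1 << (bits - 1)
    return code - (1 << bits) if code & sign else code


def decode(w: PackedWeight) -> int:
    """Decode one stored weight into the common integer domain."""
    if w.high_precision:
        return sign_extend(w.code, HIGH_BITS)
    return sign_extend(w.code, LOW_BITS) << LOW_SHIFT


def fully_connected(weights: Sequence[Sequence[PackedWeight]],
                    x: Sequence[int], bias: Sequence[int]) -> List[int]:
    """y[j] = sum_i decode(W[j][i]) * x[i] + bias[j], in integer arithmetic."""
    return [sum(decode(w) * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]


def relu(v: Sequence[int]) -> List[int]:
    """Stand-in activation function (ReLU is an assumption)."""
    return [max(0, a) for a in v]


def layer(weights: Sequence[Sequence[PackedWeight]],
          x: Sequence[int], bias: Sequence[int]) -> List[int]:
    """Decode -> fully connected -> activation."""
    return relu(fully_connected(weights, x, bias))
```

For example, take a 2×2 weight matrix with rows `[PackedWeight(False, 0b0011), PackedWeight(True, 0xF6)]` and `[PackedWeight(False, 0b1110), PackedWeight(True, 0x05)]`, with input `[1, 2]` and zero bias. The weights decode to `48, -10` and `-32, 5`. The fully connected outputs are `[28, -22]`, and the layer returns `[28, 0]` after ReLU. Decoding every format into one shared integer scale lets a single multiply-accumulate datapath serve all precisions. The real accelerator may organize this differently.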

