Convolutional network 3D model reconstruction method based on a multi-view cost volume

A convolutional-network technique for 3D model reconstruction based on a multi-view cost volume. It addresses two problems, unsatisfactory reconstruction results and susceptibility to the influence of multiple modes, with the aim of improving the rationality of the reconstruction and keeping the result unaffected by irrelevant factors.

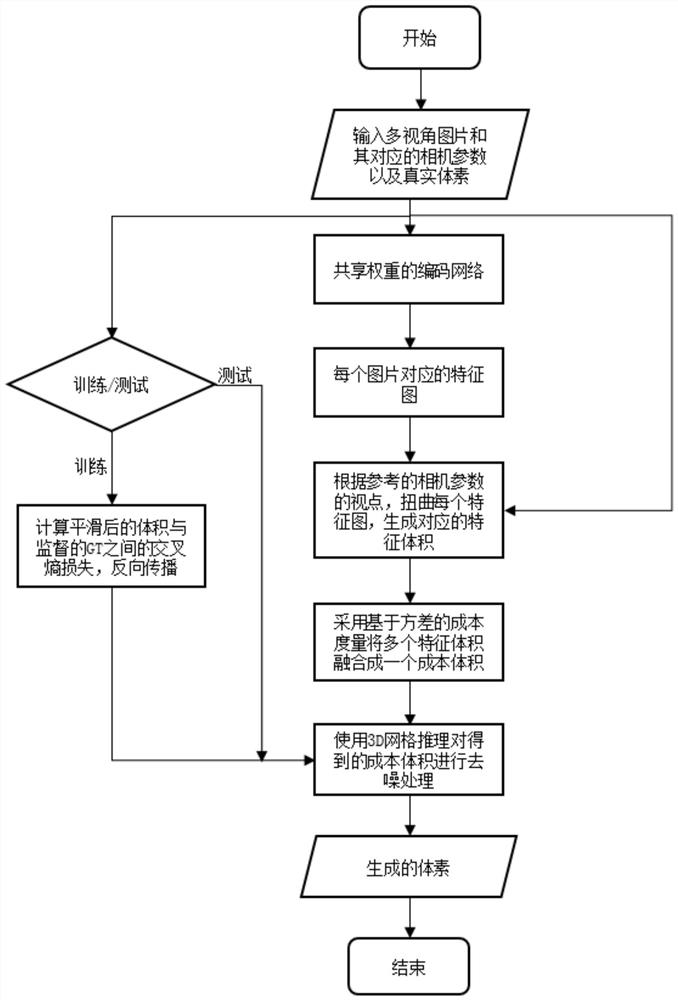

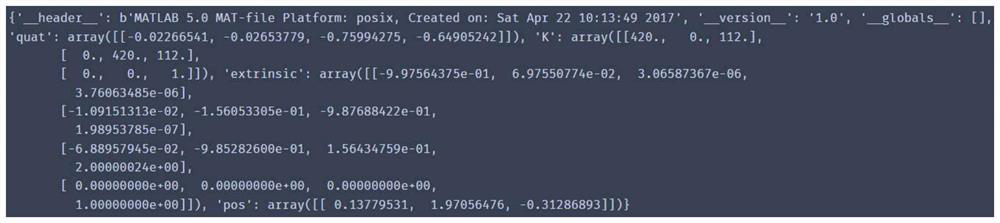

[0081] The task addressed by the present invention is illustrated in Figures 2 through 6: Figure 2 shows the input multi-view images, Figure 3 the camera parameters, Figure 4 the quantized voxel representation used for supervision, Figure 5 a visualization of the supervising voxels, and Figure 6 the voxel result reconstructed by the network. The structure of the whole method is shown in Figure 7. Each step of the present invention is described below by example.

[0082] In step (1), feature maps are extracted from the input multi-view images by a weight-sharing encoder network. Taking three views as an example, the step is divided into the following sub-steps:

[0083] Step (1.1): the input dataset (taken from 13 categories of the ShapeNet dataset) contains 20 rendered images per sample (in .png format, and the width, height a...
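
The idea of weight sharing in step (1) can be illustrated with a minimal sketch. It is not the encoder described in the patent, whose layers are not given in this excerpt. It assumes a single 3x3 kernel in place of a full encoder, grayscale toy images, and hypothetical names (`conv2d_valid`, `encode_views`, `shared_kernel`). The point it shows is only that every view passes through the same weights, so each view's feature map is computed in the same way regardless of its position in the input list.

```python
from typing import List

Image = List[List[float]]  # one grayscale view, stored as rows of pixel values


def conv2d_valid(image: Image, kernel: Image) -> Image:
    """Valid (no padding) 2D cross-correlation of one image with one kernel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu(feature_map: Image) -> Image:
    """Element-wise max(0, x)."""
    return [[max(0.0, v) for v in row] for row in feature_map]


def encode_views(views: List[Image], shared_kernel: Image) -> List[Image]:
    """Apply the SAME kernel (the shared weights) to every view independently."""
    return [relu(conv2d_valid(view, shared_kernel)) for view in views]


# Hypothetical toy data: three 4x4 "views" and one 3x3 kernel of ones.
views = [
    [[float(r + c + v) for c in range(4)] for r in range(4)]
    for v in range(3)
]
shared_kernel = [[1.0, 1.0, 1.0] for _ in range(3)]

feature_maps = encode_views(views, shared_kernel)
for index, fmap in enumerate(feature_maps):
    print(f"view {index}: {fmap}")
```

Because the kernel is shared, reordering the input views only reorders the output feature maps; no view receives special treatment from the encoder.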
