Face recognition image fusion method

An image fusion and face recognition technology, applied in the field of face recognition image fusion, that addresses the problems of low recognition efficiency and too few usable facial features. Its claimed effects are low time cost, a small amount of calculation, and robustness to external interference.

Examples

Embodiment 1

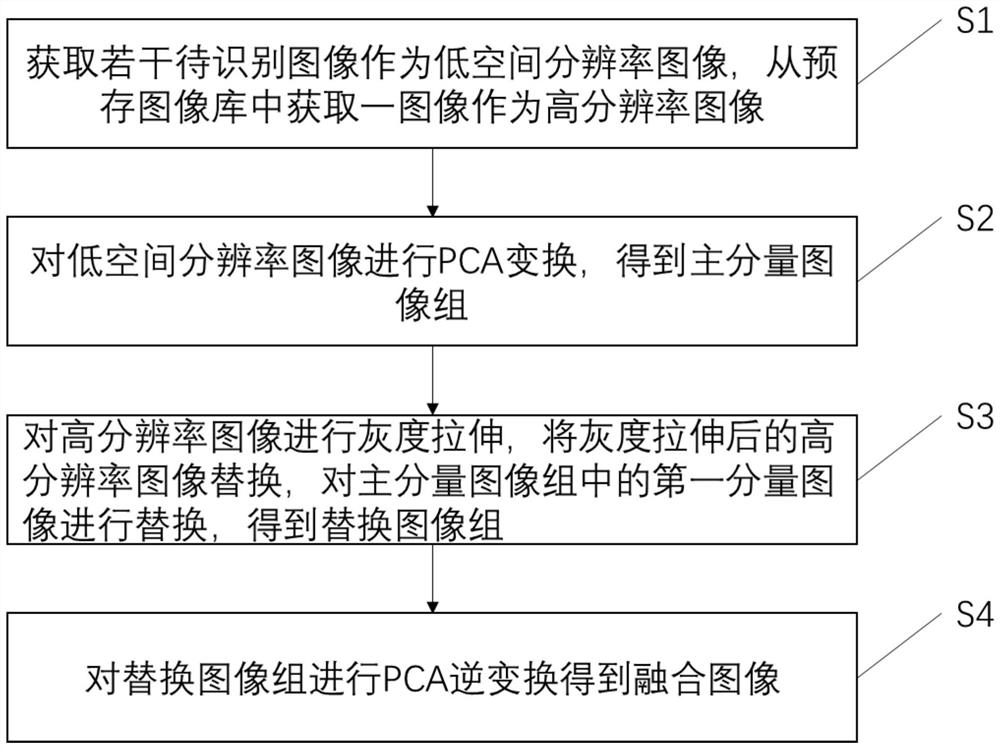

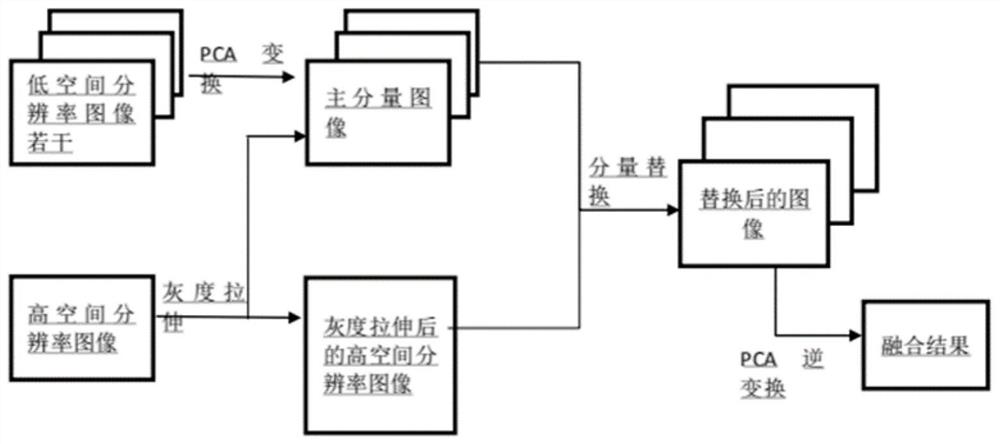

[0042] Referring to Figure 1 and Figure 2, this embodiment provides a face recognition image fusion method that includes the following steps:

[0043] First, in step S1, several images to be recognized are acquired as low spatial resolution images, and one image is acquired from a pre-stored image library as the high-resolution image.

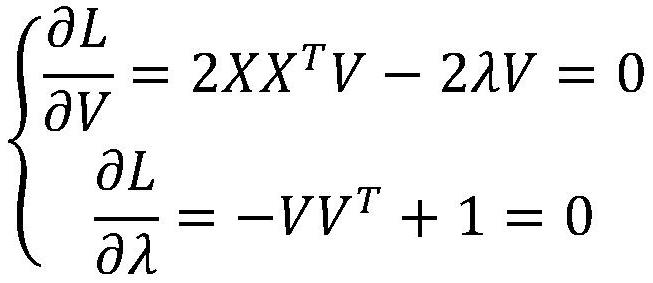

[0044] Then, in step S2, a PCA transformation is performed on the low spatial resolution image to obtain a principal component image group. Specifically, the RGB values of the low spatial resolution image are read and a corresponding three-dimensional column vector is constructed for each pixel. The vector is indexed by pixel position and holds the RGB values at that position, as shown in the following formula: [low_R(i,j), low_G(i,j), low_B(i,j)]. Here i, j are the pixel coordinates in the low spatial resolution image, and low_R, low_G, and low_B are the red, green, and blue channel values. The three-dimensional column vector of each pixel is added and...
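The forward PCA transform of step S2 can be sketched in Python with NumPy. The source paragraph is truncated, so the mean-centering, covariance, and eigendecomposition steps below follow the standard PCA recipe and are an assumption rather than the patent's confirmed procedure. The function name `pca_forward` and the random test image are hypothetical.

```python
import numpy as np

def pca_forward(image):
    """Project an H x W x 3 RGB image onto its principal components.

    Returns the component images (H x W x 3, ordered by decreasing
    variance), the 3 x 3 eigenvector matrix, and the per-channel mean.
    Assumption: standard PCA on the per-pixel RGB vectors.
    """
    h, w, c = image.shape
    # One row per pixel: [low_R(i,j), low_G(i,j), low_B(i,j)]
    pixels = image.reshape(-1, c).astype(np.float64)
    mean = pixels.mean(axis=0)
    centered = pixels - mean
    # 3 x 3 covariance matrix of the colour channels
    cov = centered.T @ centered / (pixels.shape[0] - 1)
    # eigh returns eigenvalues in ascending order, so reorder to descending
    eigvals, eigvecs = np.linalg.eigh(cov)
    eigvecs = eigvecs[:, np.argsort(eigvals)[::-1]]
    components = centered @ eigvecs
    return components.reshape(h, w, c), eigvecs, mean

# Hypothetical stand-in for a low spatial resolution image
rng = np.random.default_rng(0)
low_res = rng.integers(0, 256, size=(4, 5, 3))
components, eigvecs, mean = pca_forward(low_res)
```

Keeping `eigvecs` and `mean` alongside the components makes the transform invertible, which matters for any later step that maps a modified component group back to RGB.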

Embodiment 2

[0081] Based on the same inventive concept as Embodiment 1, this embodiment also provides a computer-readable storage medium on which a computer program is stored. When the computer program is executed by a processor, it implements the face recognition image fusion method of Embodiment 1.

[0082] The computer-readable storage medium in this embodiment stores a computer program that can be executed by a processor. When executed, the program first acquires several images to be recognized as low spatial resolution images and acquires one image from a pre-stored image library as the high-resolution image. Next, it performs a PCA transformation on the low spatial resolution image to obtain a principal component image group. It then performs grayscale stretching on the high-resolution image and replaces the first component image in the principal component image group with the grayscale-stretched high-resolution image to obtain a replacement image group. Finally, per...

Embodiment 3

[0085] Based on the same inventive concept as Embodiment 1, this embodiment also provides a computer device, including a memory, a processor, and a computer program stored in the memory and called by the processor. When the processor executes the computer program, it implements the face recognition image fusion method of Embodiment 1.

[0086] While executing the face recognition image fusion method, the processor of the computer device in this embodiment first acquires several images to be recognized as low spatial resolution images and acquires one image from a pre-stored image library as the high-resolution image. Next, it performs a PCA transformation on the low spatial resolution image to obtain a principal component image group. It then performs grayscale stretching on the high-resolution image and replaces the first component image in the principal component image group with the grayscale-stretched high-resolution image to obtain a replacement image group. Finally, perform invers...
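The processing sequence described above can be sketched end to end in Python with NumPy. Several points are assumptions, because the source text is truncated and does not give the details: the final step is taken to be an inverse PCA transform, grayscale stretching is implemented as matching the mean and standard deviation of the first principal component (a common choice in PCA-based fusion), and the high-resolution image is assumed to be single-channel and already resampled to the same pixel grid as the low spatial resolution image. The names `stretch_to` and `fuse` are hypothetical.

```python
import numpy as np

def stretch_to(band, target):
    """Linearly stretch `band` so its mean and std match `target`.

    Assumption: the source does not specify the stretching formula.
    """
    band = band.astype(np.float64)
    std = band.std()
    if std == 0:
        # A constant band cannot be stretched; return the target mean
        return np.full_like(band, target.mean())
    return (band - band.mean()) / std * target.std() + target.mean()

def fuse(low_rgb, high_gray):
    """PCA fusion sketch: swap the first component, then invert."""
    h, w, c = low_rgb.shape
    pixels = low_rgb.reshape(-1, c).astype(np.float64)
    mean = pixels.mean(axis=0)
    centered = pixels - mean
    # Forward PCA: eigenvectors ordered by decreasing variance
    eigvals, eigvecs = np.linalg.eigh(np.cov(centered, rowvar=False))
    eigvecs = eigvecs[:, np.argsort(eigvals)[::-1]]
    comps = centered @ eigvecs
    # Replace the first component with the stretched high-resolution image
    comps[:, 0] = stretch_to(high_gray.reshape(-1), comps[:, 0])
    # Inverse PCA (assumed final step): back to RGB space
    fused = comps @ eigvecs.T + mean
    return fused.reshape(h, w, c)

# Hypothetical inputs of matching size
rng = np.random.default_rng(1)
low = rng.integers(0, 256, size=(6, 7, 3))
high = rng.integers(0, 256, size=(6, 7))
fused = fuse(low, high)
```

The result is a floating-point array; clipping to the 0 to 255 range and casting to an integer type would be needed before saving it as an ordinary image.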
