Multi-core neural network processor and multi-task allocation and scheduling method for the processor

A neural network and scheduling technology, applied in the fields of multi-core neural network processors and multi-task allocation and scheduling. It addresses the low utilization of system resources and improves both resource utilization and performance.



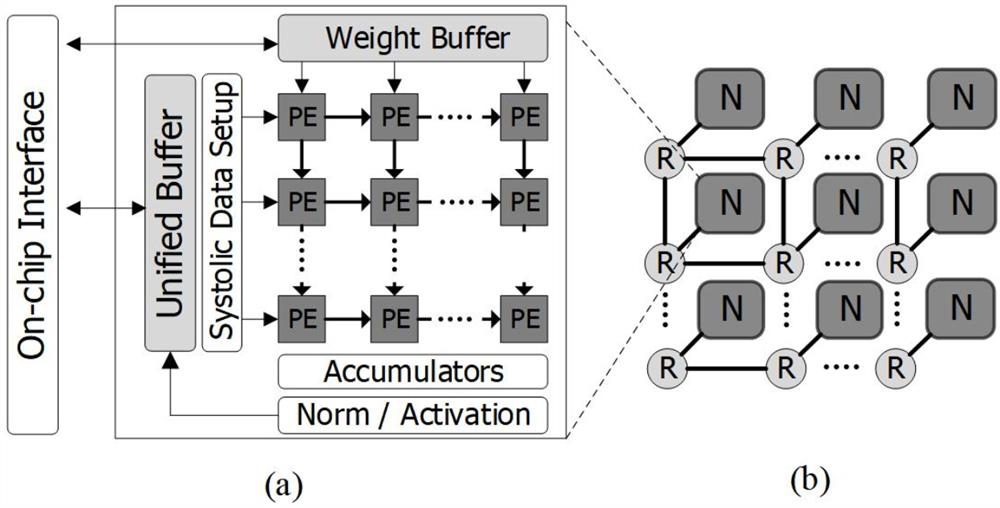


Examples

Embodiment 1

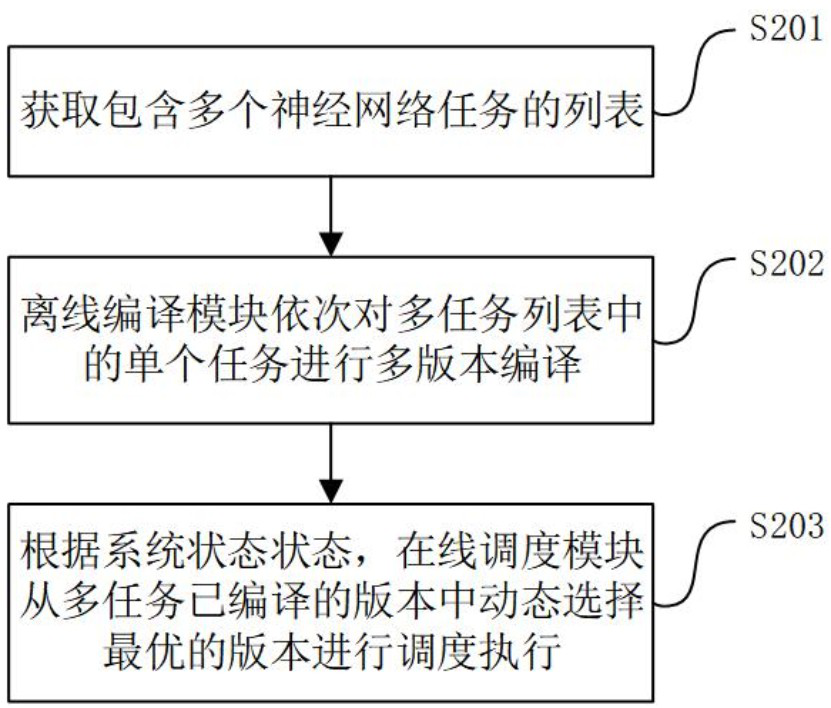

[0027] The multi-task allocation and scheduling method for the multi-core neural network processor is characterized by comprising the following steps:

[0028] Figure 2 shows the overall framework of the invention.

[0029] Step 1: Obtain a list of multiple neural network tasks;

[0030] Obtain information about the neural network tasks to be scheduled, including the model information of each task (the size of each layer and its computation type) and the deadline set for each task.
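As a rough illustration of the task information gathered in Step 1, the sketch below models each task as a list of layers (size and computation type) plus a deadline. The class and field names, the shape-based representation of "size information", and the millisecond deadline unit are all hypothetical; sorting by deadline is only a convenience for the example, since the source does not specify an order.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Layer:
    # Hypothetical per-layer record for the model information in Step 1.
    name: str
    op_type: str                    # computation type, e.g. "conv" or "fc"
    input_shape: Tuple[int, ...]    # size information (assumed to be tensor shapes)
    output_shape: Tuple[int, ...]


@dataclass
class NNTask:
    # Hypothetical task record: model information plus the task's deadline.
    name: str
    layers: List[Layer]
    deadline_ms: float              # ASSUMPTION: deadline expressed in milliseconds


def build_task_list(tasks: List[NNTask]) -> List[NNTask]:
    """Collect the tasks to be scheduled, ordered by deadline (illustrative choice only)."""
    return sorted(tasks, key=lambda t: t.deadline_ms)


resnet = NNTask("resnet", [Layer("conv1", "conv", (1, 3, 224, 224), (1, 64, 112, 112))], deadline_ms=33.0)
bert = NNTask("bert", [Layer("fc1", "fc", (1, 768), (1, 3072))], deadline_ms=20.0)
task_list = build_task_list([resnet, bert])
print([t.name for t in task_list])  # ['bert', 'resnet']
```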

[0031] Step 2: Use the offline compilation module to compile each task in the multi-task list in turn, obtaining multiple compiled versions of each task;
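A minimal sketch of Step 2 follows, assuming (this is not stated in the source) that the versions of a task differ in the number of processor cores they target. `compile_for_cores` is a hypothetical stand-in for the real compiler backend and only returns a placeholder program string.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence


@dataclass(frozen=True)
class CompiledVersion:
    task_name: str
    num_cores: int   # ASSUMPTION: versions are distinguished by targeted core count
    program: str     # placeholder for the compiled instruction stream


def compile_for_cores(task_name: str, num_cores: int) -> CompiledVersion:
    # Hypothetical stand-in for the offline compiler backend.
    return CompiledVersion(task_name, num_cores, f"{task_name}@{num_cores}cores")


def compile_task_list(task_names: Sequence[str],
                      core_options: Sequence[int]) -> Dict[str, List[CompiledVersion]]:
    """Compile each task in turn, producing one version per core option."""
    results: Dict[str, List[CompiledVersion]] = {}
    for name in task_names:
        results[name] = [compile_for_cores(name, n) for n in core_options]
    return results


versions = compile_task_list(["resnet", "bert"], core_options=[1, 2, 4])
print([v.num_cores for v in versions["bert"]])  # [1, 2, 4]
```

Keeping every version from the offline stage means a later scheduling step can pick among them without recompiling; how the actual method chooses a version is not visible in this excerpt.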

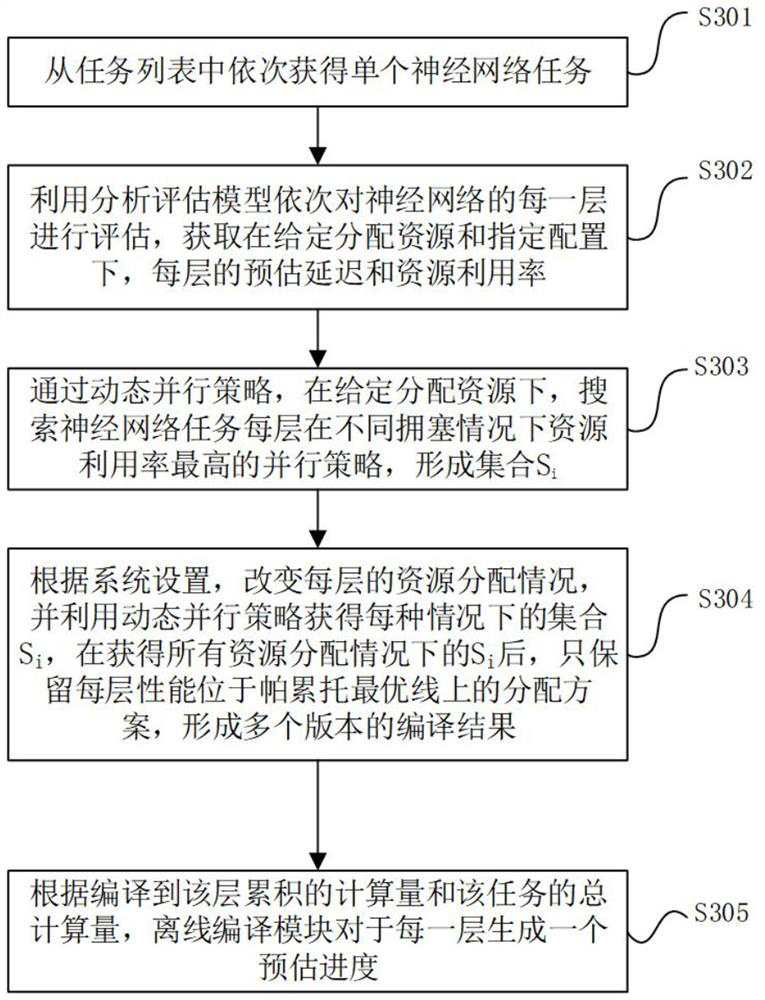

[0032] Figure 3 shows the flow chart of the offline compilation module.

[0033] In step S302, the offline compilation module uses the analysis and evaluation model to evaluate each layer of the neural network in turn, and obtains...
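The excerpt does not describe the analysis and evaluation model itself. To show what per-layer evaluation in turn can look like, the sketch below substitutes a simple roofline-style estimate: each layer is bounded by the slower of its compute time and memory-transfer time. This is a swapped-in technique, not the patent's model, and the hardware constants are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical single-core hardware parameters (not from the source).
PEAK_MACS_PER_S = 1e12
BANDWIDTH_BYTES_PER_S = 1e10


@dataclass
class LayerProfile:
    name: str
    macs: float         # multiply-accumulate operations in the layer
    bytes_moved: float  # input + weight + output bytes transferred


def estimate_layer_latency(layer: LayerProfile) -> Tuple[float, str]:
    """Roofline-style estimate: latency is the slower of compute and memory time."""
    compute_s = layer.macs / PEAK_MACS_PER_S
    memory_s = layer.bytes_moved / BANDWIDTH_BYTES_PER_S
    if compute_s >= memory_s:
        return compute_s, "compute"
    return memory_s, "memory"


def evaluate_layers(layers: List[LayerProfile]) -> List[Tuple[float, str]]:
    # Evaluate each layer in turn, in network order.
    return [estimate_layer_latency(layer) for layer in layers]


layers = [LayerProfile("conv1", macs=1.18e8, bytes_moved=1.0e6),
          LayerProfile("fc", macs=1.0e6, bytes_moved=4.0e6)]
results = evaluate_layers(layers)
```

Here `conv1` comes out compute-bound (about 1.18e-4 s) and `fc` memory-bound (about 4e-4 s), the kind of per-layer distinction an evaluation model can feed into compilation decisions.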

