Lip reading method based on multi-granularity spatiotemporal feature perception of event camera

The multi-granularity spatio-temporal feature technology, applied in the field of lip reading, addresses the low temporal resolution of video, redundant visual information, and high device power consumption. It achieves good recognition ability, improves accuracy, and avoids the loss of spatio-temporal information.


Embodiment Construction

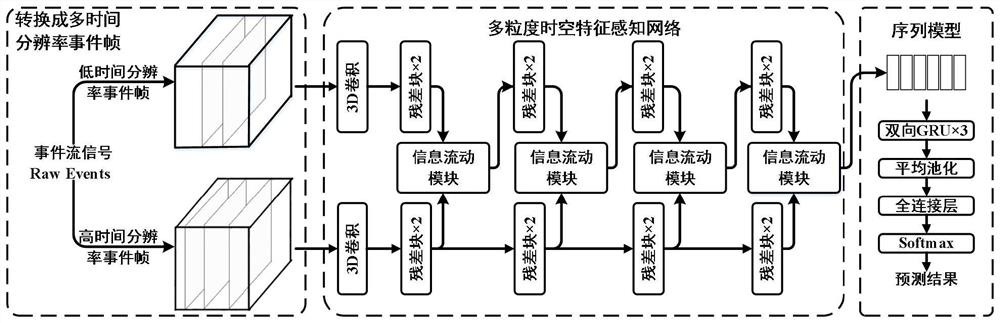

[0042] In this embodiment, the lip-reading method based on event-camera multi-granularity spatiotemporal feature perception proceeds as shown in Figure 1. Specifically, it follows the steps below:

[0043] Step 1. Event camera-based lip-reading data collection and preprocessing:

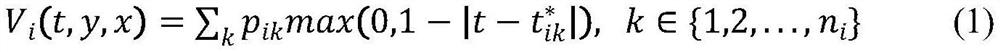

[0044] Volunteers were recruited and lip-reading data were collected using an event camera. The collected data were segmented into word-level samples, and the spatial extent of each sample was cropped to H×W, where H and W are the height and width, respectively. The event data contained in the i-th sample consists of the events $(x_{ik}, y_{ik}, t_{ik}, p_{ik})$ for $k = 1, \ldots, n_i$, where $x_{ik}$, $y_{ik}$, $t_{ik}$, and $p_{ik}$ respectively represent the abscissa, ordinate, generation timestamp, and polarity of the k-th event in the i-th sample, and $n_i$ is the total number of events contained in the i-th sample. The shooting of the i-th sample is repeated multiple times, and all the captured samples are recorded as the word set $w_i$, where $w_i \in \{1, 2, \ldots, m_v, \ldots, V\}$, V is the nu...
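The event representation and spatial cropping described in Step 1 can be illustrated with a minimal Python sketch. It is not the patent's implementation: the structured dtype `EVENT_DTYPE`, the helper `crop_events`, the window origin `(x0, y0)`, the 96×96 window size, and the toy event values are all hypothetical choices made for illustration only.

```python
import numpy as np

# Hypothetical layout for one event (x, y, t, p): column, row, timestamp, polarity.
EVENT_DTYPE = np.dtype(
    [("x", np.int32), ("y", np.int32), ("t", np.int64), ("p", np.int8)]
)


def crop_events(events, x0, y0, height, width):
    """Keep the events that fall inside an H x W window.

    The window's top-left corner is (x0, y0). Coordinates of the kept
    events are shifted so that the window starts at (0, 0).
    """
    inside = (
        (events["x"] >= x0)
        & (events["x"] < x0 + width)
        & (events["y"] >= y0)
        & (events["y"] < y0 + height)
    )
    cropped = events[inside].copy()
    cropped["x"] -= x0
    cropped["y"] -= y0
    return cropped


# Toy word-level sample with three events (values are made up).
sample = np.array(
    [(10, 20, 1000, 1), (50, 60, 1500, -1), (200, 5, 2000, 1)],
    dtype=EVENT_DTYPE,
)

# Assumed window: H = W = 96 with its corner at (8, 16).
cropped = crop_events(sample, x0=8, y0=16, height=96, width=96)
n_i = len(cropped)  # total number of events left in the cropped sample
```

A structured array keeps the four fields of each event together while still allowing vectorized filtering on the coordinates, which is why it is used here instead of a list of tuples.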

