Achieving Low Grace Period Latencies Despite Energy Efficiency

A grace period and energy efficiency technology, applied in the field of computer systems and methods, that can solve the problems of increasing grace period latencies, burdensome read-side lock acquisition, and sleeping with callbacks, and of being unable to take full advantage of subsequent grace periods.

Example Embodiments

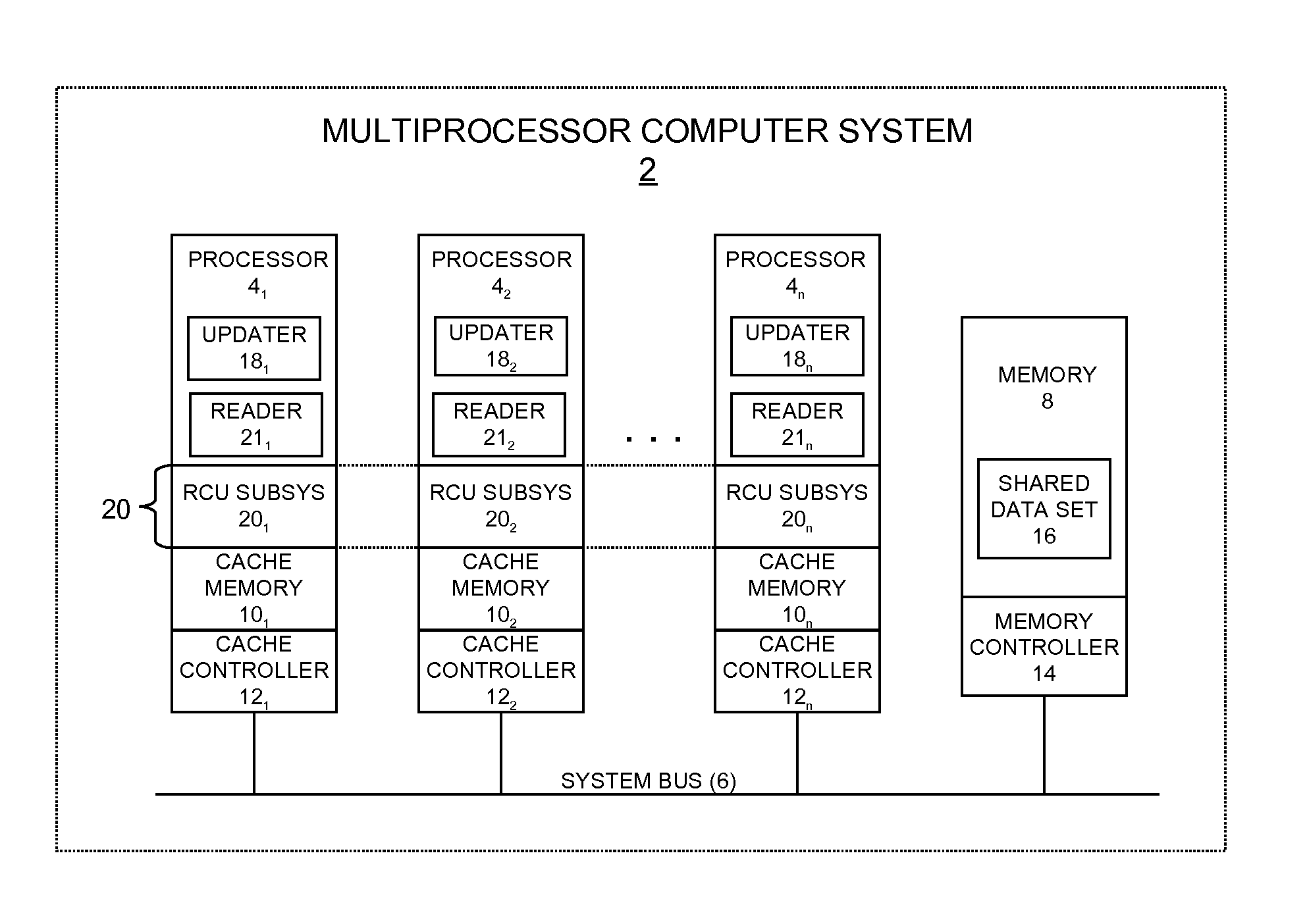

[0047] Turning now to the figures, wherein like reference numerals represent like elements in all of the several views, FIG. 4 illustrates an example multiprocessor computer system in which the grace period processing technique described herein may be implemented. In FIG. 4, a computer system 2 includes multiple processors 4₁, 4₂ . . . 4ₙ, a system bus 6, and a program memory 8. There are also cache memories 10₁, 10₂ . . . 10ₙ and cache controllers 12₁, 12₂ . . . 12ₙ respectively associated with the processors 4₁, 4₂ . . . 4ₙ. A conventional memory controller 14 is associated with the memory 8. As shown, the memory controller 14 may reside separately from the processors 4₁, 4₂ . . . 4ₙ (e.g., as part of a chipset). As discussed below, it could also comprise plural memory controller instances residing on the processors 4₁, 4₂ . . . 4ₙ.

[0048] The computer system 2 may represent any of several different types of computing apparatus. Such computing apparatus may include, but are not limited to, g...
