Depth map encoding method and apparatus thereof, and depth map decoding method and apparatus thereof

Depth map encoding and decoding technology, applied in the field of video data encoding and decoding, addresses the problem of a depth map frame decoding apparatus unnecessarily performing the operation of obtaining differential information, and achieves the effect of efficiently encoding and decoding a depth map image.

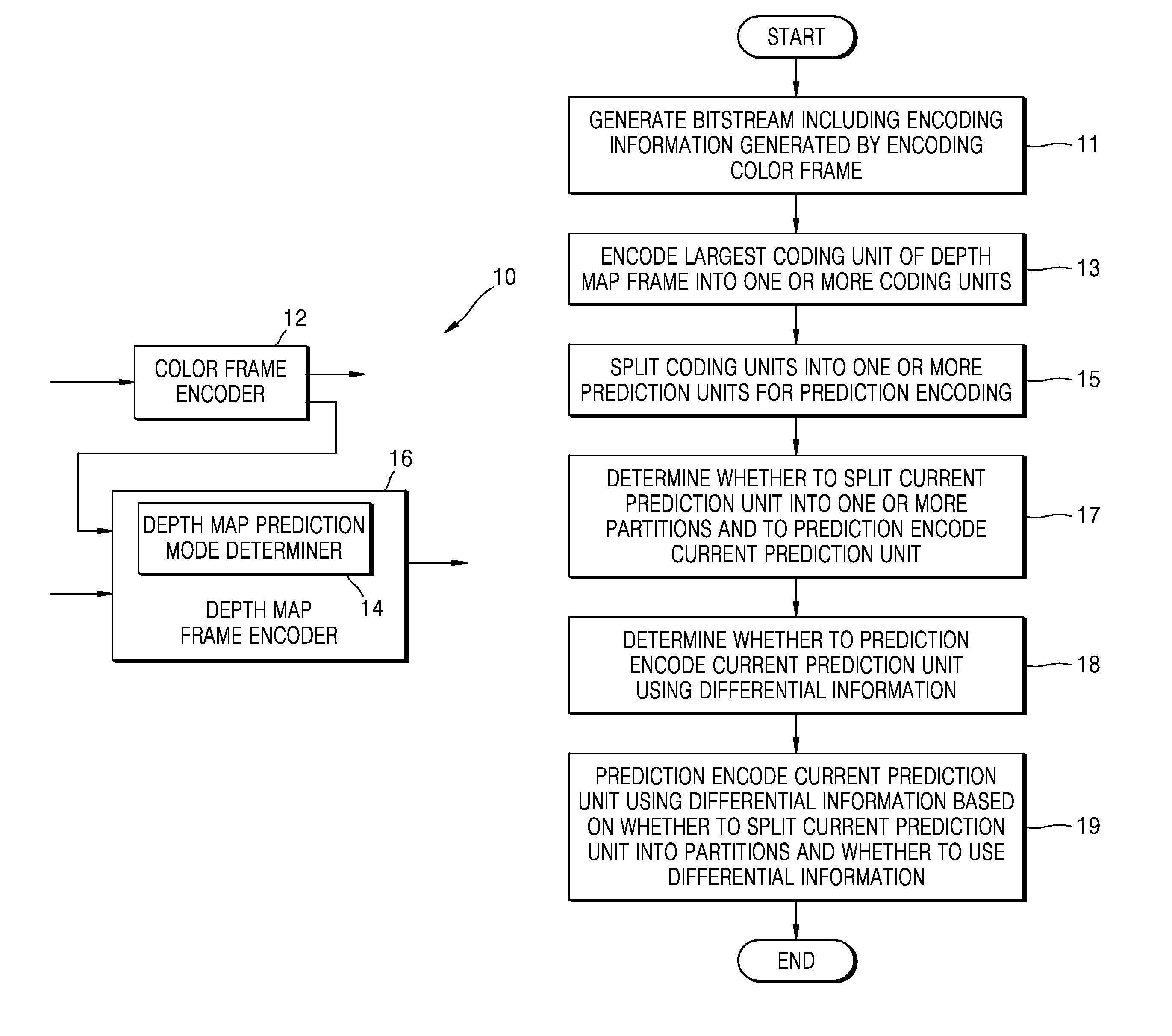

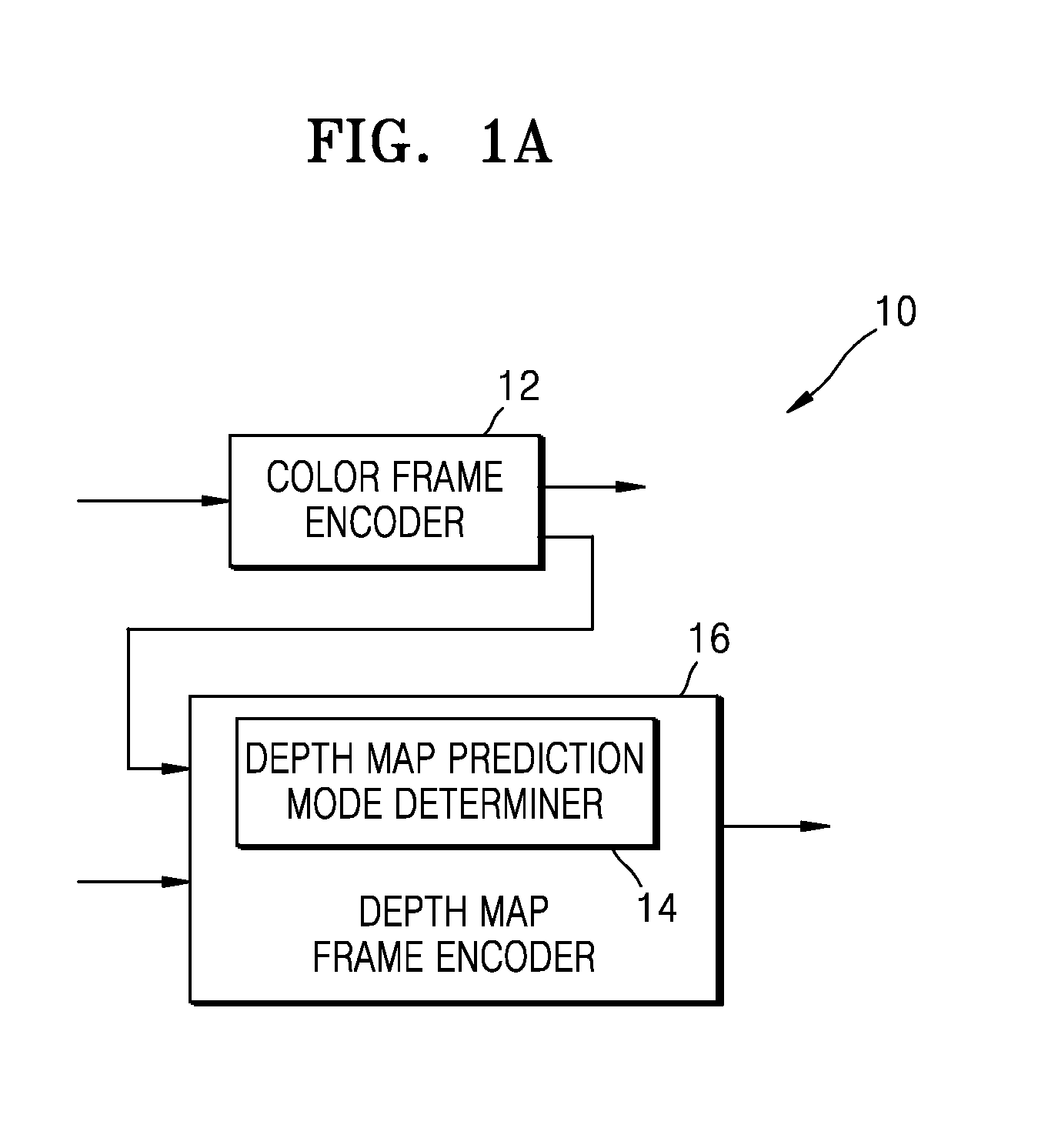

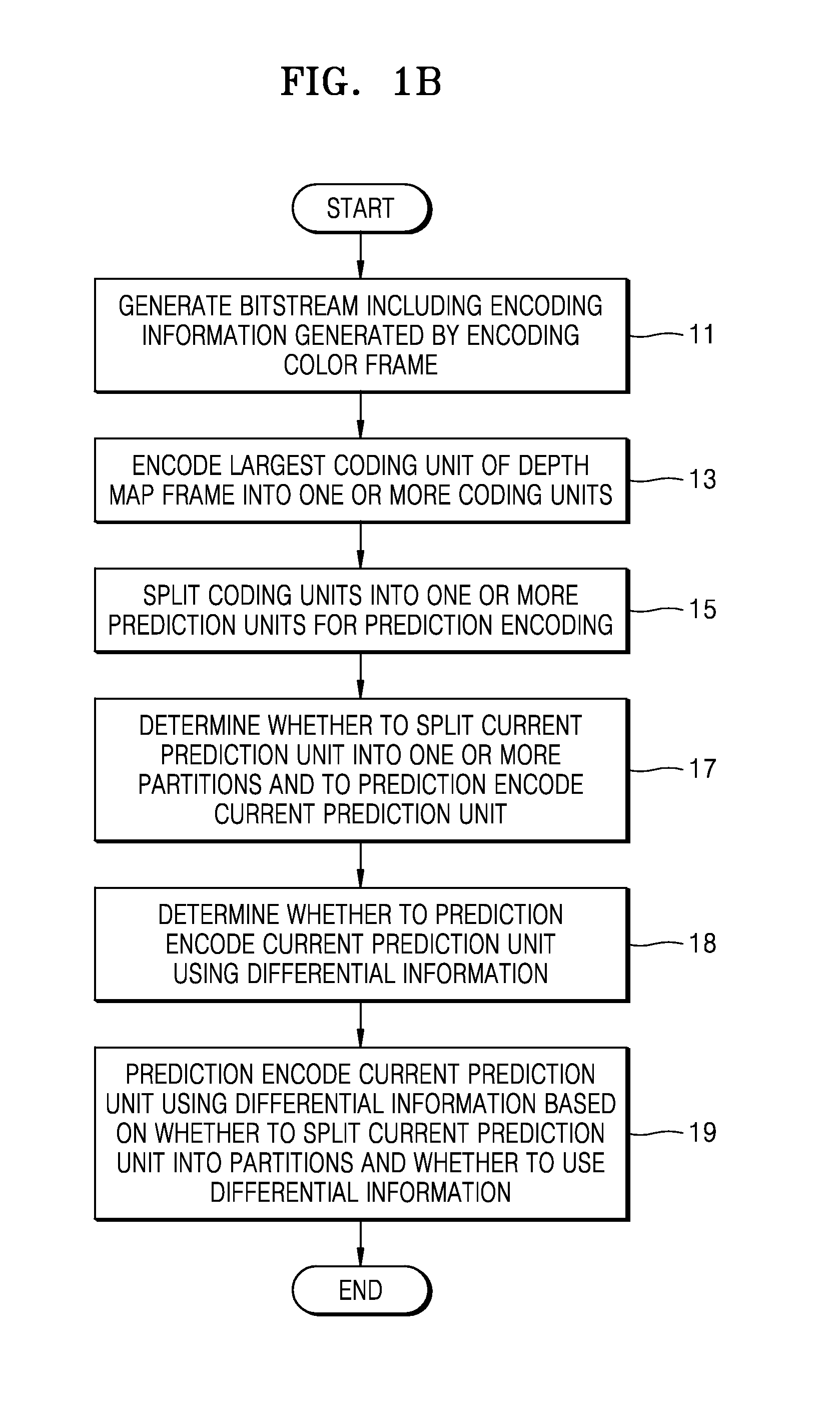

Embodiment Construction

[0048] The optimal prediction mode for encoding is selected based on cost. In this regard, differential information may include information indicating a difference between a representative value of a partition of the original depth map and a representative value of the corresponding partition predicted from neighboring blocks of the current prediction unit. The differential information may include a delta constant partition value (delta CPV, also written Delta DC). The delta CPV is the difference between the DC value of the original depth map partition and the DC value of the predicted partition. For example, the DC value of the original depth map partition may be an average of depth values of blocks included in the partition. The DC value of the predicted partition may be an average of depth values of all neighboring blocks of the partition, or of only some of those neighboring blocks.
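The delta CPV described in paragraph [0048] can be illustrated with a minimal sketch. The function names `dc_value` and `delta_dc`, the sample values, and the use of a floating-point mean are assumptions made for illustration only; they are not taken from the patent, and a real codec would typically use integer arithmetic and its own rounding rules.

```python
def dc_value(depth_values):
    """Return the average of a list of depth values (hypothetical helper)."""
    if not depth_values:
        raise ValueError("a partition needs at least one depth value")
    return sum(depth_values) / len(depth_values)


def delta_dc(original_partition, neighboring_values):
    """Delta CPV: DC of the original partition minus DC of the predicted one.

    The predicted DC is taken here as the average of the neighboring depth
    values supplied by the caller (all neighbors or only some of them).
    """
    return dc_value(original_partition) - dc_value(neighboring_values)


# Illustrative depth values, not data from the patent.
original_partition = [100, 102, 98, 100]   # depth values inside the partition
neighboring_values = [96, 96, 98, 94]      # depth values of neighboring blocks

print(delta_dc(original_partition, neighboring_values))  # 4.0
```

In this sketch the encoder side would signal the value 4.0 as differential information, and a decoder would add it to its own predicted DC value (96.0) to recover the original partition's DC value (100.0).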

[0049] A depth map frame encoder 16 intra prediction decodes the current prediction unit by usi...
