82 patent results for "Depth map coding"

Encoding A Depth Map Into An Image Using Analysis Of Two Consecutive Captured Frames

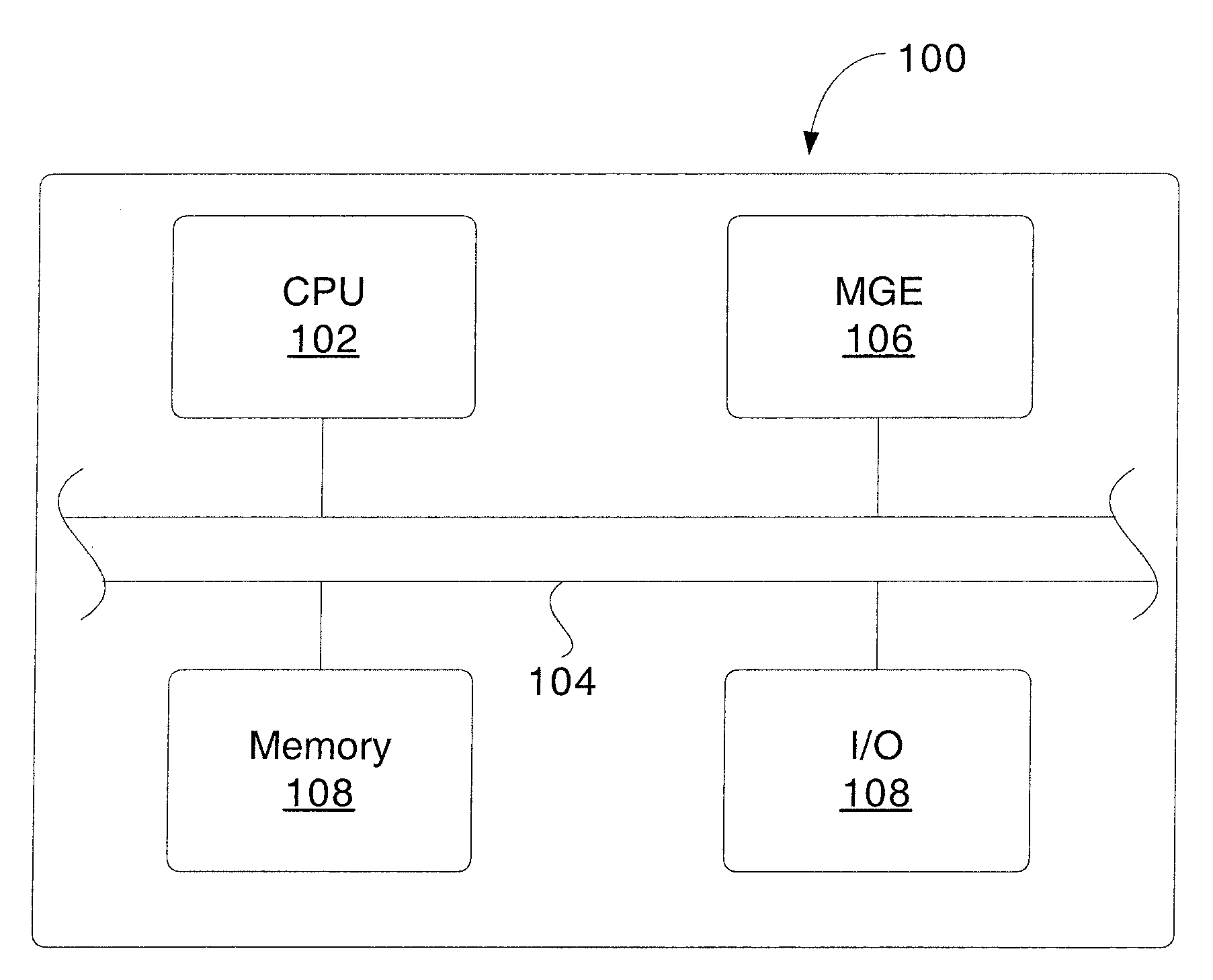

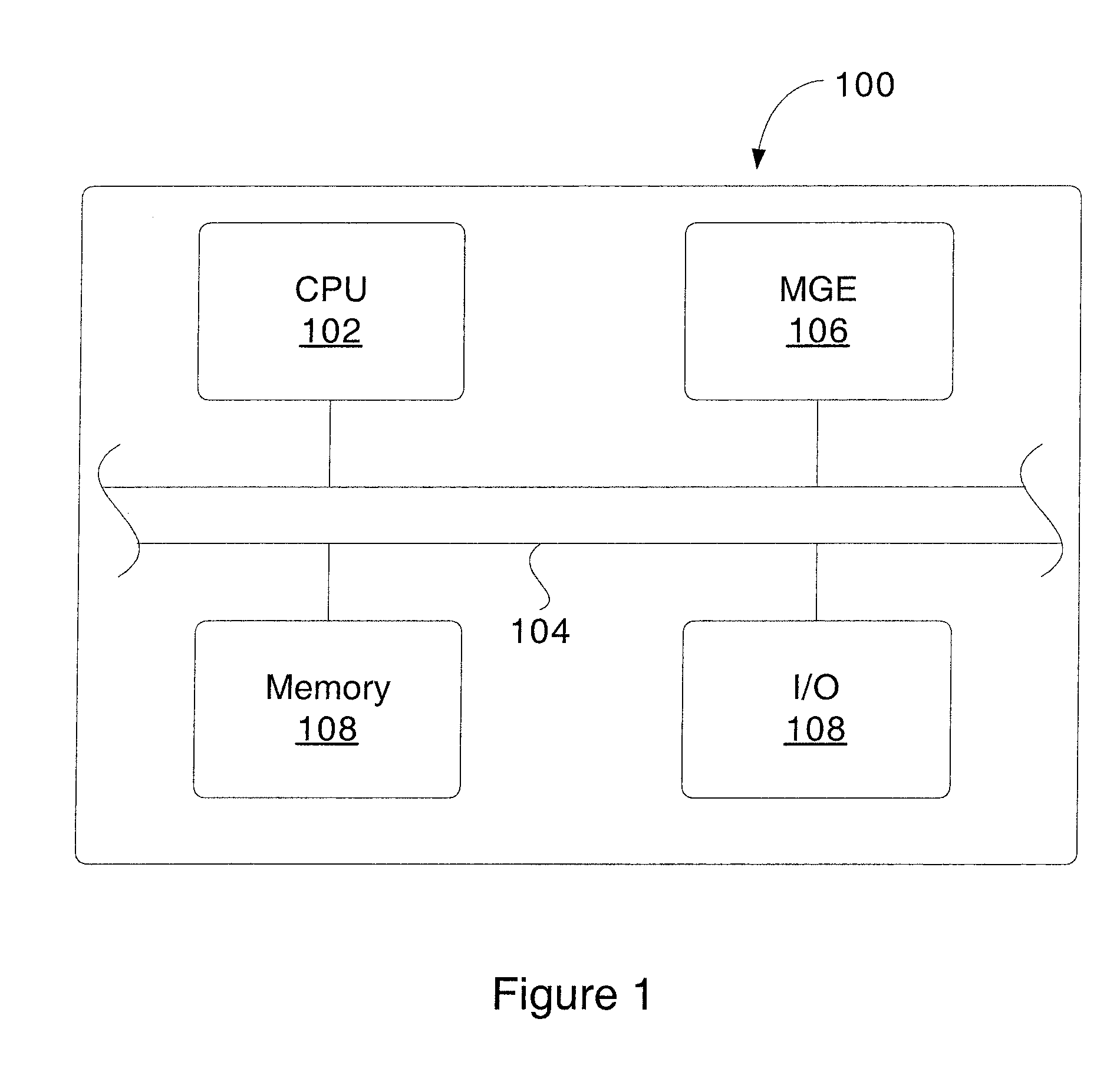

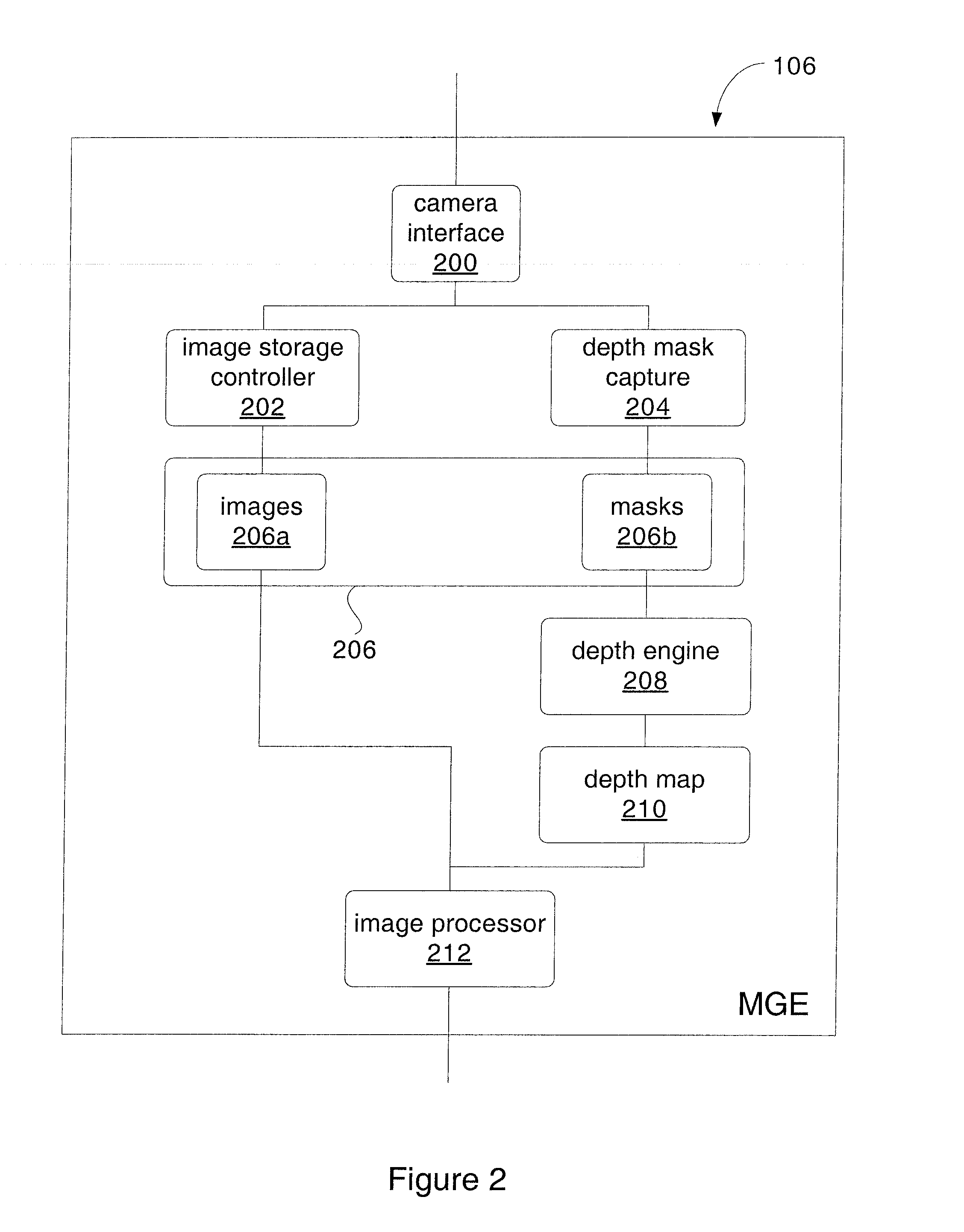

Status: Inactive | Publication: US20090066693A1 | Tags: Digital video signal modification, 3D-image rendering, Depth map coding, Single image

A computer-implemented method of calculating and encoding depth data from captured image data is disclosed. In one operation, the method captures two successive frames of image data through a single image capture device. In another operation, differences between the first frame and the second frame of image data are determined. In still another operation, a depth map is calculated by comparing pixel data of the first frame with pixel data of the second frame. In a further operation, the depth map is encoded into a header of the first frame of image data.

Owner:SEIKO EPSON CORP
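
The abstract states that the depth map is written into the header of the first frame but does not define a header layout. A minimal Python sketch, assuming a hypothetical layout (a `DPTH` tag, 16-bit width and height, a 32-bit byte count, then one 8-bit depth value per pixel, placed in front of the untouched frame payload); `embed_depth_header` and `extract_depth_header` are illustrative names, not part of the patent:

```python
import struct

MAGIC = b"DPTH"  # hypothetical 4-byte tag marking an embedded depth map


def embed_depth_header(frame_payload: bytes, depth: list[list[int]]) -> bytes:
    """Prepend an 8-bit, row-major depth map to a frame payload."""
    height = len(depth)
    width = len(depth[0]) if height else 0
    flat = bytes(v for row in depth for v in row)  # ValueError if a value is outside 0..255
    if len(flat) != width * height:
        raise ValueError("all depth rows must have the same length")
    header = MAGIC + struct.pack(">HHI", width, height, len(flat))
    return header + flat + frame_payload


def extract_depth_header(data: bytes) -> tuple[list[list[int]], bytes]:
    """Inverse of embed_depth_header: return (depth map, original frame payload)."""
    if data[:4] != MAGIC:
        raise ValueError("no depth header present")
    width, height, size = struct.unpack(">HHI", data[4:12])
    flat = data[12:12 + size]
    depth = [list(flat[r * width:(r + 1) * width]) for r in range(height)]
    return depth, data[12 + size:]
```

The explicit byte count lets a reader that ignores the tag skip the depth data and reach the frame payload directly.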

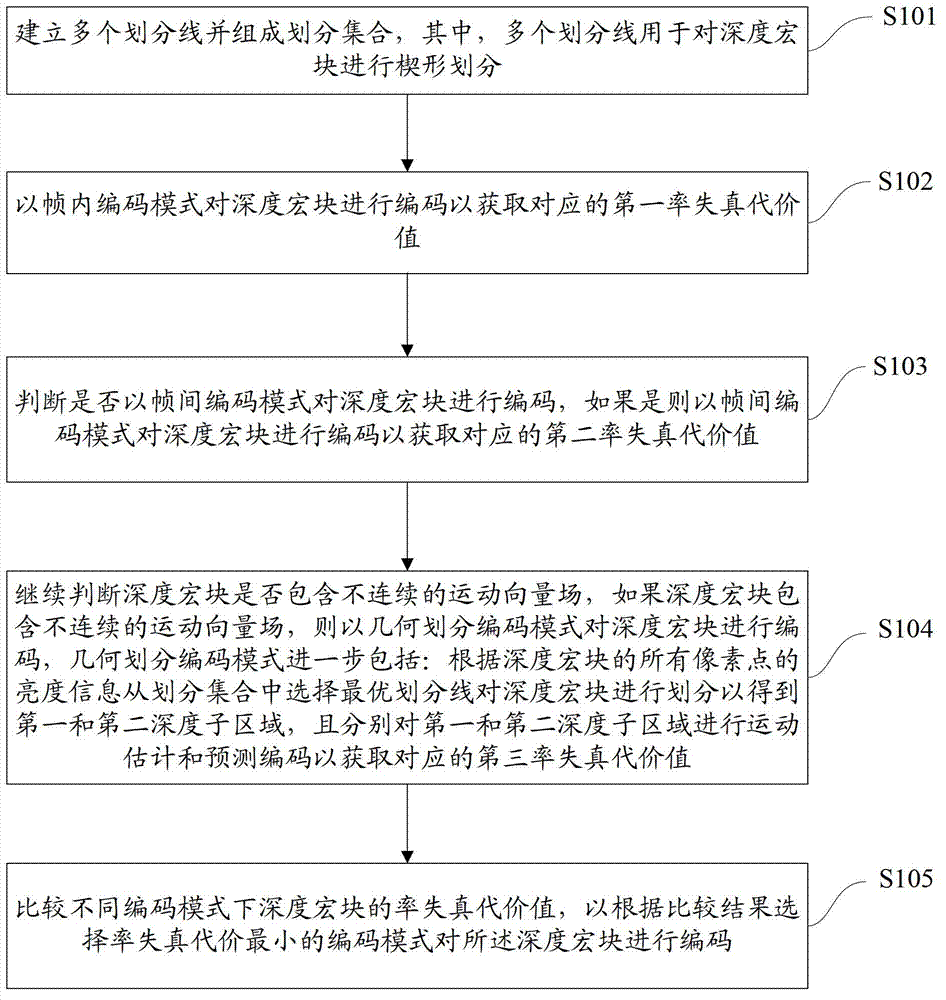

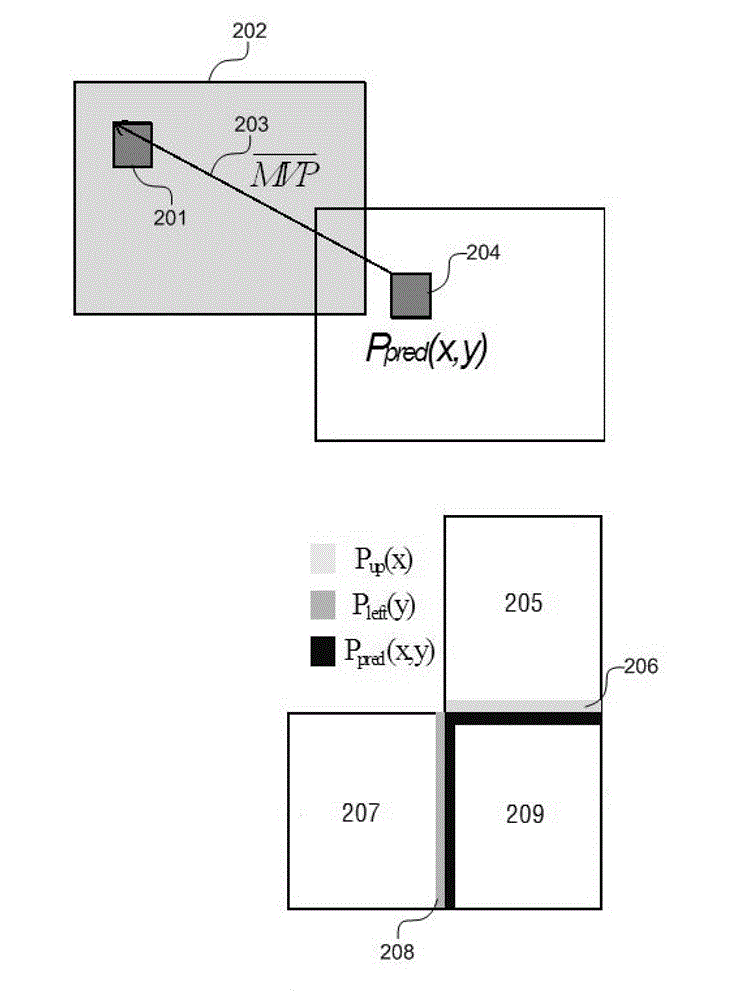

Depth map coding method and device

Status: Active | Publication: CN102790892A | Tags: Efficient coding, Improve compression efficiency, Television systems, Digital video signal modification, Computer architecture, Interframe coding

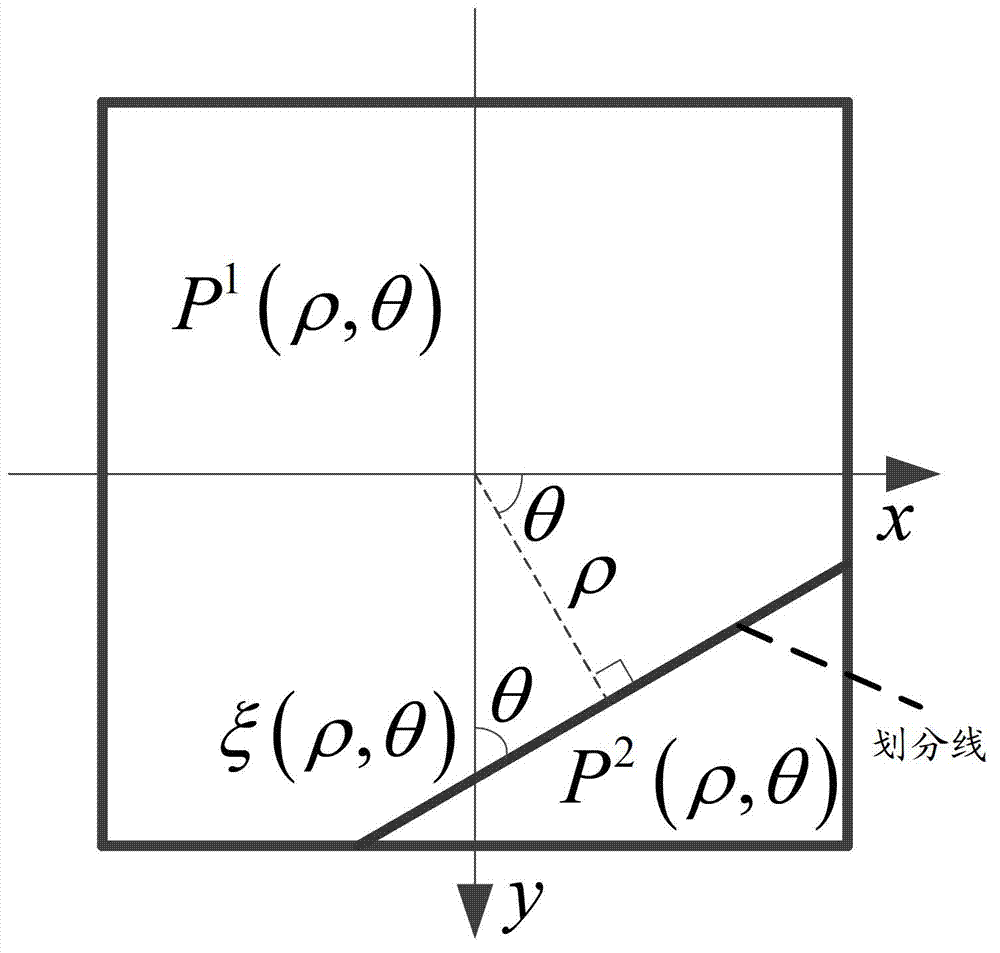

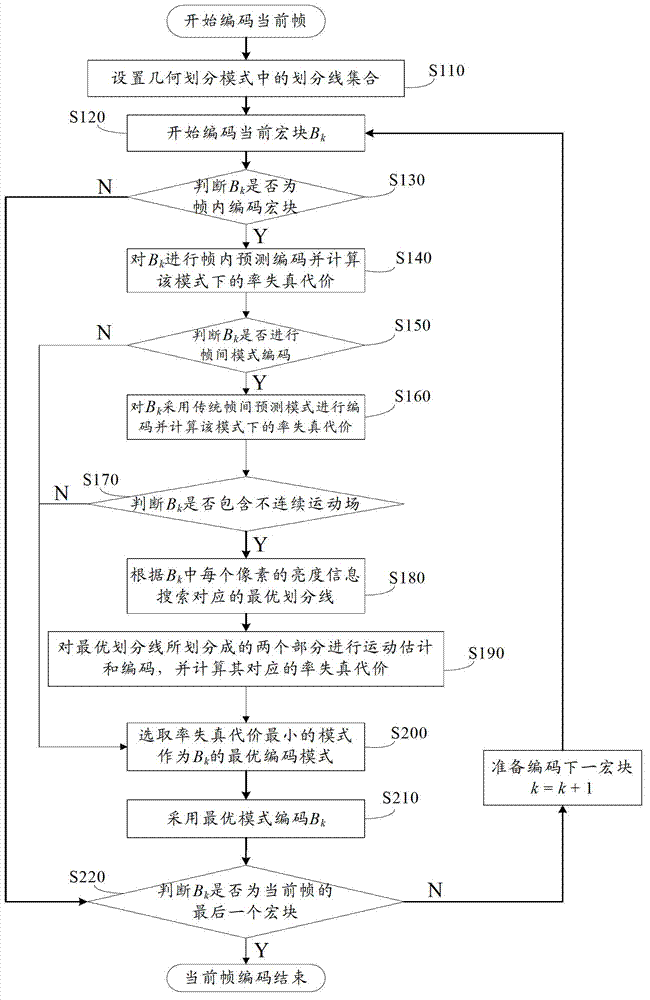

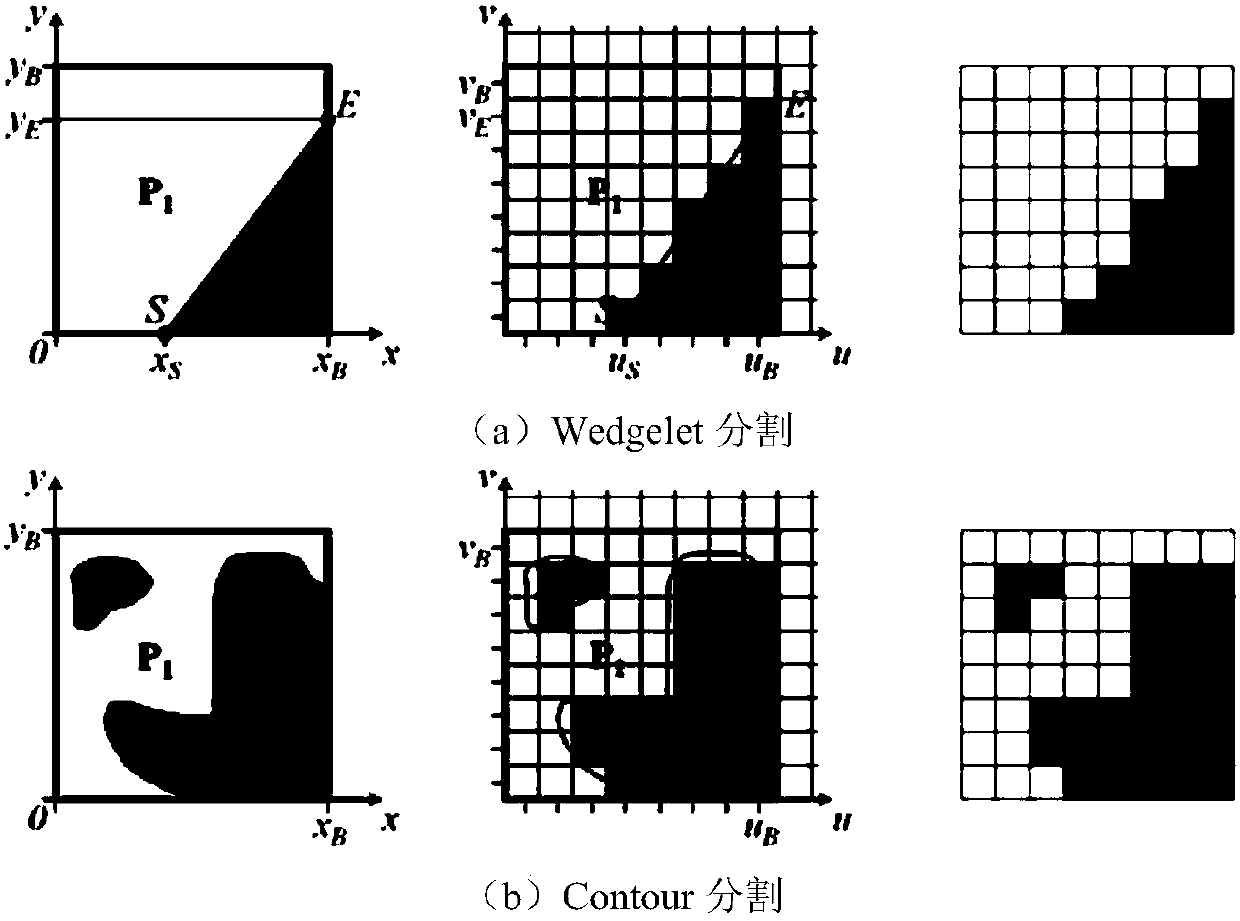

The invention provides a depth map coding method and device. The method includes: establishing a plurality of partition lines used for wedge-shaped partitioning of a depth macroblock and collecting them into a partition set; coding the depth macroblock in an intra coding mode to obtain a first rate-distortion cost; judging whether the depth macroblock is to be coded in an inter coding mode and, if so, coding it in that mode to obtain a second rate-distortion cost; further judging whether the depth macroblock contains a discontinuous motion vector field and, if so, coding it in a geometric partitioning coding mode, which selects the optimal partition line to split the macroblock into a first and a second depth subregion and applies predictive coding to both subregions to obtain a third rate-distortion cost; and comparing the rate-distortion costs of the different coding modes so that the depth macroblock is coded in the mode with the minimum cost. The method and device improve depth map compression efficiency and reduce coding complexity.

Owner:TSINGHUA UNIV +1
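
The geometric partitioning mode and the final mode choice can be illustrated with a small sketch. Assumptions not taken from the patent: candidate partition lines join two integer points on the block border, each subregion is predicted by its mean, distortion is the sum of squared errors, and the rate-distortion costs of the competing modes are already known. `best_wedge` and `pick_mode` are hypothetical helpers:

```python
from itertools import combinations


def boundary_points(n):
    """All integer (x, y) positions on the border of an n x n block."""
    pts = set()
    for i in range(n):
        pts.update({(i, 0), (i, n - 1), (0, i), (n - 1, i)})
    return sorted(pts)


def wedge_mask(n, p0, p1):
    """True for pixels on one side of the line p0 -> p1 (sign of the 2-D cross product)."""
    (x0, y0), (x1, y1) = p0, p1
    return [[(x1 - x0) * (y - y0) - (y1 - y0) * (x - x0) >= 0 for x in range(n)]
            for y in range(n)]


def sse_constant(values):
    """Squared error when a region is approximated by its mean value."""
    m = sum(values) / len(values)
    return sum((v - m) ** 2 for v in values)


def best_wedge(block):
    """Try every border-to-border line exhaustively; return (SSE, p0, p1)."""
    n = len(block)
    best = None
    for p0, p1 in combinations(boundary_points(n), 2):
        mask = wedge_mask(n, p0, p1)
        a = [block[y][x] for y in range(n) for x in range(n) if mask[y][x]]
        b = [block[y][x] for y in range(n) for x in range(n) if not mask[y][x]]
        if not a or not b:
            continue  # the line does not actually split the block
        cost = sse_constant(a) + sse_constant(b)
        if best is None or cost < best[0]:
            best = (cost, p0, p1)
    return best


def pick_mode(rd_costs):
    """Choose the coding mode with the minimum rate-distortion cost."""
    return min(rd_costs, key=rd_costs.get)
```

A real encoder would add the partition-line index and the coded residual to the rate term before comparing modes.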

Method and apparatus for block-based depth map coding and 3D video coding method using the same

Status: Active | Publication: US20100231688A1 | Tags: Maximize advantage, Efficient compression, Pulse modulation television signal transmission, Picture reproducers using cathode ray tubes, Computer architecture, Depth map coding

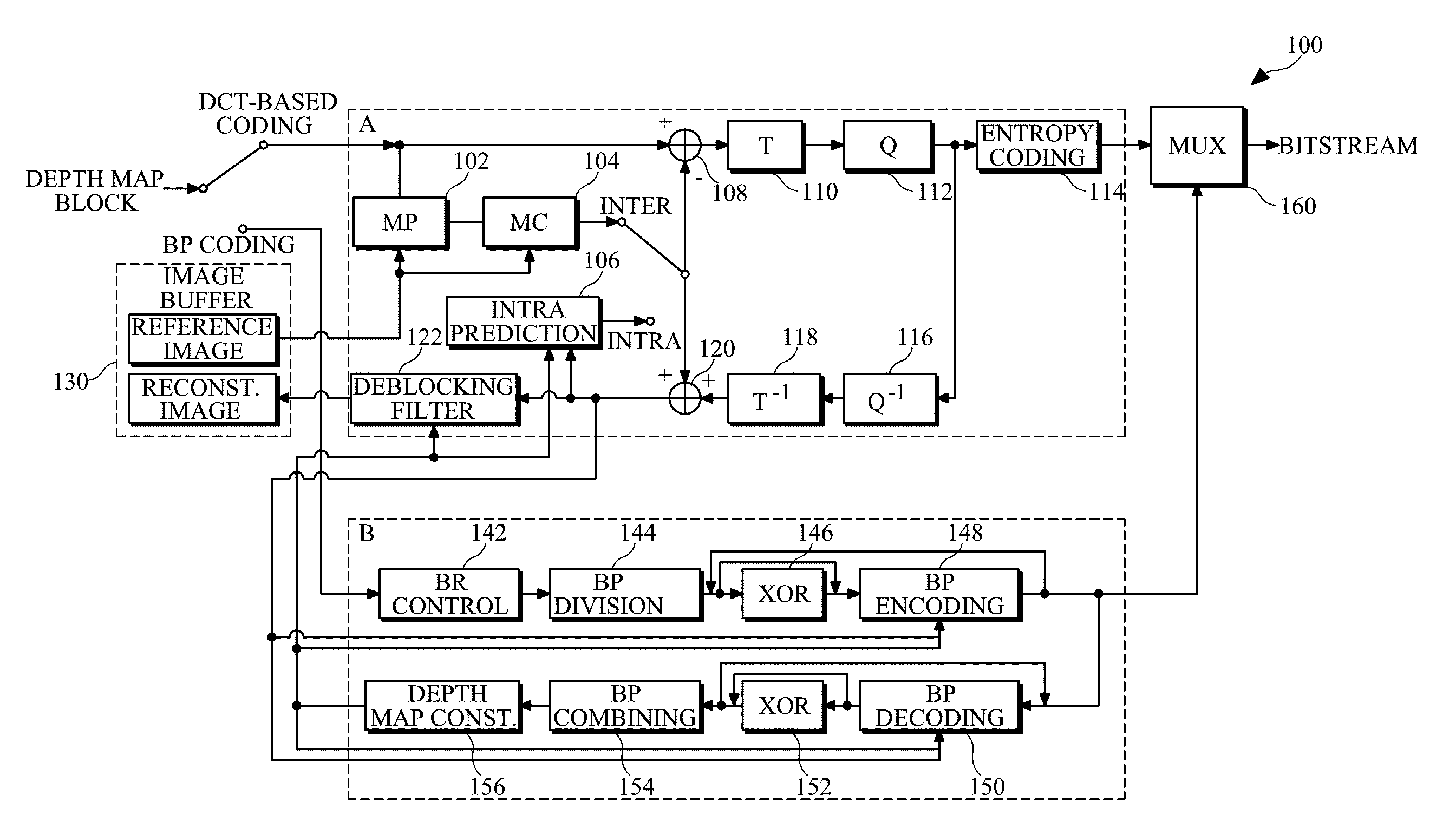

Provided are a block-based depth map coding method and apparatus, and a 3D video coding method using the same. The depth map coding method decodes a received bitstream in units of blocks of a predetermined size using a bitplane decoding method to reconstruct a depth map. For example, it may decode each block adaptively with either the bitplane decoding method or an existing Discrete Cosine Transform (DCT)-based decoding method, according to decoded coding mode information. The bitplane decoding method may include adaptively performing an XOR operation in units of bitplane blocks; for example, whether to perform the XOR operation may be decided for each bitplane block from the decoded value of the XOR operation information contained in the bitstream.

Owner:UNIV IND COOP GRP OF KYUNG HEE UNIV
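
A sketch of bitplane decomposition with per-plane XOR, assuming 8-bit depth samples, XOR against the next more significant plane, and a hypothetical encoder rule that sets the XOR flag when it reduces the number of 1-bits. The patent signals the decision as XOR operation information in the bitstream, but the abstract does not give the encoder's criterion:

```python
def to_bitplanes(block, bits=8):
    """Split a block of unsigned samples into bitplanes, most significant plane first."""
    return [[[(v >> b) & 1 for v in row] for row in block] for b in range(bits - 1, -1, -1)]


def from_bitplanes(planes):
    """Inverse of to_bitplanes."""
    bits = len(planes)
    rows, cols = len(planes[0]), len(planes[0][0])
    out = [[0] * cols for _ in range(rows)]
    for i, plane in enumerate(planes):
        for y in range(rows):
            for x in range(cols):
                out[y][x] |= plane[y][x] << (bits - 1 - i)
    return out


def xor_planes(p, q):
    return [[a ^ b for a, b in zip(r1, r2)] for r1, r2 in zip(p, q)]


def choose_xor_flags(planes):
    """Assumed rule: XOR a plane with the one above it if that leaves fewer 1-bits."""
    flags = [False]  # the most significant plane is always coded as is
    for i in range(1, len(planes)):
        ones_plain = sum(map(sum, planes[i]))
        ones_xor = sum(map(sum, xor_planes(planes[i], planes[i - 1])))
        flags.append(ones_xor < ones_plain)
    return flags


def apply_xor(planes, flags):
    """Encoder side: replace each flagged plane by (plane XOR previous original plane)."""
    return [planes[0]] + [xor_planes(planes[i], planes[i - 1]) if flags[i] else planes[i]
                          for i in range(1, len(planes))]


def undo_xor(coded, flags):
    """Decoder side: XOR with the already reconstructed previous plane."""
    out = [coded[0]]
    for i in range(1, len(coded)):
        out.append(xor_planes(coded[i], out[i - 1]) if flags[i] else coded[i])
    return out
```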

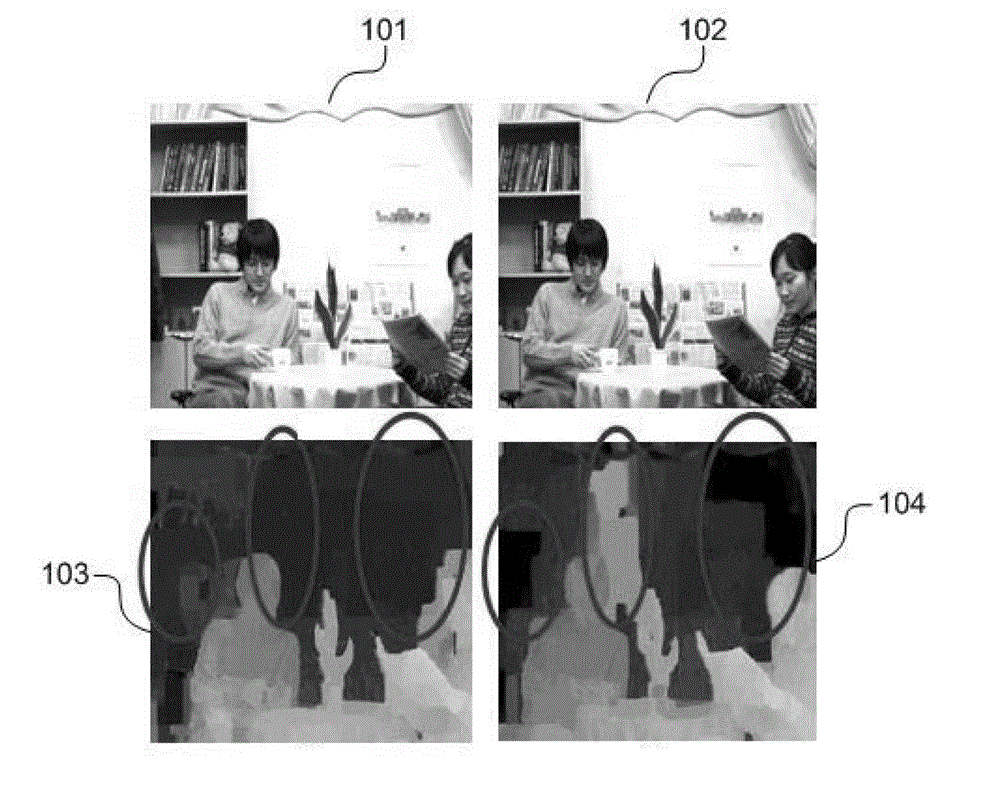

Hybrid skip mode used for depth map encoding and decoding

Status: Active | Publication: CN102752595A | Tags: Television systems, Digital video signal modification, Pattern recognition, Depth map coding

The invention provides a hybrid skip mode for depth map encoding and decoding. Unlike a texture view, a depth map consists mostly of smooth areas without complex texture, with rapid pixel-value changes occurring mainly at object edges. The conventional inter-frame prediction skip mode is very effective for encoding texture views, but it includes no intra-frame prediction, which is very effective for encoding smooth areas. The proposed hybrid skip mode couples the inter-frame prediction skip mode with several intra-frame prediction modes, and the prediction mode is selected by calculating the side matching distortion (SMD) of each candidate. Because no additional indicator bit is required and the bitstream syntax is unchanged, high coding efficiency is maintained; moreover, the depth map encoder can be implemented relatively easily as an extension of an existing standard.

Owner:HONG KONG APPLIED SCI & TECH RES INST
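
Because side matching distortion is computed only from the candidate prediction and already reconstructed neighboring pixels, a decoder can repeat the same selection, which is why no indicator bit is needed. A minimal sketch, assuming SMD is the sum of absolute differences along the top and left block boundaries and using an intra DC predictor as the only intra candidate (the patent couples several intra modes); the function names are hypothetical:

```python
def side_matching_distortion(block, top_row, left_col):
    """SAD between the block's first row/column and the reconstructed pixels
    directly above and to the left of it (the corner pixel is counted in both)."""
    sad_top = sum(abs(p - q) for p, q in zip(block[0], top_row))
    sad_left = sum(abs(row[0] - q) for row, q in zip(block, left_col))
    return sad_top + sad_left


def dc_prediction(top_row, left_col, n):
    """Simple intra DC predictor built from the same reconstructed neighbors."""
    dc = round((sum(top_row) + sum(left_col)) / (len(top_row) + len(left_col)))
    return [[dc] * n for _ in range(n)]


def select_hybrid_skip(candidates, top_row, left_col):
    """Return the name of the candidate prediction with the smallest SMD."""
    return min(candidates,
               key=lambda name: side_matching_distortion(candidates[name], top_row, left_col))
```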

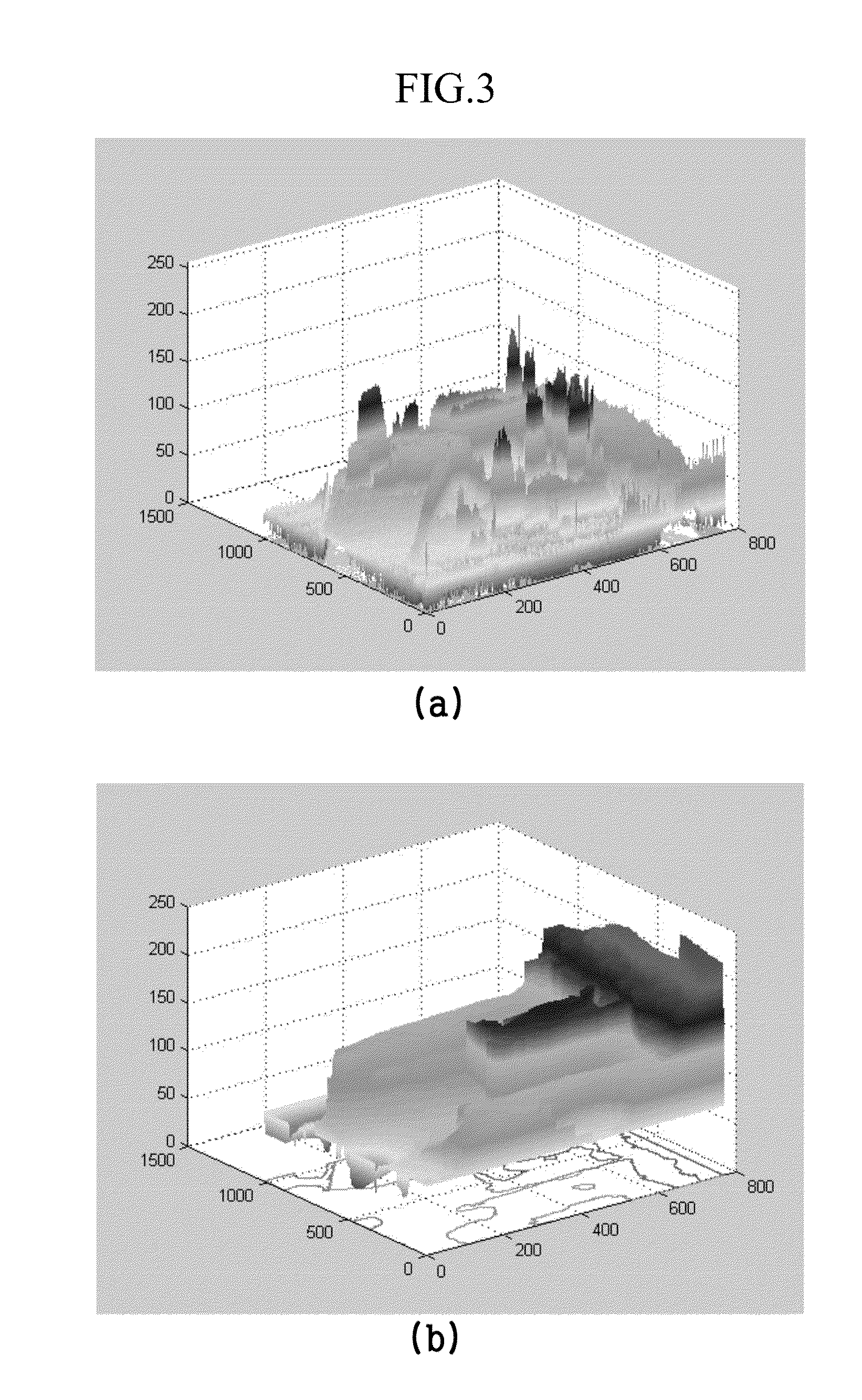

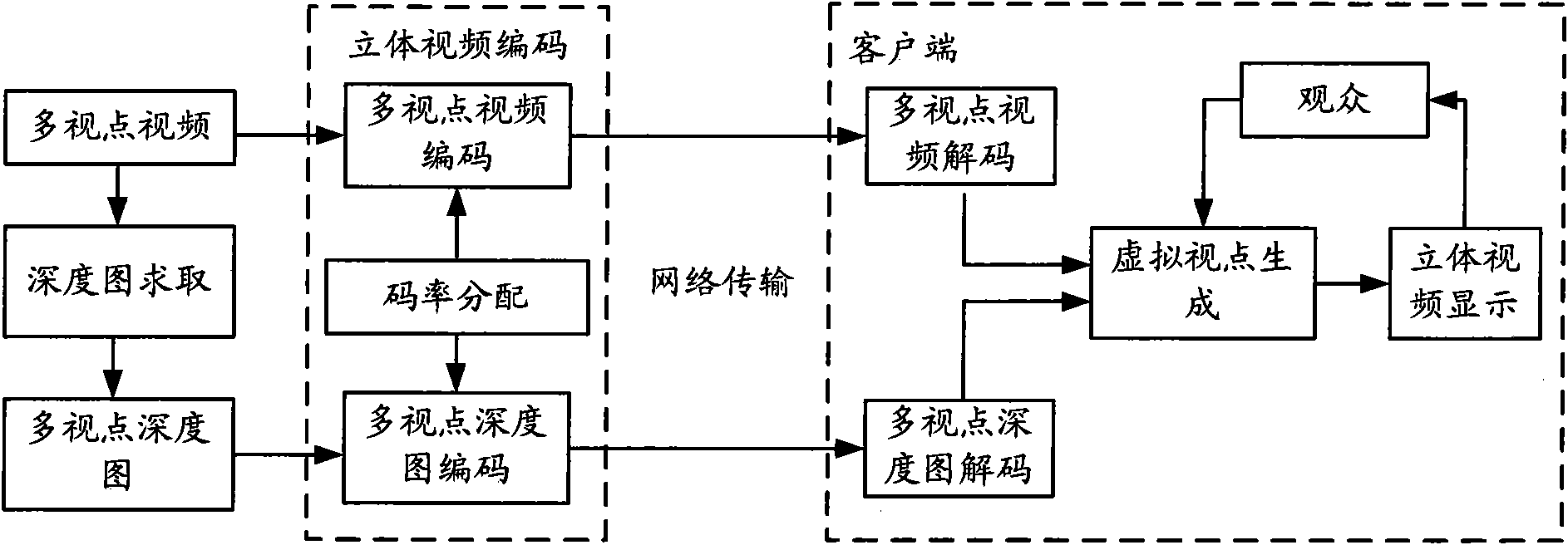

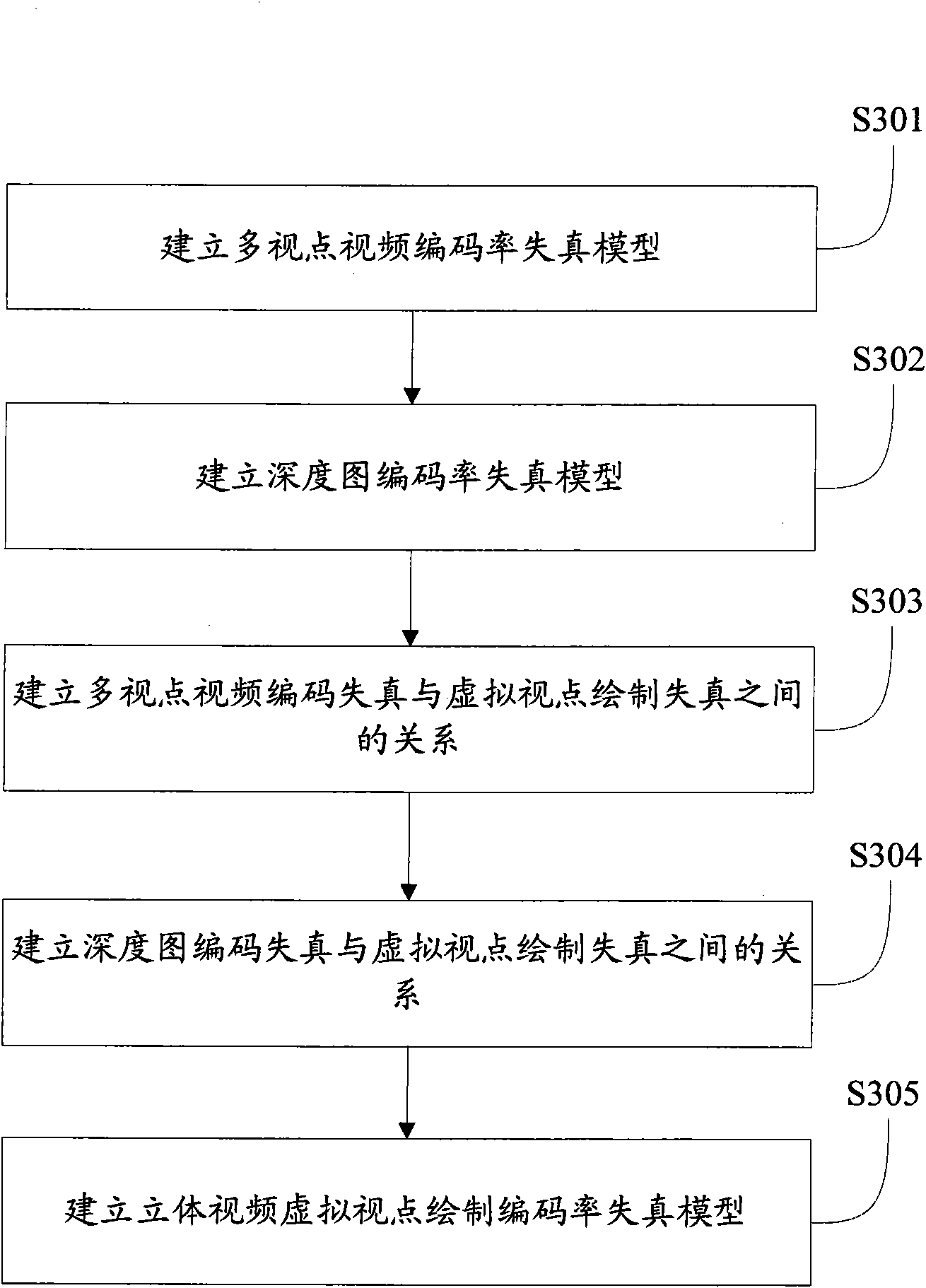

Method for estimating the rate-distortion performance of stereo video coding

Status: Active | Publication: CN101888566A | Tags: Improve coding efficiency, Television systems, Digital video signal modification, Depth map coding, Estimation methods

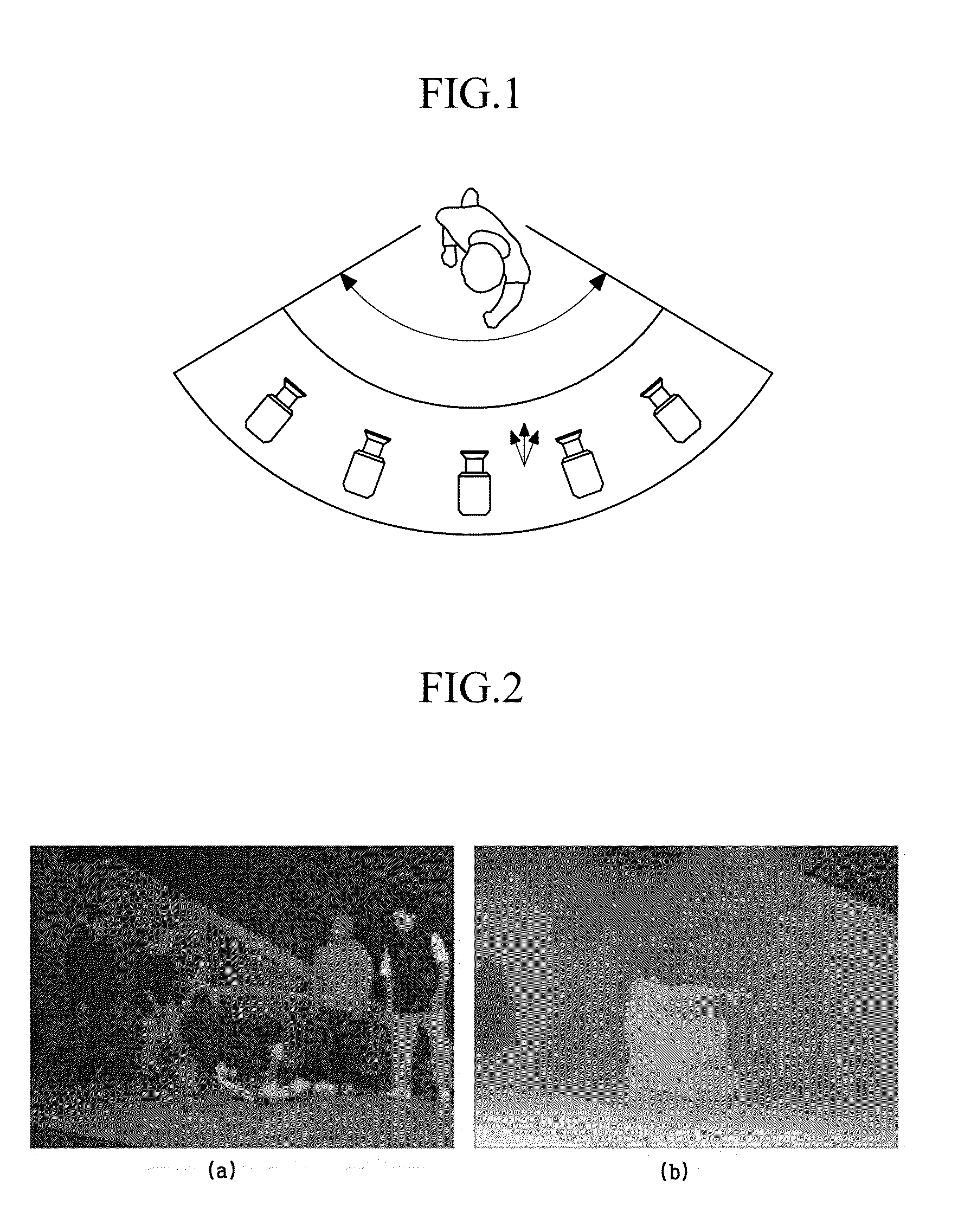

The invention provides a method for estimating the rate-distortion performance of stereo video coding, comprising: acquiring a multi-view video and a corresponding multi-view depth map; obtaining a rate-distortion model for multi-view video coding and a rate-distortion model for multi-view depth map coding; obtaining the relationship between multi-view video coding distortion and virtual view rendering distortion, and the relationship between multi-view depth map coding distortion and virtual view rendering distortion; and finally establishing, from these relationships, a rate-distortion model for virtual views rendered from the coded stereo video. With this analysis method, the rate-distortion performance of rendered virtual views can be established accurately and quickly, providing model guidance for problems such as coding parameter selection and bit rate allocation in stereo video.

Owner:TSINGHUA UNIV

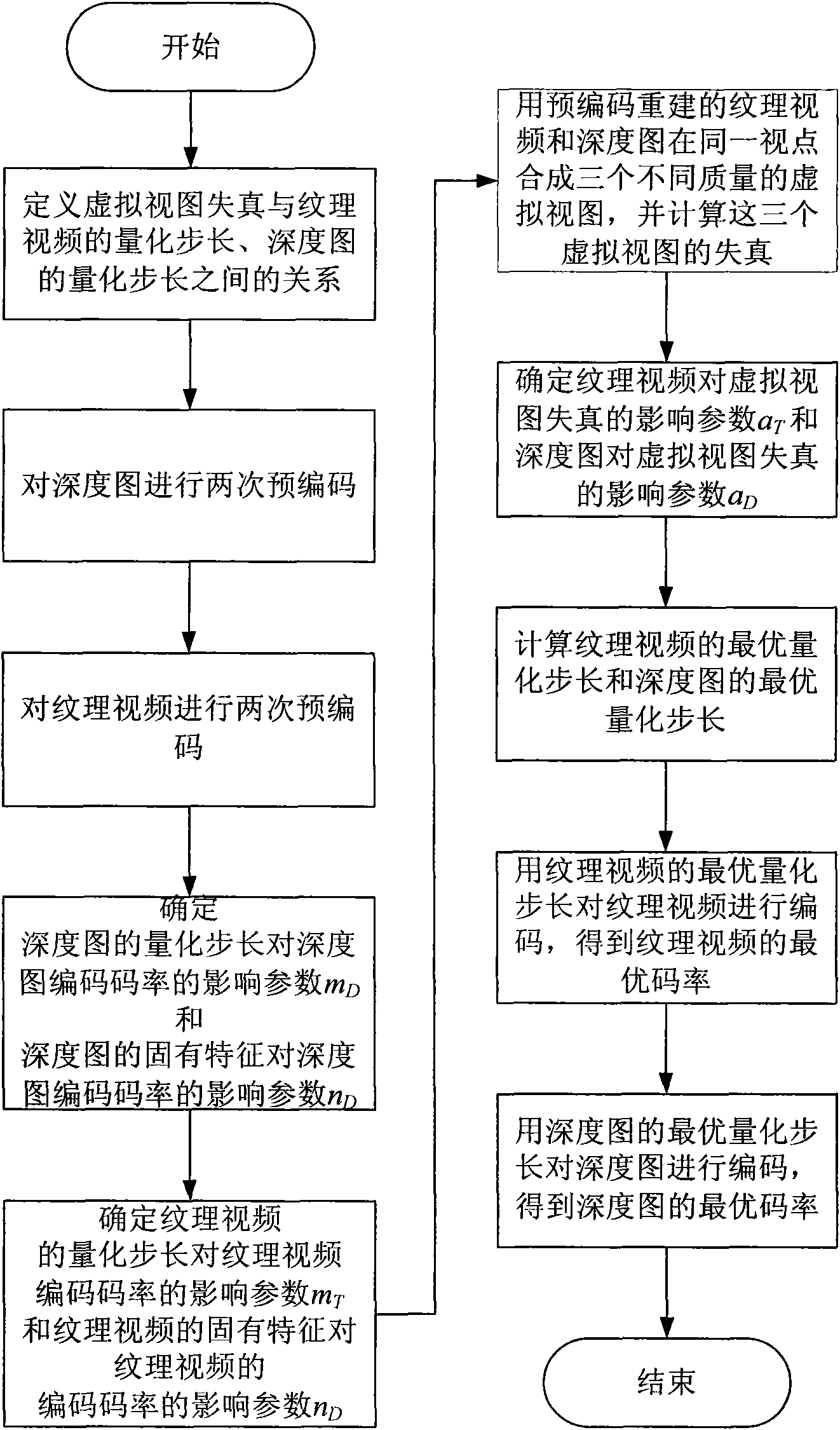

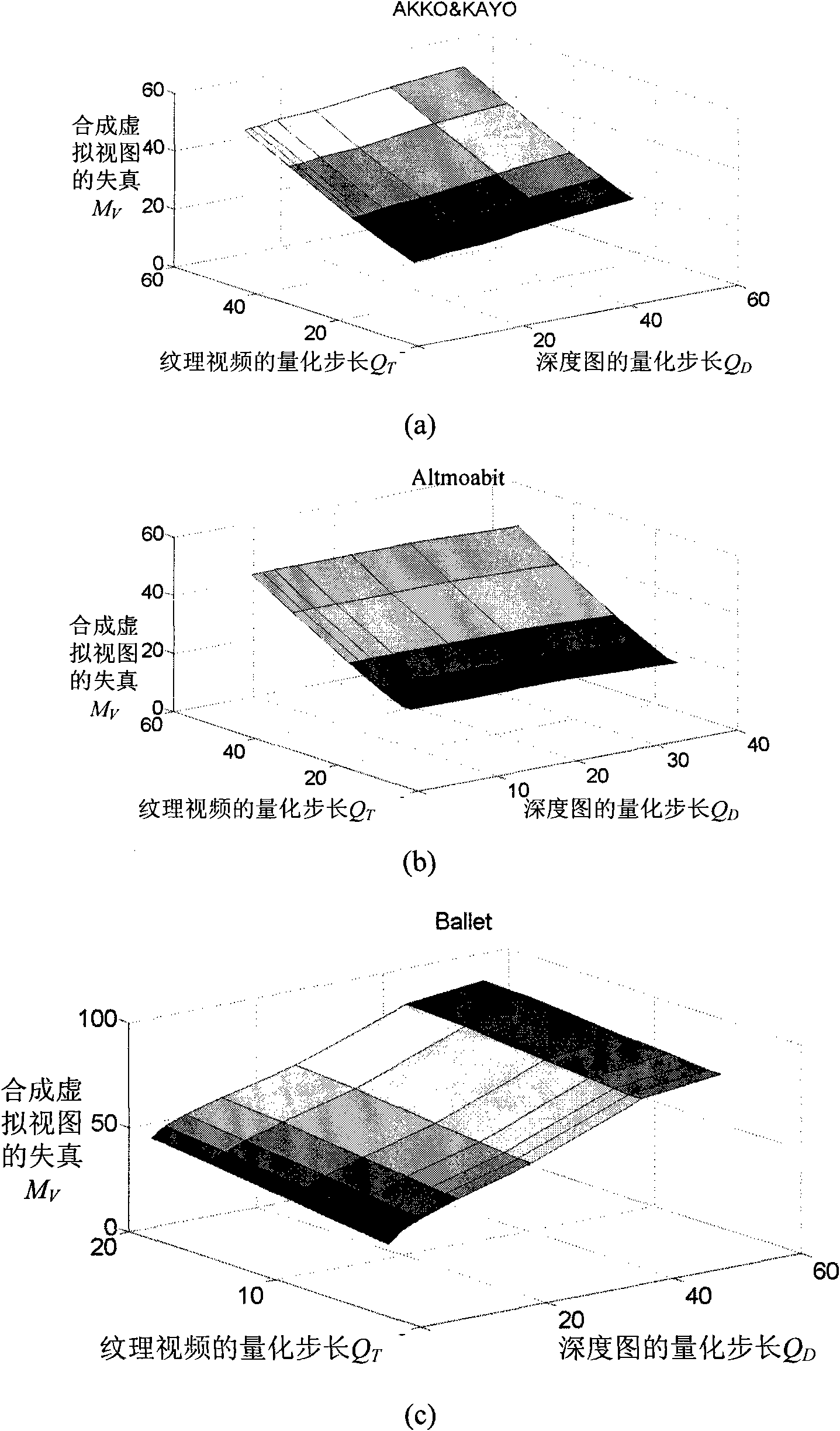

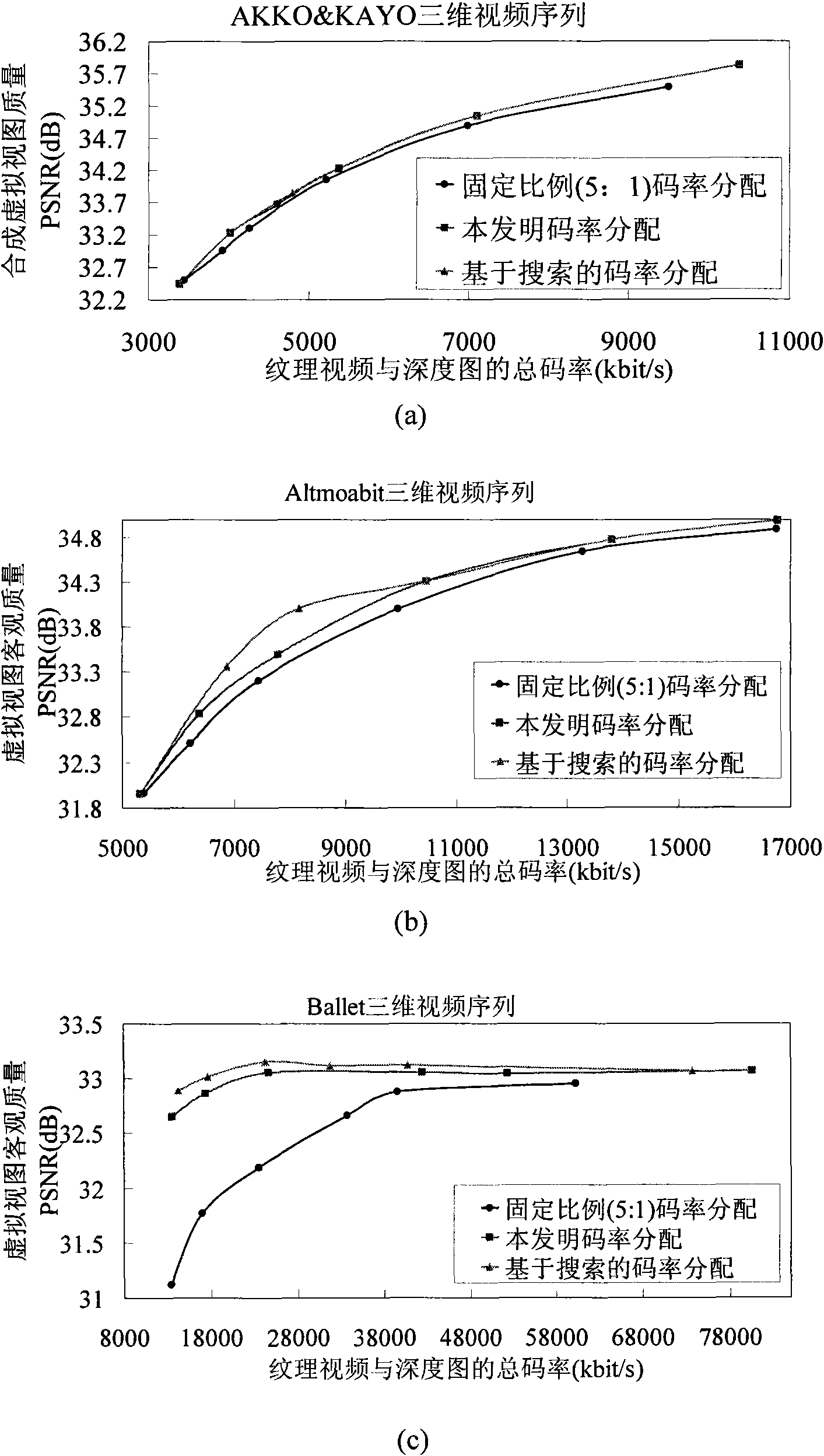

Allocation method for optimal code rates of texture video and depth map based on models

Status: Inactive | Publication: CN101835056A | Tags: Quality improvement, Reduce computational complexity, Television systems, Digital video signal modification, Pattern recognition, Depth map coding

The invention discloses a model-based method for allocating the optimal code rates of a texture video and a depth map, mainly addressing rate allocation between texture video and depth maps in three-dimensional video coding. The approach is as follows: determine the relationship between virtual view distortion and the quantization steps of the texture video and of the depth map; calculate the optimal quantization step of the texture video and of the depth map using the relationship between each stream's coding rate and its quantization step; and encode the texture video and the depth map with these optimal quantization steps, thereby achieving the optimal rate allocation between them. The method has low complexity and can reach the optimal code rates of the texture video and the depth map. It can be used for rate allocation between texture video and depth maps in three-dimensional video coding.

Owner:XIDIAN UNIV
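
The abstract does not state the model forms. Assuming, for illustration only, that virtual view distortion is linear in the two quantization steps, D = a·Qt + b·Qd, and that each stream's rate is inversely proportional to its step, Rt = ct/Qt and Rd = cd/Qd, minimizing D under Rt + Rd = R with a Lagrange multiplier gives Qt = (√(a·ct) + √(b·cd))·√(ct/a)/R and Qd = (√(a·ct) + √(b·cd))·√(cd/b)/R. The function name and model constants are hypothetical:

```python
import math


def optimal_steps(a, b, ct, cd, r_total):
    """Minimize D = a*Qt + b*Qd subject to ct/Qt + cd/Qd = r_total (all inputs > 0).

    Setting the Lagrangian derivatives to zero gives Qt = sqrt(mu*ct/a) and
    Qd = sqrt(mu*cd/b); the rate constraint then fixes sqrt(mu).
    """
    sqrt_mu = (math.sqrt(a * ct) + math.sqrt(b * cd)) / r_total
    qt = sqrt_mu * math.sqrt(ct / a)
    qd = sqrt_mu * math.sqrt(cd / b)
    return qt, qd
```

In practice a, b, ct and cd would be fitted per sequence, and the resulting steps mapped to the nearest allowed quantization parameters.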

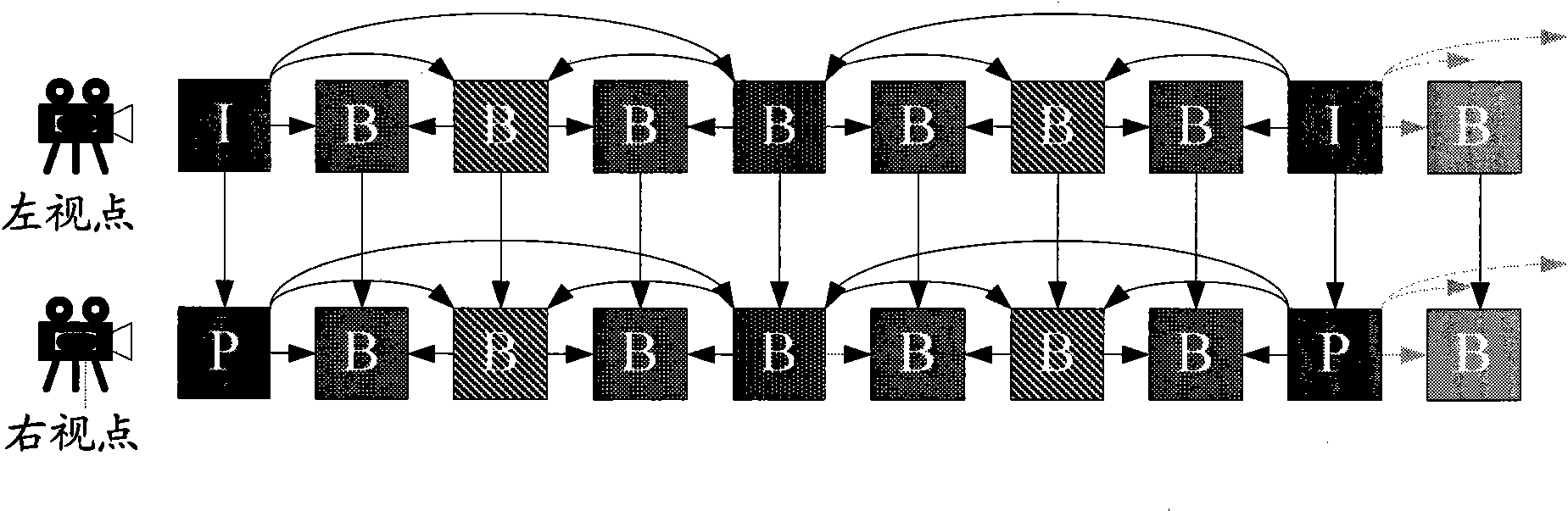

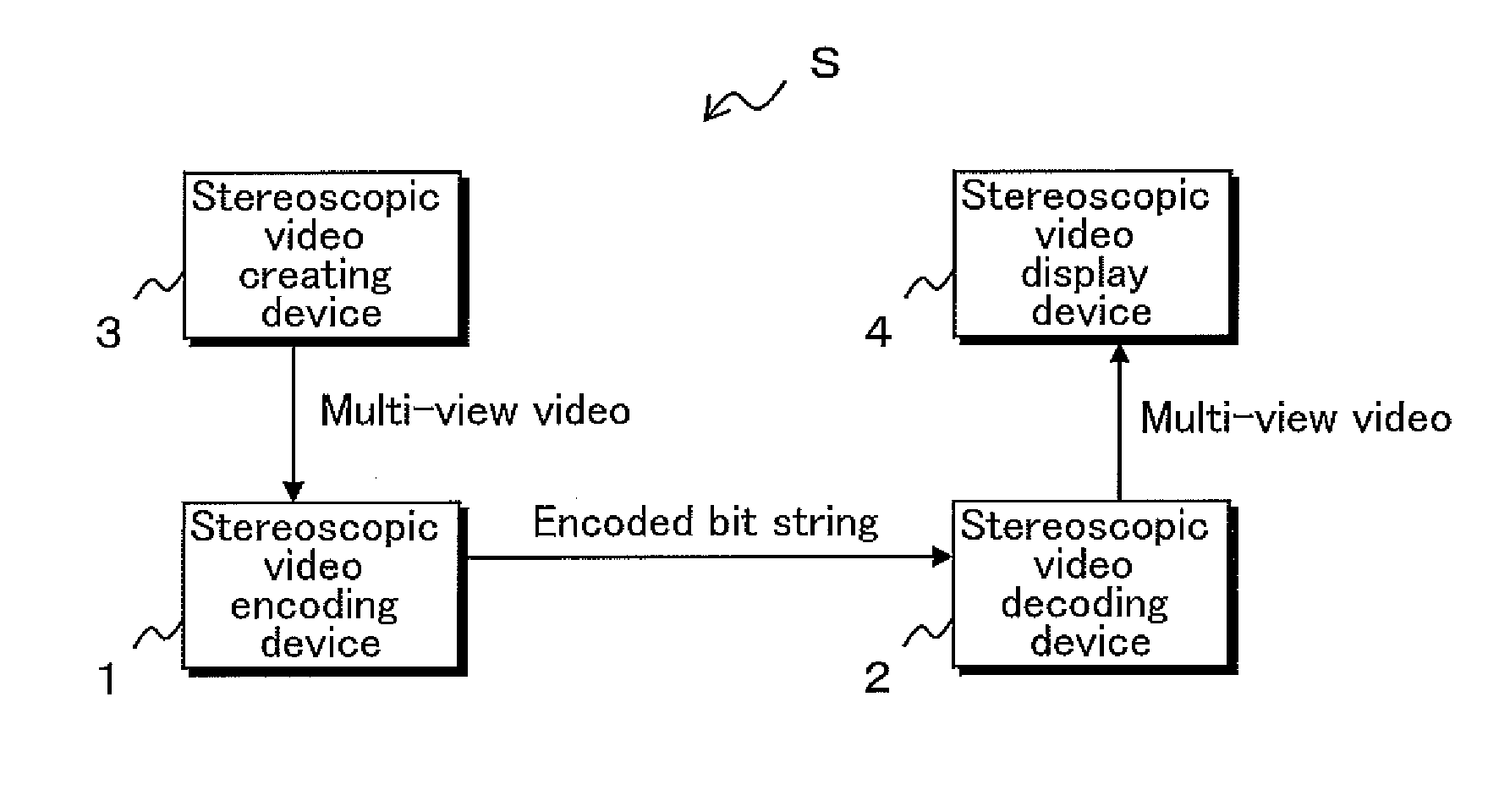

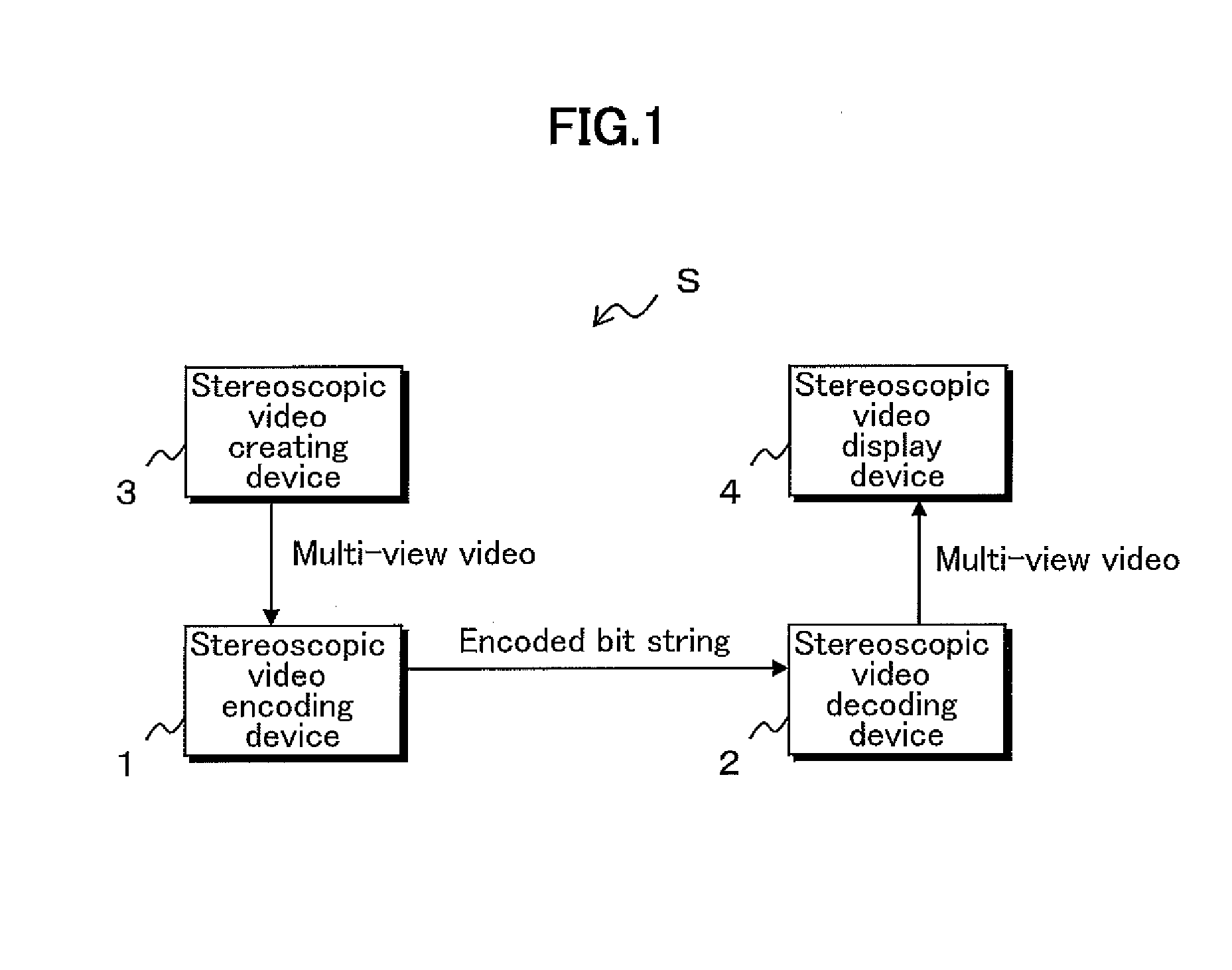

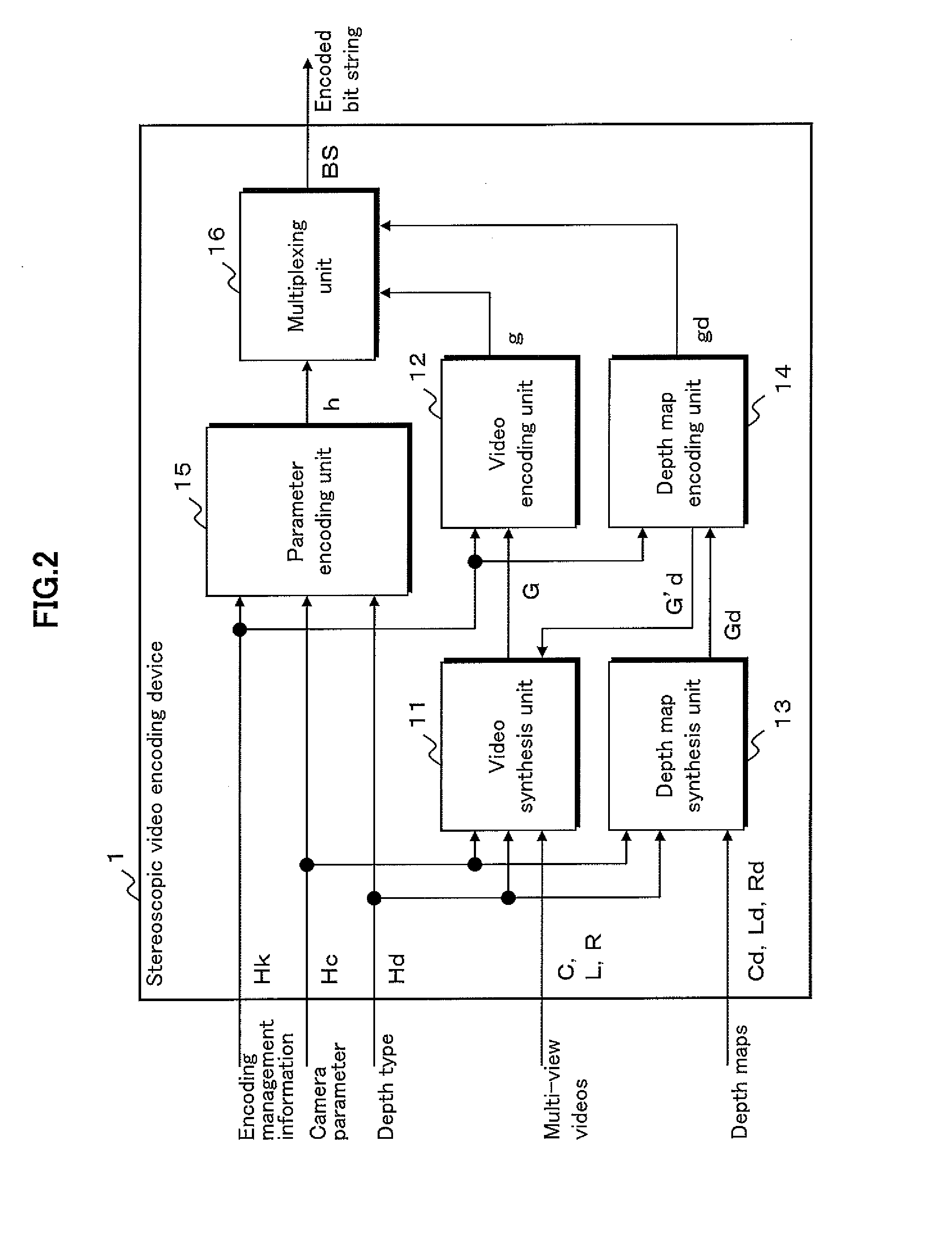

Stereoscopic video encoding device, stereoscopic video decoding device, stereoscopic video encoding method, stereoscopic video decoding method, stereoscopic video encoding program, and stereoscopic video decoding program

Status: Inactive | Publication: US20150341614A1 | Tags: Avoid misuse, Quick extraction, Digital video signal modification, Stereoscopic systems, Multiplexing, Stereoscopic video

A stereoscopic video encoding device receives as input a multi-view video consisting of a reference viewpoint video, a left viewpoint video, and a right viewpoint video, together with a reference viewpoint depth map, a left viewpoint depth map, and a right viewpoint depth map, each of which is a map of depth values associated with the multi-view video. In the device, a video synthesis unit synthesizes the videos and a depth map synthesis unit synthesizes the depth maps, based on a synthesis technique indicated by a depth type. A video encoding unit, a depth map encoding unit, and a parameter encoding unit respectively encode the synthesized video, the synthesized depth map, and parameters including the depth type. A multiplexing unit multiplexes the encoded data into an encoded bit string and transmits it.

Owner:NAT INST OF INFORMATION & COMM TECH

Depth map encoding compression method for 3DTV and FTV system

Status: Inactive | Publication: CN101374243A | Tags: Reduce coding complexity, Improve encoding speed, Image analysis, Television systems, Viewpoints, Block code

The invention discloses a depth map coding and compression method for 3DTV and FTV systems. Based on the edge information of the depth map and the motion information of the viewpoint images, the depth map is divided into three regions: a static non-edge region, a non-edge region inside a moving object, and an edge region. The depth map is coded after the viewpoint images have been coded. By reusing the macroblock coding modes and motion vectors determined during viewpoint image coding together with this three-region classification, the search range of macroblock coding modes in the static non-edge region and in the non-edge region of moving objects is reduced while the quality of the coded depth map is maintained, which lowers depth map coding complexity and increases depth map coding speed.

Owner:Shanghai Guizhi Intellectual Property Service Co., Ltd. (上海贵知知识产权服务有限公司)

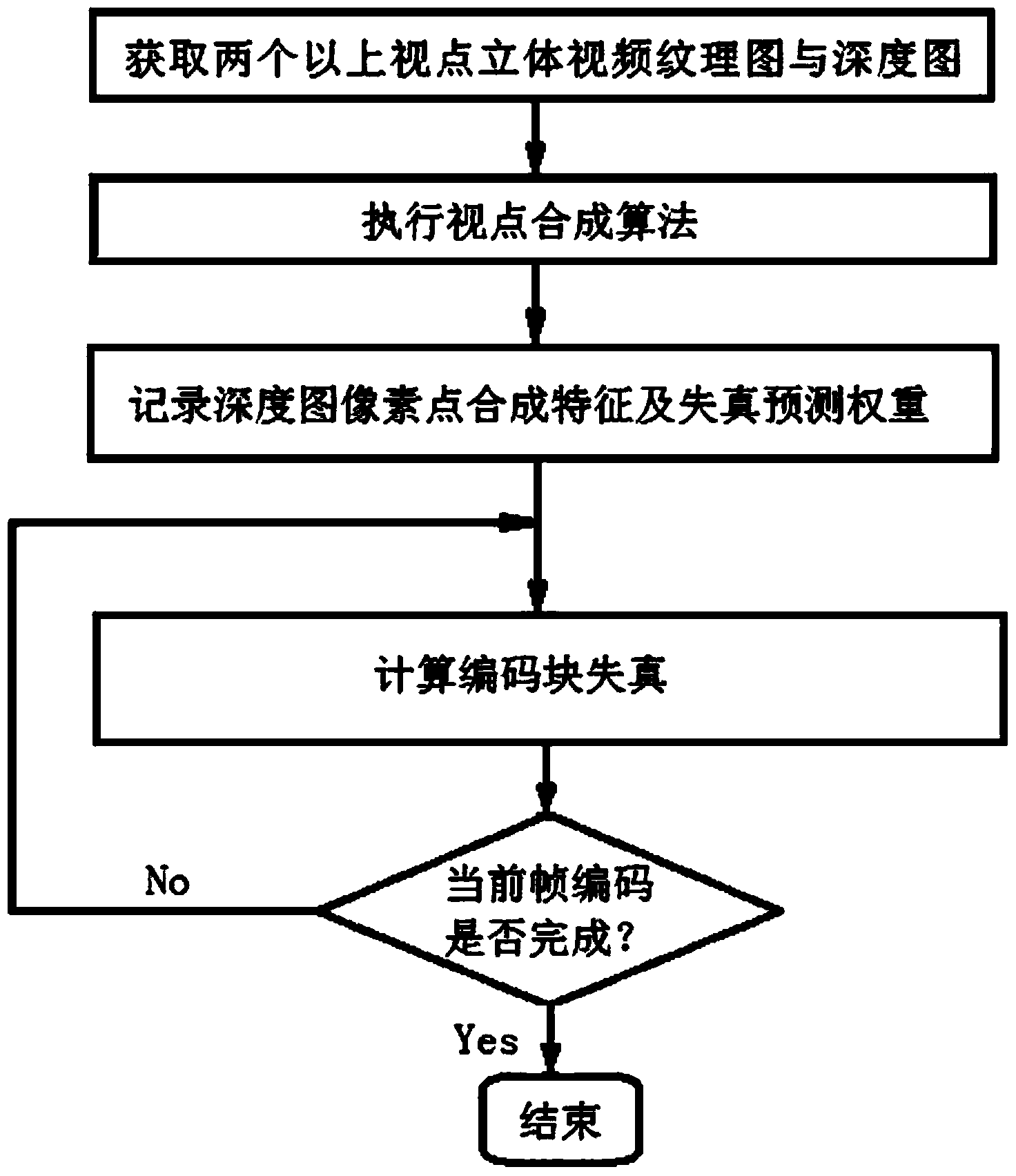

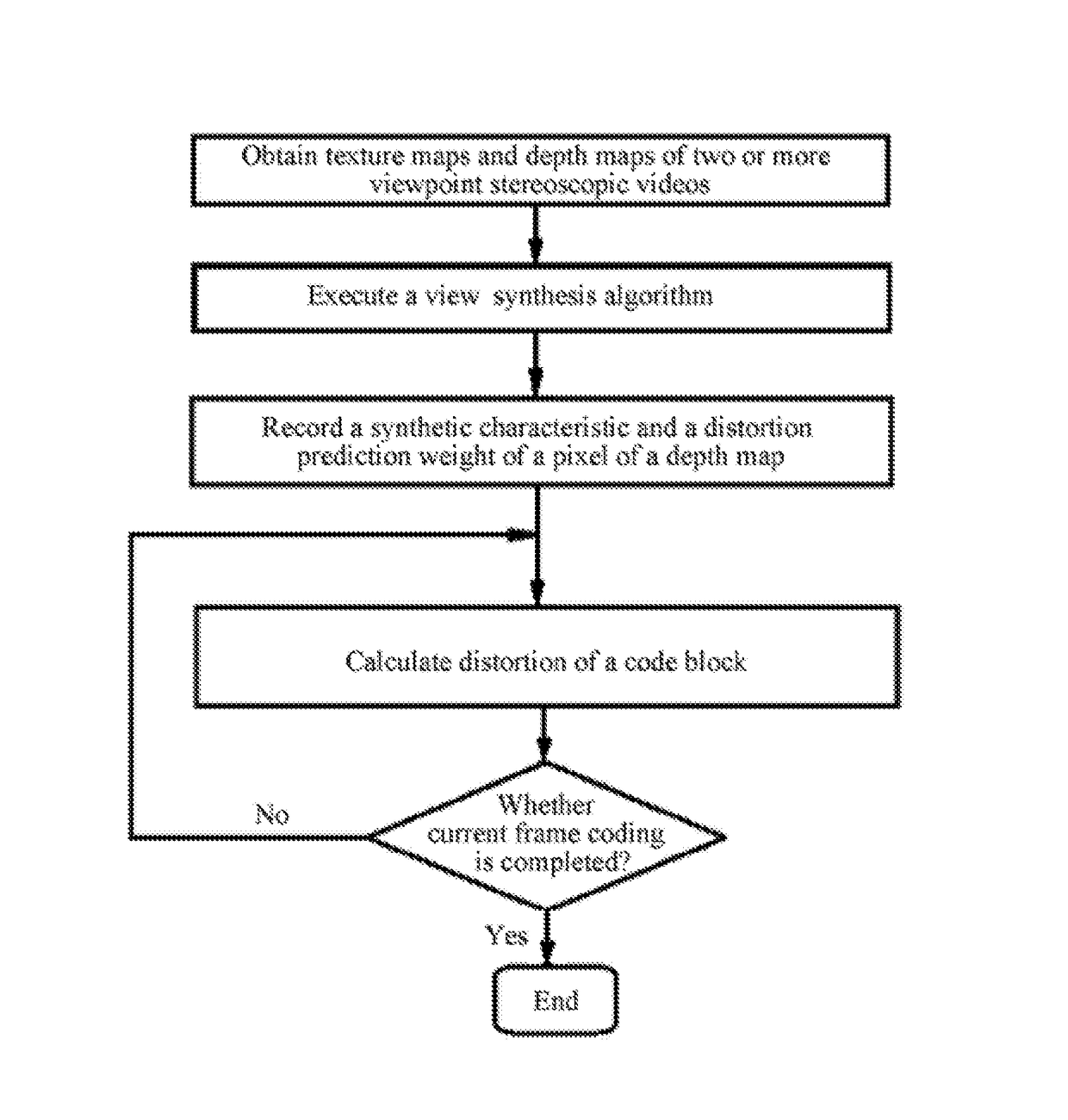

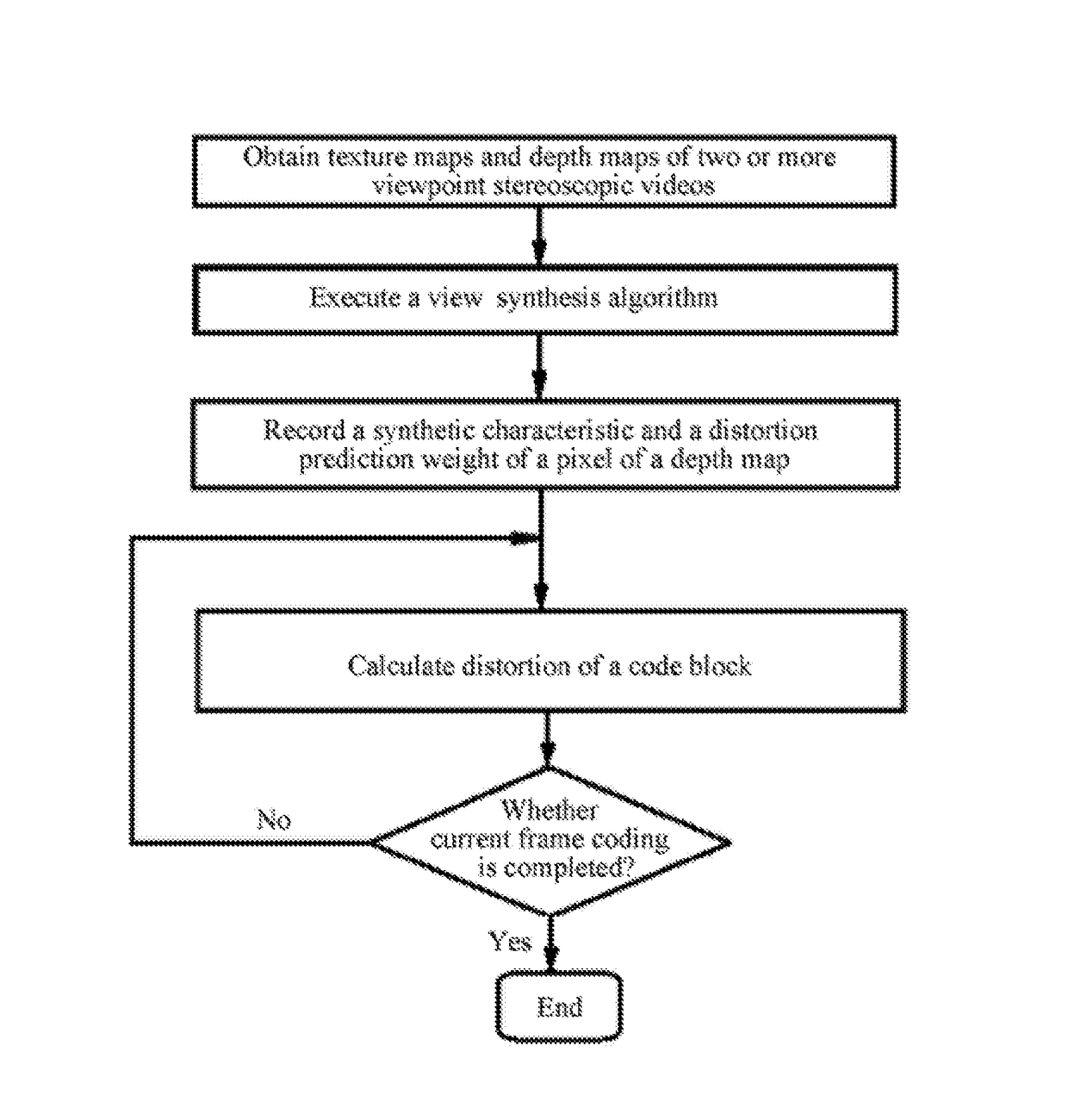

Free viewpoint video depth map coding method and distortion predicting method thereof

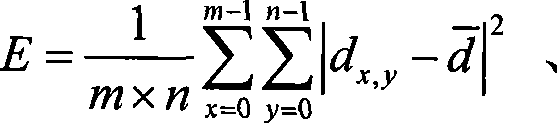

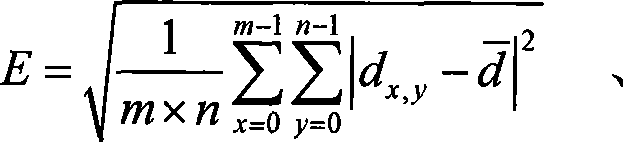

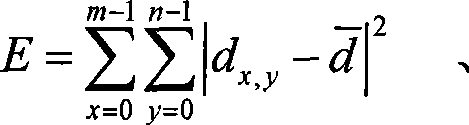

Status: Active | Publication: CN103402097A | Tags: Improve accuracy, Avoid repeated execution, Television systems, Digital video signal modification, Stereoscopic video, Coding block

The invention provides a free viewpoint video depth map coding method and a distortion prediction method for it. The distortion prediction method comprises the following steps: A1, acquiring texture maps and depth maps of a stereoscopic video with two or more viewpoints; A2, using a view synthesis algorithm to synthesize the intermediate viewpoint between the current to-be-coded viewpoint and an adjacent viewpoint; A3, according to the synthesis results of step A2, recording the synthesis characteristics of every pixel in the depth map of the current to-be-coded viewpoint and generating the corresponding distortion prediction weights; A4, using a distortion prediction model, summing the distortion of all pixels in the current depth map coding block according to their synthesis characteristics and distortion prediction weights to obtain the total distortion. The invention improves the accuracy of depth map distortion prediction during free viewpoint video depth map coding and greatly lowers the computational complexity of the distortion prediction algorithm.

Owner:SHENZHEN GRADUATE SCHOOL TSINGHUA UNIV

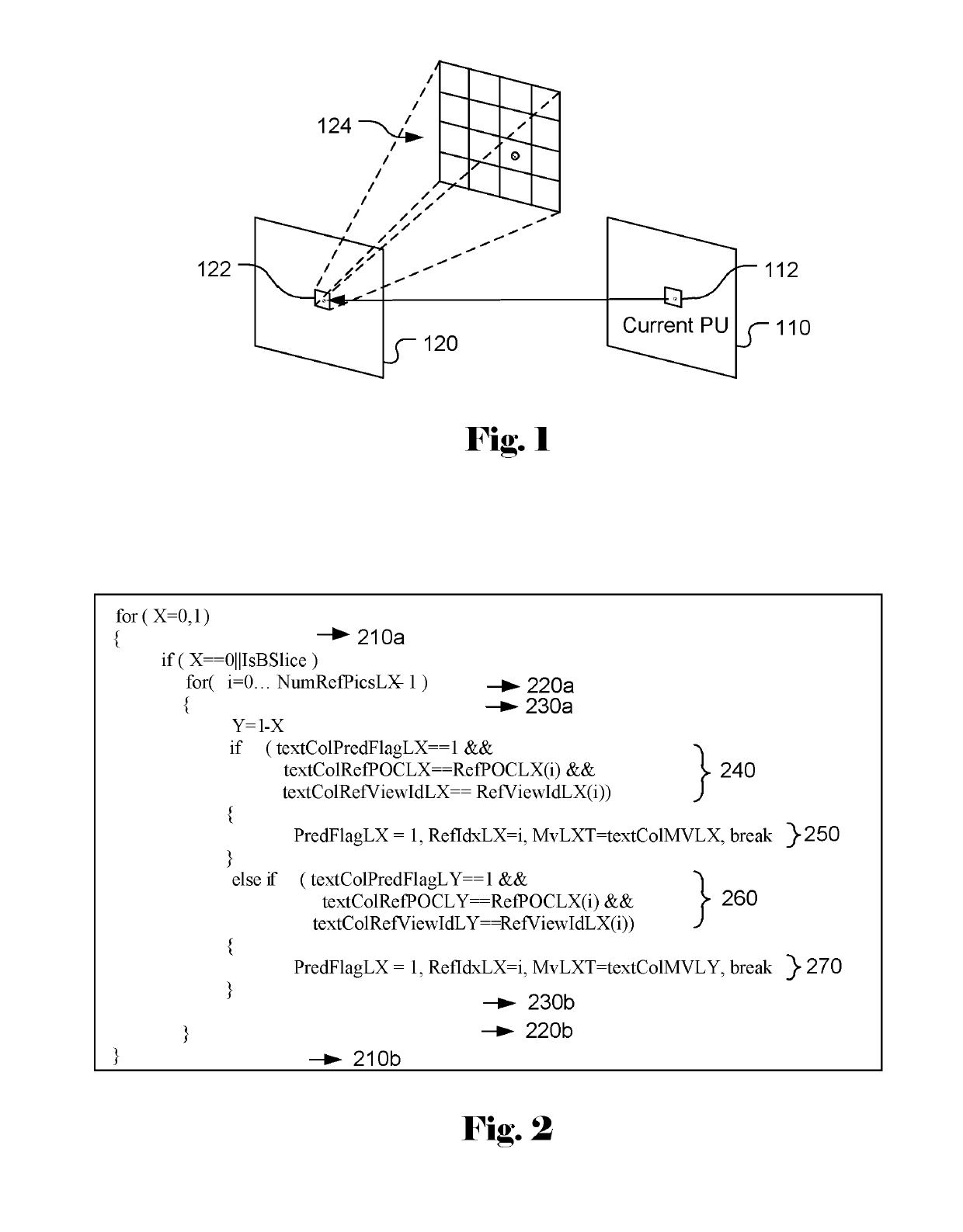

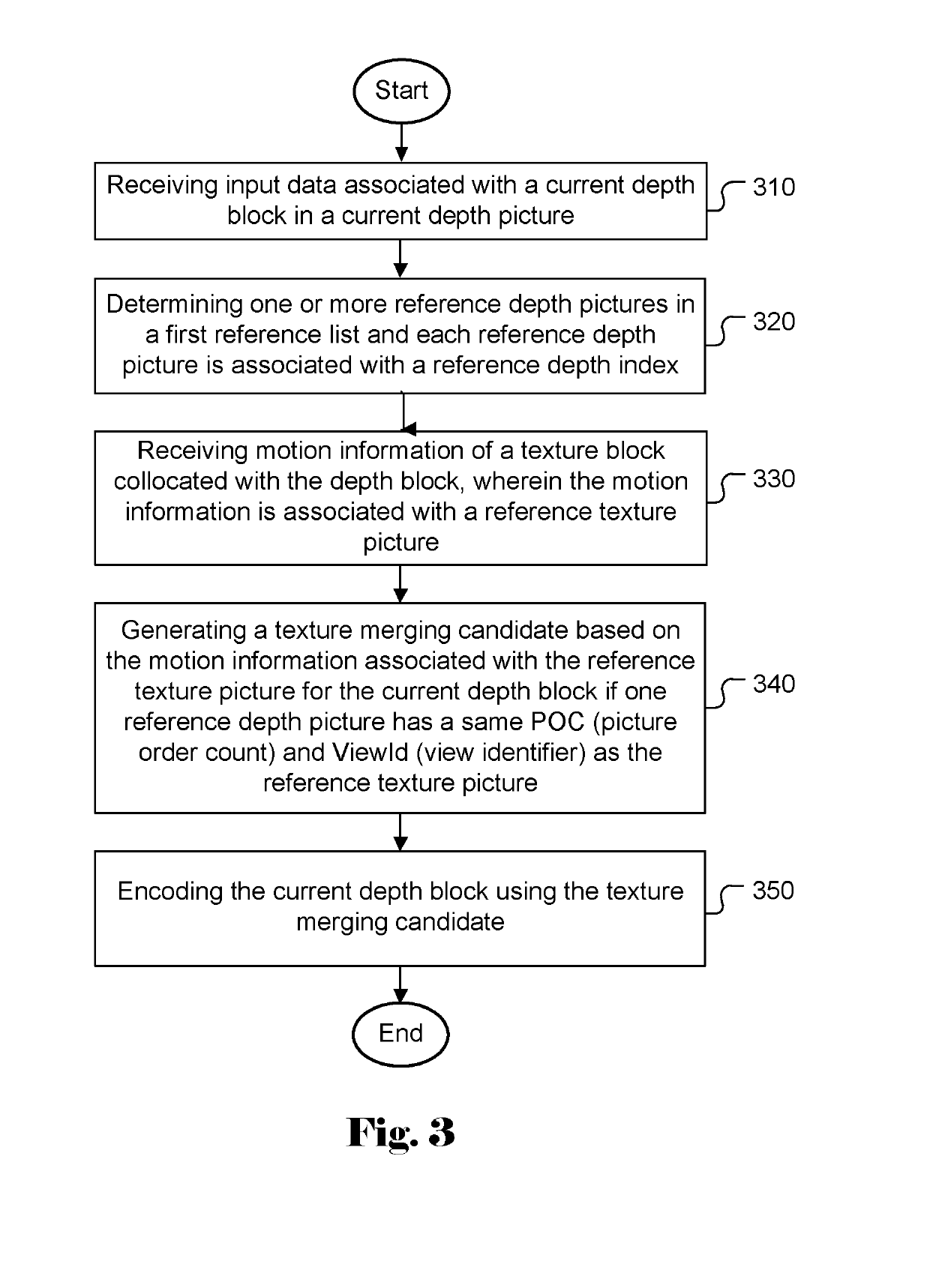

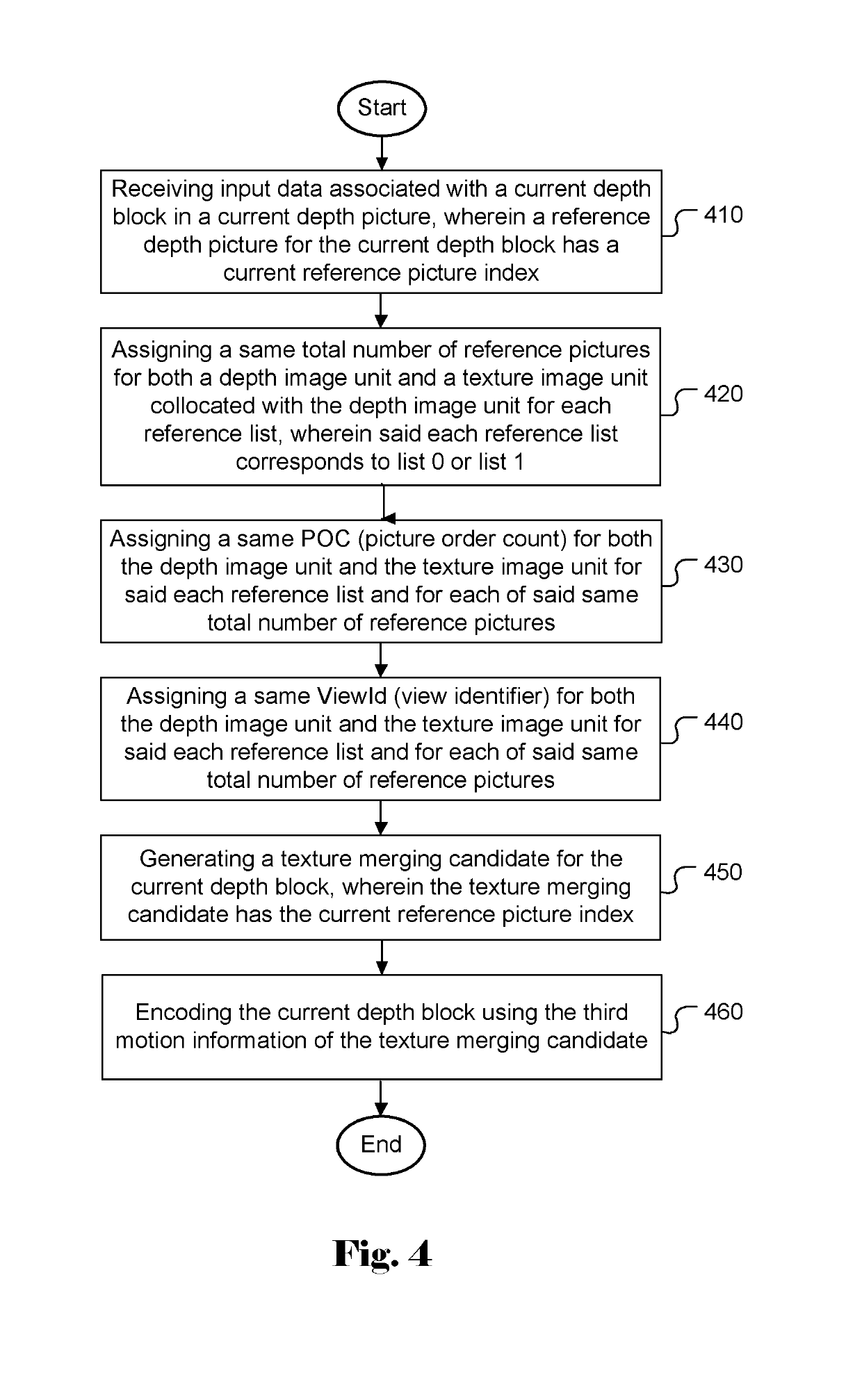

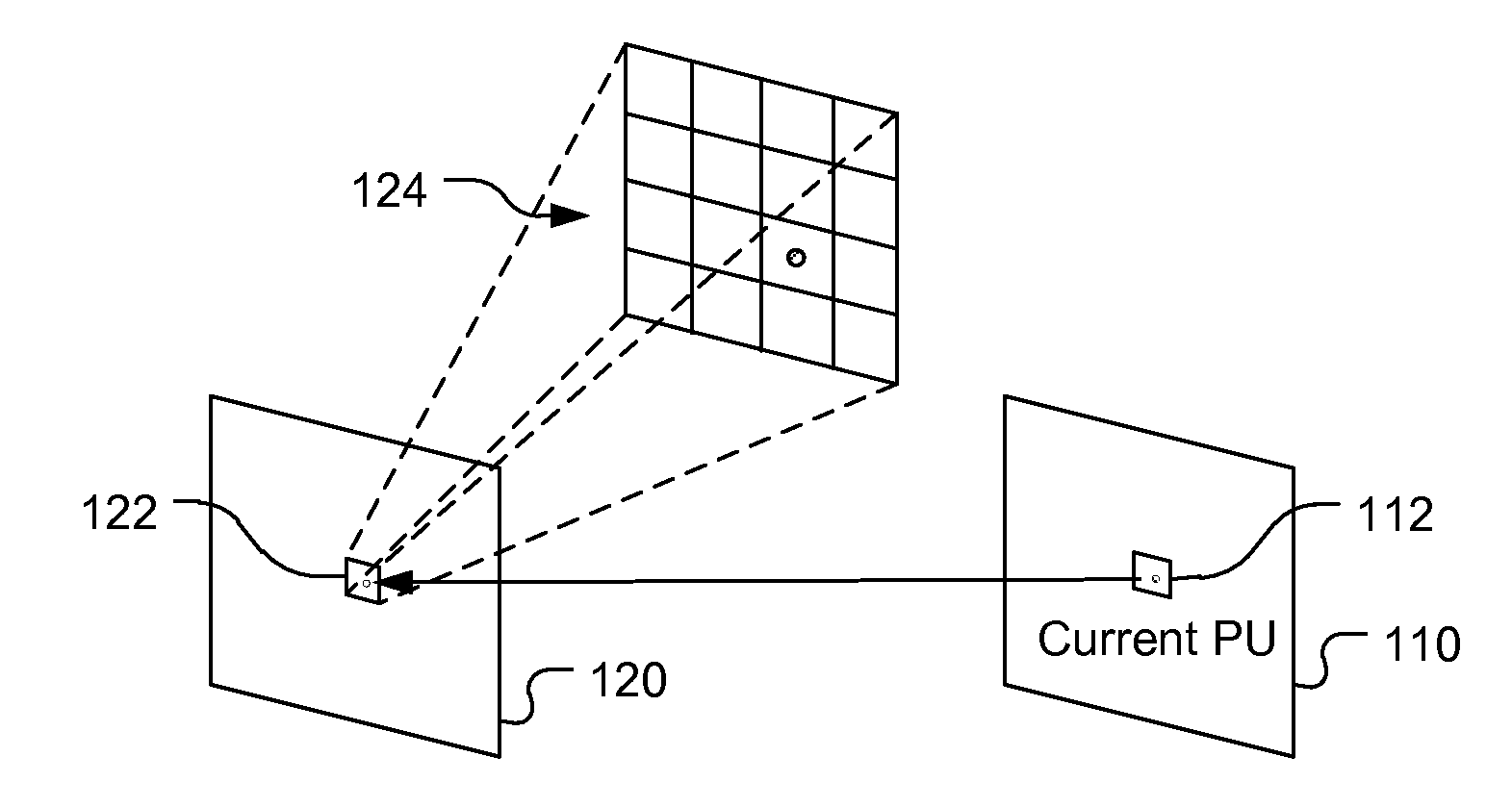

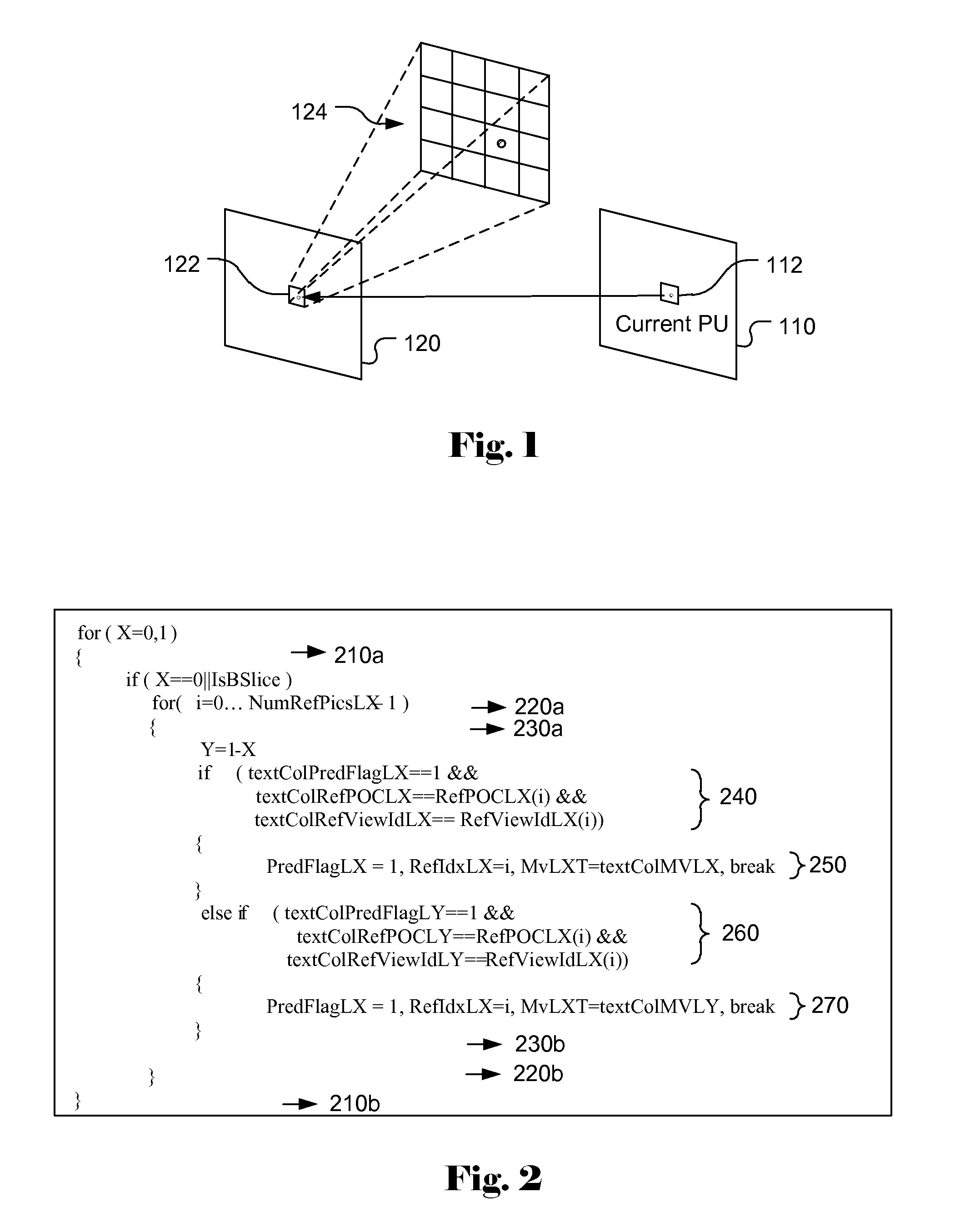

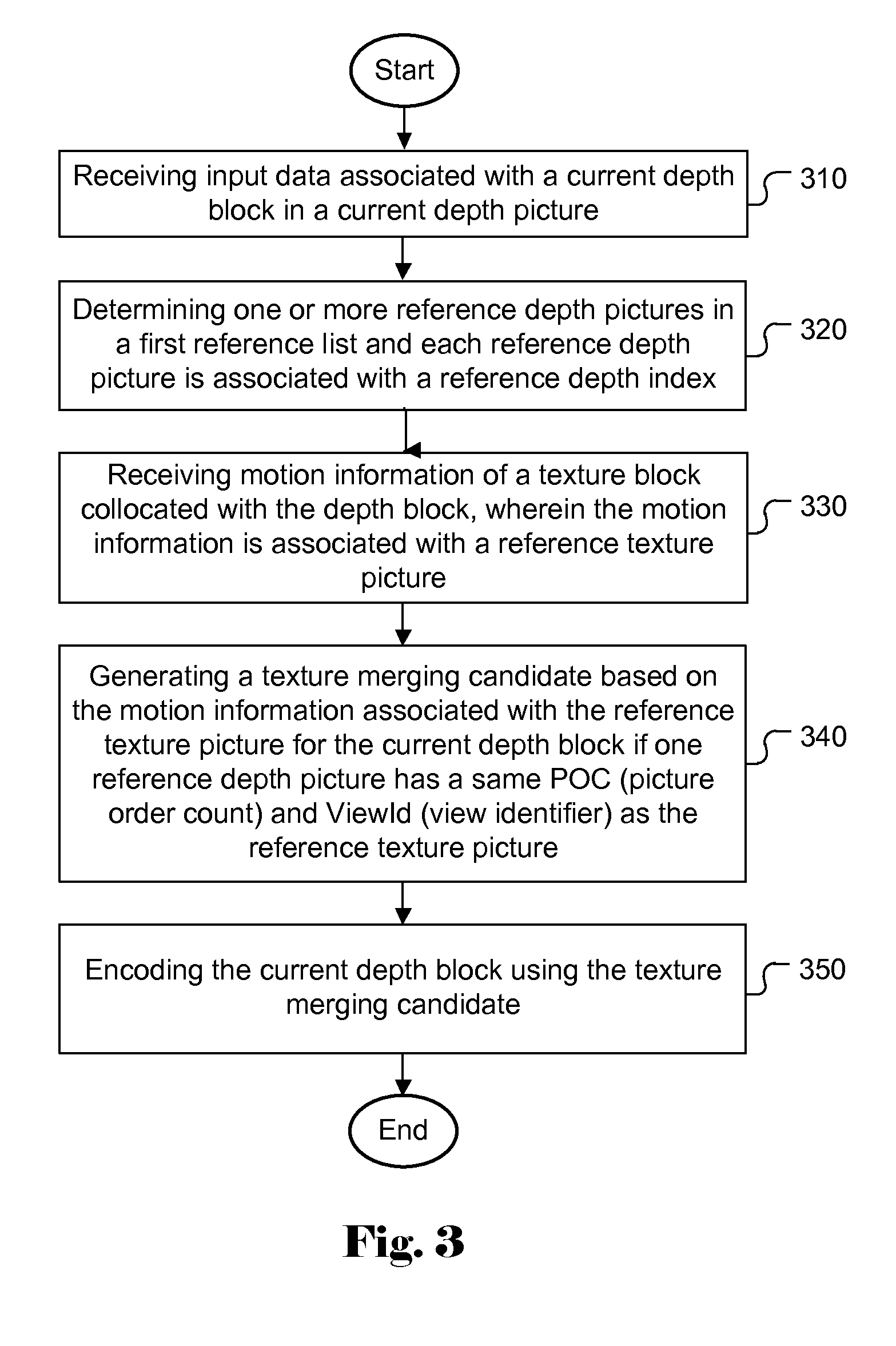

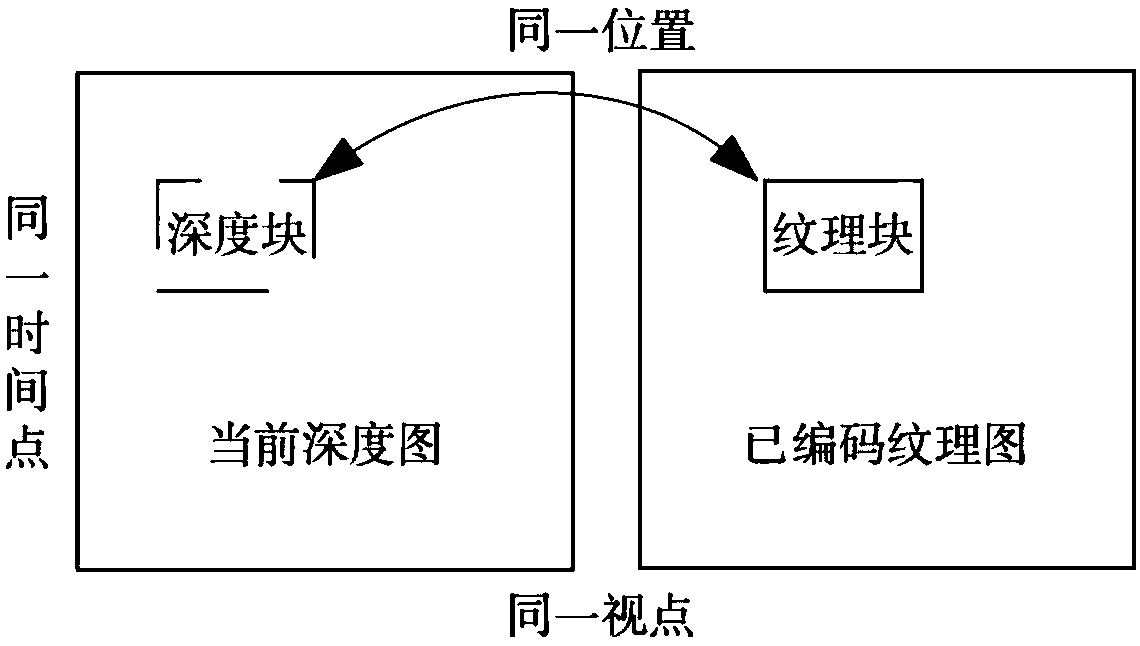

Method of texture merging candidate derivation in 3D video coding

A method of depth map coding for a three-dimensional video coding system that uses a consistent texture merging candidate is disclosed. According to the first embodiment, the current depth block inherits the motion information of the collocated texture block only if a reference depth picture has the same POC (picture order count) and ViewId (view identifier) as the reference texture picture of the collocated texture block. In another embodiment, the encoder assigns the same total number of reference pictures to the depth component and to the collocated texture component for each reference list. Furthermore, the POC and ViewId of the depth image unit and the texture image unit are assigned to be the same for each reference list and each reference picture.

Owner:HFI INNOVATION INC
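
The availability check of the first embodiment can be sketched as follows, assuming reference pictures are identified only by POC and ViewId and that a texture merging candidate is represented as a (reference index, motion vector) pair; the class and function names are hypothetical:

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class RefPicture:
    poc: int      # picture order count
    view_id: int  # view identifier


def matching_depth_ref(depth_refs: Sequence[RefPicture], texture_ref: RefPicture) -> Optional[int]:
    """Index of a depth reference picture with the same POC and ViewId as the
    collocated texture block's reference picture, or None if there is none."""
    for idx, ref in enumerate(depth_refs):
        if ref.poc == texture_ref.poc and ref.view_id == texture_ref.view_id:
            return idx
    return None


def texture_merge_candidate(depth_refs, texture_ref, texture_mv):
    """Inherit (reference index, motion vector) only when a matching depth reference exists."""
    idx = matching_depth_ref(depth_refs, texture_ref)
    return None if idx is None else (idx, texture_mv)
```

The second embodiment makes this check succeed by construction, since the encoder gives both components identical reference lists.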

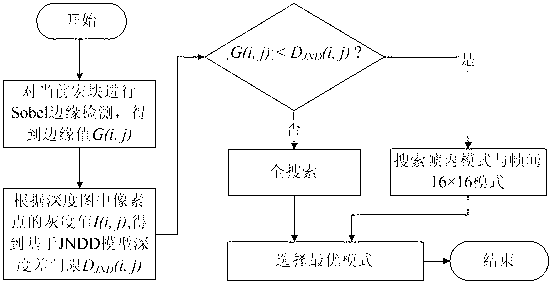

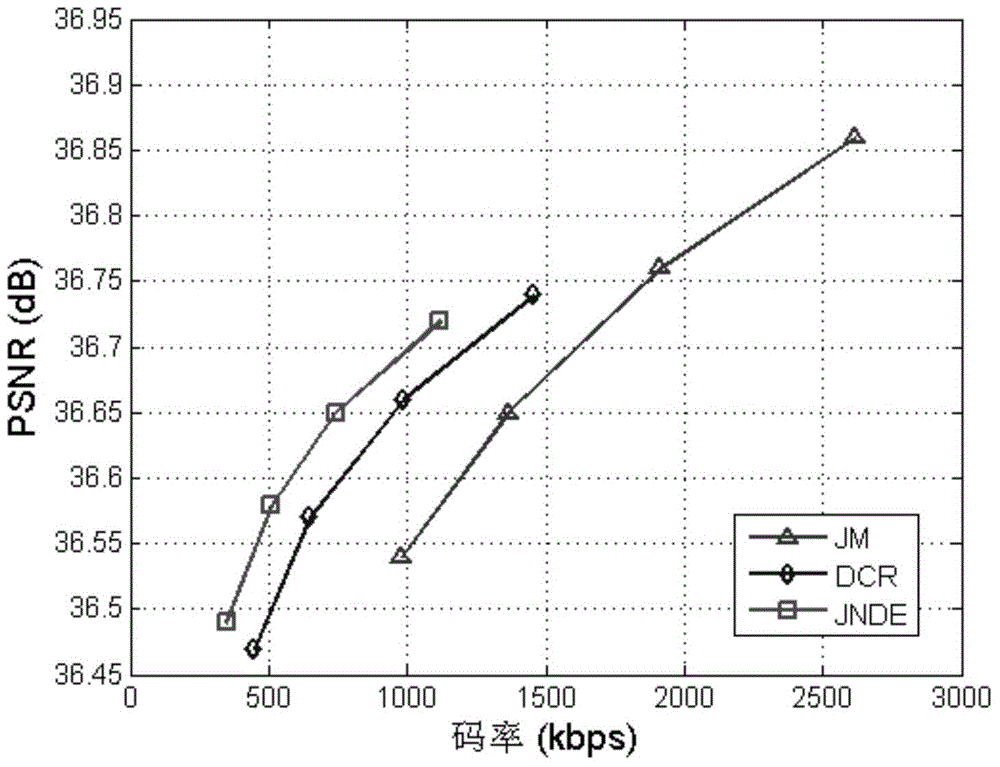

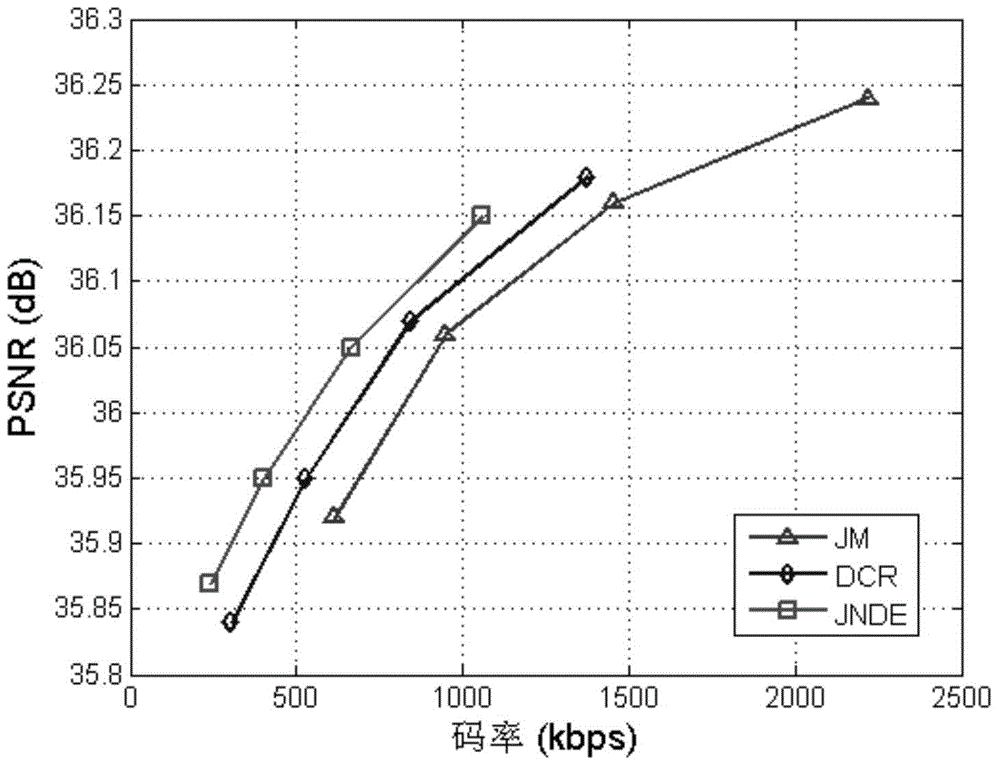

Rapid depth map coding mode selection method based on JNDD (Just Noticeable Depth Difference) model

Status: Active | Publication: CN102801996A | Tags: Reduce complexity, Television systems, Digital video signal modification, Code module, Algorithm

The invention relates to a fast depth map coding mode selection method based on a JNDD (Just Noticeable Depth Difference) model. The method comprises the following steps: performing edge detection on a coding macroblock to obtain the edge value of the current block; using the just noticeable depth difference model to determine, for the different depth-value ranges in the macroblock, the threshold below which depth differences cannot be perceived by the human eye, comparing this threshold with the edge value, and thereby dividing the depth map into a vertical edge region and a flat region; and applying a full search strategy to the edge region, while searching only the SKIP mode, the inter 16×16 mode, and the intra modes in the flat region. By exploiting the characteristics of depth data and the influence of depth coding distortion on rendering quality, the method greatly lowers coding complexity and speeds up the depth map coding module of a three-dimensional video system, while keeping the virtual view quality and the bit rate essentially unchanged.

Owner:SHANGHAI UNIV
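
A sketch of the region split and mode restriction. Assumptions: the edge value is the largest absolute difference between horizontally or vertically adjacent pixels (the patent does not name its edge detector), the JNDD threshold is looked up from the block's mean depth, and the four threshold values below are hypothetical placeholders rather than the model's published curve:

```python
def jndd_threshold(depth_level):
    """Hypothetical just-noticeable depth difference for an 8-bit depth level."""
    if depth_level < 64:
        return 21
    if depth_level < 128:
        return 19
    if depth_level < 192:
        return 18
    return 20


def edge_value(block):
    """Largest absolute difference between horizontally or vertically adjacent pixels."""
    h, w = len(block), len(block[0])
    diffs = [abs(block[y][x + 1] - block[y][x]) for y in range(h) for x in range(w - 1)]
    diffs += [abs(block[y + 1][x] - block[y][x]) for y in range(h - 1) for x in range(w)]
    return max(diffs, default=0)


def candidate_modes(block):
    """Edge blocks keep the full mode search; flat blocks test a reduced mode set."""
    mean_depth = sum(map(sum, block)) / (len(block) * len(block[0]))
    if edge_value(block) > jndd_threshold(mean_depth):
        return ["full search"]
    return ["SKIP", "Inter16x16", "Intra"]
```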

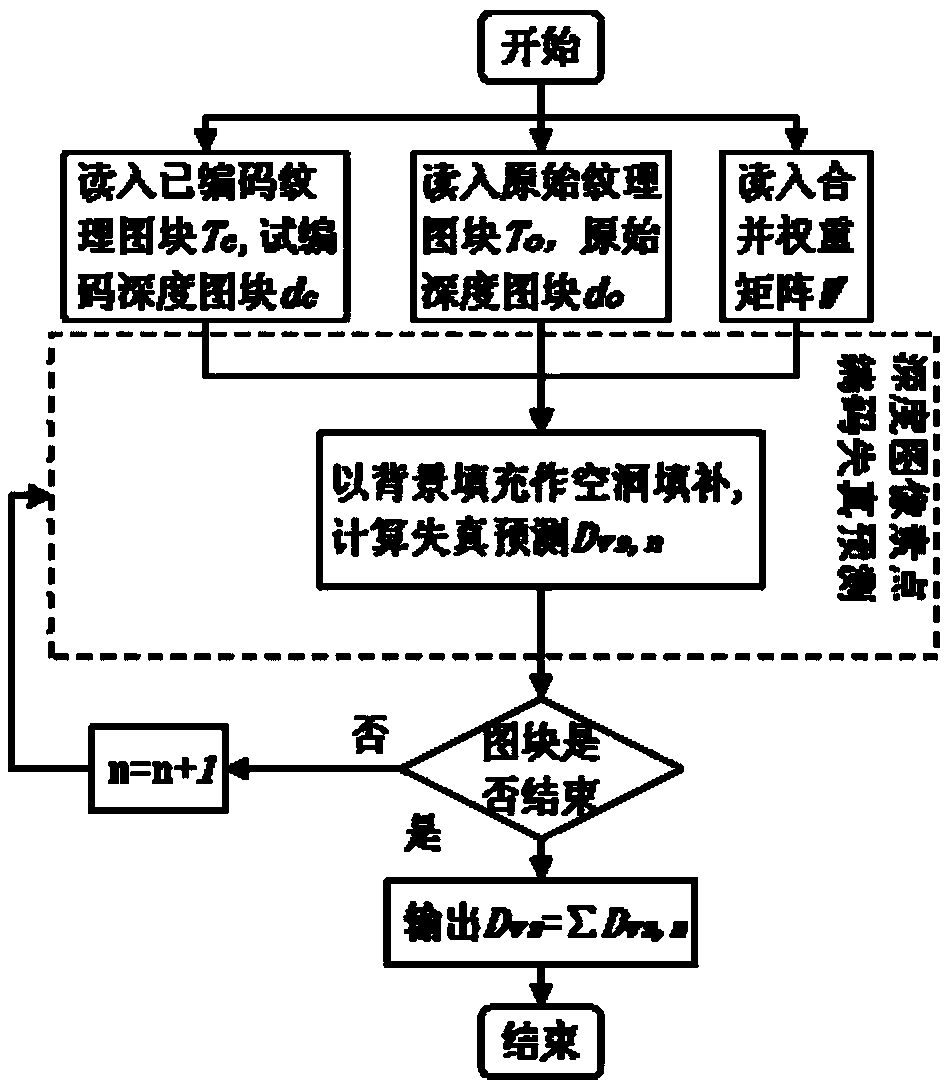

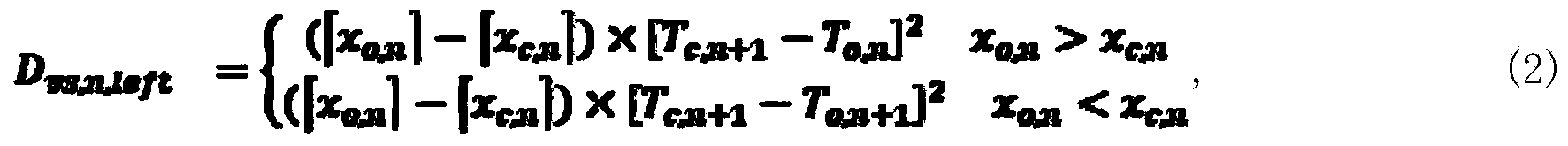

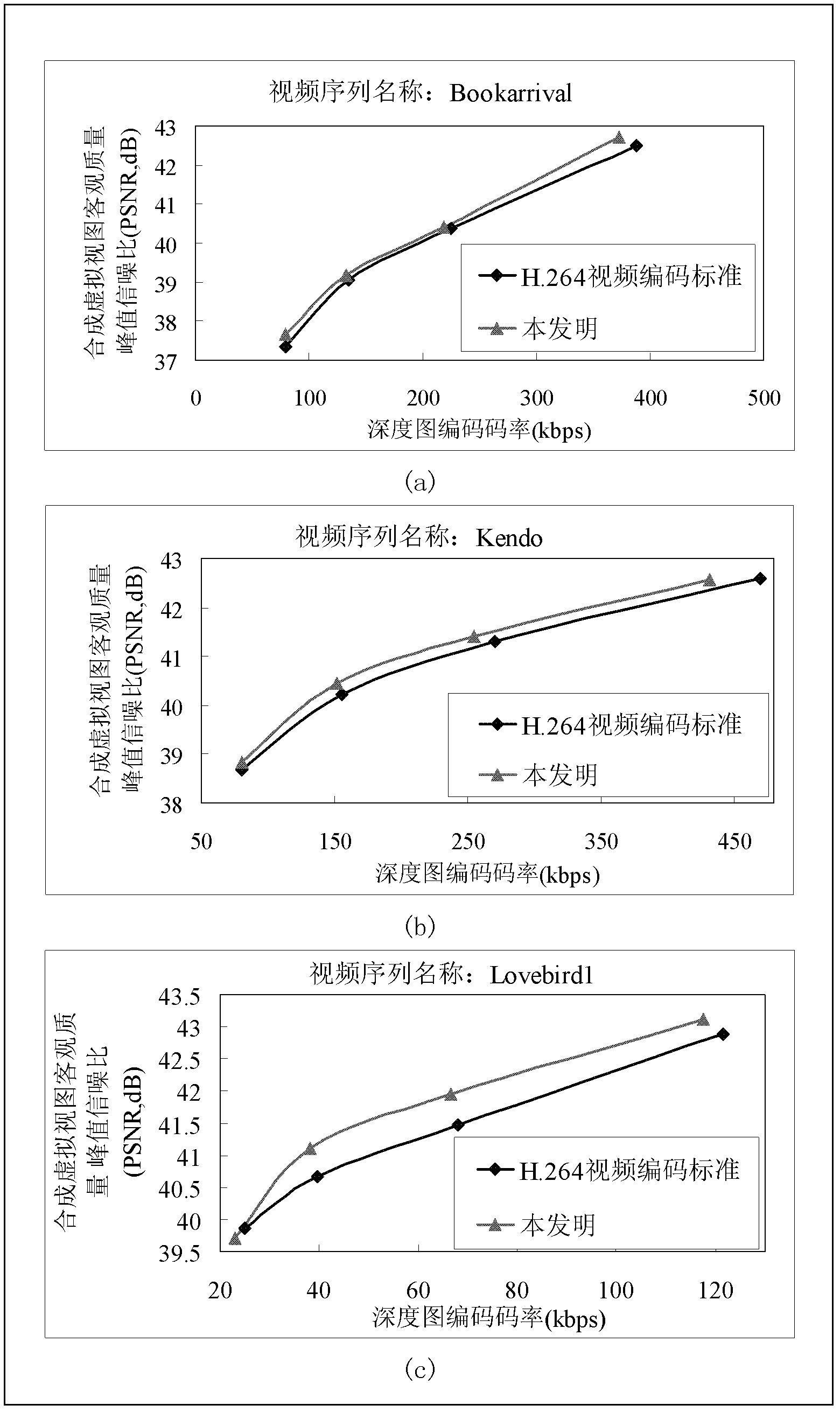

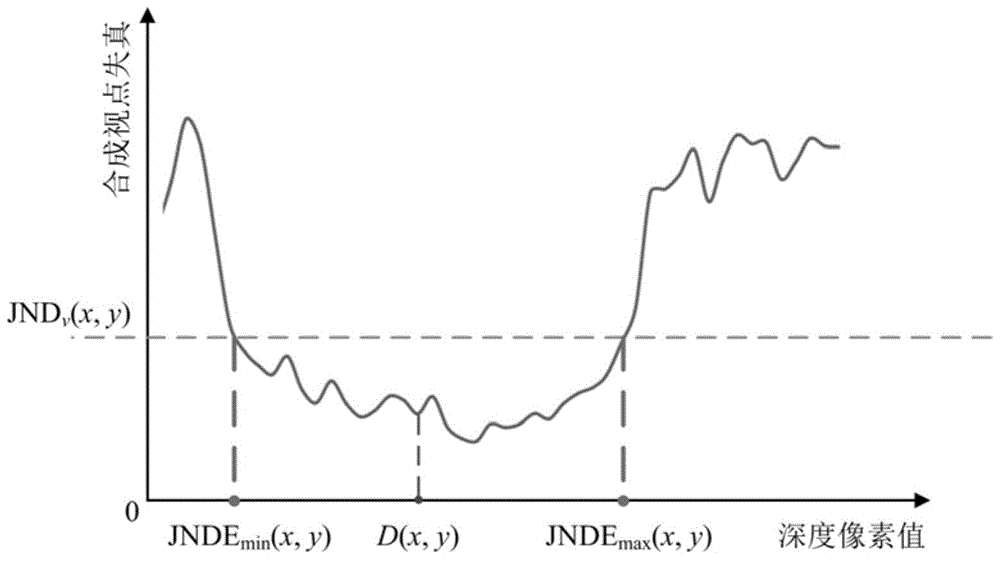

Free viewpoint video depth map distortion prediction method and free viewpoint video depth map coding method

Status: Active | Publication: CN103873867A | Tags: Coding distortion accurately predicted, Avoid repeated execution, Digital video signal modification, Stereoscopic systems, Computation complexity, Viewpoints

The invention provides a free viewpoint video depth map distortion prediction method and a free viewpoint video depth map coding method. In the distortion prediction method, for a block to be coded in a given frame of a given viewpoint of a multi-view three-dimensional video sequence, whose view synthesis involves hole filling, the inputs are the coded texture block, the depth block trial-coded with a preselected coding mode, and the corresponding original texture block and original depth block; a combined weight matrix of the block is also input, recording the combination weights used when the synthesized viewpoint texture is obtained from the left and right viewpoint texture maps. The distortion of the synthesized texture obtained after mapping and hole-filling synthesis with each pixel of the depth map is calculated and used as the predicted synthesized viewpoint distortion for that pixel; the predicted values of all pixels in the block are then summed to obtain the predicted synthesized viewpoint distortion caused by coding the block. The method accurately predicts depth map coding distortion, avoids repeatedly executing the view synthesis algorithm, and greatly lowers computational complexity.

Owner:SHENZHEN GRADUATE SCHOOL TSINGHUA UNIV

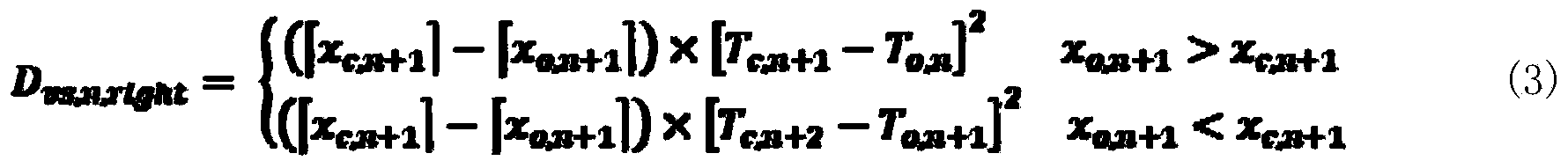

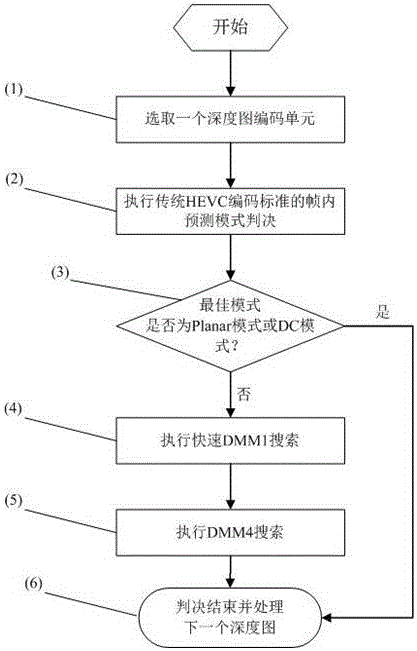

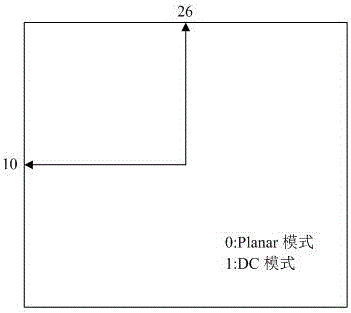

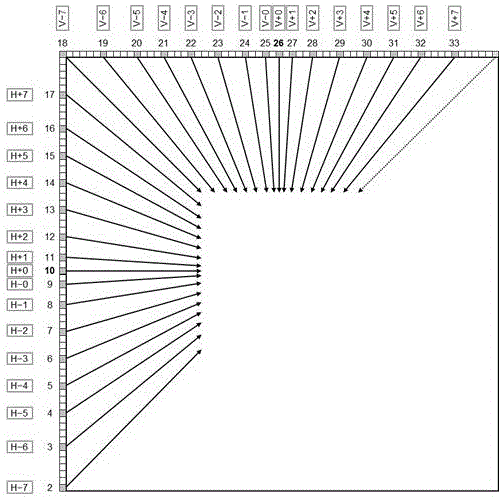

Rapid intra-frame mode decision method for depth images under the 3D-HEVC (Three-Dimensional High Efficiency Video Coding) standard

Status: Active | Publication: CN105898332A | Tags: Practical, Small amount of calculation, Digital video signal modification, Internal mode, Rate distortion

The invention provides a rapid intra-frame mode decision method for depth images under the 3D-HEVC standard, comprising the following steps: selecting a coding unit (CU) in a depth image; performing the intra prediction mode decision of the conventional HEVC standard with a rough (coarse) prediction; judging whether the optimal mode of the CU is the Planar mode or the DC mode; performing a fast DMM1 search in the 3D-HEVC encoder to obtain a decision formula; after deciding on the DMM1 search according to that formula, performing a DMM4 search in the 3D-HEVC encoder and calculating the minimum rate-distortion cost; and determining the optimal intra prediction mode before processing the next CU in the depth image. The method uses the characteristics of depth images to make a preliminary decision on the intra prediction mode and skips intra modes that rarely occur when coding certain depth images. Its main advantages are a small amount of calculation, low coding complexity, and short coding time, with compression performance comparable to that of the original 3D-HEVC encoder.

Owner:HENAN UNIVERSITY OF TECHNOLOGY

Depth image coding method based on edge lossless compression

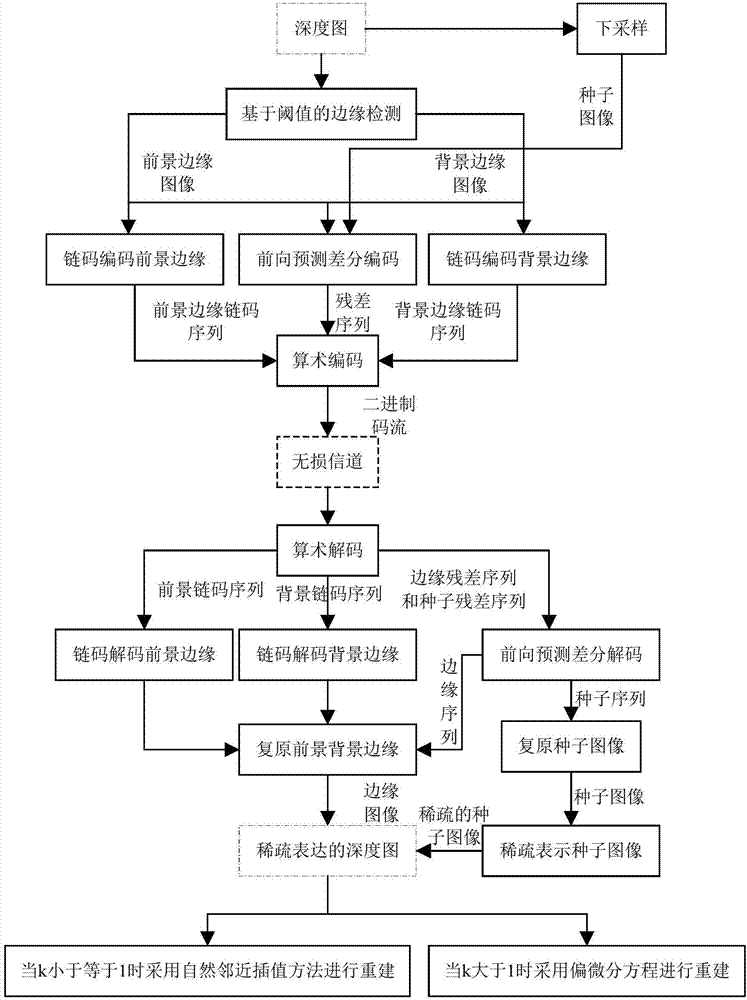

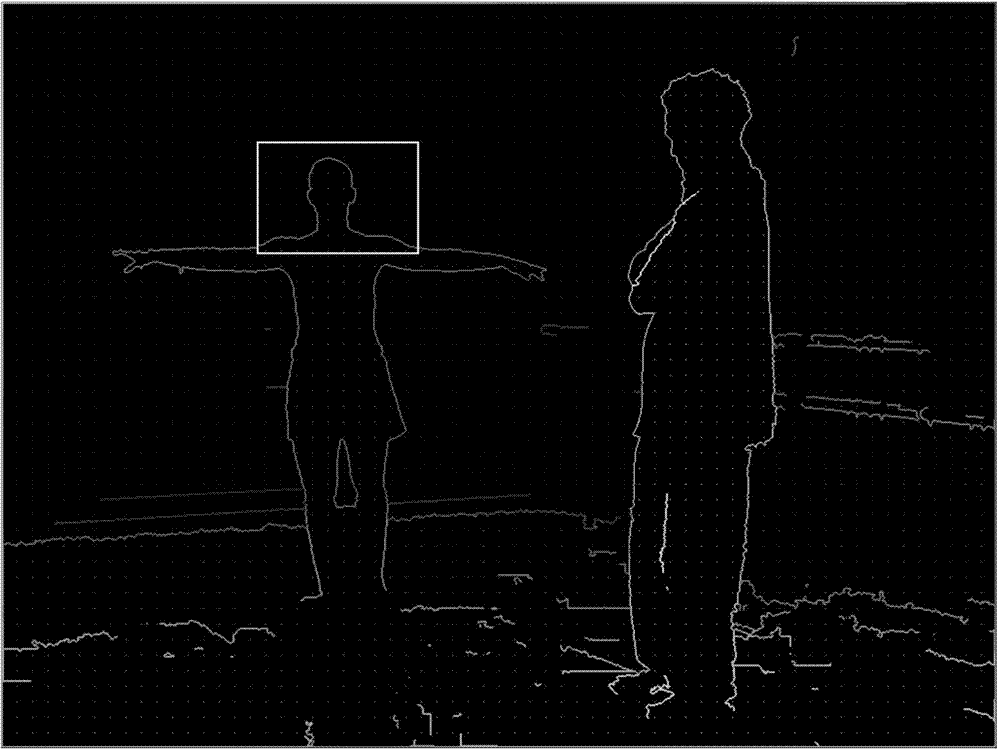

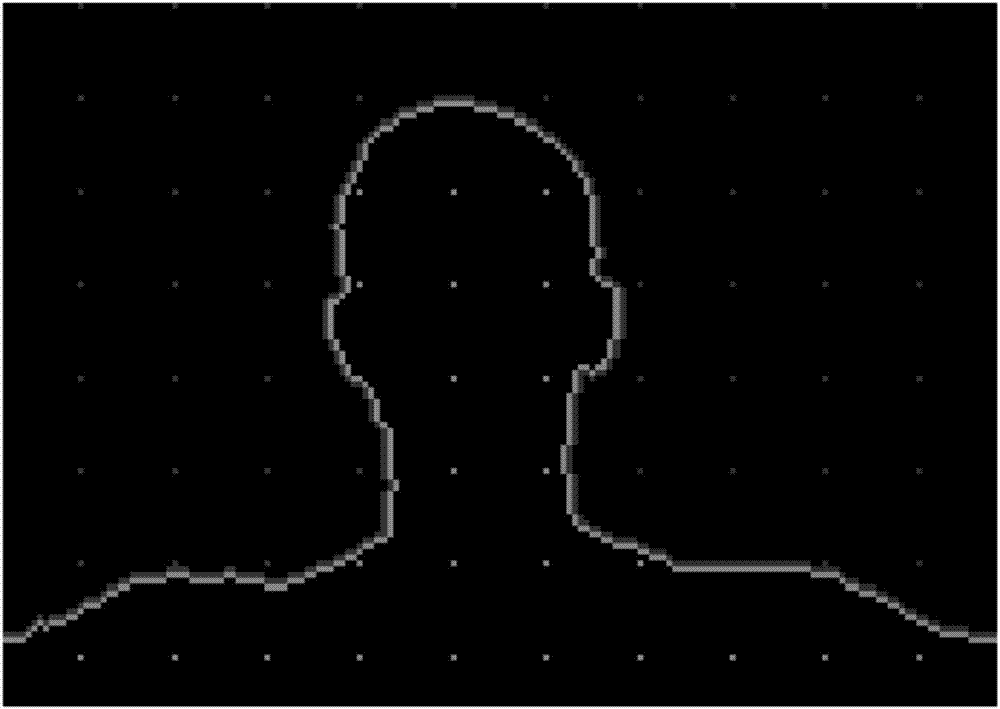

Status: Active | Publication: CN103763564A | Tags: Suitable for image reconstruction, Improve edge quality, Digital video signal modification, Stereoscopic systems, Viewpoints, Depth map coding

The invention provides a depth image coding method based on lossless edge compression, in the field of 3D video coding. The method comprises the following steps: threshold-based edge detection; coding the foreground edges and the background edges separately with chain codes; forward differential predictive coding to obtain the edge pixel values; downsampling; forward differential predictive coding to obtain seed images; arithmetic coding of the residual sequences and chain code sequences; transmission of the binary code streams; arithmetic decoding; chain code decoding of the foreground and background edges; forward differential predictive decoding; restoration of the seed images; sparse representation of the seed images; and reconstruction with a partial differential equation (PDE)-based method and a natural neighbor interpolation method to obtain the restored image. The method effectively exploits the property that depth images consist of smooth regions separated by sharp edges, significantly improves depth image coding performance, and also improves the rendering quality of virtual viewpoints.

Owner:TAIYUAN UNIVERSITY OF SCIENCE AND TECHNOLOGY
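
The abstract does not specify the chain code variant. A minimal sketch, assuming the common 8-direction Freeman chain code, in which an edge is stored as its start pixel plus one 3-bit direction per step; `chain_encode` and `chain_decode` are hypothetical names:

```python
# 8-connected Freeman directions as (dx, dy), counter-clockwise starting from east,
# with y growing downward (image coordinates).
DIRECTIONS = [(1, 0), (1, -1), (0, -1), (-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1)]


def chain_encode(path):
    """Encode a list of 8-connected (x, y) edge pixels as (start pixel, direction codes)."""
    codes = []
    for (x0, y0), (x1, y1) in zip(path, path[1:]):
        codes.append(DIRECTIONS.index((x1 - x0, y1 - y0)))  # ValueError if not 8-connected
    return path[0], codes


def chain_decode(start, codes):
    """Rebuild the pixel path from the start pixel and the direction codes."""
    x, y = start
    path = [(x, y)]
    for c in codes:
        dx, dy = DIRECTIONS[c]
        x, y = x + dx, y + dy
        path.append((x, y))
    return path
```

Foreground and background edges would each produce their own code sequence, which the method then passes to arithmetic coding.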

Texture video and depth map code rate distributing method based on 3D-HEVC

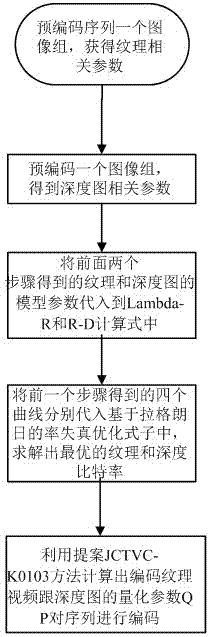

Status: Inactive | Publication: CN104717515A | Tags: Maximize quality, Practical, Digital video signal modification, Pattern recognition, Depth map coding

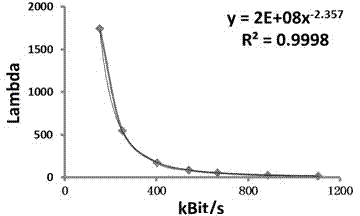

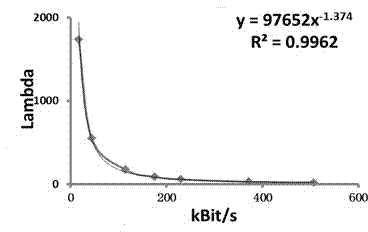

The invention provides a 3D-HEVC-based method for distributing bit rate between texture video and depth maps, comprising: step 1, precoding one image set to obtain the relevant texture parameters; step 2, precoding another image set to obtain the Lambda-R curve model parameters and the R-D curve model parameters of the depth map; step 3, obtaining the Lambda-R curve parameters of the texture video and of the depth map, together with the R-D curve parameters of the texture video; step 4, solving for the optimal texture and depth bit rates; and step 5, using the method of proposal JCTVC-K0103 to calculate the sequence of quantization parameter pairs for encoding the texture video and the depth map, and encoding both. The method can effectively improve coding quality.

Owner:SHANGHAI UNIV
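
Proposal JCTVC-K0103 describes R-λ model based rate control for HEVC. The sketch below illustrates step 5 only: it converts a per-stream bit budget into a QP using λ = α·bpp^β and QP = 4.2005·ln λ + 13.7122. The α and β defaults are initial values commonly used in the HM reference software and are assumptions here, as are the frame size and bit rates in the test; `qp_pair` is a hypothetical helper:

```python
import math


def lambda_from_bpp(bpp, alpha=3.2003, beta=-1.367):
    """R-lambda model: lambda = alpha * bpp ** beta (alpha, beta are assumed initial values)."""
    return alpha * bpp ** beta


def qp_from_lambda(lam):
    """QP-lambda relation paired with the R-lambda model, clipped to the HEVC QP range 0..51."""
    qp = round(4.2005 * math.log(lam) + 13.7122)
    return max(0, min(51, qp))


def qp_pair(texture_kbps, depth_kbps, width, height, fps):
    """Convert optimal texture/depth bit rates (e.g. from step 4) into (texture QP, depth QP)."""
    pixels_per_second = width * height * fps
    return tuple(qp_from_lambda(lambda_from_bpp(kbps * 1000 / pixels_per_second))
                 for kbps in (texture_kbps, depth_kbps))
```

A smaller bit budget gives a lower bits-per-pixel value, a larger λ, and therefore a coarser QP; in a full rate controller α and β are also updated after each coded picture.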

Depth map encoding method and apparatus thereof, and depth map decoding method and apparatus thereof

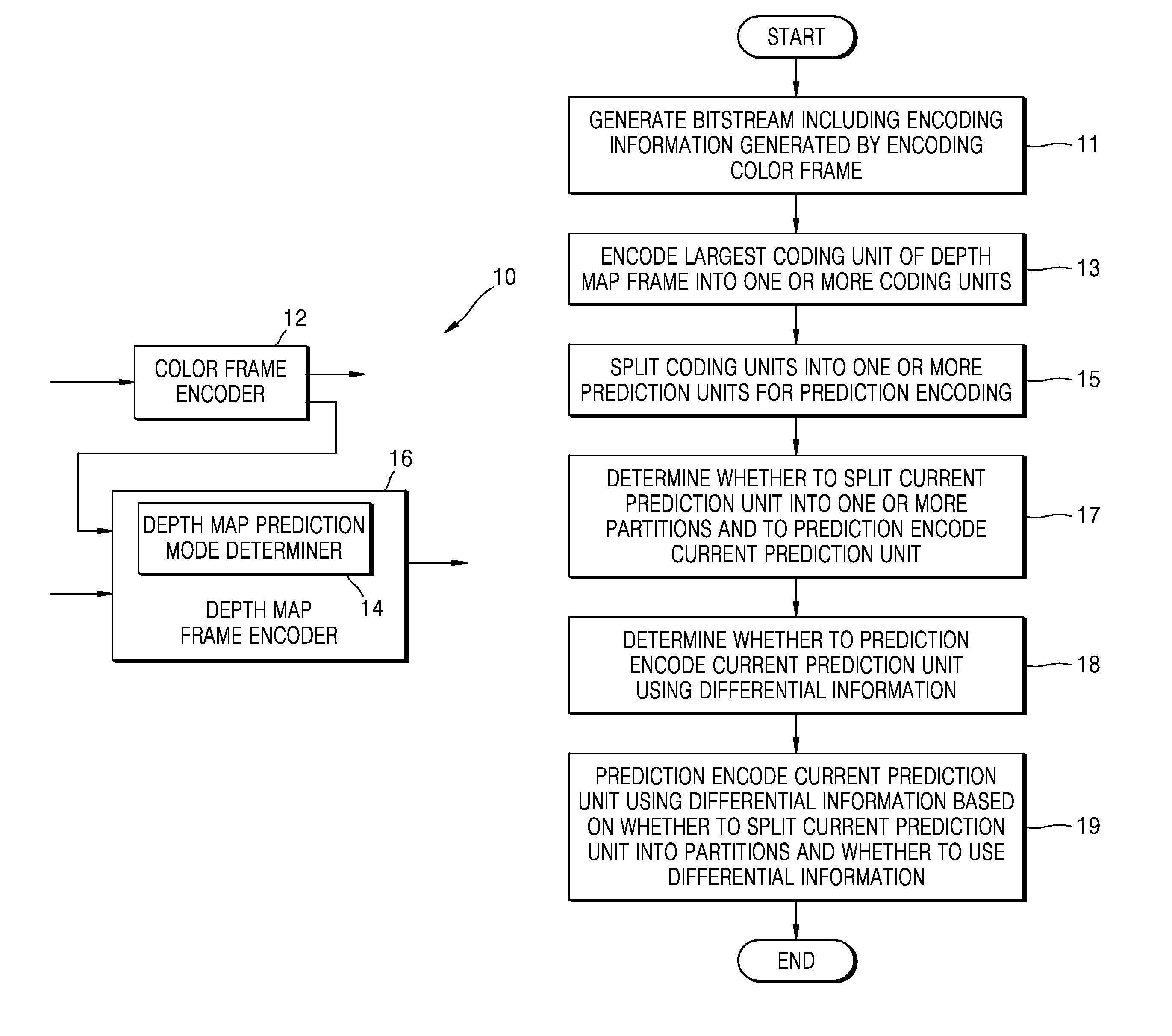

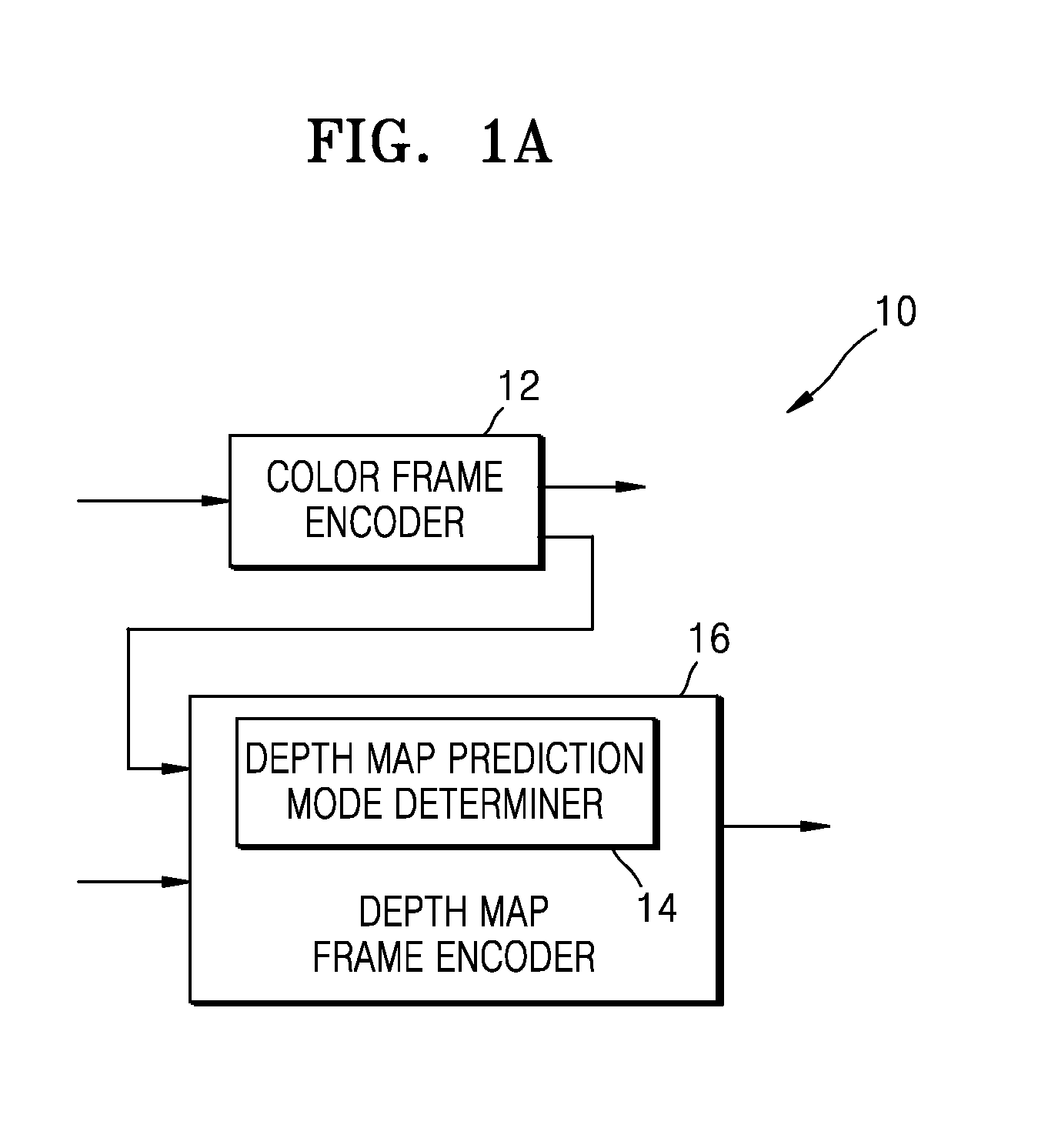

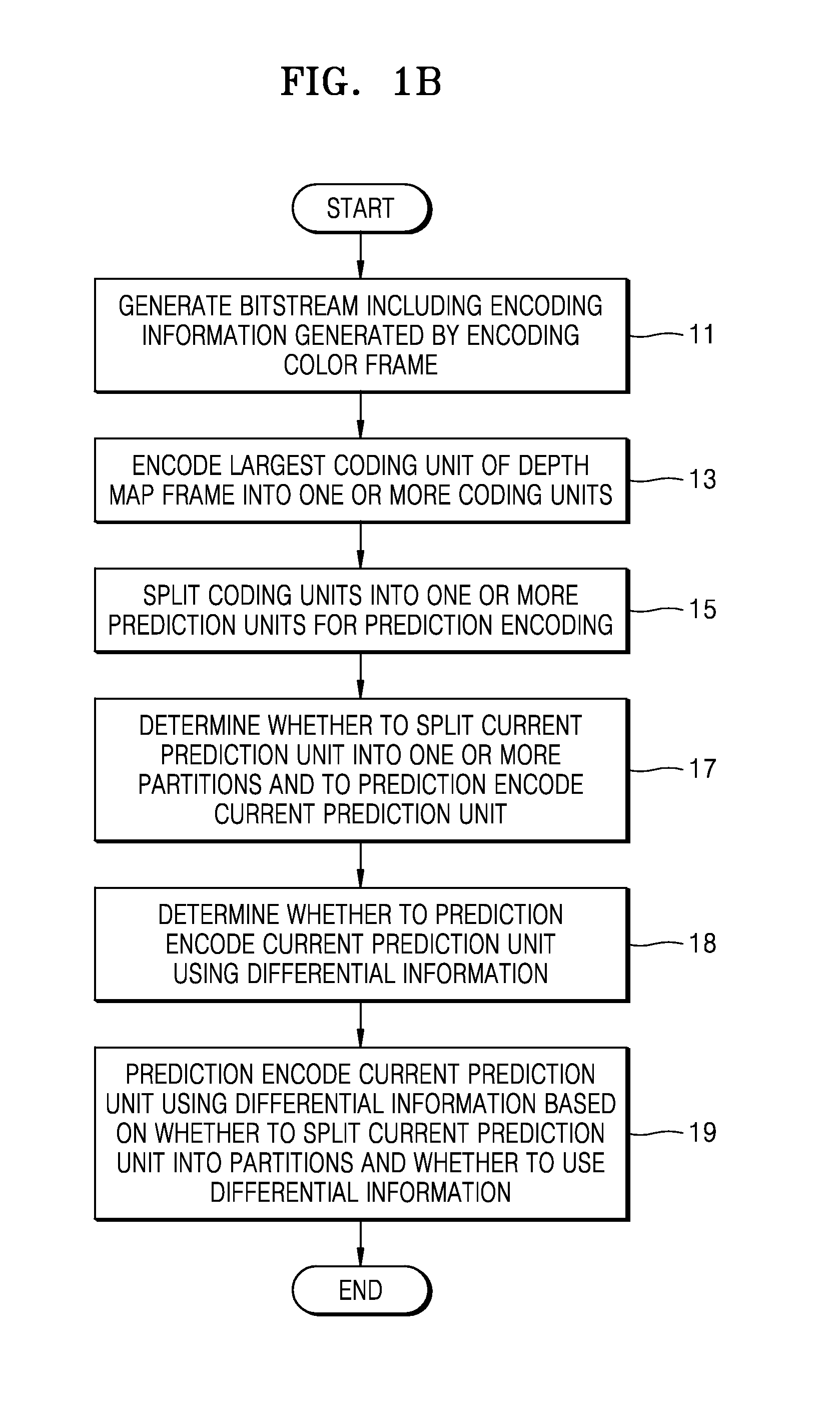

Status: Active | Publication: US20160073129A1 | Tags: Effective imaging, Efficiently depth map, Color television with pulse code modulation, Color television with bandwidth reduction, Depth map coding, Differential information

Disclosed is a depth map frame decoding method including: reconstructing a color frame obtained from a bitstream based on encoding information of the color frame; splitting a largest coding unit of a depth map frame obtained from the bitstream into one or more coding units based on split information of the depth map frame; splitting the one or more coding units into one or more prediction units for prediction decoding; determining whether to split a current prediction unit into one or more partitions for decoding, by obtaining from the bitstream information indicating whether to split it; if the current prediction unit is to be decoded by being split into one or more partitions, obtaining prediction information of the one or more prediction units from the bitstream and determining whether to decode the current prediction unit using differential information, which indicates the difference between the depth value of each partition in the original depth map frame and the depth value of that partition predicted from neighboring blocks of the current prediction unit; and decoding the current prediction unit using the differential information, based on whether it is split into partitions and whether the differential information is used.

Owner:SAMSUNG ELECTRONICS CO LTD
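
A sketch of the differential information, assuming each partition of the prediction unit is represented by a single depth value (as with constant partition values in depth modeling modes), the partition mask is already known, and the neighbor-based prediction for each partition is given. The function names are hypothetical:

```python
def partition_values(block, mask):
    """Rounded mean depth of the two partitions selected by a boolean mask."""
    a = [v for row, mrow in zip(block, mask) for v, m in zip(row, mrow) if m]
    b = [v for row, mrow in zip(block, mask) for v, m in zip(row, mrow) if not m]
    return round(sum(a) / len(a)), round(sum(b) / len(b))


def encode_deltas(original, mask, predicted):
    """Differential information: original partition value minus the neighbor-based prediction."""
    return tuple(o - p for o, p in zip(partition_values(original, mask), predicted))


def reconstruct(mask, predicted, deltas):
    """Decoder side: predicted partition value plus the transmitted difference."""
    va, vb = (p + d for p, d in zip(predicted, deltas))
    return [[va if m else vb for m in mrow] for mrow in mask]
```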

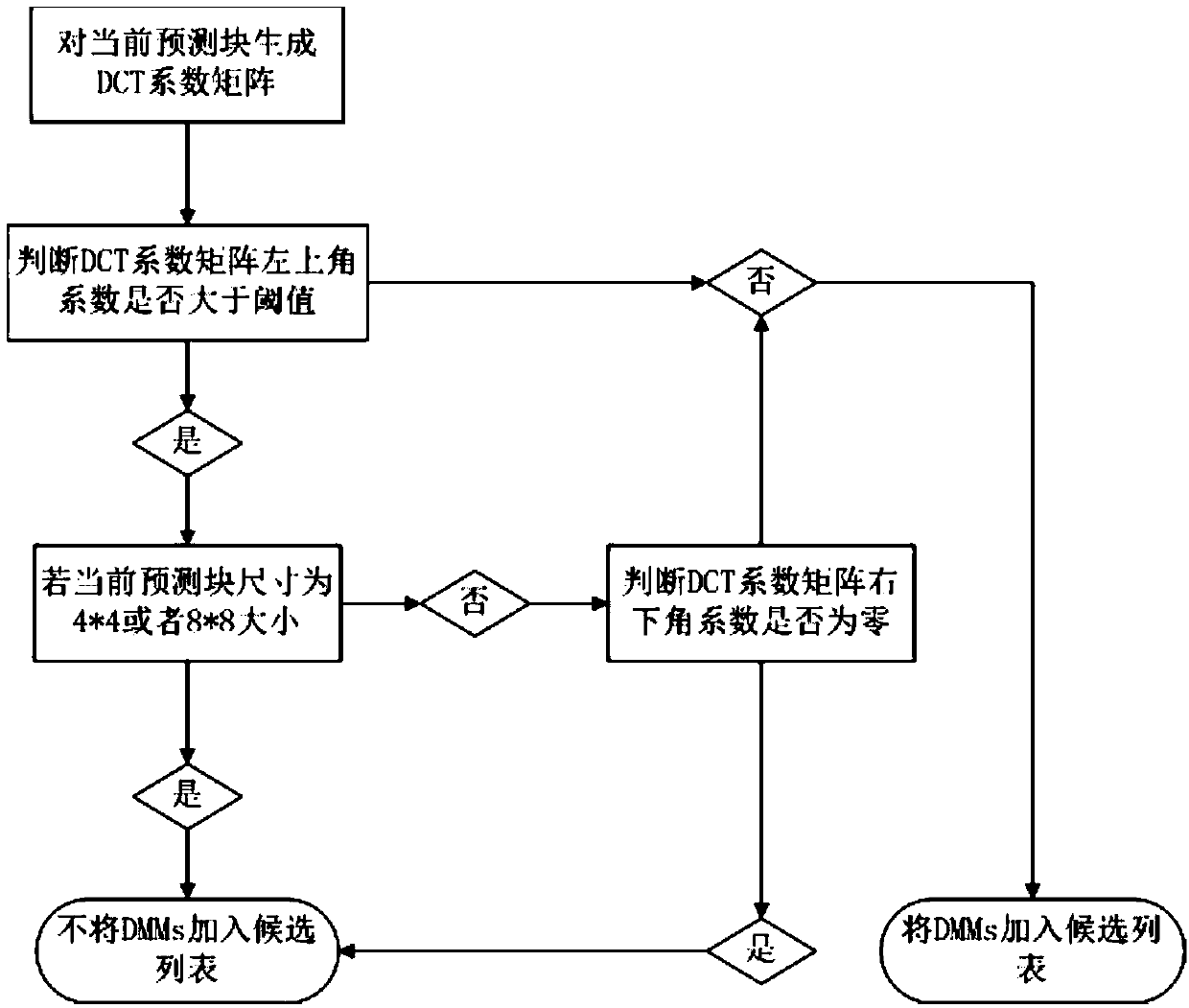

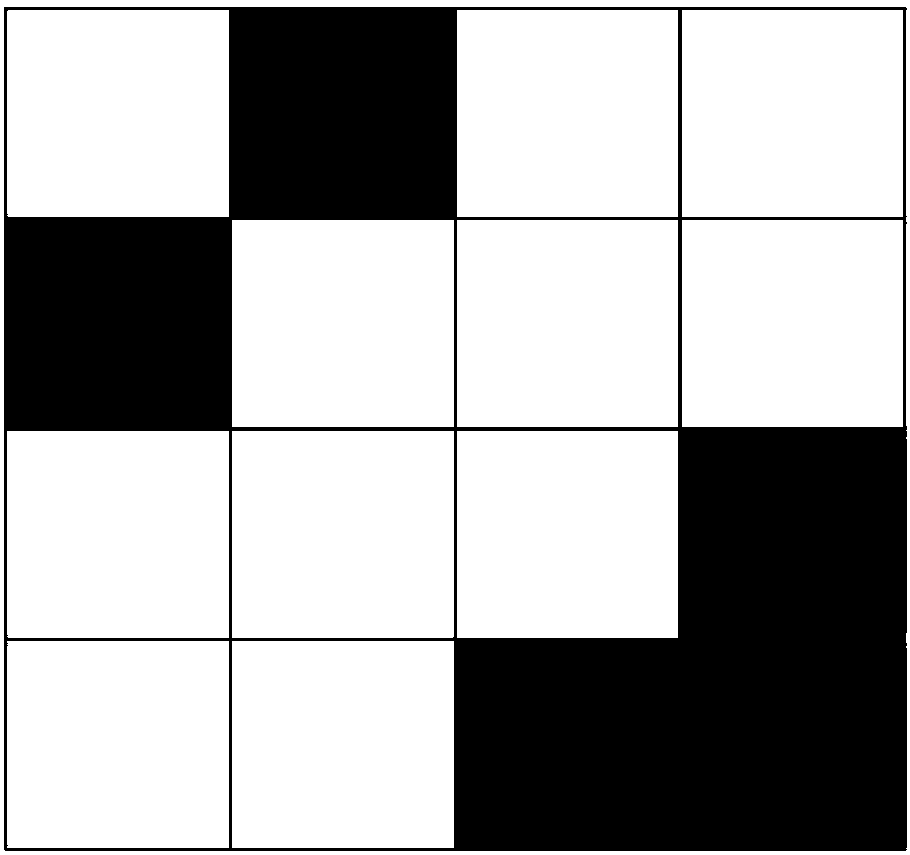

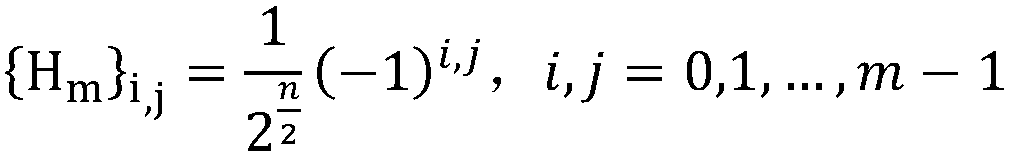

DCT-based 3D-HEVC fast intra-frame prediction decision-making method

Status: Active | Publication: CN107864380A | Tags: Easy to distinguish, High speed, Digital video signal modification, Coding block, Computation complexity

The invention discloses a DCT-based fast intra prediction decision method for 3D-HEVC. First, the DCT coefficient matrix of the current prediction block is calculated with the DCT formula; then the method judges whether the top-left coefficient of the current coefficient block indicates an edge, and further whether the bottom-right coefficient does; finally, depending on whether an edge is present, it decides whether the DMMs need to be added to the intra prediction mode candidate list. 3D-HEVC introduces depth maps to achieve better view synthesis, and for depth map intra prediction coding the Joint Collaborative Team on 3D Video Coding Extension Development (JCT-3V) proposed four new intra prediction modes for depth maps, the depth modeling modes (DMMs). Because the DCT compacts energy into few coefficients, it can clearly reveal whether a coding block contains an edge during 3D-HEVC depth map coding. The method has low computational complexity, short coding time, and good video reconstruction quality.

Owner:HANGZHOU DIANZI UNIV
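
A sketch of a DCT-based edge test. The orthonormal 2-D DCT-II is computed directly; the decision rule, which checks whether the first horizontal or vertical AC coefficient exceeds a threshold, and the threshold value are assumptions, since the abstract only says that corner coefficients are inspected. `needs_dmm` is a hypothetical name:

```python
import math


def dct2(block):
    """Orthonormal 2-D DCT-II of an N x N block, indexed as coeffs[v][u] (direct O(N^4) sum)."""
    n = len(block)

    def c(k):
        return math.sqrt(1 / n) if k == 0 else math.sqrt(2 / n)

    return [[c(u) * c(v) * sum(block[y][x]
                               * math.cos((2 * x + 1) * u * math.pi / (2 * n))
                               * math.cos((2 * y + 1) * v * math.pi / (2 * n))
                               for y in range(n) for x in range(n))
             for u in range(n)] for v in range(n)]


def needs_dmm(block, threshold=10.0):
    """Assumed rule: add DMMs to the candidate list when low-frequency AC energy is large."""
    coeffs = dct2(block)
    return max(abs(coeffs[0][1]), abs(coeffs[1][0])) > threshold
```

A flat block concentrates all its energy in the DC coefficient, so the DMM search can be skipped; a sharp depth edge produces large AC coefficients.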

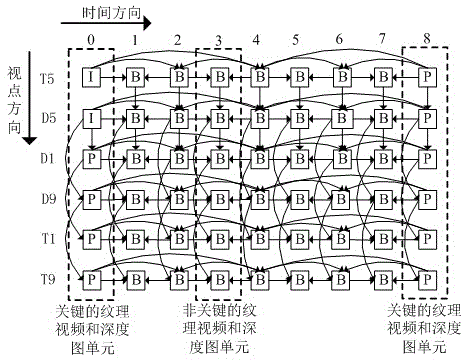

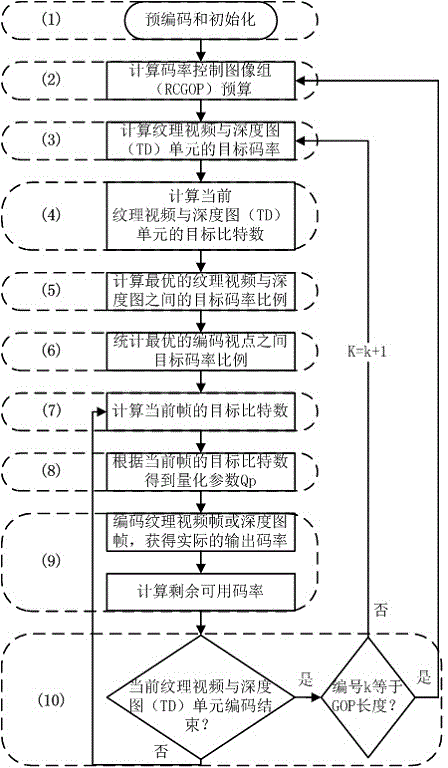

Code rate control method for multi-view texture video and depth map coding

Status: Active | Publication: CN104159095A | Tags: Improve rate control accuracy, Improve coding efficiency, Digital video signal modification, Stereoscopic systems, Depth map coding, Computation complexity

The invention discloses a rate control method for multi-view texture video and depth map coding. Given a target bit rate R[target], the encoder performs, within a multi-view texture video and depth map coding framework, rate allocation between coded viewpoints, rate allocation between the texture video and the depth map, rate allocation at the rate control group of pictures (RCGOP) level, rate allocation at the texture video and depth map (TD) unit level, and rate allocation at the frame level. The method provides a common processing framework for rate control of multi-view texture video and depth map coding, improves rate control accuracy, lowers computational complexity, and improves coding efficiency.

Owner:SHANGHAI UNIV

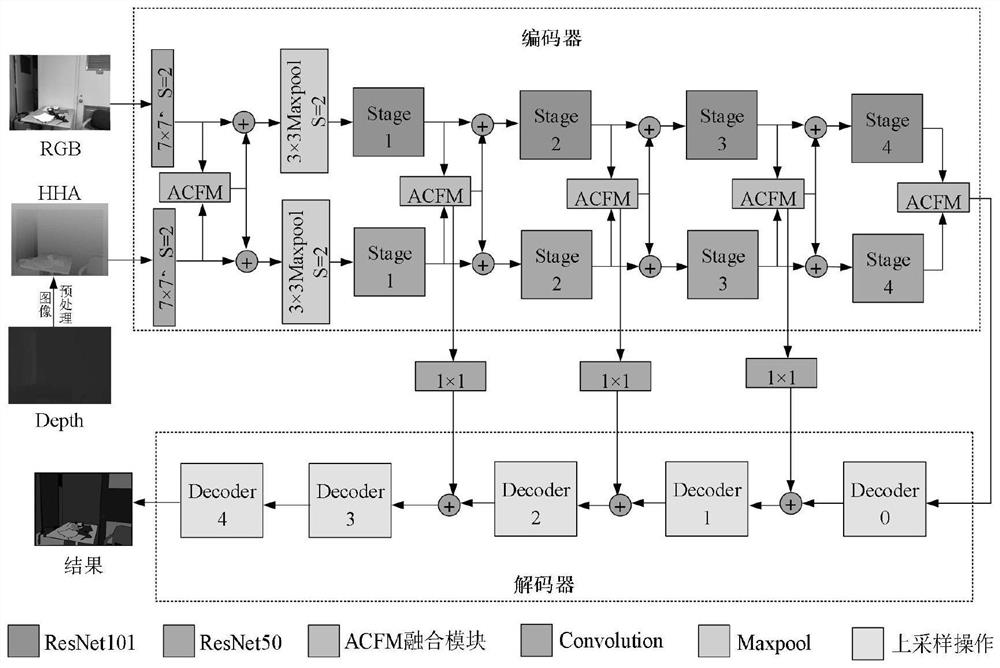

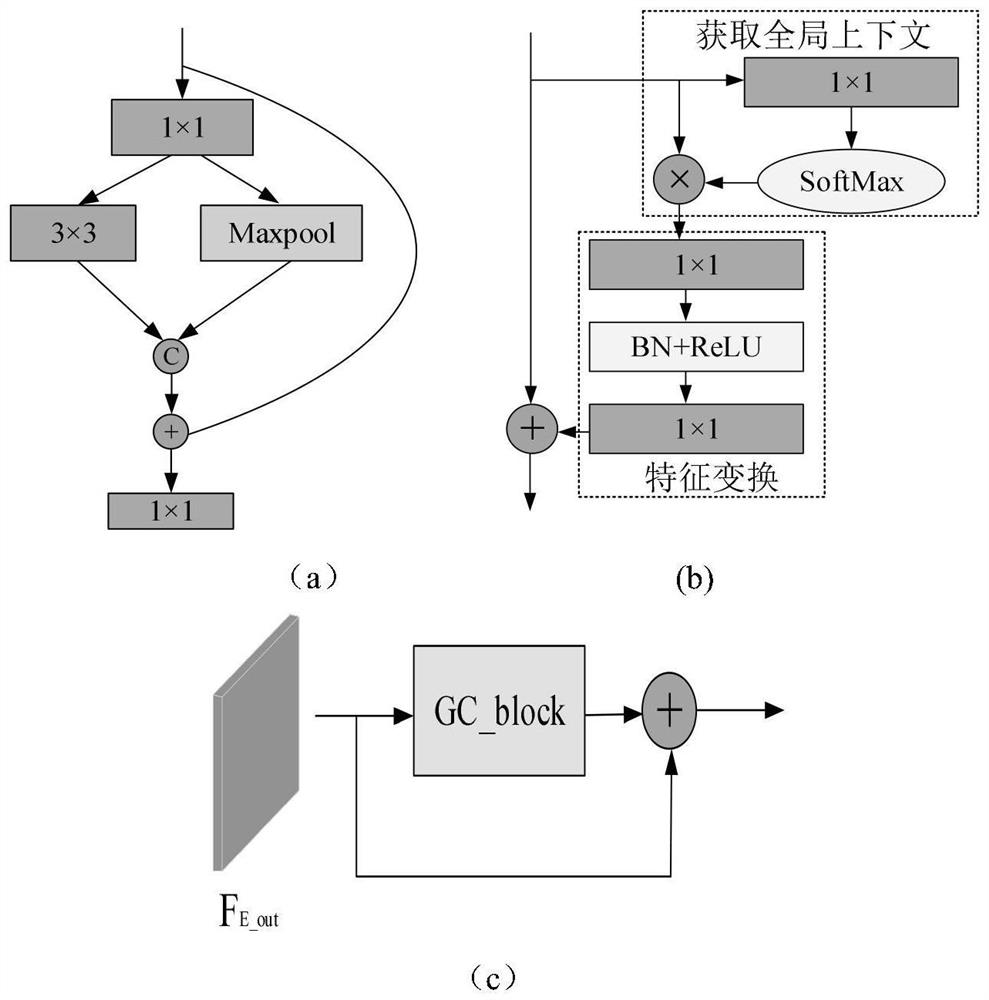

RGB-D image semantic segmentation method based on multi-modal feature fusion

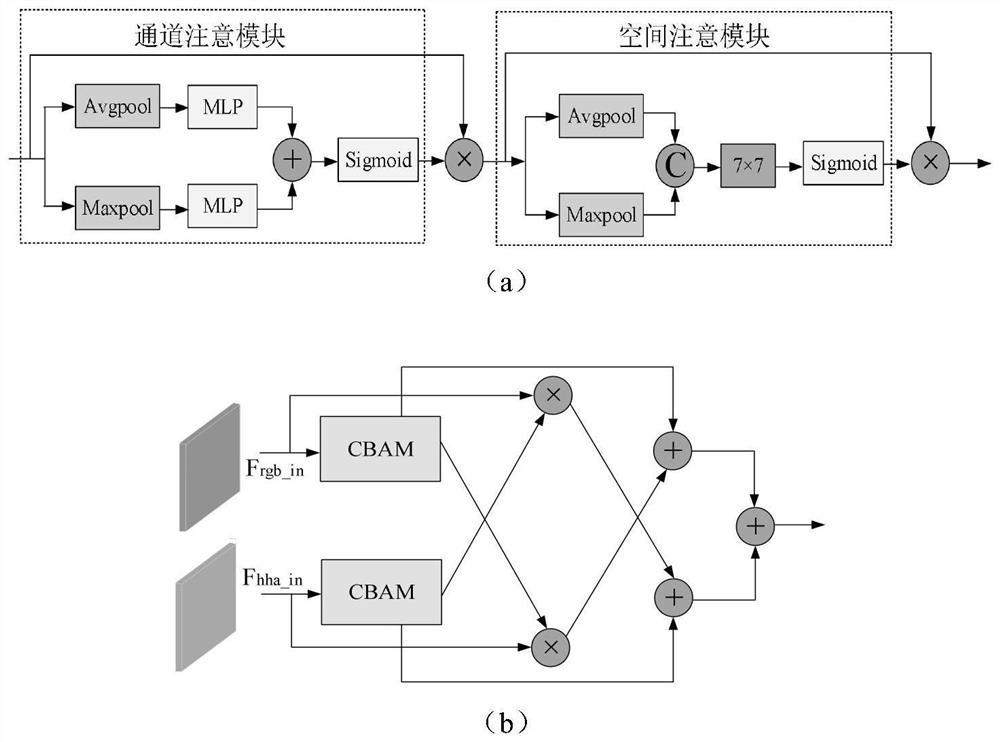

The invention belongs to the field of computer vision and relates to an RGB-D image semantic segmentation method based on multi-modal feature fusion. Because RGB and depth features differ intrinsically, fusing them effectively remains an open problem. To address it, an attention-guided multi-modal cross-fusion segmentation network (ACFNet) is proposed. It adopts an encoder-decoder structure, encodes the depth map as an HHA image, and uses an asymmetric dual-stream feature extraction network in which ResNet-101 and ResNet-50 serve as the backbones of the RGB encoder and the depth encoder, respectively; a global-local feature extraction module (GL) is added to the RGB encoder. To fuse RGB and depth features effectively, an attention-guided multi-modal cross-fusion module (ACFM) is proposed to make better use of the fused, enhanced feature representations at multiple stages.

Owner:ZHONGBEI UNIV

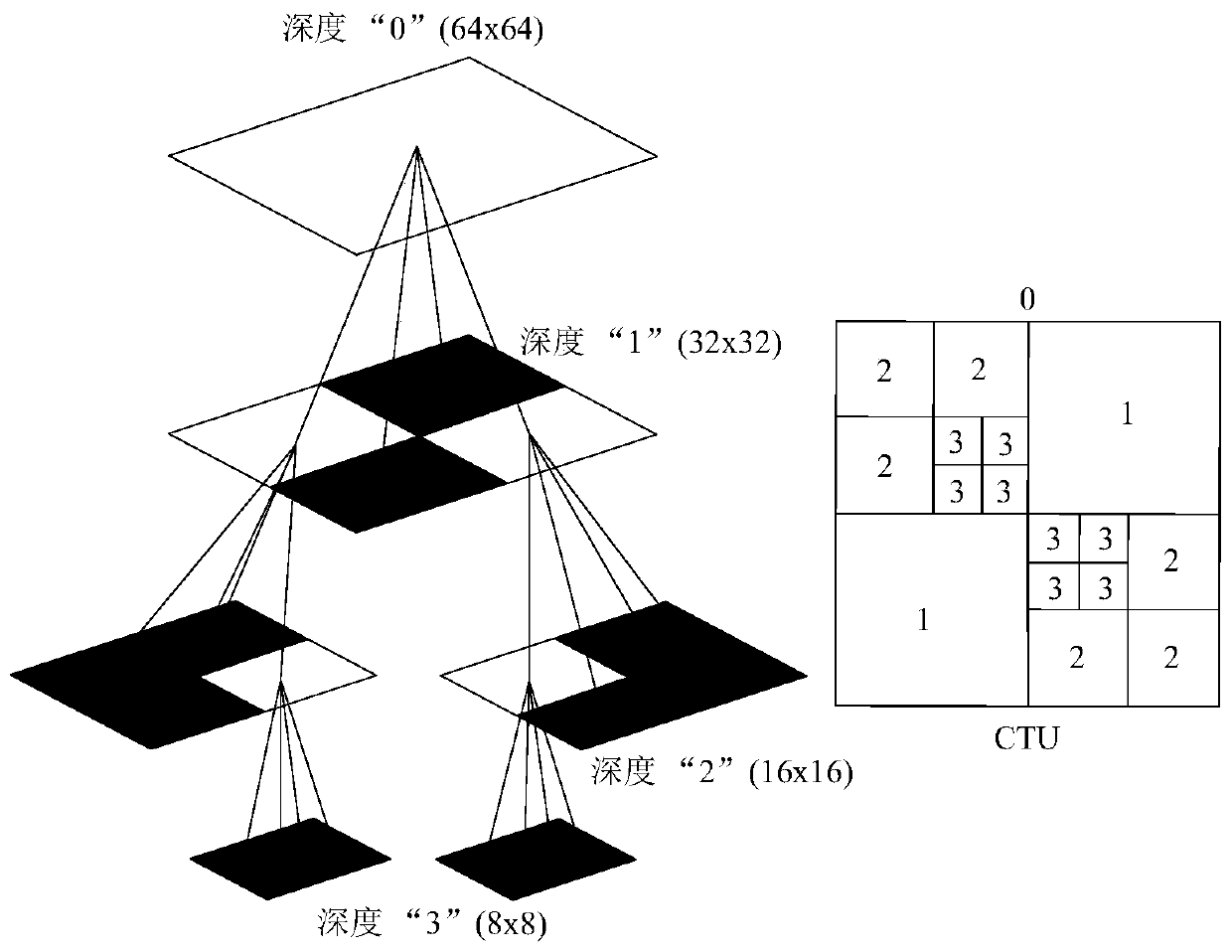

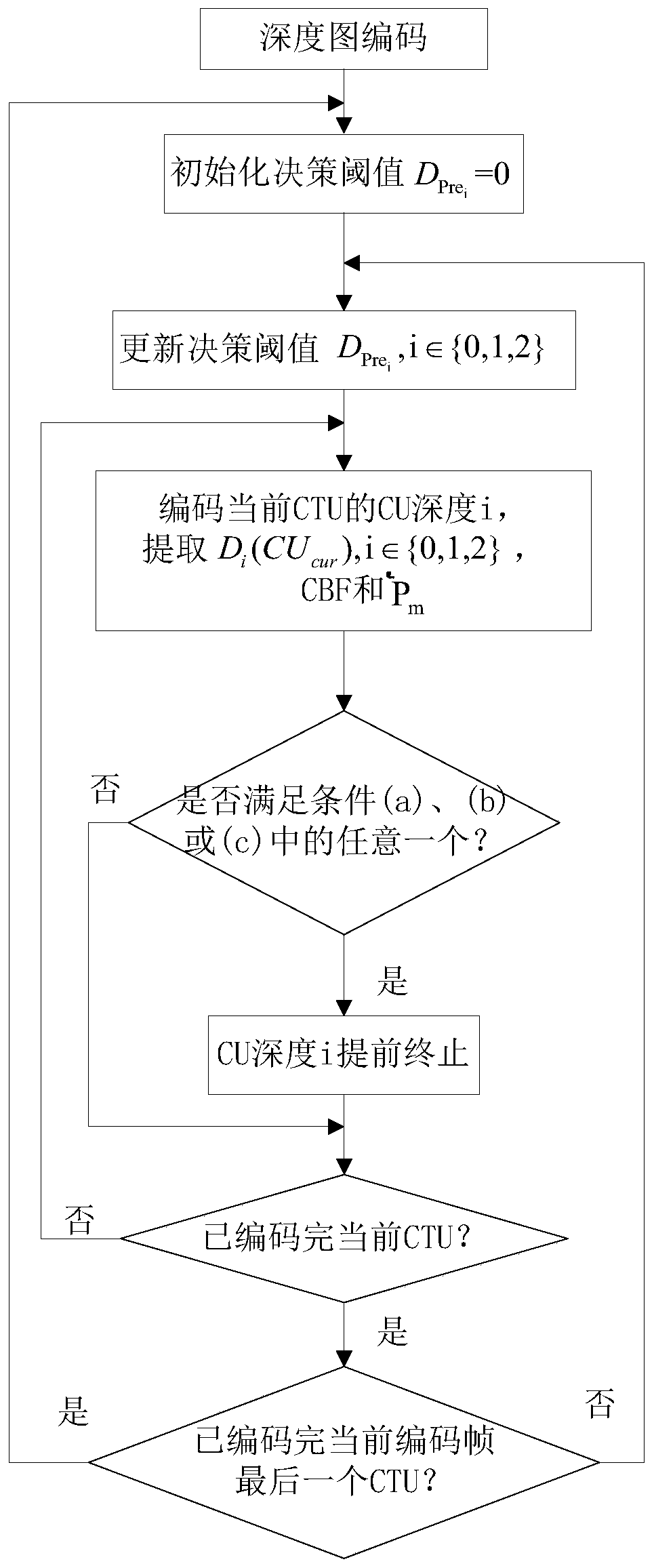

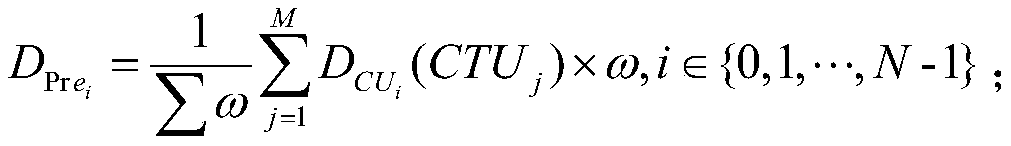

Rapid CU depth selection method for 3D-HEVC intra-frame depth map

Status: Active | Publication: CN110446052A | Tags: Reduce encoding computational complexity, Simple method design, Digital video signal modification, Depth map coding, Intra-frame

The invention discloses a fast CU depth selection method for 3D-HEVC intra-frame depth maps. First, the optimal depth partitioning results of the already coded CTUs of the current frame are learned to obtain an early-termination splitting threshold. Second, the optimal prediction distortion of the current CU at depth i, the corresponding coding parameter CBF (coded block flag), and the optimal prediction mode Pm are obtained. Finally, the optimal prediction distortion of the current CU is compared with the early-termination threshold and, in combination with the CBF and the optimal prediction mode Pm of the current CU, the method decides whether to terminate splitting at depth i early or to continue splitting downward. This enables early splitting decisions and fast termination at CU depth i for depth maps, greatly reducing 3D-HEVC intra-frame depth map coding time while keeping coding quality essentially unchanged.

Owner:NANHUA UNIV

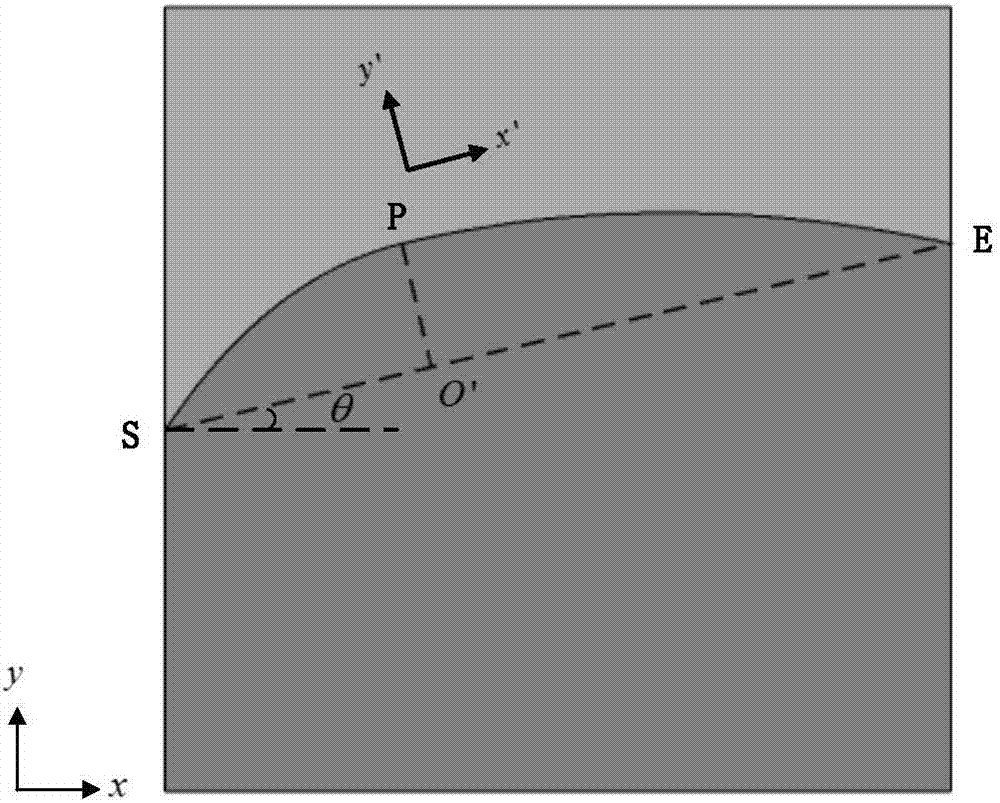

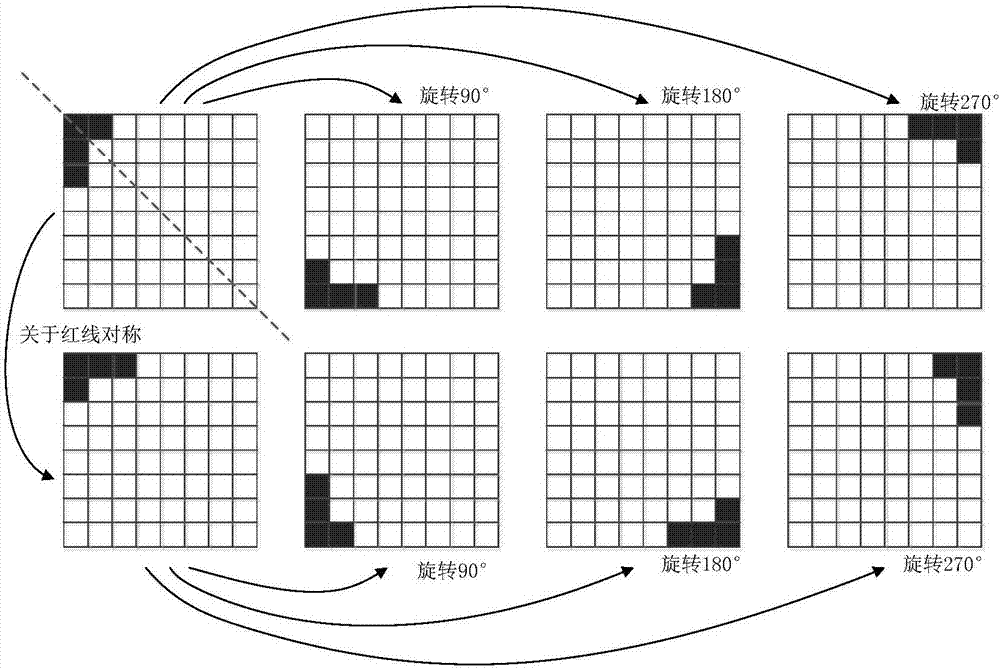

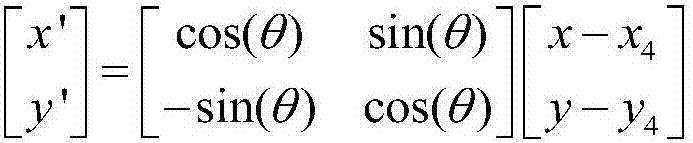

Depth image coding method based on double parabolic partitioning template

Status: Active | Publication: CN107071478A | Tags: Small amount of calculation, Improve consistency, Digital video signal modification, Stereoscopic systems, Pattern recognition, Split lines

The present invention belongs to the technical field of video coding and relates to a depth image coding method based on a double-parabola partitioning template. The method fits the depth boundary split line with two parabolas to divide a block into two partitions, forming a partitioning template, and then adjusts the resulting templates. Compared with the existing Wedgelet mode, fitting the split line with two parabolas makes the partition boundary smoother, so that object boundaries in the depth image can be described with larger blocks and complex real-world boundaries can be accommodated better. Furthermore, only some templates are generated directly from the parabolas and the rest are obtained by rotation and reflection, which greatly reduces the computation needed for template generation and makes the generated templates more consistent. Thresholds on template redundancy are set for 16×16 and 32×32 blocks, which reduces the number of templates and shortens the subsequent coding time.

Owner:Chengdu Tubiyou Technology Co., Ltd. (成都图必优科技有限公司)

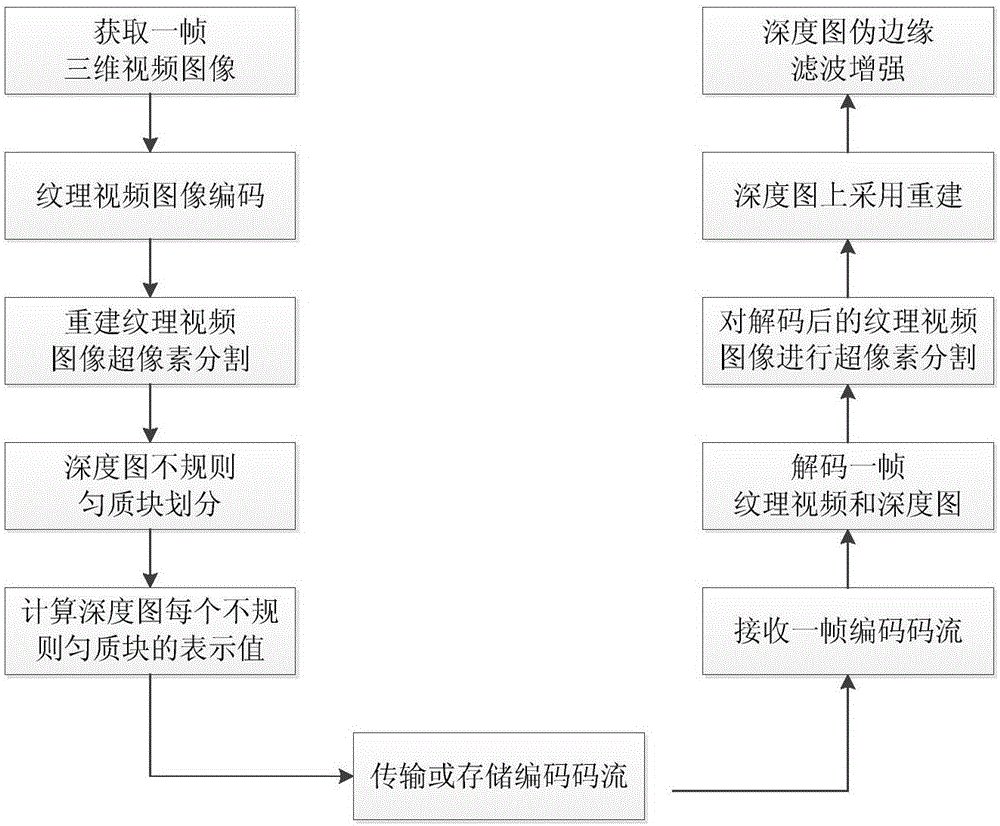

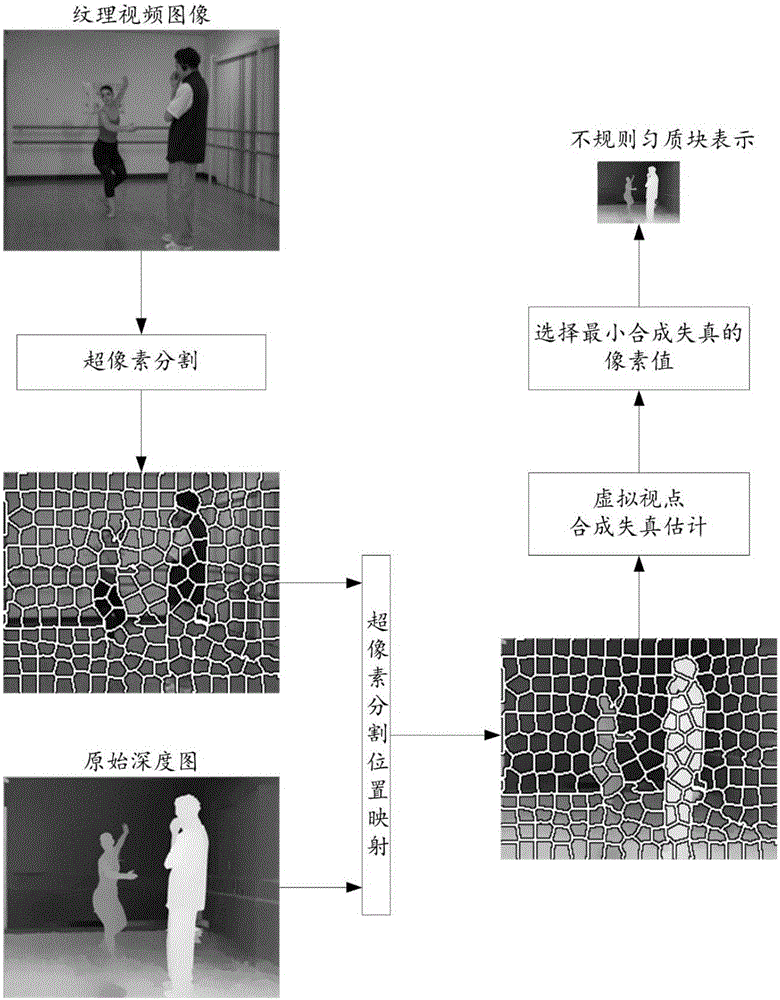

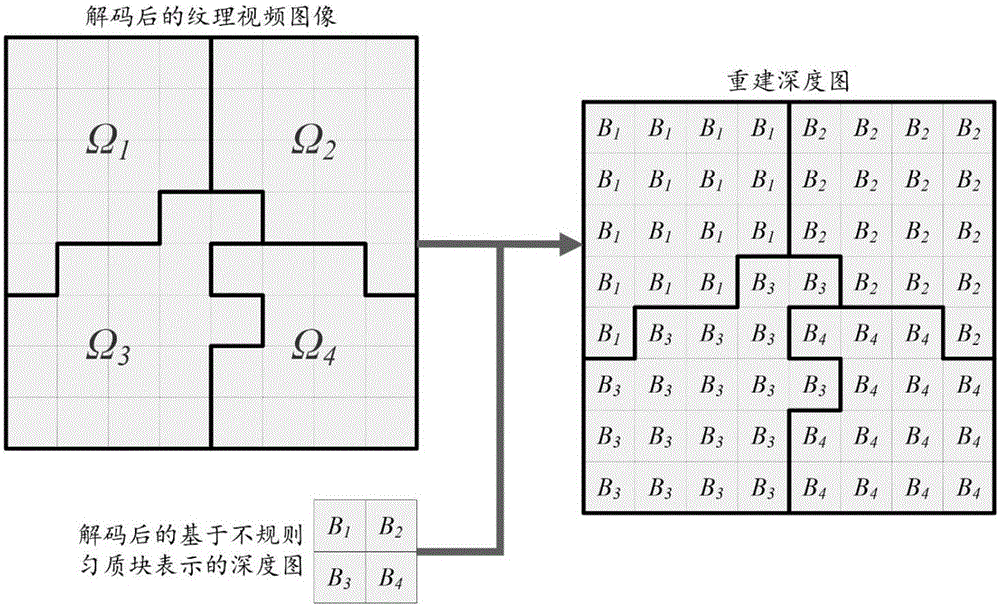

Method for coding and decoding three-dimensional video depth map based on segmentation of irregular homogeneous blocks

Status: Active | Publication: CN106162198A | Tags: Improve coding efficiency, Reduce computational complexity, Digital video signal modification, Stereoscopic systems, Lossless coding, Computation complexity

The invention relates to a method for coding and decoding a three-dimensional video depth map based on segmentation into irregular homogeneous blocks. The method comprises the following steps: (1) inputting a frame of the depth map and the corresponding texture image; (2) coding the texture video with a standard coding method; (3) performing superpixel segmentation on the reconstructed texture video; (4) dividing the depth map into irregular homogeneous blocks; (5) calculating, for each irregular homogeneous block, the depth value that minimizes distortion in the synthesized region, which then represents the whole block; (6) losslessly coding the depth map represented by the irregular homogeneous blocks; (7) receiving and decoding a frame of the three-dimensional video bitstream; (8) performing superpixel segmentation on the decoded video image; (9) reconstructing the decoded depth map; and (10) enhancing the quality of the reconstructed depth map with a false-edge filtering method. By fully considering the piecewise-smooth nature of depth maps and the synthesis distortion of virtual viewpoints, the method improves depth map coding efficiency, reduces the computational complexity of depth map coding, and is compatible with any standard depth map coding method.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

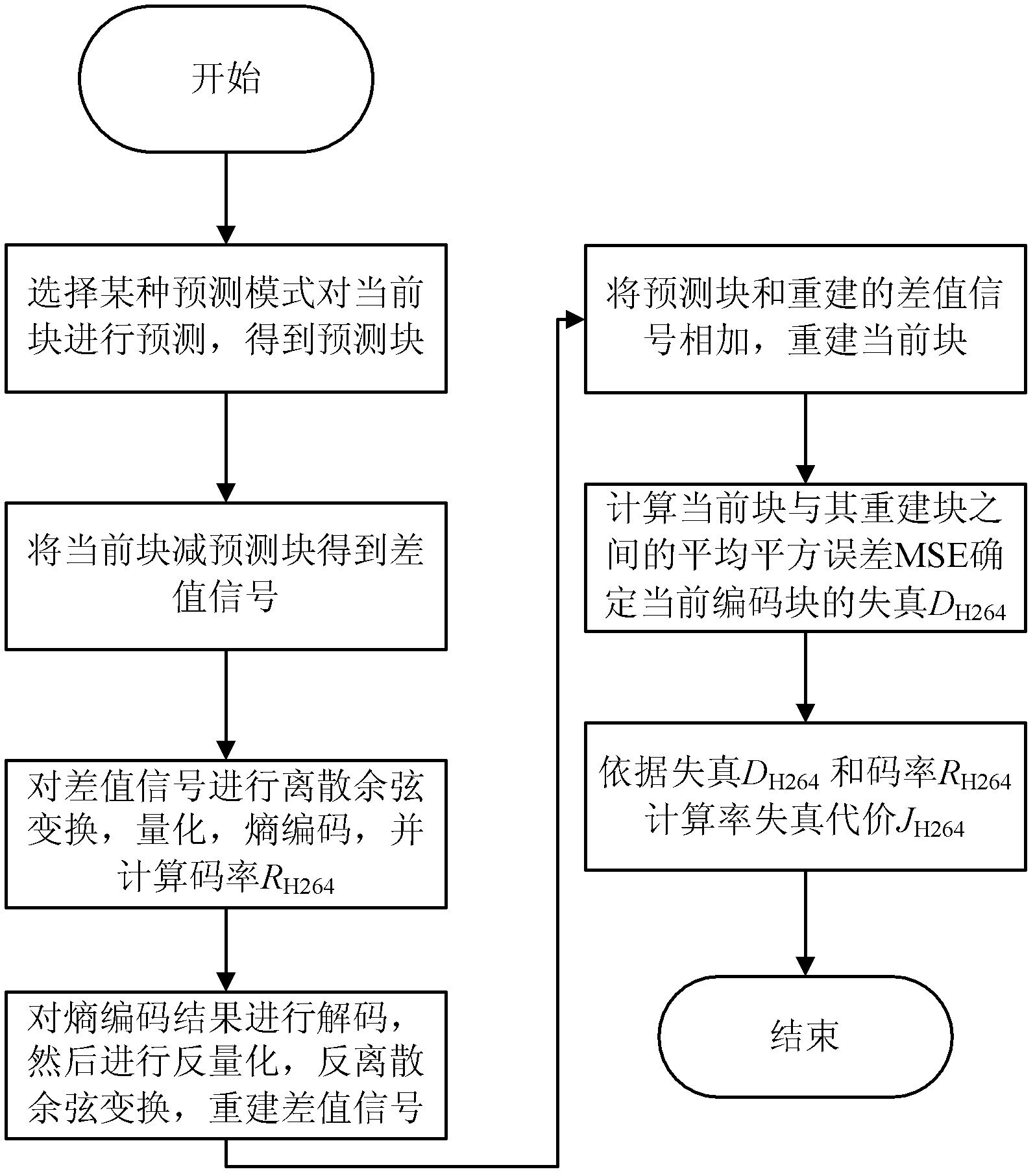

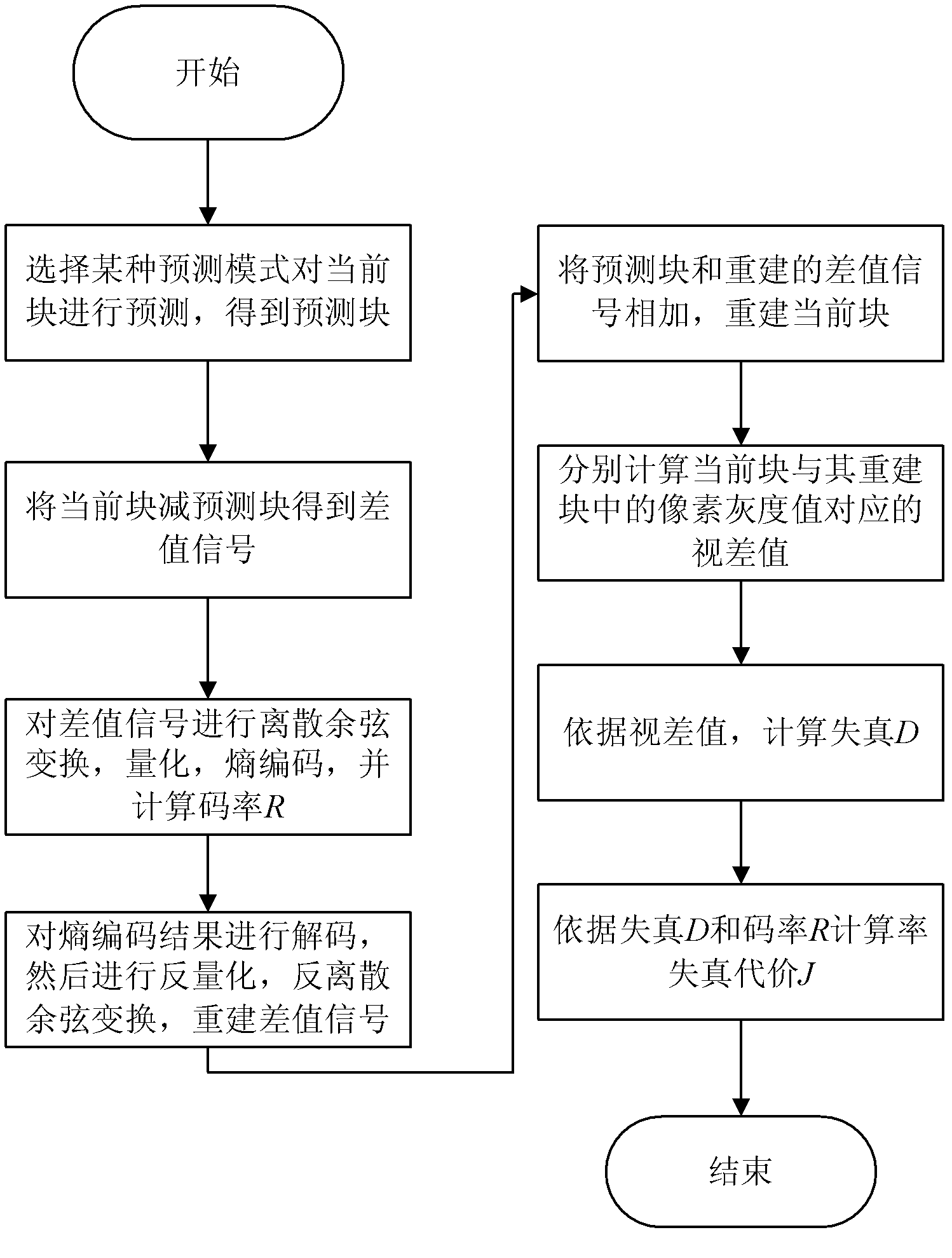

Depth view encoding rate distortion judgment method for virtual view quality

InactiveCN102158710AImprove coding efficiencySimple calculationTelevision systemsDigital video signal modificationParallaxDepth map coding

The invention discloses a rate-distortion decision method for depth view coding oriented toward virtual view quality. The method comprises the following steps:
- perform prediction on the current coding block to obtain a predicted block;
- compute the difference between the current coding block and the predicted block, and apply a discrete cosine transform, quantization and entropy coding to it to obtain the bit rate of the current coding block;
- convert the pixel gray values of the current coding block and the predicted block into disparity values;
- compute the distortion of the current coding block from the converted disparity values;
- compute the rate-distortion cost of the current coding block from the distortion and the bit rate.

The method better reflects how the compression distortion of the depth view affects the quality of the synthesized virtual view. It improves the coding efficiency of three-dimensional video and can be applied in three-dimensional video coding standards.
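The rate-distortion cost in these steps is computed in the disparity domain rather than on gray values. A minimal Python sketch follows. It makes three assumptions:
- **Gray-to-disparity conversion.** The common 8-bit inverse-depth quantization between `z_near` and `z_far` is used, followed by disparity = focal × baseline / Z.
- **Cost form.** The cost takes the usual Lagrangian form J = D + λR.
- **Rate.** `rate_bits` is supplied by the transform, quantization and entropy-coding stage, which is not shown.

All parameter names are hypothetical.

```python
def gray_to_disparity(v, focal, baseline, z_near, z_far):
    """Map an 8-bit depth gray value to disparity (assumed inverse-depth quantization)."""
    inv_z = (v / 255.0) * (1.0 / z_near - 1.0 / z_far) + 1.0 / z_far
    return focal * baseline * inv_z


def rd_cost(cur_block, pred_block, rate_bits, lam, cam):
    """J = D + lambda * R, with D the summed squared disparity difference.

    cam is a hypothetical (focal, baseline, z_near, z_far) tuple.
    """
    dist = 0.0
    for c_row, p_row in zip(cur_block, pred_block):
        for c, p in zip(c_row, p_row):
            diff = gray_to_disparity(c, *cam) - gray_to_disparity(p, *cam)
            dist += diff * diff
    return dist + lam * rate_bits
```

The conversion is linear in the gray value, so the same gray-level error near the camera and far from it is weighted by the camera geometry rather than treated uniformly.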

Owner:SHANDONG UNIV

Method for predicting depth map coding distortion of two-dimensional free viewpoint video

ActiveUS20170324961A1Reduce calculationReflection distortionDigital video signal modificationStereoscopic systemsStereoscopic videoViewpoints

Disclosed is a method for predicting depth map coding distortion of a two-dimensional free viewpoint video. The method includes:
- inputting sequences of texture maps and depth maps of stereoscopic videos with two or more viewpoints;
- using a view synthesis algorithm to synthesize a texture map of a first intermediate viewpoint between the current viewpoint to be coded and a first adjacent viewpoint, and a texture map of a second intermediate viewpoint between the current viewpoint to be coded and a second adjacent viewpoint;
- recording a synthetic characteristic of each pixel according to the texture maps and generating a distortion prediction weight;
- calculating the total distortion according to the synthetic characteristics and the distortion prediction weights.
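The last step combines per-pixel synthetic characteristics with distortion prediction weights. A minimal Python sketch of that combination follows. The characteristic labels (`"visible"`, `"occluded"`) and their weights are hypothetical, as is the choice of squared depth error as the per-pixel term. The abstract does not specify either.

```python
def predicted_total_distortion(orig, recon, characteristic, weights):
    """Sum per-pixel squared depth error, weighted by each pixel's synthesis class.

    characteristic: 2-D list of hypothetical class labels, one per pixel
    weights: maps each label to its (assumed) distortion prediction weight
    """
    total = 0.0
    for o_row, r_row, c_row in zip(orig, recon, characteristic):
        for o, r, c in zip(o_row, r_row, c_row):
            total += weights[c] * (o - r) ** 2
    return total
```

A weight of zero, for example, models a pixel whose depth error cannot show up in the synthesized view.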

Owner:SHENZHEN GRADUATE SCHOOL TSINGHUA UNIV

Three-dimensional video depth map coding method based on just distinguishable parallax error estimation

ActiveCN103826135AReduce bit rateImprove subjective qualityDigital video signal modificationParallaxViewpoints

The invention discloses a three-dimensional video depth map coding method based on just-distinguishable parallax error estimation. The method comprises the following steps: (1) inputting a frame of the three-dimensional video depth map and the corresponding texture image; (2) synthesizing the texture image of a virtual viewpoint; (3) calculating the just-distinguishable error map of the virtual-viewpoint texture image; (4) calculating the range of just-distinguishable parallax error for the depth map; (5) performing intra-frame and inter-frame prediction on the depth map and selecting the prediction mode with the minimum prediction residual energy; (6) adjusting the prediction residual of the depth map to obtain the prediction residual block with the minimum variance; and (7) encoding the depth map of the current frame. The method can greatly reduce the depth map coding bit rate while keeping the PSNR of the synthesized virtual-view video unchanged. It can also substantially improve the subjective quality of the synthesized virtual viewpoint.
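Step (6) adjusts each residual within a tolerance so that the residual block has lower variance. A minimal Python heuristic for that adjustment follows, under these assumptions:
- **Tolerances.** The per-pixel tolerances are assumed to have already been converted from the just-distinguishable parallax range into depth levels.
- **Adjustment rule.** Each residual is moved as close to the block mean as its tolerance allows.

Every value ends up no farther from the original mean, so the variance never increases. This is not guaranteed to reach the exact minimum-variance solution the patent describes.

```python
def adjust_residuals(residuals, tolerance):
    """Pull each residual toward the block mean, staying within +/- its tolerance.

    Heuristic sketch: variance never increases, but the result is not
    necessarily the minimum-variance block.
    """
    flat = [r for row in residuals for r in row]
    mean = sum(flat) / len(flat)
    out = []
    for r_row, t_row in zip(residuals, tolerance):
        # Clamp the mean into [r - t, r + t] for each residual r.
        out.append([min(max(mean, r - t), r + t) for r, t in zip(r_row, t_row)])
    return out
```

A real encoder would round the adjusted values back to integers before transform coding.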

Owner:ZHEJIANG UNIV

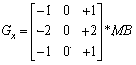

Depth video encoding method based on edges and oriented toward virtual visual rendering

InactiveCN103997653AProtect edge informationLight in massDigital video signal modificationAlgorithmSobel edge detection

The invention discloses an edge-based depth video encoding method oriented toward virtual view rendering. The method comprises the following steps:
1. Edge detection: the depth map macroblocks are processed with the Sobel edge detection algorithm to obtain an edge value for each macroblock.
2. Macroblock classification: a threshold λ is set, each macroblock's edge value is compared with λ, and the macroblocks are classified into edge regions and flat regions.
3. Encoding: the depth map macroblocks are encoded using different prediction modes.
4. Median mean-shift filtering: a mean-shift filter removes blocking artifacts from the encoded macroblocks in edge regions while preserving the edges.

The algorithm increases the compression speed of the depth map and improves the coding quality of the depth map used for virtual view rendering. The subjective quality of the virtual view videos remains essentially unchanged.
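Steps 1 and 2 (Sobel edge value per macroblock, then threshold classification) can be sketched in Python as follows. The definition of the "edge value" is an assumption: the sum of |Gx| + |Gy| over the block's interior pixels. The threshold `lam` is a free parameter, and the patent's actual value is not given.

```python
def sobel_edge_value(block):
    """Sum of |Gx| + |Gy| (3x3 Sobel) over interior pixels of one macroblock.

    Assumption: this aggregate stands in for the patent's per-macroblock edge value.
    """
    h, w = len(block), len(block[0])
    total = 0
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = (block[y - 1][x + 1] + 2 * block[y][x + 1] + block[y + 1][x + 1]
                  - block[y - 1][x - 1] - 2 * block[y][x - 1] - block[y + 1][x - 1])
            gy = (block[y + 1][x - 1] + 2 * block[y + 1][x] + block[y + 1][x + 1]
                  - block[y - 1][x - 1] - 2 * block[y - 1][x] - block[y - 1][x + 1])
            total += abs(gx) + abs(gy)
    return total


def classify_macroblock(block, lam):
    """Label a macroblock as 'edge' or 'flat' by comparing its edge value with lam."""
    return "edge" if sobel_edge_value(block) > lam else "flat"
```

Depth maps are mostly flat with sharp object boundaries, so even a crude aggregate like this separates the two classes well.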

Owner:SHANGHAI UNIV

Method of Texture Merging Candidate Derivation in 3D Video Coding

ActiveUS20160050435A1Color television with pulse code modulationColor television with bandwidth reductionDepth map codingEncoder

A method of depth map coding for a three-dimensional video coding system that incorporates a consistent texture merging candidate is disclosed. In a first embodiment, the current depth block inherits the motion information of the collocated texture block only if a reference depth picture has the same POC (picture order count) and ViewId (view identifier) as the reference texture picture of the collocated texture block. In another embodiment, the encoder assigns the same total number of reference pictures to the depth component and to the collocated texture component for each reference list. Furthermore, the POC and ViewId of the depth image unit and of the texture image unit are assigned to be the same for each reference list and each reference picture.
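The first embodiment lets the depth block inherit texture motion only if a depth reference picture matches the texture reference picture in both POC and ViewId. A minimal Python sketch of that check follows. `RefPicture`, `matching_depth_ref` and `inherit_texture_motion` are hypothetical names for illustration, not identifiers from any 3D-HEVC reference software.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class RefPicture:
    """Hypothetical reference-picture record."""
    poc: int      # picture order count
    view_id: int  # view identifier


def matching_depth_ref(texture_ref: RefPicture,
                       depth_refs: Sequence[RefPicture]) -> Optional[int]:
    """Index of a depth reference with the same POC and ViewId, else None."""
    for idx, ref in enumerate(depth_refs):
        if ref.poc == texture_ref.poc and ref.view_id == texture_ref.view_id:
            return idx
    return None


def inherit_texture_motion(texture_mv, texture_ref, depth_refs):
    """Return (motion vector, depth ref index) if inheritance is allowed, else None."""
    idx = matching_depth_ref(texture_ref, depth_refs)
    return None if idx is None else (texture_mv, idx)
```

The other embodiment, which assigns identical reference lists to depth and texture, makes this lookup succeed by construction.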

Owner:HFI INNOVATION INC

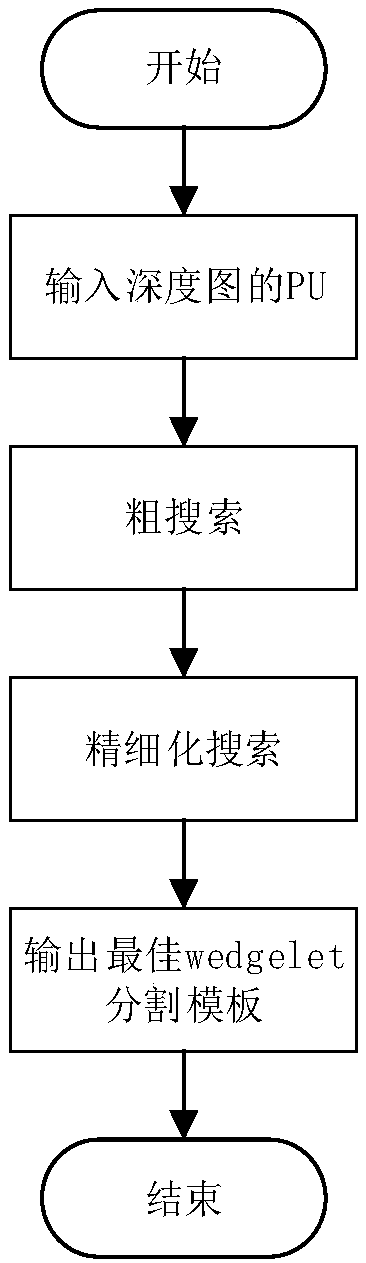

Method of reducing stereo video depth map coding complexity

ActiveCN107592538AReduce computational complexityHigh speedDigital video signal modificationComputation complexityRound complexity

The present invention discloses a method of reducing stereo video depth map coding complexity. The method is mainly used to reduce the coding complexity of depth map edges in 3D-HEVC. It comprises the following steps:
- K-means clustering divides the pixels of the input depth map PU block into two clearly distinct categories, producing a K-means clustering template.
- The similarity matching degree is calculated between the K-means clustering template and the wedge segmentation templates generated at coding initialization. The best similarity matching degree and the index of the corresponding wedge segmentation template are recorded.
- A search radius for the optimal wedge segmentation template is determined from the best similarity matching degree.
- The rate distortion of all wedge segmentation templates within the search radius is calculated, and the one with the smallest rate distortion is selected as the optimal wedge segmentation template.

The method abandons a search scheme that requires wedge nodes to be stored in advance at the encoder and decoder, which saves system cache. It reduces the computational complexity of the DMM1 mode and cuts total coding time by 7.1% on average while maintaining coding quality.
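The first two steps can be sketched in Python: two-class K-means on the PU pixels, then matching the resulting template against the precomputed wedge templates. The matching measure is an assumption: the fraction of agreeing pixels, with a label swap treated as equivalent. The search-radius refinement with full rate-distortion evaluation is not shown, and the function names are hypothetical.

```python
def two_means(values, iters=20):
    """1-D K-means with k = 2; returns a 0/1 label per value."""
    c0, c1 = float(min(values)), float(max(values))
    labels = [0] * len(values)
    for _ in range(iters):
        labels = [0 if abs(v - c0) <= abs(v - c1) else 1 for v in values]
        g0 = [v for v, lab in zip(values, labels) if lab == 0]
        g1 = [v for v, lab in zip(values, labels) if lab == 1]
        new0 = sum(g0) / len(g0) if g0 else c0
        new1 = sum(g1) / len(g1) if g1 else c1
        if (new0, new1) == (c0, c1):  # centers stable: converged
            break
        c0, c1 = new0, new1
    return labels


def kmeans_template(block):
    """Binary K-means clustering template with the block's shape."""
    n = len(block[0])
    labels = two_means([v for row in block for v in row])
    return [labels[i:i + n] for i in range(0, len(labels), n)]


def match_degree(a, b):
    """Assumed similarity: agreeing-pixel fraction, label swap allowed."""
    cells = [(p, q) for ra, rb in zip(a, b) for p, q in zip(ra, rb)]
    agree = sum(p == q for p, q in cells) / len(cells)
    return max(agree, 1.0 - agree)


def best_wedge(block, wedges):
    """Index and match degree of the wedge template closest to the K-means one."""
    km = kmeans_template(block)
    scores = [match_degree(km, w) for w in wedges]
    best = max(range(len(wedges)), key=scores.__getitem__)
    return best, scores[best]
```

In the described method, a high match degree would allow a small search radius around `best`, which is where the time saving comes from.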

Owner:HUAZHONG UNIV OF SCI & TECH

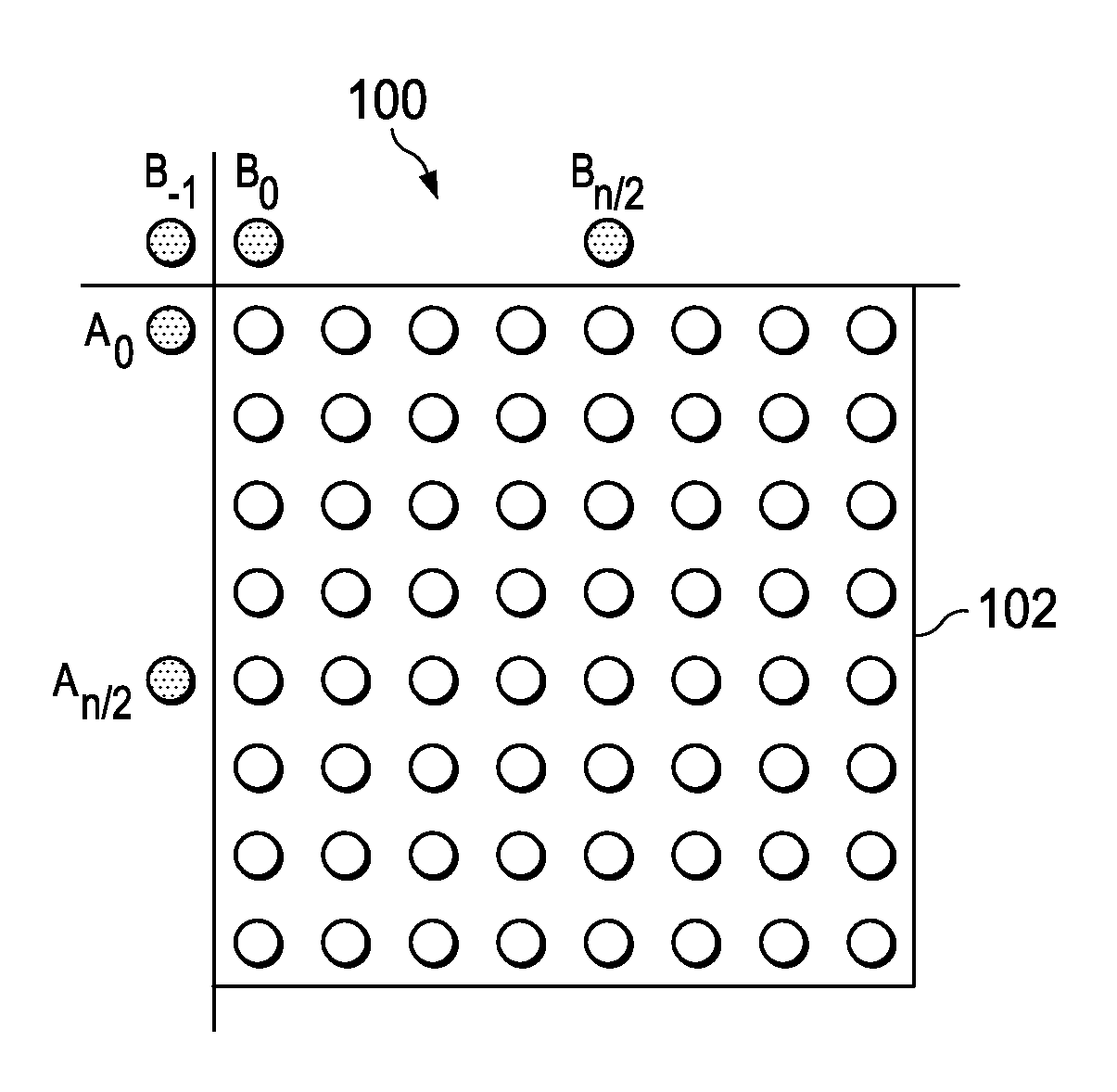

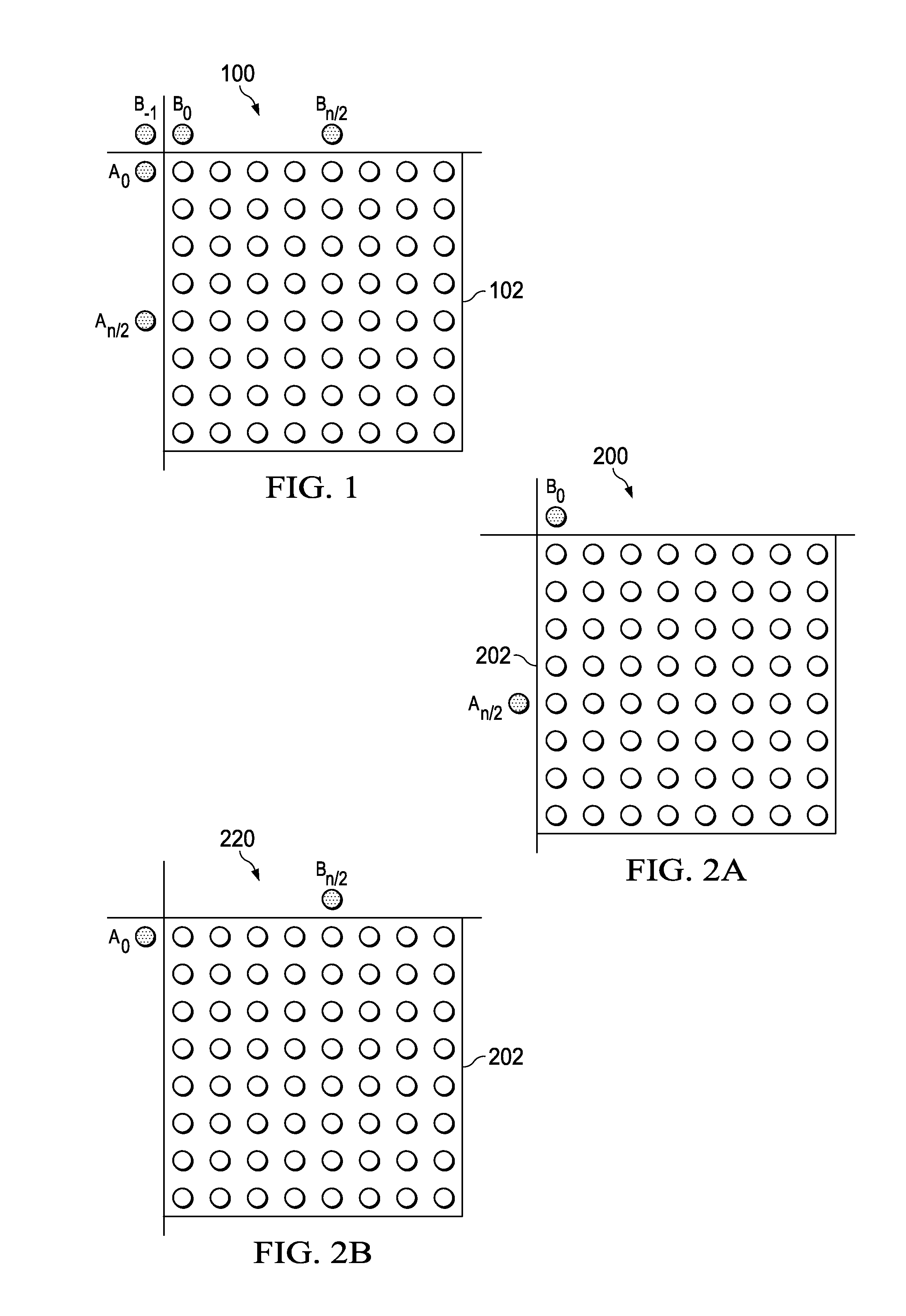

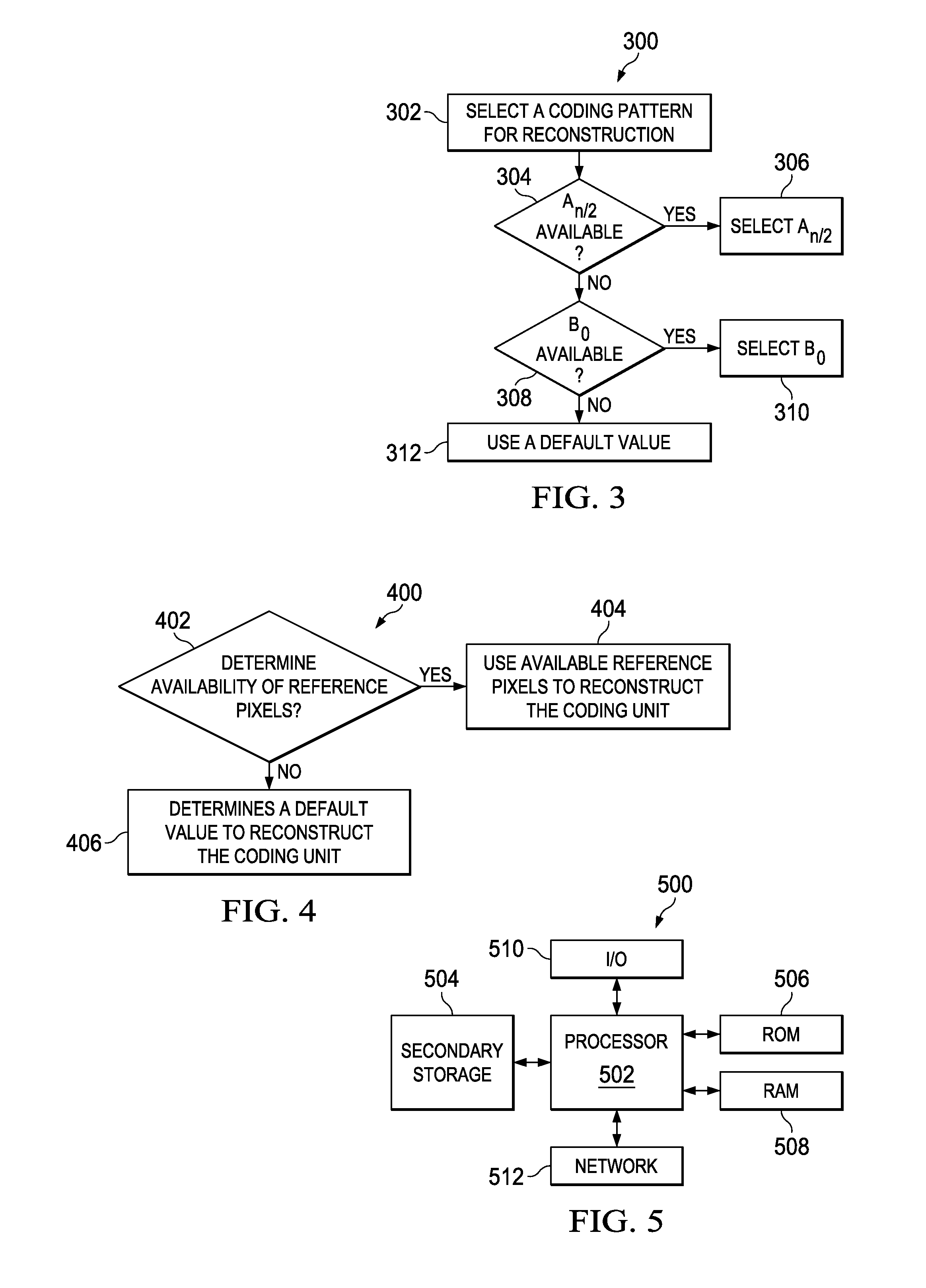

System and Method for Depth Map Coding for Smooth Depth Map Area

ActiveUS20160105672A1Color television with pulse code modulationColor television with bandwidth reductionSingle sampleComputer architecture

A method for coding a coding unit that is coded with a single sample value is provided. The method selects a coding pattern from at least two predetermined coding patterns, each of which includes a plurality of already-reconstructed boundary neighboring samples of the coding unit. It then decodes the coding unit according to the value of at least one available boundary neighboring sample in the selected coding pattern.
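The decoding rule fills the whole coding unit with the value of an available neighboring sample from the selected pattern. A minimal Python sketch follows. Four details are assumptions:
- **Patterns.** The two patterns and the position names (`"left_mid"`, `"above_mid"`) are hypothetical.
- **Selection rule.** The first available position in the pattern is used.
- **Fallback.** If no listed neighbor is available, the mid-range value `1 << (bit_depth - 1)` is used.

None of these details are given in the abstract.

```python
# Hypothetical coding patterns: each lists neighbor positions to try, in order.
PATTERNS = {
    0: ("left_mid", "above_mid"),
    1: ("above_mid", "left_mid"),
}


def decode_single_sample_cu(size, pattern_idx, neighbors, bit_depth=8):
    """Fill a size x size CU with one value taken from the selected pattern.

    neighbors maps a position name to its reconstructed sample value, or None
    when that neighbor is unavailable. The mid-range fallback is an assumption.
    """
    value = 1 << (bit_depth - 1)
    for pos in PATTERNS[pattern_idx]:
        if neighbors.get(pos) is not None:
            value = neighbors[pos]
            break
    return [[value] * size for _ in range(size)]
```

Only the pattern index needs to be signaled, because the value itself is copied from samples the decoder has already reconstructed.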

Owner:FUTUREWEI TECH INC +1

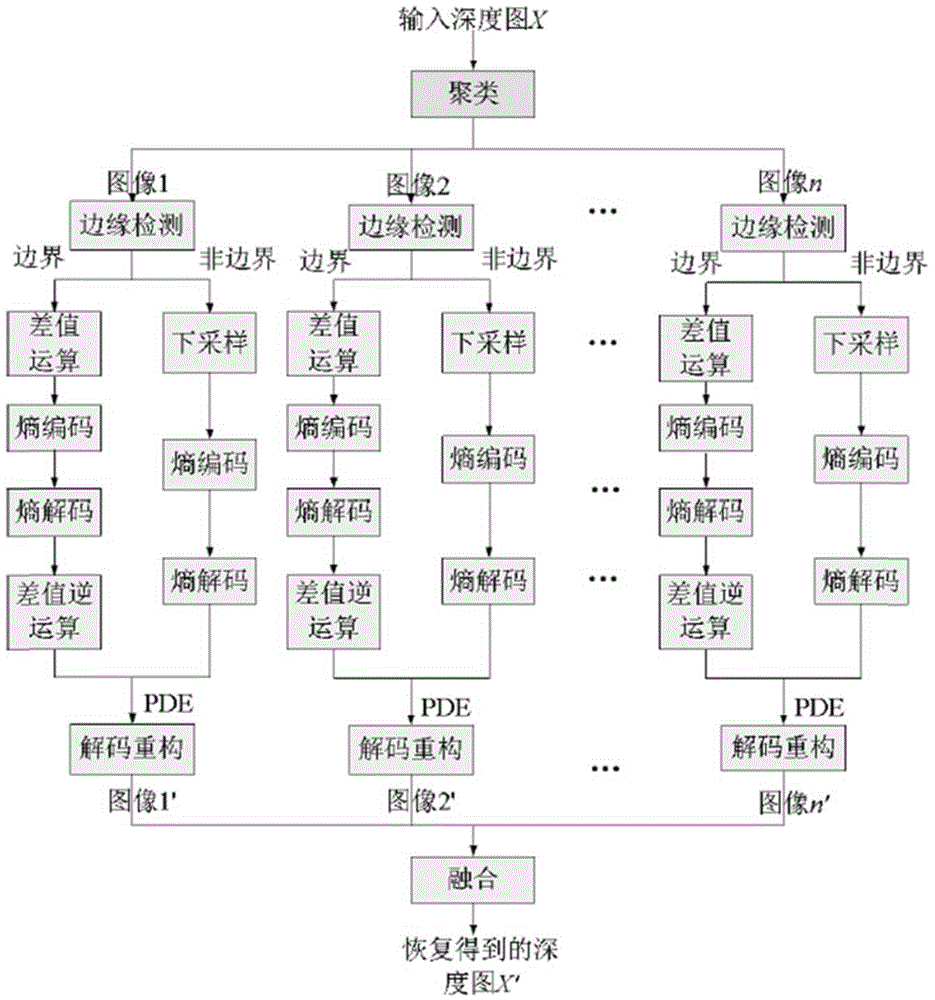

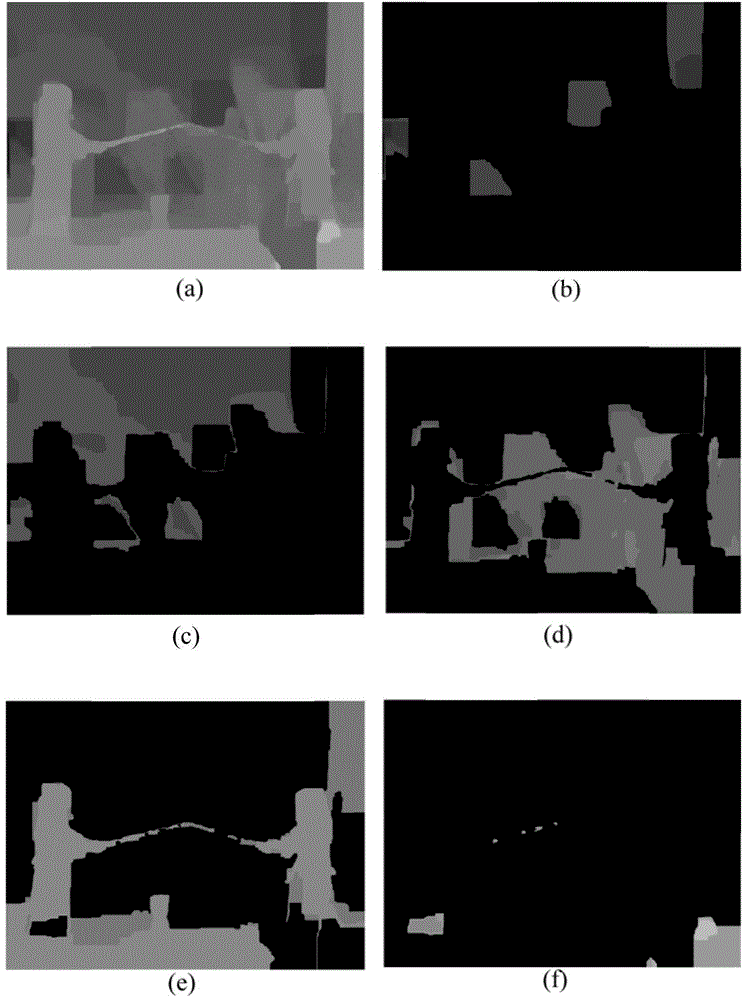

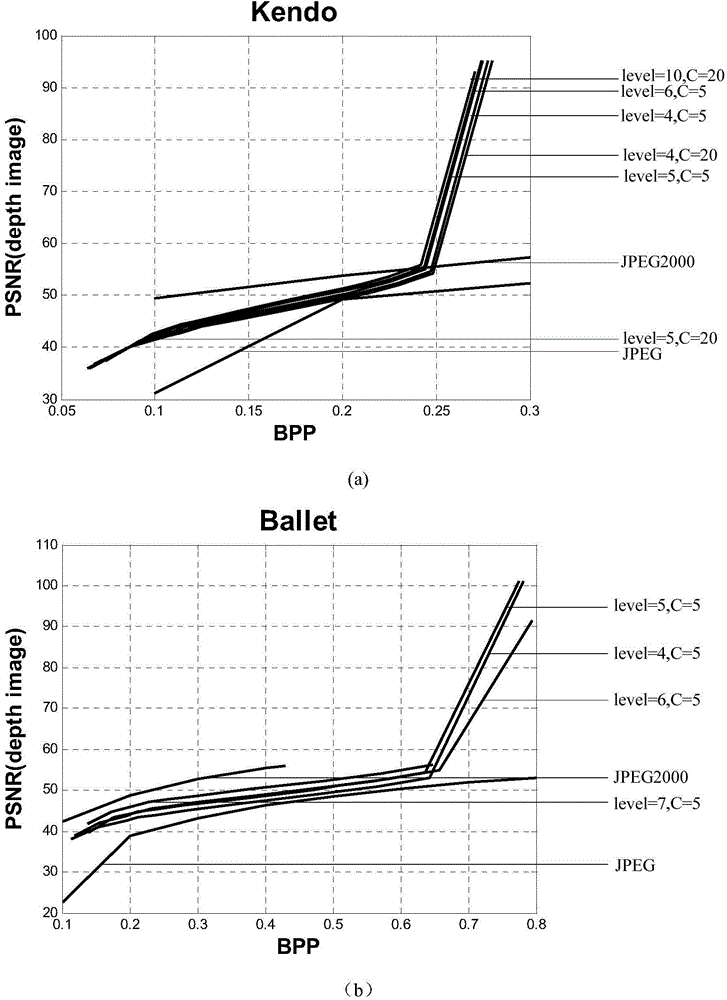

K-means clustering based depth image encoding method

ActiveCN104883558AQuality improvementEfficient compressionDigital video signal modificationStereoscopic systemsPattern recognitionViewpoints

The invention relates to a K-means clustering based depth image encoding method and belongs to the field of depth image encoding and decoding for 3D video. The method comprises the following steps:
- segmenting a depth image into n classes using K-means clustering;
- extracting the boundary of each new depth image formed by one class after segmentation, entropy encoding the boundaries, and transmitting them to the decoder;
- downsampling the pixel values of non-boundary regions and entropy encoding the downsampled values;
- transmitting the encoded bitstream to the decoder;
- at the decoder, recovering the data of each class with a partial differential equation (PDE) method to obtain n reconstructed depth images;
- superimposing the n recovered depth images to form a complete depth image;
- synthesizing the required virtual viewpoint image using depth-image-based view synthesis.

The advantage is that a virtual viewpoint synthesized from a depth image compressed with this scheme has higher quality than one synthesized from a depth image compressed with the JPEG or JPEG 2000 standard.
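The abstract names only "a partial differential equation (PDE) method" for the decoder-side recovery. A common choice, assumed here, is homogeneous diffusion: unknown pixels are solved from the Laplace equation while the transmitted boundary and downsampled samples stay fixed. A minimal Python sketch using Jacobi iteration follows.

```python
def diffuse_fill(values, known, iters=2000):
    """Fill unknown pixels by Jacobi iterations of the Laplace equation.

    values: 2-D list of numbers (unknown entries may hold any initial guess)
    known:  2-D list of bools; True entries are transmitted samples, kept fixed
    Out-of-image neighbors are ignored, i.e. a zero-flux image border.
    """
    h, w = len(values), len(values[0])
    cur = [list(map(float, row)) for row in values]
    for _ in range(iters):
        nxt = [row[:] for row in cur]
        for y in range(h):
            for x in range(w):
                if known[y][x]:
                    continue
                nbrs = [cur[yy][xx]
                        for yy, xx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1))
                        if 0 <= yy < h and 0 <= xx < w]
                # Discrete Laplace equation: each unknown equals its neighbors' mean.
                nxt[y][x] = sum(nbrs) / len(nbrs)
        cur = nxt
    return cur
```

Diffusion suits this scheme because each class region is smooth inside. The sharp discontinuities are carried by the separately coded boundaries rather than reconstructed by the PDE.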

Owner:TAIYUAN UNIVERSITY OF SCIENCE AND TECHNOLOGY

© 2025 PatSnap. All rights reserved.