Edge-based depth video coding method for virtual view rendering

A depth video coding technology, applied in the fields of digital video signal modification, electrical components, and image communication. It addresses the limited coding-efficiency gains of existing methods, and it protects edge information, shortens coding time, and improves quality.


Specific Embodiments

[0037] One implementation of the invention is as follows.

[0038] The present invention uses the H.264/AVC reference encoder JM18.0 and the View Synthesis Reference Software VSRS3.5 as experimental platforms. The test sequences are listed in Figure 1, a table of the parameters of the Akko&Kayo, Breakdancers, and Ballet sequences. The three sequences are 50, 100, and 100 frames long, with resolutions of 640×480, 1024×768, and 1024×768, respectively. The coded viewpoints are viewpoints 27 and 29 for Akko&Kayo, and viewpoints 0 and 2 for both Breakdancers and Ballet.

[0039] Referring to Figure 2, the edge-based depth video coding method for virtual view rendering of the present invention comprises the following steps:

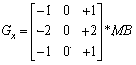

[0040] (1) Edge detection: apply the Sobel edge detection algorithm to each macroblock (MB) of the depth map, a...
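Step (1) is truncated in the source, so the exact edge criterion is not given. The sketch below shows one common way to run Sobel edge detection per macroblock of a depth map, assuming 16×16 macroblocks (the H.264 size used by JM), an |Gx| + |Gy| approximation of the gradient magnitude, and a hypothetical threshold `EDGE_THRESHOLD`. The function names and the "any edge pixel marks the MB as an edge MB" rule are illustrative assumptions, not the patent's method.

```python
from typing import List

MB_SIZE = 16          # H.264 macroblock size in luma samples
EDGE_THRESHOLD = 100  # HYPOTHETICAL threshold, for illustration only

SOBEL_X = ((-1, 0, 1), (-2, 0, 2), (-1, 0, 1))
SOBEL_Y = ((-1, -2, -1), (0, 0, 0), (1, 2, 1))


def sobel_magnitude(depth: List[List[int]], y: int, x: int) -> int:
    """Return |Gx| + |Gy| at (y, x), replicating pixels at the frame border."""
    h, w = len(depth), len(depth[0])
    gx = gy = 0
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            yy = min(max(y + dy, 0), h - 1)
            xx = min(max(x + dx, 0), w - 1)
            v = depth[yy][xx]
            gx += SOBEL_X[dy + 1][dx + 1] * v
            gy += SOBEL_Y[dy + 1][dx + 1] * v
    return abs(gx) + abs(gy)


def edge_macroblocks(depth: List[List[int]]) -> List[List[bool]]:
    """Flag each MB as an edge MB if any of its pixels exceeds EDGE_THRESHOLD
    (assumed classification rule)."""
    h, w = len(depth), len(depth[0])
    rows, cols = h // MB_SIZE, w // MB_SIZE
    flags = [[False] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            flags[r][c] = any(
                sobel_magnitude(depth, y, x) > EDGE_THRESHOLD
                for y in range(r * MB_SIZE, (r + 1) * MB_SIZE)
                for x in range(c * MB_SIZE, (c + 1) * MB_SIZE)
            )
    return flags


if __name__ == "__main__":
    # Synthetic 48x32 depth map with a vertical depth discontinuity at x = 16.
    depth = [[50 if x < 16 else 200 for x in range(48)] for _ in range(32)]
    print(edge_macroblocks(depth))  # [[True, True, False], [True, True, False]]
```

Because the discontinuity lies on the boundary between the first two MB columns, both of those columns are flagged, while the flat third column is not. Flagging MBs this way lets a coder treat edge MBs (which strongly affect synthesized-view quality) differently from smooth ones.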
