Free viewpoint video depth map coding method and distortion predicting method thereof

A depth map coding and distortion prediction technology, applied in the field of video signal processing. It addresses the limited accuracy of existing estimation models and the large amount of calculation that makes them unfavorable for real-time systems, achieving reduced computational complexity and improved accuracy.

Embodiment Construction

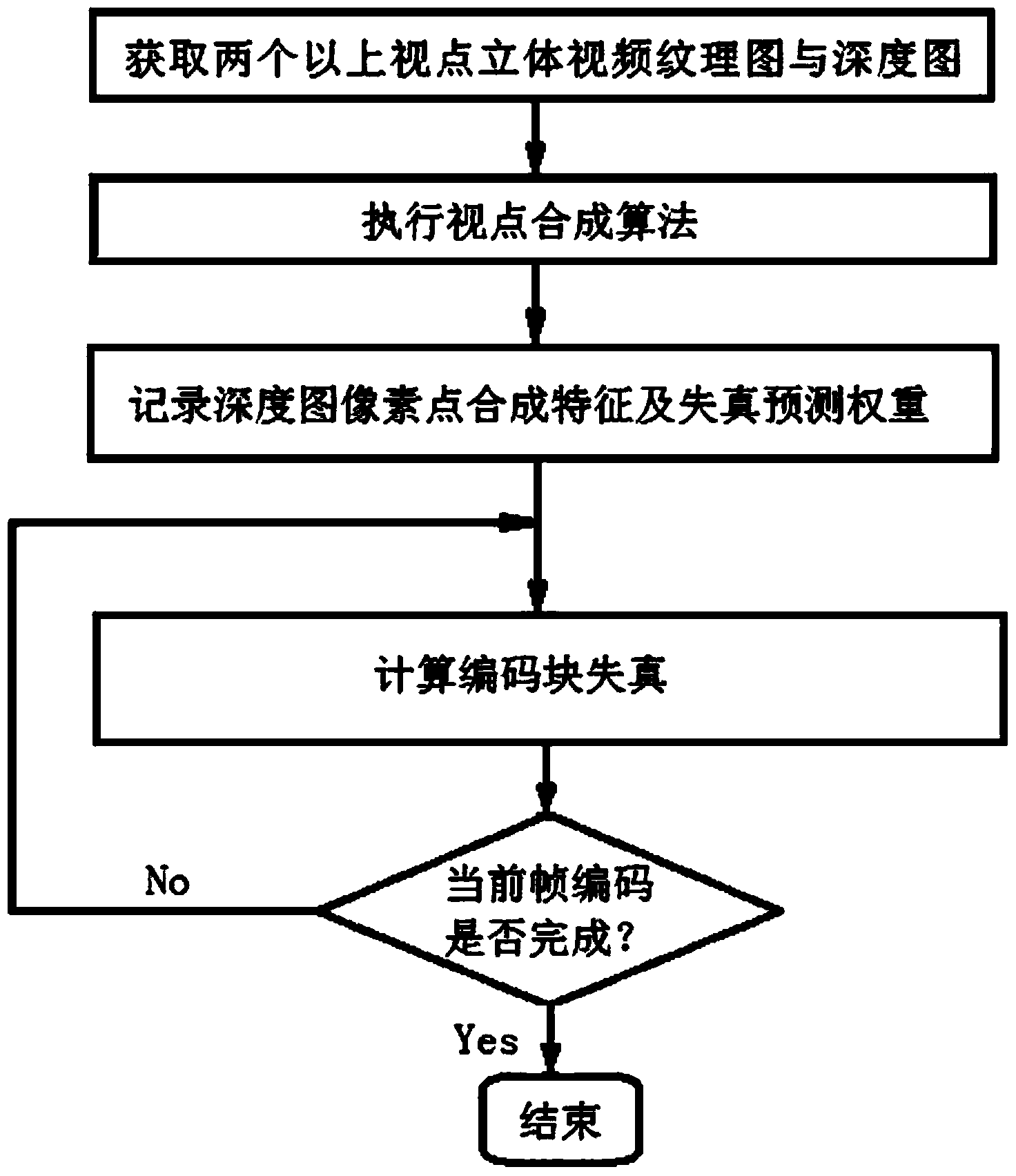

[0039] The main idea of the present invention is to perform viewpoint pre-synthesis only once. Current general methods repeatedly execute the viewpoint synthesis algorithm during encoding; the method of the present invention instead runs the synthesis algorithm a single time, records certain information, and thereafter only needs to repeatedly execute a simplified algorithm. From this single pre-synthesis, the synthesis features and distortion prediction weights of the coded depth map pixels are obtained and then mapped to generate a depth map coding distortion prediction model. Because synthesis feature data reflecting the impact of lossy depth map compression on viewpoint synthesis are effectively defined and used, repeated execution of the synthesis algorithm during depth map coding is avoided. The present invention can therefore significantly improve the accuracy of depth map distortion prediction in free viewpoint video depth map coding while substantially reducing the computational complexity of...
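The split between a one-time pass and a cheap repeated step can be illustrated with a minimal Python sketch. It is not the patented model: the excerpt does not define the synthesis features or the weights, so the sketch assumes a hypothetical feature (the horizontal texture gradient) and a hypothetical weight (the squared product of that gradient and an assumed disparity-per-depth-level factor). The function names `precompute_weights` and `predict_distortion` and the parameter `disparity_per_level` are likewise illustrative.

```python
import numpy as np


def precompute_weights(texture, disparity_per_level):
    """One-time pass, run before encoding.

    ASSUMPTION: the per-pixel "synthesis feature" is the horizontal texture
    gradient, and the weight is (disparity_per_level * gradient) ** 2.
    The source text does not specify either quantity.
    """
    tex = np.asarray(texture, dtype=np.float64)
    # Central differences inside the row, one-sided differences at the edges.
    grad = np.gradient(tex, axis=1)
    return (disparity_per_level * grad) ** 2


def predict_distortion(weights, depth_orig, depth_coded):
    """Cheap step, repeated for every candidate coding of a block.

    Predicts synthesis distortion as a weighted sum of squared depth errors,
    without running view synthesis again.
    """
    err = np.asarray(depth_orig, dtype=np.float64) - np.asarray(depth_coded, dtype=np.float64)
    return float(np.sum(weights * err ** 2))


if __name__ == "__main__":
    texture = np.array([[0, 10, 20, 30]])
    weights = precompute_weights(texture, disparity_per_level=0.1)

    depth_orig = np.array([[100, 100, 100, 100]])
    # Two hypothetical candidate reconstructions of the same depth block.
    candidate_a = np.array([[100, 102, 100, 99]])
    candidate_b = np.array([[100, 100, 100, 100]])

    print(predict_distortion(weights, depth_orig, candidate_a))
    print(predict_distortion(weights, depth_orig, candidate_b))
```

In this sketch the encoder would call `precompute_weights` once per frame and `predict_distortion` once per candidate coding mode, which is the source of the complexity reduction the paragraph describes.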
