K-means clustering based depth image encoding method

A depth map coding technology, applied in the field of clustering-based depth map coding, that can address problems such as high bandwidth pressure, gray-level differences, and insufficient analysis of depth map characteristics.

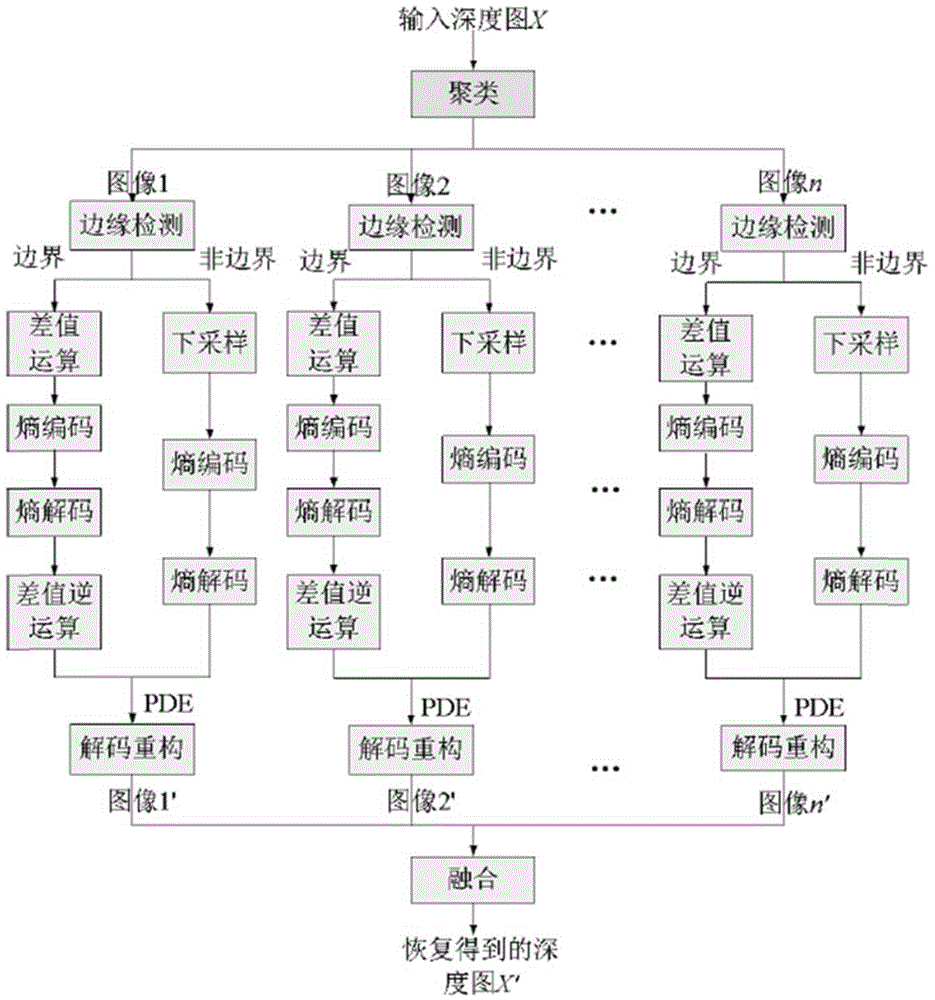

Embodiment Construction

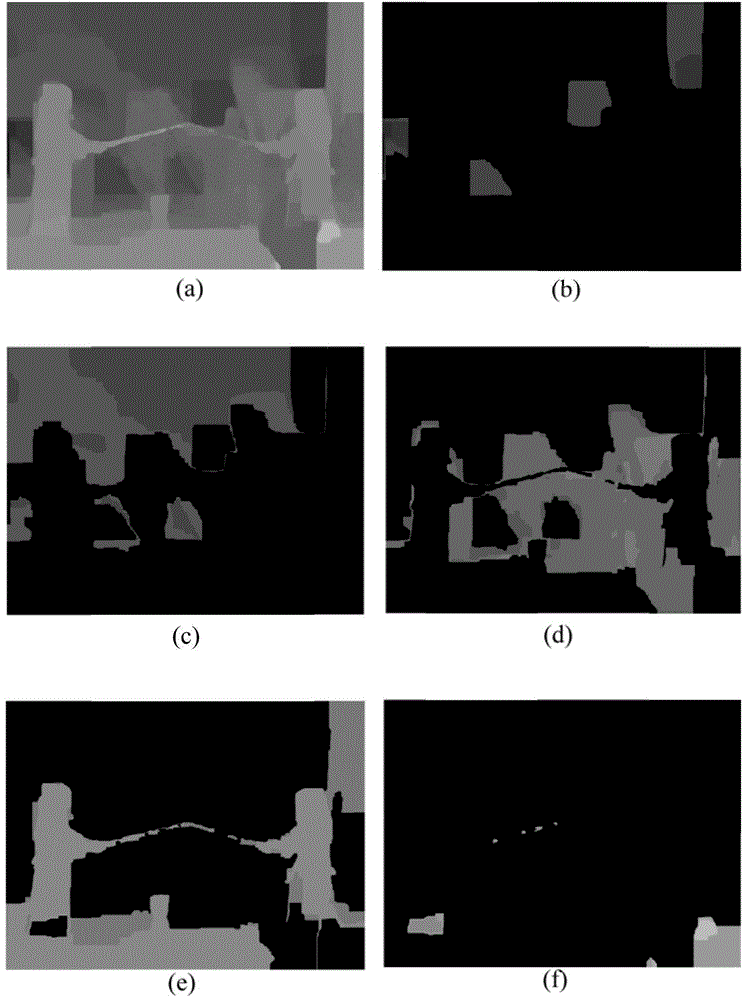

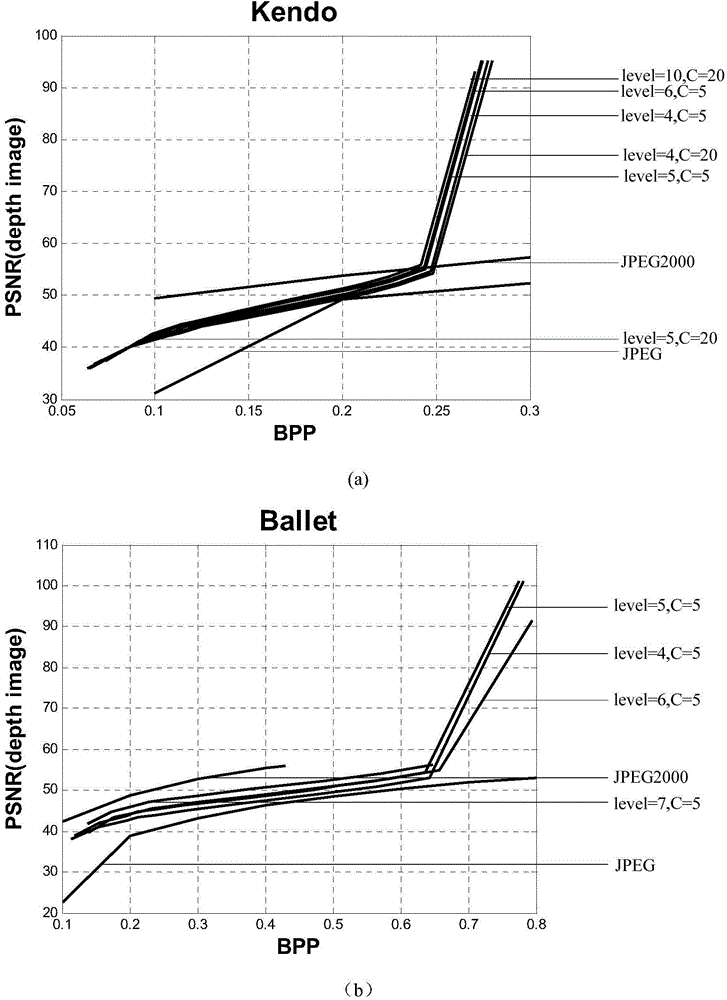

[0028] As shown in Figure 2, this example uses three 1024×768 depth images, Kendo, BookArrival and Ballet, as test images. Kendo and BookArrival are taken from viewpoint 1 and viewpoint 8 of their multi-view depth map sequences, and Ballet is taken from viewpoint 4 of its multi-view depth map sequence. 3D mapping and median filtering are used when synthesizing viewpoints with depth-image-based rendering (DIBR).

[0029] The specific steps are as follows:

[0030] Step 1: Read in the depth map Kendo and cluster it into 5 classes, using clustering level = 5 and C = 5 cluster centers. After clustering, the depth map Kendo is split into 5 new images. The specific method is: create a zero matrix A1 with the same dimensions as the original image, and assign to A1 the original depth values at the positions of the pixels that fall in the first class after clustering. This forms the first new depth map D1, and so on until all classes...
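The split described in Step 1 can be sketched as follows. This is a minimal illustration, not the patented implementation: the text does not state how K-means is initialized or which features are clustered, so the sketch assumes clustering on the depth values alone, with centers initially spread evenly over the value range. The names `kmeans_1d` and `split_into_layers` are hypothetical.

```python
import numpy as np

def kmeans_1d(values, k, iters=50):
    """Plain Lloyd's k-means on scalar depth values; returns (labels, centers)."""
    values = values.astype(np.float64)
    # Assumed initialization: centers spread evenly over the depth range.
    centers = np.linspace(values.min(), values.max(), k)
    for _ in range(iters):
        labels = np.argmin(np.abs(values[:, None] - centers[None, :]), axis=1)
        new_centers = centers.copy()
        for j in range(k):
            members = values[labels == j]
            if members.size:                  # leave empty clusters where they are
                new_centers[j] = members.mean()
        converged = np.allclose(new_centers, centers)
        centers = new_centers
        if converged:
            break
    # Final assignment against the final centers.
    labels = np.argmin(np.abs(values[:, None] - centers[None, :]), axis=1)
    return labels, centers

def split_into_layers(depth, k=5):
    """Cluster a depth map into k classes and build one new depth map per class."""
    labels, centers = kmeans_1d(depth.ravel(), k)
    labels = labels.reshape(depth.shape)
    layers = []
    for j in range(k):
        layer = np.zeros_like(depth)          # zero matrix A_j, same size as the original
        mask = labels == j                    # pixel positions belonging to class j
        layer[mask] = depth[mask]             # copy the original depth values
        layers.append(layer)                  # new depth map D_j
    return layers, labels, centers

# Tiny stand-in for a depth map such as Kendo (values are made up).
depth = np.array([[10, 12, 60, 62],
                  [110, 112, 160, 162],
                  [210, 212, 10, 60]], dtype=np.uint8)
layers, labels, centers = split_into_layers(depth, k=5)
```

Because each pixel belongs to exactly one class, the layers D1 to D5 do not overlap, and adding them together recovers the original depth map.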
