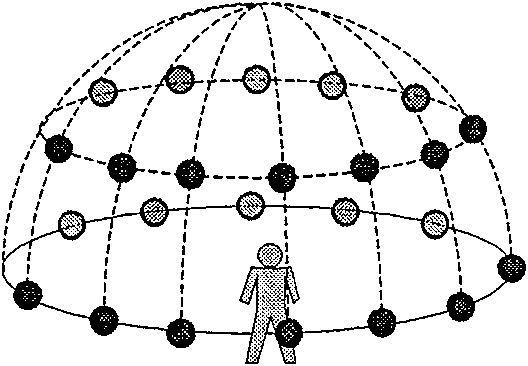

A viewpoint-independent human action recognition method based on template matching

A technology of human action recognition and template matching, applied in the field of human action recognition

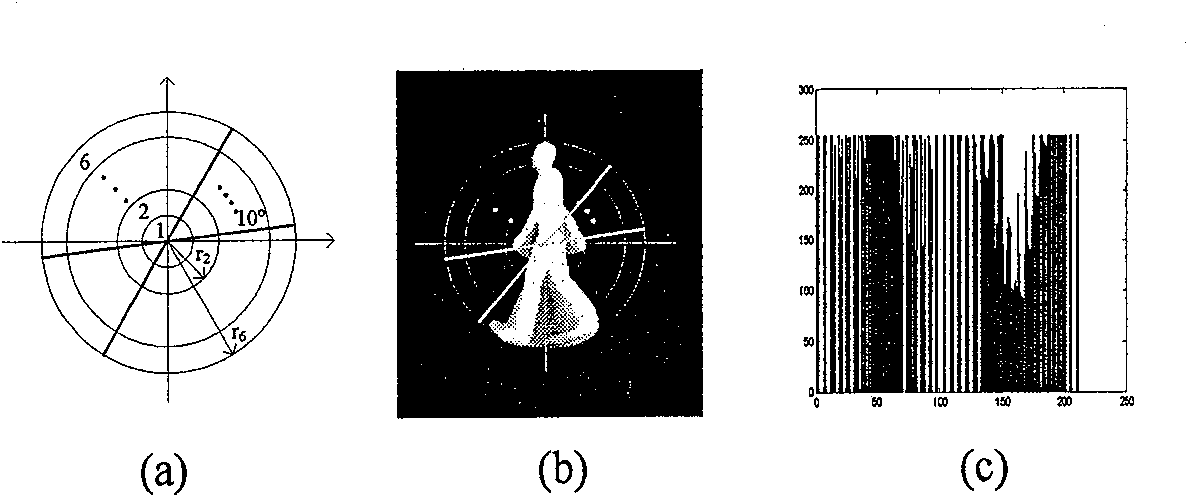

Examples

Embodiment 1

[0062] An example of action recognition based on a synthetic data set:

[0063] In this dataset, each action has 18 different instances. Since one instance has already been selected for the action template, the remaining 17 can be used as test cases. The algorithm of the present invention is compared with two others: the temporal template matching method proposed by Bobick et al. and the k-nearest neighbor (kNN) classification method. In the kNN method, we still extract the multi-viewpoint polar coordinate features of the sample actions when constructing the template data and use the nonlinear dimensionality reduction method to map them to the four-dimensional subspace, but at classification time the hypersphere-based method is replaced by kNN classification. Since the method proposed by Bobick is viewpoint-dependent, 24 temporal templates are constructed for this method from the same sample actions (these sample actions are also used to ...
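The difference between the two classification rules compared above can be illustrated with a minimal sketch. It assumes each action has already been mapped to a point in the four-dimensional subspace. The hypersphere rule shown here (one center and radius per action, with the query assigned to the sphere it falls inside) is only one plausible reading of "the method based on the hypersphere", not the patent's confirmed procedure. All names and the toy data (`SAMPLES`, `SPHERES`, `knn_classify`, `hypersphere_classify`) are hypothetical.

```python
import math
from collections import Counter


def euclidean(a, b):
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def knn_classify(query, samples, k=3):
    """Majority vote among the k labelled samples closest to the query.

    samples: list of (feature_vector, label) pairs.
    """
    nearest = sorted(samples, key=lambda s: euclidean(query, s[0]))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


def hypersphere_classify(query, spheres):
    """Assumed hypersphere rule: return the label of the sphere containing
    the query with the smallest distance-to-radius ratio, or None if the
    query lies outside every sphere.

    spheres: dict mapping label -> (center, radius).
    """
    best_label, best_score = None, float("inf")
    for label, (center, radius) in spheres.items():
        score = euclidean(query, center) / radius
        if score <= 1.0 and score < best_score:
            best_label, best_score = label, score
    return best_label


# Toy 4-D embeddings (invented for illustration only).
SAMPLES = [
    ((0.0, 0.0, 0.0, 0.0), "walk"),
    ((0.1, 0.0, 0.1, 0.0), "walk"),
    ((0.0, 0.1, 0.0, 0.1), "walk"),
    ((1.0, 1.0, 1.0, 1.0), "kick"),
    ((0.9, 1.0, 1.1, 1.0), "kick"),
    ((1.0, 0.9, 1.0, 1.1), "kick"),
]
SPHERES = {
    "walk": ((0.0, 0.0, 0.0, 0.0), 0.5),
    "kick": ((1.0, 1.0, 1.0, 1.0), 0.5),
}

if __name__ == "__main__":
    query = (0.2, 0.1, 0.0, 0.1)
    print(knn_classify(query, SAMPLES, k=3))
    print(hypersphere_classify(query, SPHERES))
```

One design difference is visible even in this toy setting: kNN always returns some label, while a hypersphere rule of this kind can reject a query that lies far from every template.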

Embodiment 2

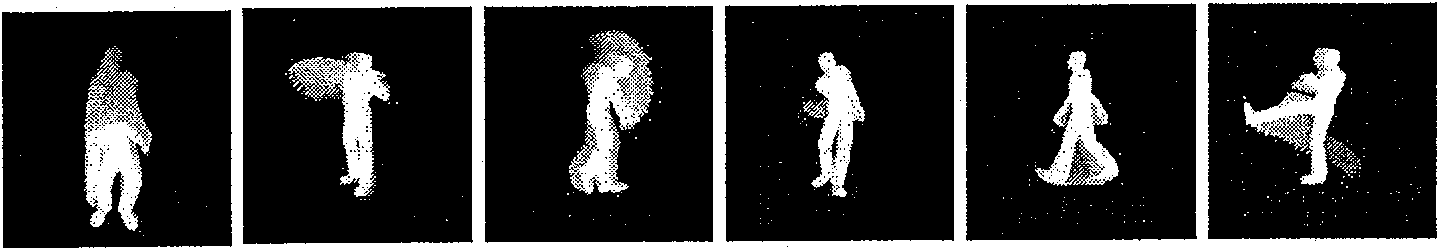

[0065] Example of action recognition based on public data sets:

[0066] In Embodiment 1, Bobick's method performed similarly to the method of the present invention because the viewpoint of the input action was strictly constrained to match the viewpoint of the action template. The second group of tests compares how well Bobick's method and the method of the present invention generalize. We run both algorithms on the IXMAS dataset, which is publicly available for download from the INRIA PERCEPTION site. This dataset contains 13 daily human actions, each performed 3 times by 11 actors. The actors freely change their orientation while performing, so the data reflects viewpoint independence. Each action therefore has 33 test cases to choose from. We selected four actions (walking, punching, kicking and squatting) under 5 free viewpoints in the IXMAS dataset, and calculated their motion history images and polar coordinate features as input to the ...
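The motion history representation mentioned above can be sketched with the standard recurrence from Bobick and Davis: a pixel is set to a duration value tau wherever motion is detected in the current frame and otherwise decays by one per frame, down to zero. The sketch below is a minimal pure-Python version that assumes the per-frame binary motion masks are already available. The function name `motion_history_image` and the toy strip are hypothetical, and the patent's own preprocessing may differ.

```python
def motion_history_image(motion_masks, tau=None):
    """Compute a motion history image from binary motion masks.

    motion_masks: list of frames, each a 2-D list of 0/1 values.
    tau: value assigned to freshly moving pixels; defaults to the
         number of frames so the oldest motion is still visible.
    Returns a 2-D list of ints where larger values mean more recent motion.
    """
    if not motion_masks:
        raise ValueError("need at least one frame")
    if tau is None:
        tau = len(motion_masks)
    rows, cols = len(motion_masks[0]), len(motion_masks[0][0])
    mhi = [[0] * cols for _ in range(rows)]
    for mask in motion_masks:
        for r in range(rows):
            for c in range(cols):
                if mask[r][c]:
                    mhi[r][c] = tau  # motion now: reset to full intensity
                else:
                    mhi[r][c] = max(0, mhi[r][c] - 1)  # no motion: decay
    return mhi


if __name__ == "__main__":
    # A single-row "image" in which a blob moves one pixel right per frame.
    masks = [
        [[1, 0, 0, 0]],
        [[0, 1, 0, 0]],
        [[0, 0, 1, 0]],
    ]
    print(motion_history_image(masks, tau=3))  # [[1, 2, 3, 0]]
```

The intensity ramp in the result encodes the direction of motion, which is why a single image can summarize a short action segment.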

Embodiment 3

[0068] Embodiment of action recognition based on real video:

[0069] We recorded real videos of other laboratory members in the school parking lot; the moving human silhouettes are obtained by background modeling. A critical issue here is how to extract meaningful "action" segments from a time-ordered collection of human silhouettes to use as algorithm input. We use a segmentation algorithm based on subspace analysis to segment dynamic human movement in the time domain. The silhouettes are pre-segmented every second, that is, every 30 frames at the video frame rate of 30 fps, to obtain initial action segments, and the motion history image and corresponding polar coordinate features of each segment are calculated as the input of the algorithm. The test was repeated 10 times, and the average distance between the action to be recognized and the sample actions in the action template is shown in Figure 6. It can be seen that the method of the present invention is also effective fo...
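The one-second pre-segmentation step described above can be sketched as follows. This only covers the fixed-window split (30 frames at 30 fps). The subspace-analysis segmentation itself is not specified in the text and is not reproduced here. The function name `presegment` and the choice to drop a trailing partial window are assumptions made for illustration.

```python
def presegment(frames, fps=30, window_seconds=1.0):
    """Split a frame sequence into consecutive fixed-length windows.

    frames: any sequence of per-frame data (e.g. silhouettes).
    Returns a list of windows, each holding fps * window_seconds frames.
    A trailing partial window is dropped (an assumption of this sketch).
    """
    size = int(round(fps * window_seconds))
    if size <= 0:
        raise ValueError("window must contain at least one frame")
    return [
        frames[start:start + size]
        for start in range(0, len(frames) - size + 1, size)
    ]


if __name__ == "__main__":
    # Frame indices stand in for silhouettes; 95 frames is a little over 3 s.
    windows = presegment(list(range(95)), fps=30)
    print(len(windows), [len(w) for w in windows])  # 3 [30, 30, 30]
```

Each resulting window would then be summarized by its motion history image and polar coordinate features before matching against the action template.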
