Pronunciation quality assessment and error detection method based on fusion of multiple characteristics and multiple systems

A pronunciation quality assessment and error detection technology, applied in fields such as speech analysis, speech recognition, and instruments.

Examples

Example 1

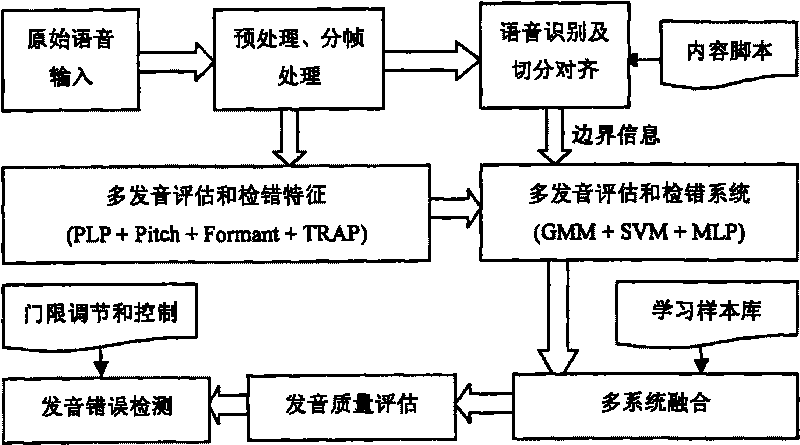

[0119] As shown in Figures 1 to 6, the first embodiment of the present invention assesses pronunciation quality and detects errors for vocabulary items. Its steps are:

[0120] Step 101: the user reads aloud the vocabulary item to be assessed and checked;

[0121] Step 102: the raw speech is preprocessed with a frame length of 25 ms and a frame shift of 10 ms, and the processing is repeated frame by frame until the speech signal ends;
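The framing in Step 102 can be illustrated with a minimal sketch. The 25 ms frame length and 10 ms frame shift come from the text; the 16 kHz sampling rate, the function name `frame_signal`, and the choice to drop a trailing partial frame are assumptions made only for this illustration, since the source does not state them.

```python
def frame_signal(samples, sample_rate, frame_ms=25, shift_ms=10):
    """Split a sequence of samples into overlapping fixed-length frames.

    frame_ms and shift_ms follow Step 102 (25 ms frames every 10 ms).
    Assumption: a trailing partial frame is dropped rather than padded.
    """
    frame_len = int(round(sample_rate * frame_ms / 1000))
    shift = int(round(sample_rate * shift_ms / 1000))
    frames = []
    start = 0
    # Repeat until the remaining signal is shorter than one frame.
    while start + frame_len <= len(samples):
        frames.append(samples[start:start + frame_len])
        start += shift
    return frames


# One second of dummy audio at an assumed 16 kHz sampling rate.
sample_rate = 16000
samples = list(range(sample_rate))
frames = frame_signal(samples, sample_rate)
print(len(frames), len(frames[0]))
```

At 16 kHz a 25 ms frame holds 400 samples and consecutive frames start 160 samples apart, so adjacent frames overlap by 15 ms.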

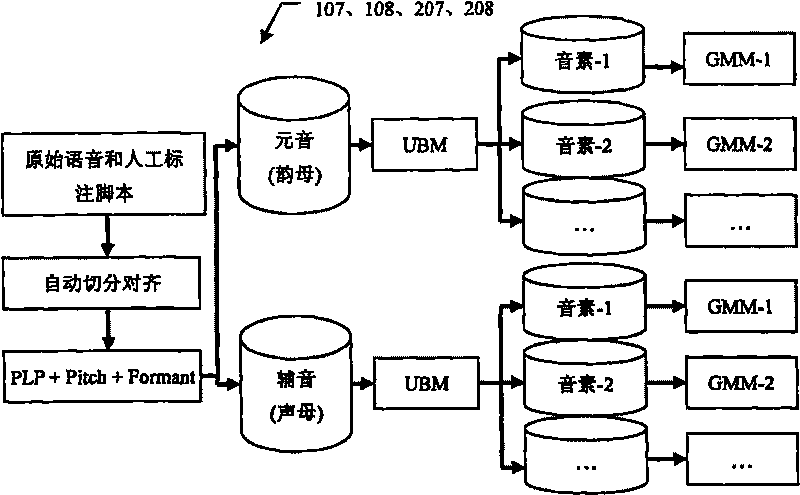

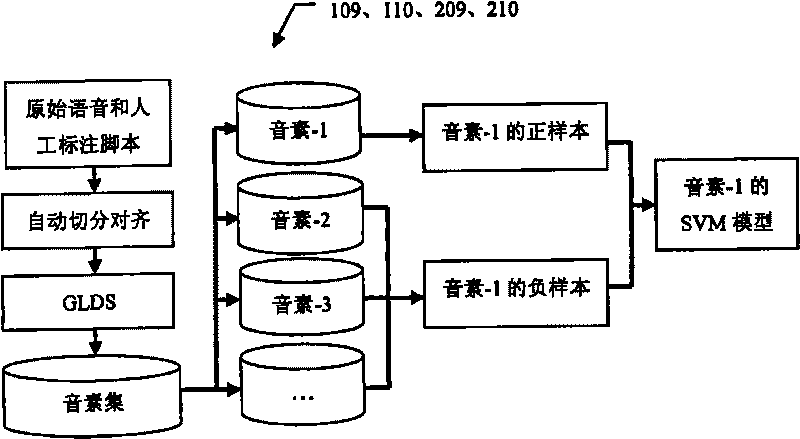

[0122] Step 103: because the content of the vocabulary item is known, the speech is automatically segmented and aligned, and the speech recognition stage is omitted for vocabulary speech. The pronunciation models used for segmentation and alignment are trained on a large amount of manually labeled Chinese or English corpus data. English uses 44 phoneme pronunciation models (20 vowels, 24 consonants), and Chinese uses 61 phoneme pronunciation models (36 finals, 25 initials, including the zero initial). The tra...
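Segmentation and alignment against known content is commonly done with a Viterbi search that assigns each frame to one phoneme of the known sequence, in order. The sketch below is one such illustration and is not taken from the patent: the function name `forced_align`, the per-frame log-likelihood table, and the rule that each phoneme covers at least one frame are all assumptions. In a real system the scores would come from the trained pronunciation models.

```python
def forced_align(log_likes):
    """Align frames to a known phoneme sequence with a left-to-right Viterbi pass.

    log_likes[t][k] is the (hypothetical) log-likelihood of frame t under the
    k-th phoneme of the known sequence. Returns, for each frame, the index of
    the phoneme it is assigned to. Assumption: every phoneme gets >= 1 frame.
    """
    num_frames, num_phones = len(log_likes), len(log_likes[0])
    neg_inf = float("-inf")
    score = [[neg_inf] * num_phones for _ in range(num_frames)]
    back = [[0] * num_phones for _ in range(num_frames)]
    score[0][0] = log_likes[0][0]  # the path must start in the first phoneme
    for t in range(1, num_frames):
        for k in range(num_phones):
            stay = score[t - 1][k]
            move = score[t - 1][k - 1] if k > 0 else neg_inf
            if move > stay:
                score[t][k] = move + log_likes[t][k]
                back[t][k] = k - 1
            else:
                score[t][k] = stay + log_likes[t][k]
                back[t][k] = k
    if score[-1][-1] == neg_inf:
        raise ValueError("fewer frames than phonemes; no valid alignment")
    # Trace back from the last phoneme at the last frame.
    path = [num_phones - 1]
    for t in range(num_frames - 1, 0, -1):
        path.append(back[t][path[-1]])
    path.reverse()
    return path


# Toy scores: frames 0-1 fit the first phoneme, frames 2-4 fit the second.
toy_scores = [[-1, -5], [-1, -5], [-5, -1], [-5, -1], [-5, -1]]
alignment = forced_align(toy_scores)
print(alignment)  # [0, 0, 1, 1, 1]
```

With a 10 ms frame shift, the boundary between the two phonemes in this toy case falls at frame 2, that is, 20 ms into the signal.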

Example 2

[0141] As shown in Figures 1 to 5 and Figure 7, the second embodiment of the present invention assesses pronunciation quality and detects errors for the paragraph-reading question type in a spoken English test. Its steps are:

[0142] Step 201: the examinee reads aloud the English paragraph to be assessed and checked;

[0143] Step 202: the raw speech is preprocessed with a frame length of 25 ms and a frame shift of 10 ms, and the processing is repeated frame by frame until the speech signal ends;

[0144] Step 203: with the content of the paragraph known, speech recognition and automatic segmentation and alignment are performed. The pronunciation models, 44 phoneme models in total (20 vowels, 24 consonants), are trained on a large amount of manually labeled English corpus data. Using the BEEP dictionary with pronunciation variants, the language model required in the recognition process is generated using known paragraph content scr...
