Apparatus and method for objective perimetry visual field test

An objective perimetry technology, applied in the field of visual field test equipment, that can address problems such as aggravated fatigue, difficulty in maintaining gaze, and long test times.

Embodiment Construction

[0045] Throughout the following description, specific details are set forth in order to provide those skilled in the art with a thorough understanding of the present invention. However, to avoid unnecessarily obscuring the disclosure, some well-known elements have not been shown or described in detail. Accordingly, the description and drawings are to be regarded as illustrative rather than restrictive.

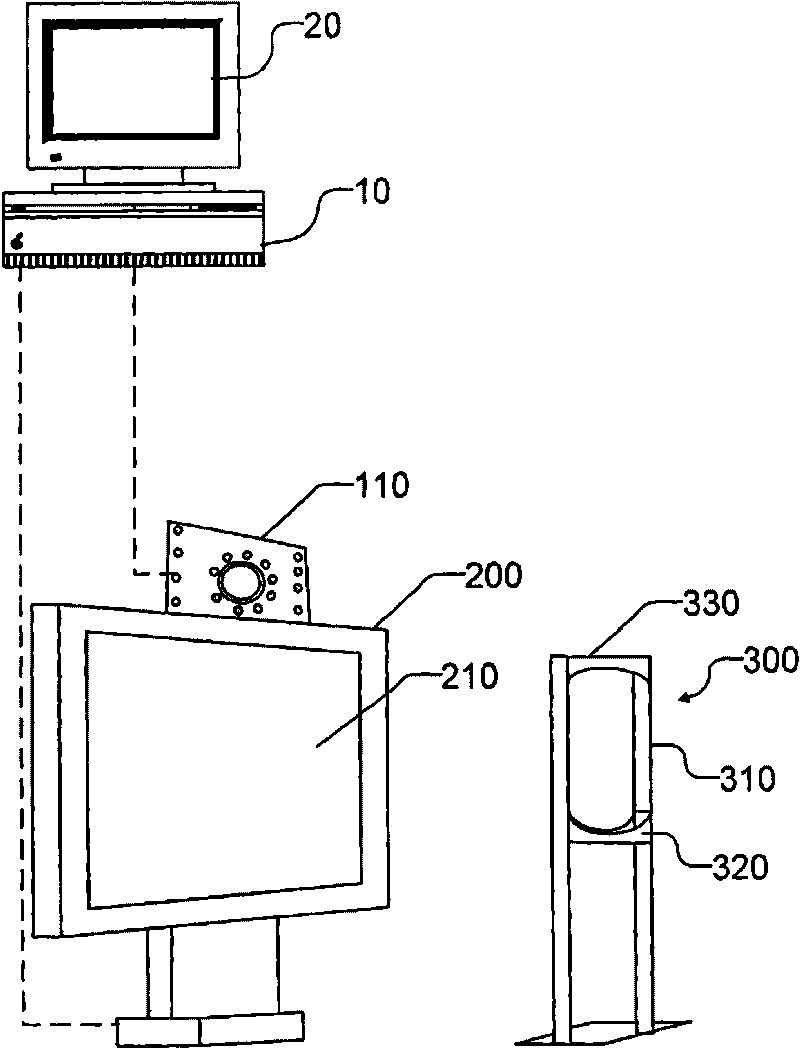

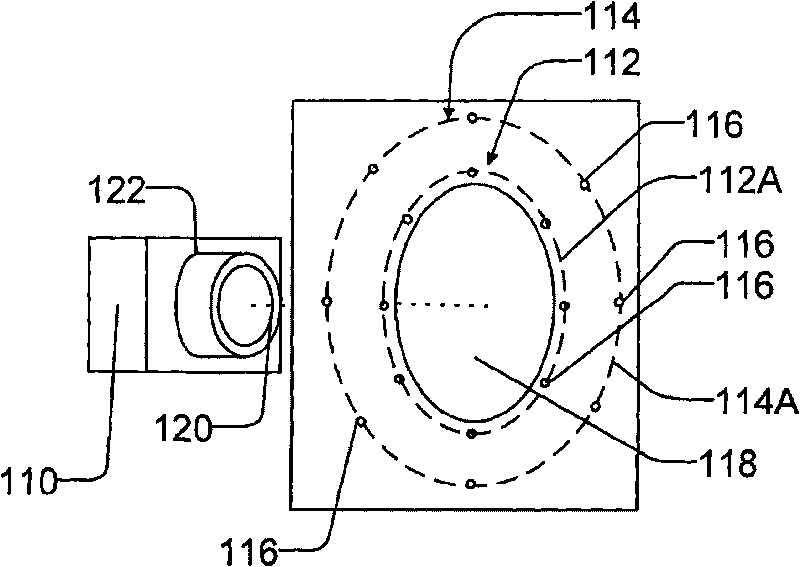

[0046] Referring to Figures 1 to 8, the device 11 according to one embodiment comprises a personal computer 10. The computer 10 may include components and peripherals typically associated with a personal computer. The computer 10 is connected to and controls a main display 200 and an optional second monitor 20. The computer 10 is not limited to a personal computer; it may be any suitable data processing device, such as an embedded processor, microprocessor, application server, network computer, or similar device.

[0047] In the illustrated embodiment, ...
