Cross-Modal Information Retrieval Method Based on a Semantic Association Network

A semantic-association and information-retrieval technology in the field of information retrieval. It addresses the problem that semantic matching has not been improved. Its stated effects are fast cross-modal retrieval, fewer errors and higher retrieval accuracy.

Embodiment Construction

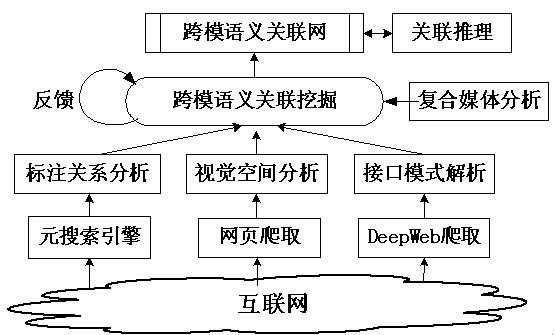

[0056] The present invention proposes a cross-modal information retrieval method based on a semantic association network. Its principle is as follows:

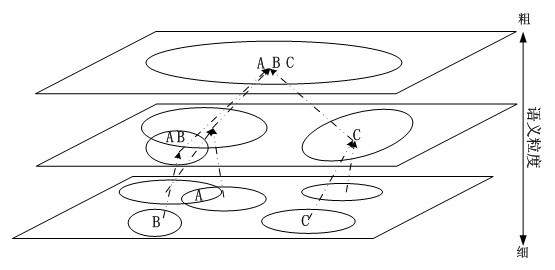

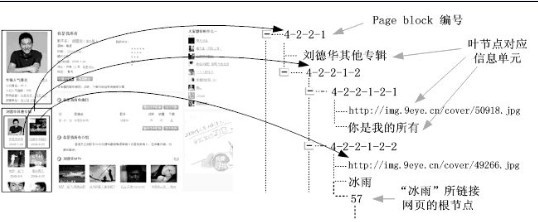

[0057] Traditional multimedia search engines mainly build indexes by vectorizing features and hashing the resulting vectors. They then retrieve results by vector matching. In cross-modal retrieval, however, data of different modalities differ greatly in structure and characteristics, so their feature vectors have different dimensions. Dimensionality reduction can give the vectors of every modality the same dimension. Even so, the meaning of each dimension, and of the feature space as a whole, still differs from one modality to another, so matching vectors directly across modalities is meaningless. Therefore, to achieve cross-modal indexing, this patent uses the previously acquired cross-modal association knowledge and obtains multi-modal data sets with the same semantics at differe...
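The following minimal Python sketch makes the contrast in [0057] concrete. It assumes random-hyperplane locality-sensitive hashing as the "vector hashing" step, although the patent does not name the scheme traditional engines use. `HyperplaneLSH`, `cross_modal_lookup` and the toy concept labels are hypothetical illustrations, not the patented method. The excerpt breaks off before describing how the same-semantics data sets are actually built.

The sketch shows two things. First, a hash index built for one modality only accepts vectors of that modality's dimension. Second, a cross-modal lookup can go through shared semantic labels rather than through direct vector comparison.

```python
import random
from collections import defaultdict


class HyperplaneLSH:
    """Hypothetical single-modality index: random-hyperplane LSH over fixed-dimension vectors."""

    def __init__(self, dim, n_planes=8, seed=0):
        rng = random.Random(seed)
        self.dim = dim
        # Each hyperplane is a random Gaussian normal vector in R^dim.
        self.planes = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_planes)]
        self.buckets = defaultdict(list)

    def signature(self, vec):
        # Vectors from another modality (different dimension) cannot be hashed here.
        if len(vec) != self.dim:
            raise ValueError(f"expected a {self.dim}-d vector, got {len(vec)}-d")
        # One bit per hyperplane: which side of the plane the vector lies on.
        return tuple(int(sum(p * v for p, v in zip(plane, vec)) >= 0) for plane in self.planes)

    def add(self, item_id, vec):
        self.buckets[self.signature(vec)].append(item_id)

    def query(self, vec):
        # Candidates are the stored items whose signature matches exactly.
        return list(self.buckets.get(self.signature(vec), []))


def cross_modal_lookup(query_concepts, concept_index, target_modality):
    """Hypothetical bridge: return target-modality items that share a semantic concept with the query."""
    hits = []
    for concept in query_concepts:
        for modality, item_id in concept_index.get(concept, []):
            if modality == target_modality and item_id not in hits:
                hits.append(item_id)
    return hits


if __name__ == "__main__":
    image_index = HyperplaneLSH(dim=6)
    image_index.add("img_cat", [0.9, 0.1, 0.3, 0.0, 0.5, 0.2])
    print(image_index.query([0.9, 0.1, 0.3, 0.0, 0.5, 0.2]))  # → ['img_cat']

    try:
        image_index.query([0.2, 0.7, 0.1, 0.4])  # a 4-d "text" vector
    except ValueError as err:
        print("cannot match across modalities:", err)

    # Toy semantic associations (hypothetical labels): concept -> (modality, item) pairs.
    concept_index = {
        "cat": [("image", "img_cat"), ("text", "txt_cat_story")],
        "car": [("image", "img_car")],
    }
    print(cross_modal_lookup(["cat"], concept_index, "image"))  # → ['img_cat']
```

Padding or projecting the text vector to 6 dimensions would let `signature` run without error. The resulting bits would still be meaningless, for exactly the reason [0057] gives: the two feature spaces do not share axes. This is why the sketch routes cross-modal queries through semantic labels instead.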
