Method and system for generating a video scene library, and method and system for searching video scenes

A technology relating to video scenes and video files, applied in the field of video search. It addresses the problem that finding a target video scene segment takes a lot of time, and achieves the effect of saving time.

Embodiment 1

[0073] The content, format, type and other attributes of the video files involved in the solution of the present invention do not affect the implementation of the solution of the present invention. In the following example, a general English movie video file is taken as an example, but the implementation of the solution of the present invention is not limited to the video file of an English movie. For example, the present invention is also applicable to Chinese-language movies, other foreign-language movies, and non-movie videos.

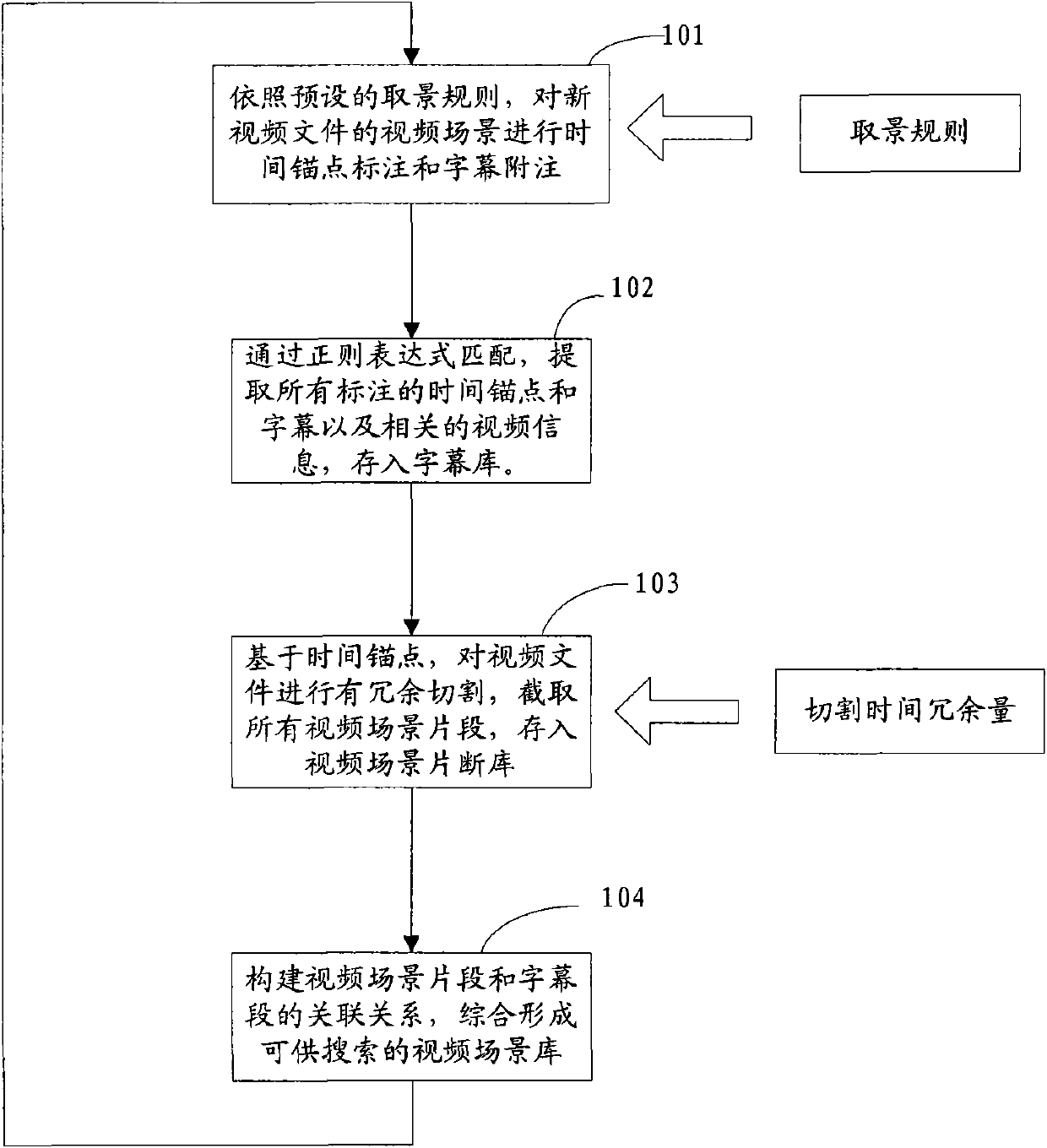

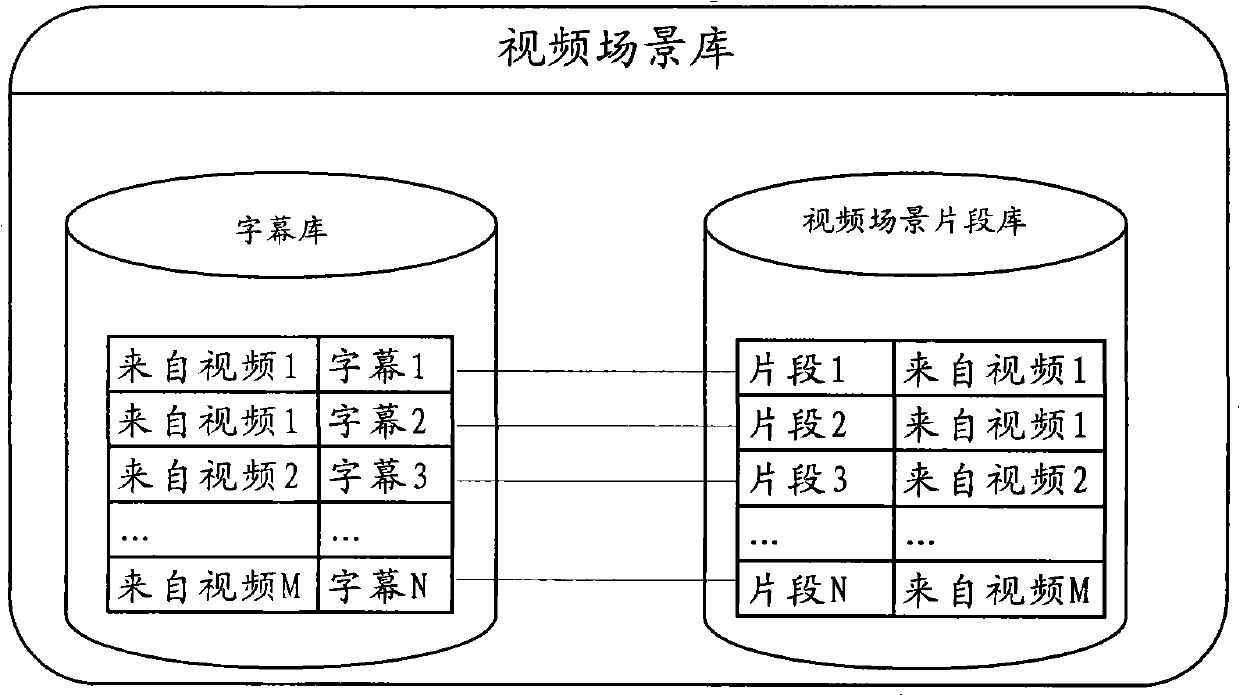

[0074] Referring to Figure 1, which discloses a preferred embodiment of the method for generating the video scene library of the present invention, the method includes the following steps:

[0075] [Step 101]

[0076] According to preset framing rules, each video scene of the video file is marked with time anchors and annotated with subtitles. Typical framing rules include treating each complete dialogue or narration in the video as a scene unit, and treating a specific scene as a scene unit. For a dialogue-type or narration-type video scene, the subtitle content can be the original text of the dialogue or narration, or a synonymous paraphrase or summary of it. For a descriptive video scene, the subtitle content is a scene description tag.
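The annotation produced in Step 101 can be pictured as one record per scene unit. The following is a minimal sketch, not the patent's implementation: the class name `SceneAnnotation`, its field names, the use of seconds for time anchors, and the three `kind` values are all assumptions chosen for illustration.

```python
from dataclasses import dataclass

# Assumed scene kinds: two for spoken content, one for descriptive scenes.
SCENE_KINDS = ("dialogue", "narration", "description")


@dataclass(frozen=True)
class SceneAnnotation:
    """One framed scene unit: a pair of time anchors plus its subtitle content."""

    video_file: str   # hypothetical identifier of the annotated video file
    start_s: float    # start time anchor, in seconds (assumed unit)
    end_s: float      # end time anchor, in seconds (assumed unit)
    subtitle: str     # original text, paraphrase, summary, or description tag
    kind: str         # one of SCENE_KINDS

    def __post_init__(self) -> None:
        if self.start_s < 0:
            raise ValueError("start anchor must not be negative")
        if self.end_s <= self.start_s:
            raise ValueError("end anchor must come after start anchor")
        if self.kind not in SCENE_KINDS:
            raise ValueError(f"unknown scene kind: {self.kind!r}")

    @property
    def duration_s(self) -> float:
        """Length of the scene unit in seconds."""
        return self.end_s - self.start_s


# Illustrative placeholder values only, not taken from any real video.
example = SceneAnnotation("movie.mp4", 12.0, 15.5, "example dialogue line", "dialogue")
print(example.duration_s)
```

Validating the anchors when the record is created keeps malformed scene units out of any later extraction or search step.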

[0077] Take the video file of the film "Forrest Gump" as an example, assuming that the preset framing rule is to frame each complete dialogue or narration in the video, and also to frame s...

Embodiment 2

[0121] This embodiment differs from Embodiment 1 in that no video scene library is constructed. Instead, when the user requests a search for video scene segments, the video file in the data source is cut in real time according to the time anchors corresponding to the subtitle segments found by the search, and the target video scene segment is returned to the user. For the technical details of each step and the working principles of each unit, refer to Embodiment 1; they are not repeated here.
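Cutting a segment on demand from a pair of time anchors can be sketched as follows. This is only an illustration under stated assumptions: the source does not name a cutting tool, so the use of the `ffmpeg` command-line program is an assumption, and the helper names `format_anchor` and `build_cut_command` are hypothetical. The sketch builds the command without running it.

```python
from typing import List


def format_anchor(seconds: float) -> str:
    """Format a time in seconds as HH:MM:SS.mmm."""
    if seconds < 0:
        raise ValueError("time must not be negative")
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}.{ms:03d}"


def build_cut_command(source: str, start_s: float, end_s: float, output: str) -> List[str]:
    """Build an ffmpeg argument list that copies [start_s, end_s) out of source.

    Assumption: ffmpeg is the cutting tool. "-ss" before "-i" seeks in the
    input, "-t" limits the output duration, and "-c copy" avoids re-encoding.
    """
    if end_s <= start_s:
        raise ValueError("end anchor must come after start anchor")
    return [
        "ffmpeg",
        "-ss", format_anchor(start_s),
        "-i", source,
        "-t", format_anchor(end_s - start_s),
        "-c", "copy",
        output,
    ]


# Illustrative placeholder values only.
print(build_cut_command("movie.mp4", 10.0, 12.5, "clip.mp4"))
```

Stream copying is fast enough for on-demand use, but it cuts at keyframes, so the returned segment may start slightly away from the requested anchor. Re-encoding would give frame-accurate cuts at a higher processing cost.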

[0122] A method for directly searching video scene segments, said method comprising the steps of:

[0123] A'. Mark each video scene in the video files in the data source with time anchors and subtitle annotations;

[0124] B'. Extract the marked time anchors and subtitle segments, and store them together with the related video file information in the subtitle library;

[0125] C', the user inputs a keyword to reques...
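The subtitle library and keyword lookup described in these steps can be sketched as a small in-memory store. This is a hedged illustration only: the names `SubtitleEntry` and `SubtitleLibrary` are hypothetical, and case-insensitive substring matching is an assumed search rule, since the source text does not specify how keywords are matched.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class SubtitleEntry:
    """A subtitle segment with its time anchors and video file information."""

    video_file: str  # hypothetical reference to the file in the data source
    start_s: float   # start time anchor, in seconds (assumed unit)
    end_s: float     # end time anchor, in seconds (assumed unit)
    text: str        # subtitle segment text


class SubtitleLibrary:
    """In-memory stand-in for the subtitle library (storage is an assumption)."""

    def __init__(self) -> None:
        self._entries: List[SubtitleEntry] = []

    def add(self, entry: SubtitleEntry) -> None:
        self._entries.append(entry)

    def search(self, keyword: str) -> List[SubtitleEntry]:
        """Return entries whose text contains the keyword, ignoring case."""
        needle = keyword.strip().casefold()
        if not needle:
            return []
        return [e for e in self._entries if needle in e.text.casefold()]


# Illustrative placeholder entries only.
library = SubtitleLibrary()
library.add(SubtitleEntry("movie.mp4", 12.0, 15.5, "Example dialogue about running"))
library.add(SubtitleEntry("movie.mp4", 40.0, 44.0, "A quiet park bench scene"))

for hit in library.search("RUNNING"):
    # Each hit carries the anchors needed to locate the scene in its file.
    print(hit.video_file, hit.start_s, hit.end_s)
```

Because each hit carries the video file reference and both time anchors, the caller has everything needed to locate the matching scene segment in the data source.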
