Robust real-time on-line camera tracking method

A robust camera tracking technology, applied in image communication, computer components, color TV components, etc., that addresses problems such as camera shake, image blur, and insufficient resistance, so as to avoid unreliable estimation, reduce errors, and improve time efficiency.

Embodiment Construction

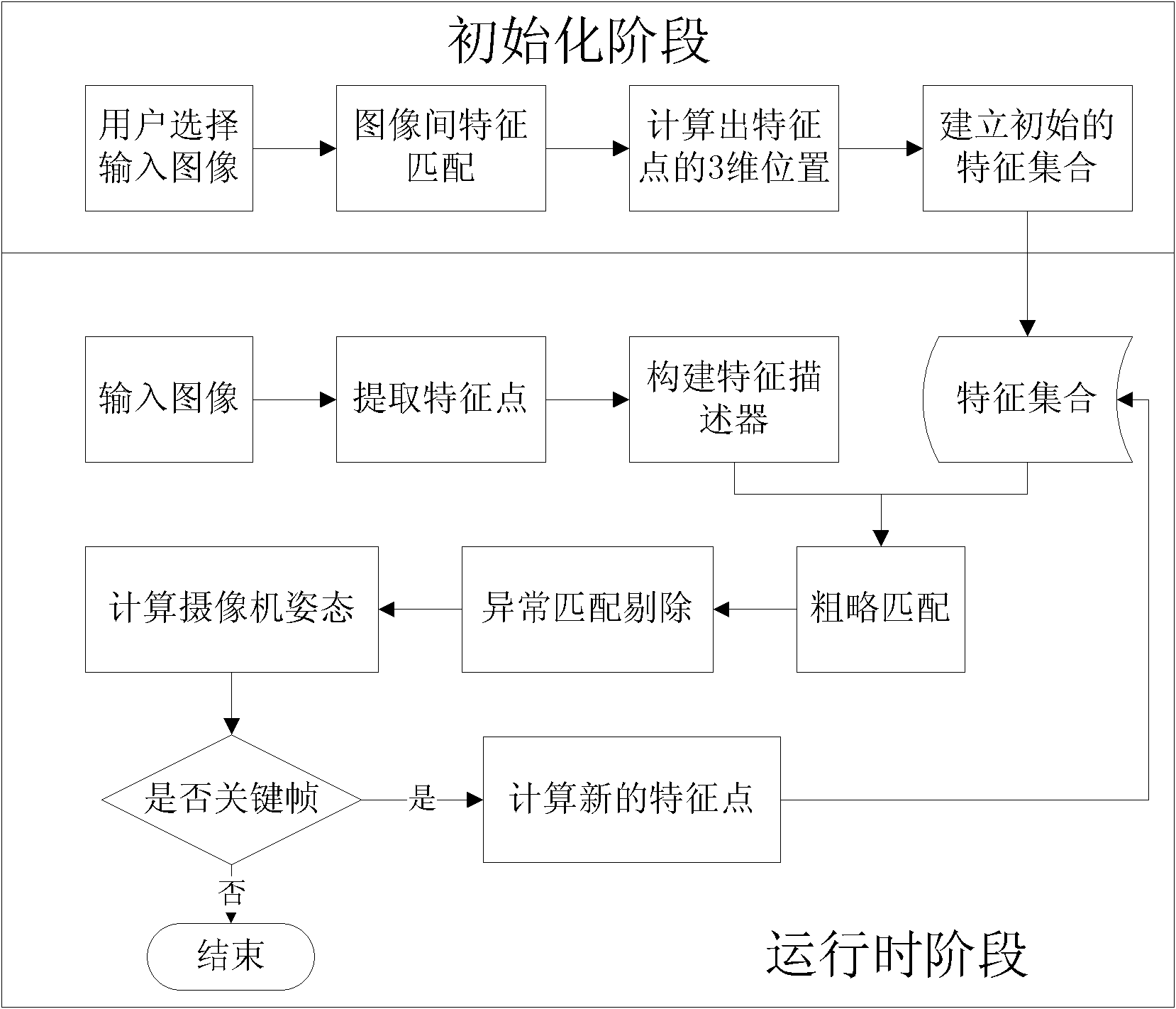

[0023] As shown in Figure 1, the implementation of the present invention comprises two parts: the initialization phase and the runtime phase.

[0024] The first stage, initialization, comprises five steps: selecting the input images, extracting feature points from the images, matching features between the images, calculating the three-dimensional positions of the feature points, and establishing an initial set of feature points.
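The fourth step, calculating the three-dimensional position of a matched feature point, can be illustrated with a minimal sketch. This excerpt does not state which triangulation method the invention uses, so the midpoint method below is a standard technique swapped in purely for illustration. The function name `triangulate_midpoint` and its parameters are hypothetical. The sketch assumes the two camera centres and the viewing rays through the matched feature are already known in a common coordinate system.

```python
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Sequence[float], b: Sequence[float]) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Sequence[float], b: Sequence[float]) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def triangulate_midpoint(
    c1: Sequence[float],
    d1: Sequence[float],
    c2: Sequence[float],
    d2: Sequence[float],
    eps: float = 1e-12,
) -> Optional[Vec3]:
    """Midpoint triangulation (illustrative assumption, not the patent's method).

    c1, c2: camera centres of the two initial frames.
    d1, d2: directions of the viewing rays through the matched feature.
    Returns the midpoint of the shortest segment joining the two rays,
    or None when the rays are (nearly) parallel and depth is unreliable.
    """
    w = _sub(c1, c2)
    a = _dot(d1, d1)
    b = _dot(d1, d2)
    c = _dot(d2, d2)
    d = _dot(d1, w)
    e = _dot(d2, w)

    # denom is |d1 x d2|^2; it vanishes when the rays are parallel.
    denom = a * c - b * b
    if abs(denom) <= eps * a * c:
        return None

    # Ray parameters minimising |(c1 + s*d1) - (c2 + t*d2)|^2.
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom

    p1 = tuple(c1[i] + s * d1[i] for i in range(3))
    p2 = tuple(c2[i] + t * d2[i] for i in range(3))
    return tuple((p1[i] + p2[i]) / 2.0 for i in range(3))
```

For example, with the first camera at the origin and the second one unit along the x-axis, two rays that both pass through a feature recover that feature's position, while parallel rays return `None`. Rejecting near-parallel rays is a design choice of this sketch: two frames with almost no baseline give a poorly conditioned depth estimate.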

[0025] Step 1: Select the input images.

[0026] The user selects two frames with similar content as the initial input images. These frames determine the starting position of the system according to the user's actual needs, such as the position at which a virtual object is superimposed in an augmented reality application, or the starting position of navigation in an autonomous navigation application. The system takes the starting position as the origin of the world coordinate system, and establishes the world coordinate system based on the common p...
