Impression degree extraction apparatus and impression degree extraction method

A technology for impression degree extraction, applied in psychological devices, TV, sports accessories, etc.

Examples

Embodiment 1

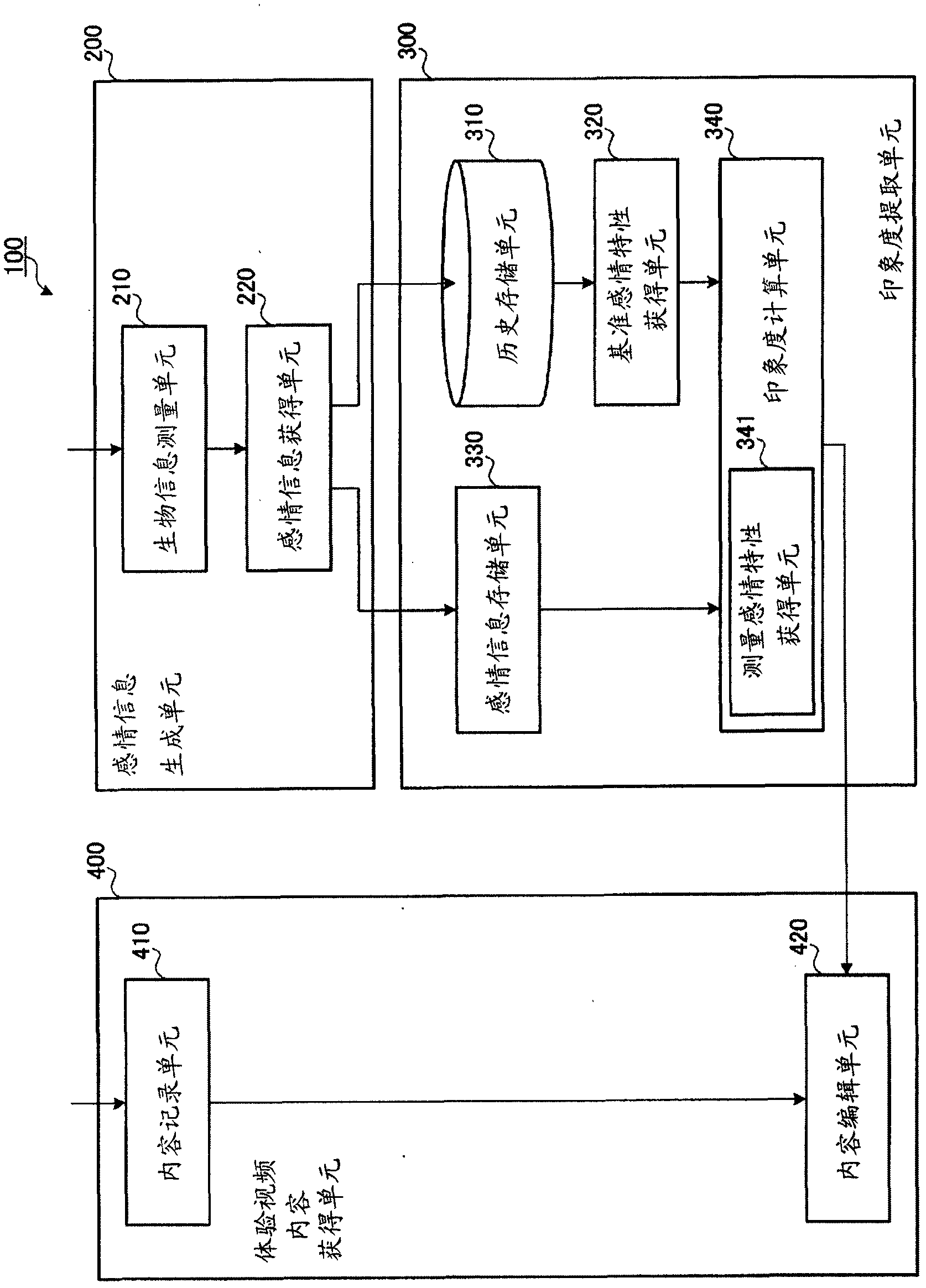

[0043] Figure 1 is a block diagram of a content editing device including the impression degree extracting device according to Embodiment 1 of the present invention. This embodiment is an example of a device suited to shooting video with a body-worn camera at an amusement park or tourist site and editing the captured video (hereinafter simply referred to as "experience video content").

[0044] In Figure 1, the content editing device 100 broadly comprises an emotion information generating unit 200, an impression degree extracting unit 300, and an experience video content obtaining unit 400.

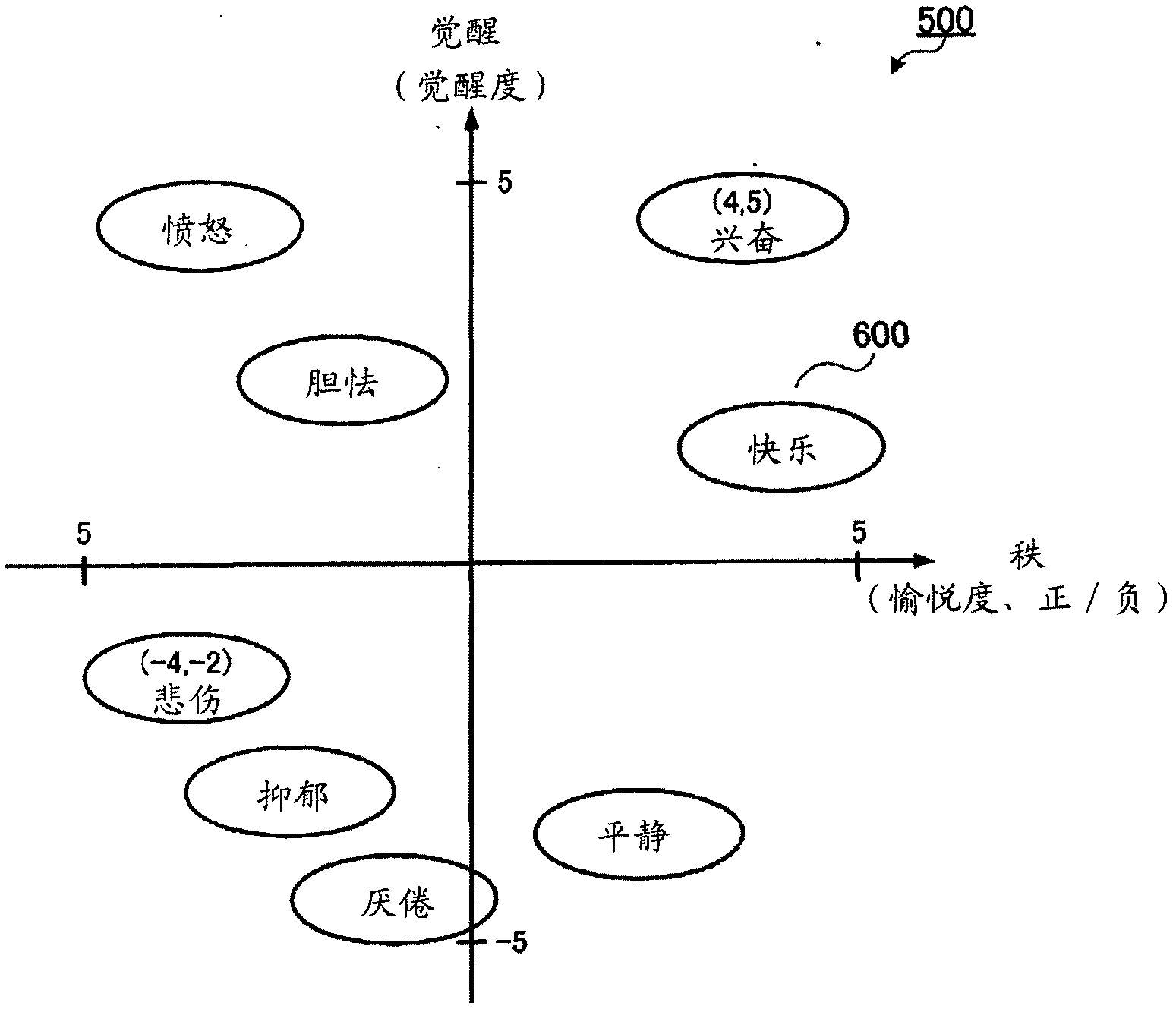

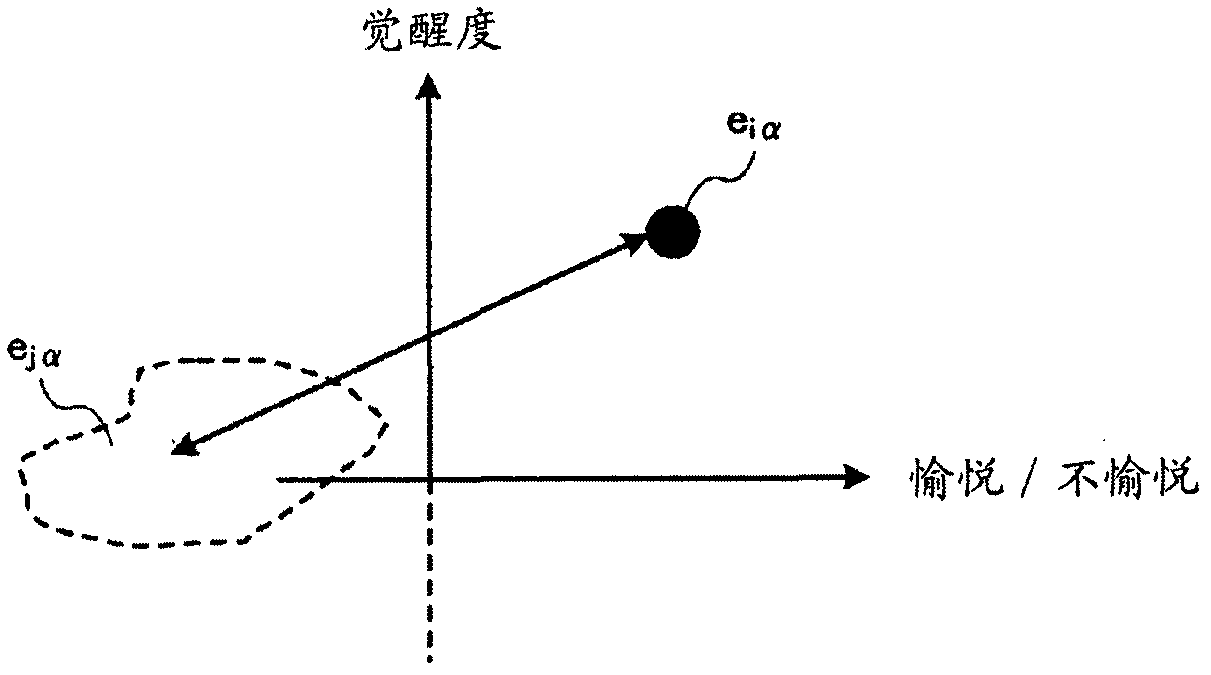

[0045] The emotion information generating unit 200 generates, from the user's biometric information, emotion information representing the emotions the user experiences. Here, "emotion" refers not only to feelings such as joy, anger, sorrow, and pleasure, but to the user's entire mental state, including states such as relaxation. E...

Embodiment 2

[0230] As Embodiment 2 of the present invention, a case will be described in which the present invention is applied to game content, executed on a wearable game terminal, in which the user performs selective actions. The wearable game terminal includes the impression degree extracting device of this embodiment.

[0231] Figure 19 is a block diagram of a game terminal including the impression degree extracting device according to Embodiment 2 of the present invention, and corresponds to Figure 1 of Embodiment 1. Parts identical to those in Figure 1 are denoted by the same reference numerals, and their descriptions are omitted.

[0232] In Figure 19, the game terminal 100a has a game content executing unit 400a in place of the experience video content obtaining unit 400 of Figure 1.

[0233] The game content executing unit 400a executes game content in which the user performs selective actions. Here, the game content is a game in which the user virtually raises a pet, an...

Embodiment 3

[0242] As Embodiment 3 of the present invention, a case will be described in which the present invention is applied to editing the standby screen of a mobile phone. The mobile phone includes the impression degree extracting device of this embodiment.

[0243] Figure 21 is a block diagram of a mobile phone including the impression degree extracting device according to Embodiment 3 of the present invention, and corresponds to Figure 1 of Embodiment 1. Parts identical to those in Figure 1 are denoted by the same reference numerals, and their descriptions are omitted.

[0244] In Figure 21, the mobile phone 100b has a mobile phone unit 400b in place of the experience video content obtaining unit 400 of Figure 1.

[0245] The mobile phone unit 400b implements the functions of a mobile phone, including display control of the standby screen on a liquid crystal display (not shown). The mobile phone unit 400b has a screen design storage unit 410b and a screen design change unit ...
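Across Figures 1, 19 and 21, the emotion information generating unit 200 and the impression degree extracting unit 300 stay the same, and only the application-specific unit changes (400, 400a, 400b). The Python sketch below illustrates that composition pattern only. Every function name and signature in it is hypothetical, and the bodies standing in for units 200 and 300 are placeholders, because this excerpt does not describe how emotion information or the impression degree is actually computed.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical signatures; the excerpt does not define these interfaces.
EmotionUnit = Callable[[list[float]], float]   # stands in for unit 200
ImpressionUnit = Callable[[float], float]      # stands in for unit 300
ApplicationUnit = Callable[[float], str]       # stands in for unit 400 / 400a / 400b


@dataclass
class Device:
    """A device (100, 100a or 100b) composed of three pluggable units."""

    numeral: str
    emotion_unit: EmotionUnit
    impression_unit: ImpressionUnit
    application_unit: ApplicationUnit

    def process(self, biometric_samples: list[float]) -> str:
        # Biometric information -> emotion information -> impression degree -> application unit.
        emotion = self.emotion_unit(biometric_samples)
        impression = self.impression_unit(emotion)
        return self.application_unit(impression)


def placeholder_emotion_unit(samples: list[float]) -> float:
    # Placeholder only: averages the samples; NOT the patent's processing.
    return sum(samples) / len(samples)


def placeholder_impression_unit(emotion: float) -> float:
    # Placeholder only: passes the value through unchanged.
    return emotion


def make_application_unit(label: str) -> ApplicationUnit:
    # Builds a stand-in application unit that just reports what it received.
    def unit(impression: float) -> str:
        return f"{label} received impression degree {impression:.1f}"
    return unit


def build(numeral: str, application_label: str) -> Device:
    # Units 200 and 300 are shared; only the application-specific unit is swapped.
    return Device(numeral, placeholder_emotion_unit, placeholder_impression_unit,
                  make_application_unit(application_label))


devices = [
    build("100", "experience video content obtaining unit 400"),
    build("100a", "game content executing unit 400a"),
    build("100b", "mobile phone unit 400b"),
]

for device in devices:
    print(device.numeral, "->", device.process([1.0, 2.0, 3.0]))
```

Injecting the third unit as a parameter mirrors how the embodiments reuse the shared parts under the same reference numerals and describe only the replaced unit.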
