Block matching parallax estimation-based middle view synthesizing method

A parallax estimation and intermediate view synthesis technique, applied in the field of image-based virtual viewpoint rendering, that addresses problems such as algorithmic complexity, large data volume, and inapplicability to real scenes.

Embodiment Construction

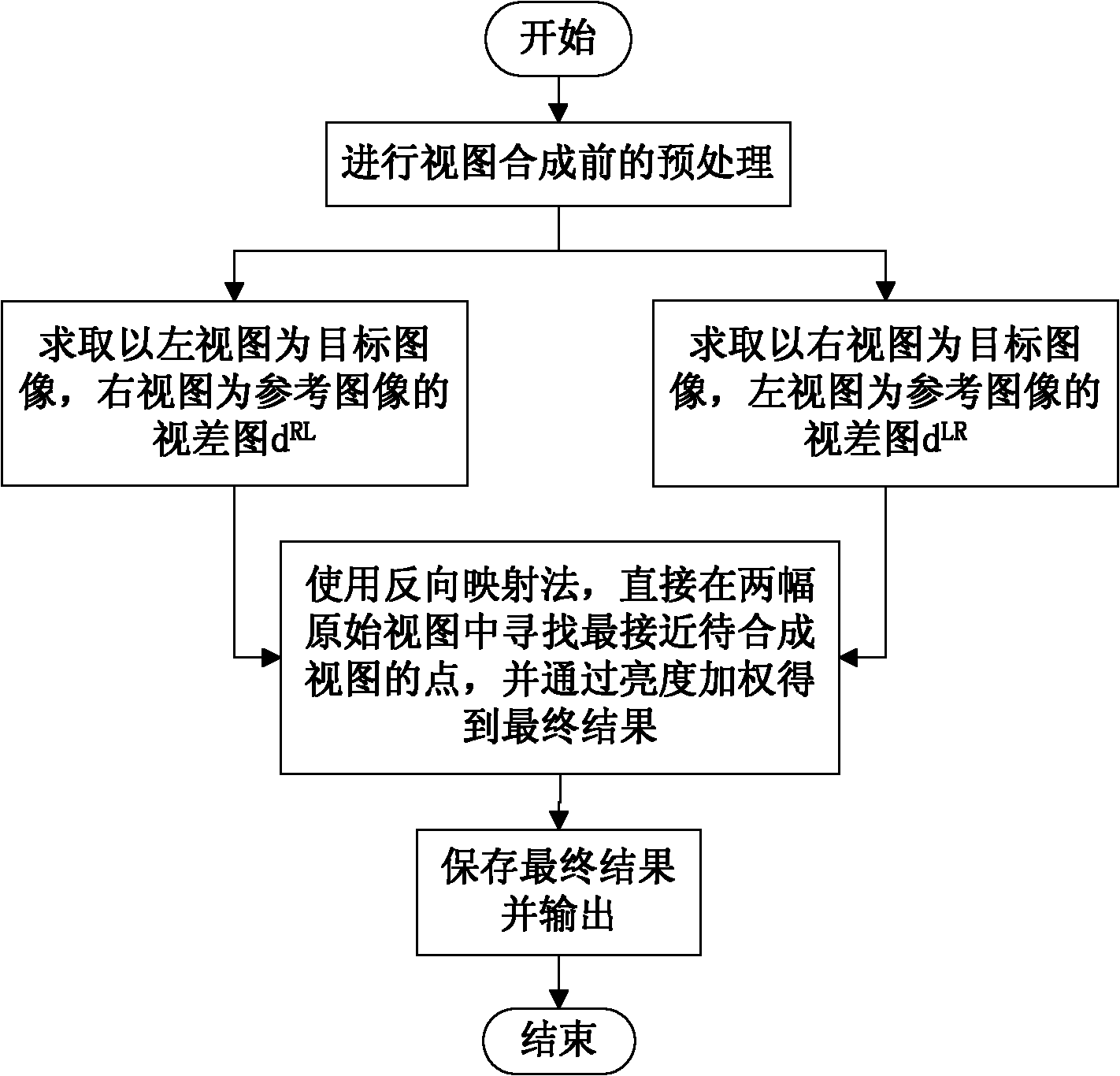

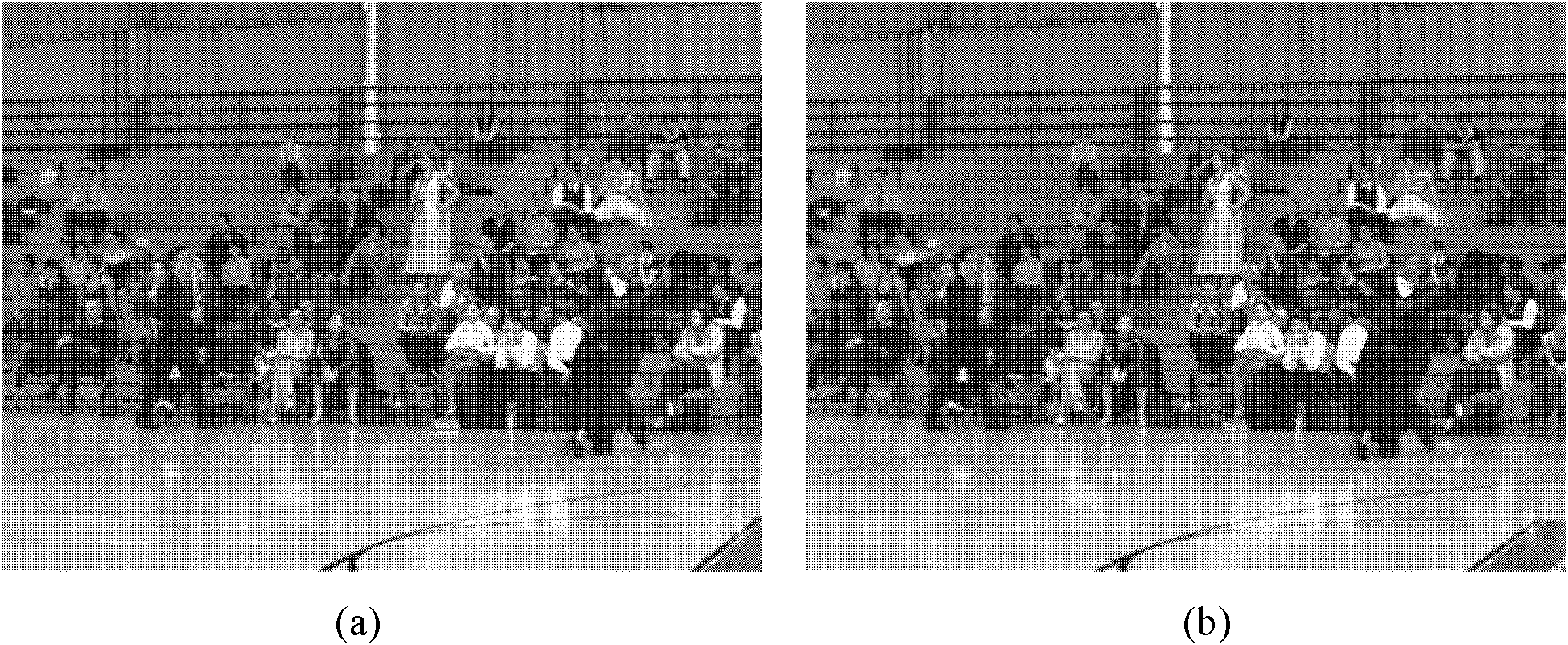

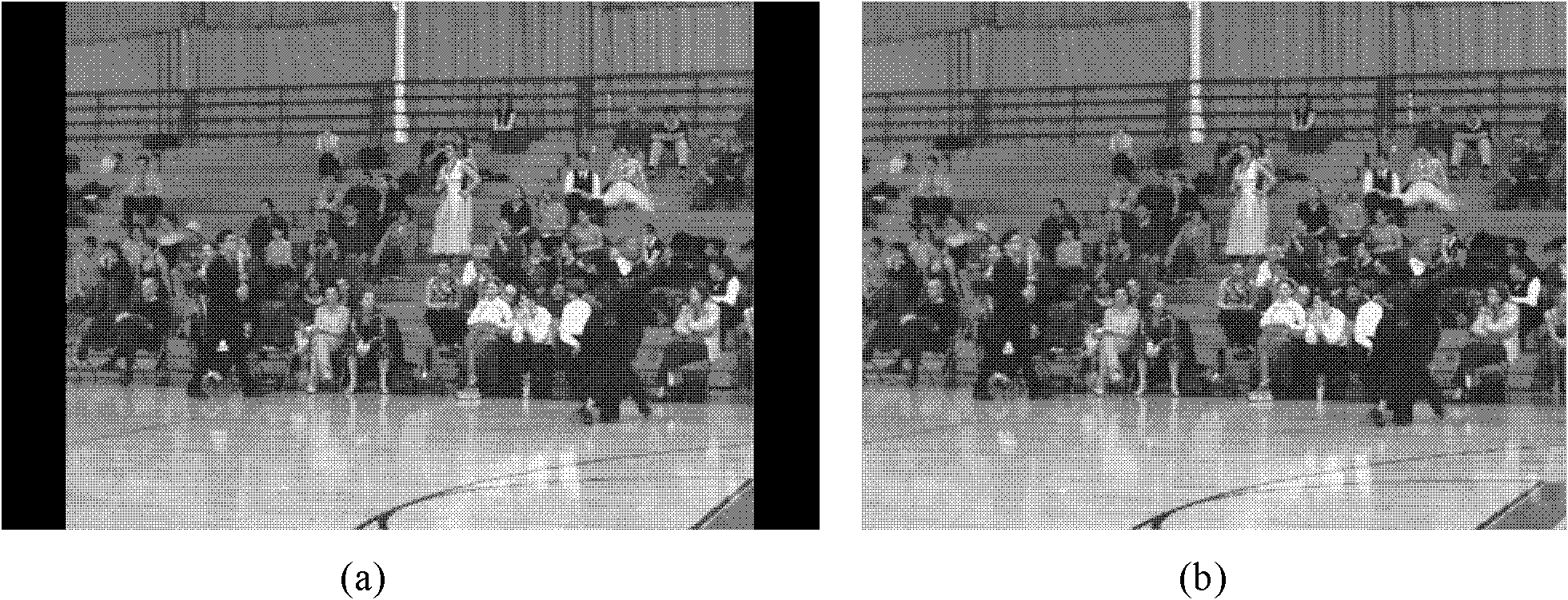

[0047] The present invention is a virtual viewpoint rendering method based on adaptive parallax estimation, and belongs to virtual viewpoint rendering within the field of multi-view digital image processing. Specifically, parallax estimation with an adaptively selected window mode is performed on two images captured synchronously by a horizontal camera group, yielding a bidirectional disparity map. Then, using the obtained disparity information and the grayscale information of the input views, and following the reverse mapping principle, each coordinate position in the view to be synthesized is processed in turn: its corresponding points are located in the left and right views nearest to the viewpoint to be synthesized, and the grayscale values of those points are finally weighted by brightness to obtain the virtual viewpoint view.
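The pipeline described in [0047] can be sketched roughly as follows. This is only an illustrative sketch under several assumptions and not the patented procedure: it uses a fixed square window with a sum-of-absolute-differences cost instead of the adaptive window selection, assumes rectified views with purely horizontal disparity, and blends the two corresponding points with weights derived from the viewpoint position `alpha` (0 for the left view, 1 for the right view). The names `block_match`, `synthesize`, `alpha`, `half`, and `max_disp` are hypothetical and do not come from the patent text.

```python
import math


def _clamp(v, lo, hi):
    return max(lo, min(hi, v))


def block_match(ref, tgt, max_disp, half, direction):
    """Per-pixel disparity of `ref` against `tgt` using SAD block matching.

    direction = -1: the match of ref pixel x is searched at x - d in tgt
                    (ref is the left view).
    direction = +1: the match is searched at x + d (ref is the right view).
    Assumption: fixed (2*half+1) square window, not the adaptive window.
    """
    h, w = len(ref), len(ref[0])
    disp = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            best_cost, best_d = float("inf"), 0
            for d in range(max_disp + 1):
                xt = x + direction * d
                if xt < 0 or xt >= w:
                    continue  # candidate falls outside the target view
                cost = 0
                for dy in range(-half, half + 1):
                    yy = _clamp(y + dy, 0, h - 1)
                    for dx in range(-half, half + 1):
                        a = ref[yy][_clamp(x + dx, 0, w - 1)]
                        b = tgt[yy][_clamp(xt + dx, 0, w - 1)]
                        cost += abs(a - b)
                if cost < best_cost:
                    best_cost, best_d = cost, d
            disp[y][x] = best_d
    return disp


def _lookup(view, disp, y, x, shift_per_disp, max_disp):
    """Reverse mapping: find the source pixel whose disparity projects it to x."""
    w = len(view[0])
    # Try large disparities first so that nearer objects take precedence.
    for d in range(max_disp, -1, -1):
        xs = math.floor(x + shift_per_disp * d + 0.5)
        if 0 <= xs < w and disp[y][xs] == d:
            return view[y][xs]
    return None  # no consistent source pixel (occlusion or mismatch)


def synthesize(left, right, disp_l, disp_r, alpha, max_disp):
    """Intermediate view at position alpha in [0, 1] between left and right."""
    h, w = len(left), len(left[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            l = _lookup(left, disp_l, y, x, alpha, max_disp)
            r = _lookup(right, disp_r, y, x, -(1.0 - alpha), max_disp)
            if l is not None and r is not None:
                v = (1.0 - alpha) * l + alpha * r  # assumed weighting scheme
            elif l is not None:
                v = l
            elif r is not None:
                v = r
            else:
                v = 0  # unfilled hole; a real system would inpaint here
            out[y][x] = int(math.floor(v + 0.5))
    return out
```

Calling `block_match(left, right, max_disp, half, -1)` and `block_match(right, left, max_disp, half, +1)` gives the two directions of the disparity map, which are then passed to `synthesize`. Searching from the synthesized pixel back into the source views, rather than pushing source pixels forward, means every output coordinate is visited exactly once, so the result has no cracks from forward splatting; only occluded positions remain as holes.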

[0048] All "views" and "images" in this example refer to digital bitmaps. The abscissa is from left to right, and the ordinate is from top ...
