Method and system for changing player skin

A player skin technology, applied in the field of player skin transformation, that addresses problems such as an unchanging skin and inconvenient viewing, meeting users' experience needs and improving convenience.

Embodiment Construction

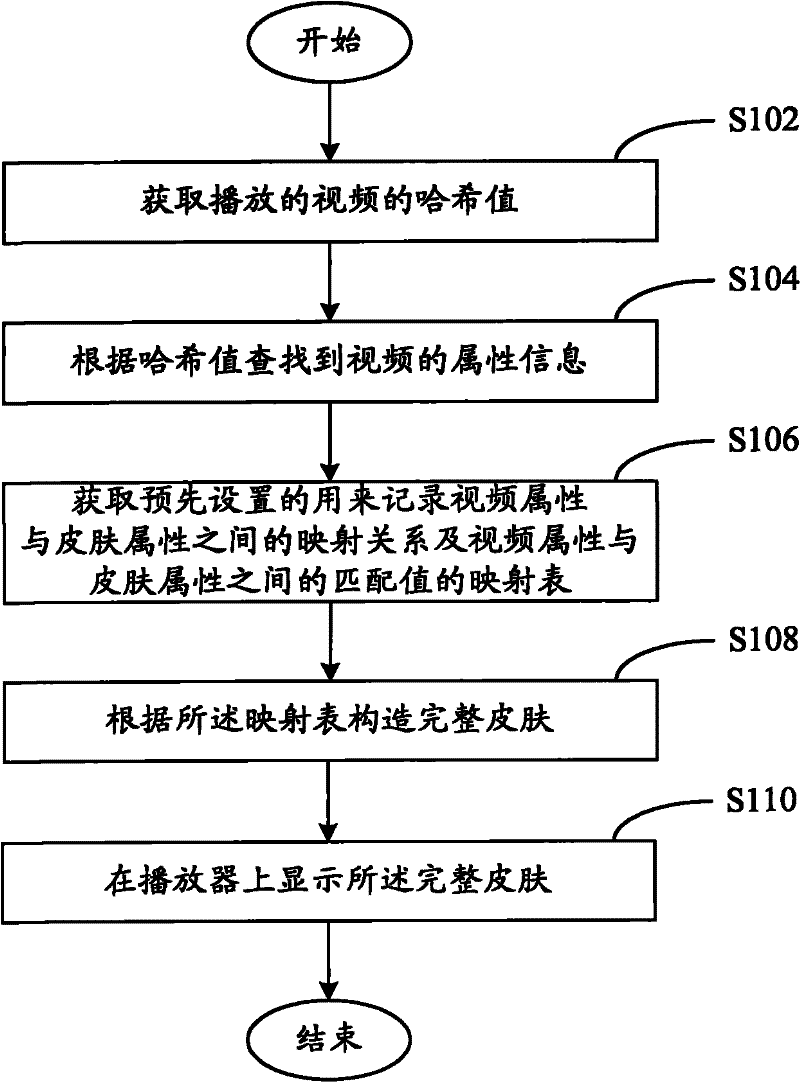

[0044] Figure 1 shows the method flow of player skin transformation in one embodiment. The method flow includes the following steps:

[0045] In step S102, the hash value of the video being played is acquired. A hash value can be used to uniquely identify a file. When the video player plays a video, the hash value of that video can be calculated, for example by performing a logical operation on the content data of the video to obtain the hash value of the currently playing video.
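Step S102 can be illustrated with a minimal sketch. The embodiment does not name a specific hash operation, so this example assumes SHA-256 from Python's standard `hashlib` as a stand-in; the function name `video_hash` and the `chunk_size` parameter are hypothetical. The file is read in chunks so that a large video need not be held in memory at once.

```python
import hashlib


def video_hash(path: str, chunk_size: int = 1 << 20) -> str:
    """Return a hex digest that identifies a video file by its content bytes.

    Assumption: SHA-256 stands in for the unspecified hash operation of step S102.
    """
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        # Feed the file to the hash in fixed-size chunks until EOF (b"").
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()
```

Because the digest depends only on the bytes of the file, two copies of the same video yield the same value regardless of file name or location. That property is what makes the hash usable as a lookup key in the next step.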

[0046] In step S104, the attribute information of the video is looked up according to the hash value. The attribute information of the video is defined in advance and stored in a background database. The video attributes may include a main attribute and an additional attribute of the video. The main attribute can be used to describe the type of the video or the emotion the video reflects. For example, the video emotion...
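The lookup in step S104 can be sketched as a keyed query against the background database. This is only an illustration under assumptions: it uses an in-memory SQLite database from Python's standard `sqlite3` module, and the table `video_attributes`, its columns, and the names `VideoAttributes`, `init_db`, and `find_attributes` are hypothetical, not taken from the patent.

```python
import sqlite3
from dataclasses import dataclass
from typing import Optional


@dataclass
class VideoAttributes:
    main: str                  # main attribute: the video's type or the emotion it reflects
    additional: Optional[str]  # additional attribute (hypothetical free-form field)


def init_db(conn: sqlite3.Connection) -> None:
    # Hypothetical schema: one row of predefined attributes per video hash.
    conn.execute(
        "CREATE TABLE IF NOT EXISTS video_attributes ("
        " hash TEXT PRIMARY KEY,"
        " main_attribute TEXT NOT NULL,"
        " additional_attribute TEXT)"
    )


def find_attributes(conn: sqlite3.Connection, video_hash: str) -> Optional[VideoAttributes]:
    """Return the predefined attributes for a video hash, or None if none are stored."""
    row = conn.execute(
        "SELECT main_attribute, additional_attribute"
        " FROM video_attributes WHERE hash = ?",
        (video_hash,),
    ).fetchone()
    if row is None:
        return None  # no attribute information was defined for this video
    return VideoAttributes(main=row[0], additional=row[1])
```

Using the hash as the primary key means each lookup is a single indexed query. Returning `None` for an unknown hash leaves it to the caller to decide what to do when a video has no predefined attributes.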