Video monitoring method and system

A video surveillance method and system, applicable to closed-circuit television systems, image data processing, instruments, and related fields. It addresses problems such as increased computational load, and achieves the effect of reducing false negatives and improving accuracy.


Embodiment Construction

[0022] To describe the technical content, structural features, objectives, and effects of the present invention in detail, the following description refers to the embodiments and the accompanying drawings.

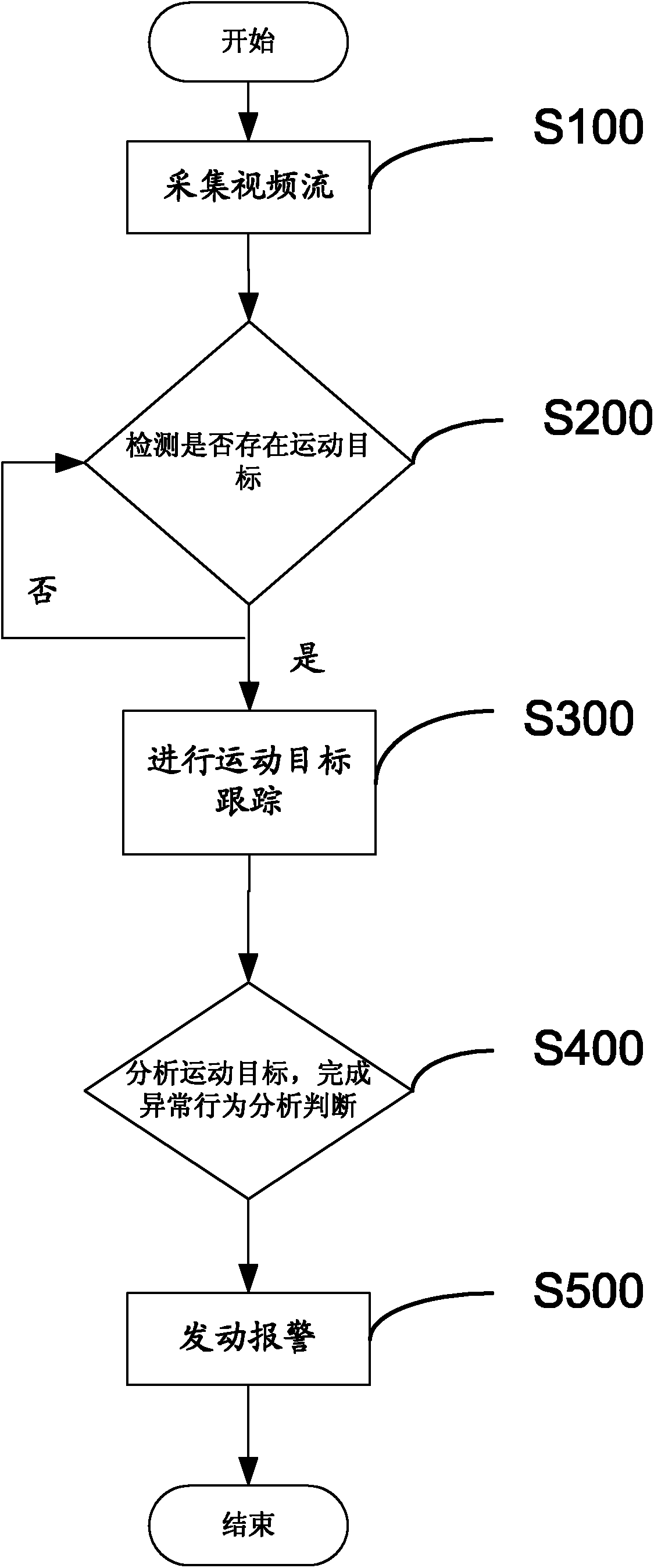

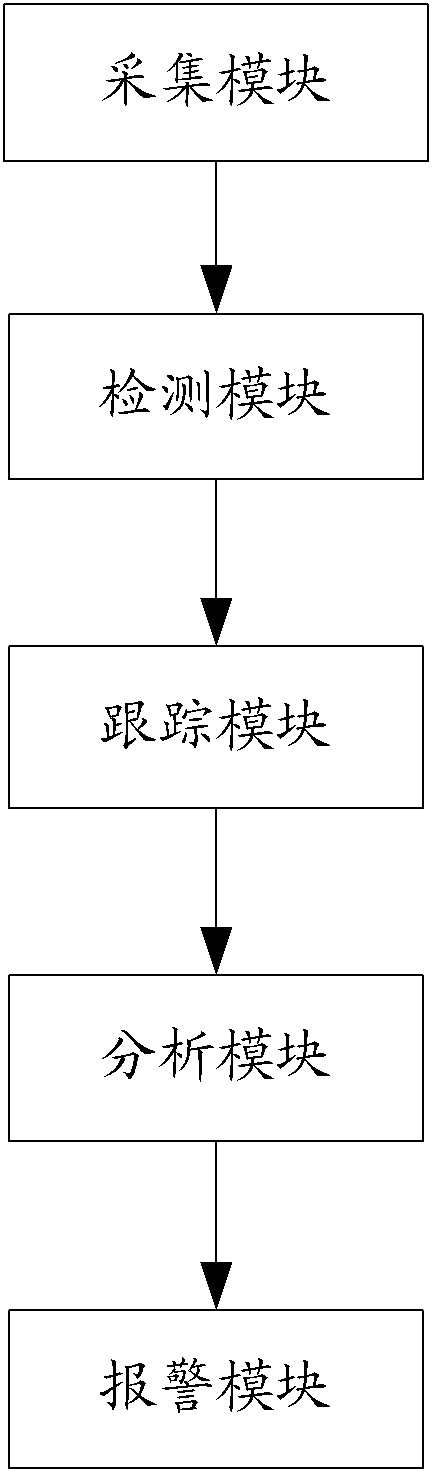

[0023] Referring to Figure 1, the video surveillance method provided by the present invention comprises the following steps:

[0024] S100: Collect the video stream that currently needs to be analyzed. Specifically, video streams include network video streams provided by a hard disk video recorder, video streams provided by a video capture card, and file video streams.
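As an illustration of step S100, the three kinds of input could be represented by a small tagged descriptor. This is only a sketch: the names `SourceKind`, `VideoSource`, and `classify_source`, as well as the rule for telling the kinds apart (URL scheme, numeric device index, otherwise a file path), are assumptions made for this example and are not taken from the patent.

```python
from dataclasses import dataclass
from enum import Enum


class SourceKind(Enum):
    """The three stream kinds named in S100 (enum names are hypothetical)."""
    NETWORK_DVR = "network_dvr"    # network stream from a hard disk video recorder
    CAPTURE_CARD = "capture_card"  # stream from a video capture card
    FILE = "file"                  # video file on disk


@dataclass(frozen=True)
class VideoSource:
    kind: SourceKind
    locator: str  # URL, device index, or file path (assumed convention)


def classify_source(locator: str) -> VideoSource:
    """Guess the source kind from the locator string (assumed heuristic)."""
    if locator.startswith(("rtsp://", "http://", "https://")):
        return VideoSource(SourceKind.NETWORK_DVR, locator)
    if locator.isdigit():
        # A bare integer is treated as a capture-device index.
        return VideoSource(SourceKind.CAPTURE_CARD, locator)
    return VideoSource(SourceKind.FILE, locator)
```

Keeping the source kind explicit lets the later analysis steps stay independent of where the frames come from.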

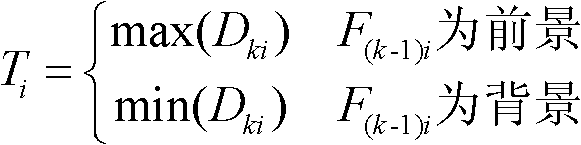

[0025] S200: Detect whether there is a moving target in the video stream by analyzing the image information of the collected video stream. If there is a moving target, perform adaptive background modeling to obtain an initial foreground, then perform morphological processing on the foreground to fill the target area and reduce the small fragment a...
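The processing described in S200 can be sketched roughly as follows. This is a minimal pure-Python illustration, not the patent's implementation: it assumes a running-average background model as one simple form of adaptive background modeling, a fixed difference threshold, and a 3x3 structuring element. All function names and the parameters `alpha`, `threshold`, and `min_pixels` are hypothetical.

```python
from typing import List

Gray = List[List[float]]  # grayscale frame: rows of pixel intensities
Mask = List[List[int]]    # binary mask: 1 = foreground, 0 = background


def update_background(bg: Gray, frame: Gray, alpha: float = 0.05) -> Gray:
    """Running average (assumed model): bg <- (1 - alpha) * bg + alpha * frame."""
    return [[(1 - alpha) * b + alpha * f for b, f in zip(brow, frow)]
            for brow, frow in zip(bg, frame)]


def foreground_mask(bg: Gray, frame: Gray, threshold: float = 25.0) -> Mask:
    """Mark pixels that differ from the background by more than the threshold."""
    return [[1 if abs(f - b) > threshold else 0 for b, f in zip(brow, frow)]
            for brow, frow in zip(bg, frame)]


def _morph(mask: Mask, require_all: bool) -> Mask:
    """3x3 erosion (require_all=True) or dilation (False); windows clip at borders."""
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = [mask[j][i]
                      for j in range(max(0, y - 1), min(h, y + 2))
                      for i in range(max(0, x - 1), min(w, x + 2))]
            out[y][x] = int(all(window) if require_all else any(window))
    return out


def erode(mask: Mask) -> Mask:
    return _morph(mask, True)


def dilate(mask: Mask) -> Mask:
    return _morph(mask, False)


def clean_foreground(mask: Mask) -> Mask:
    """Closing fills small holes in the target; opening removes small fragments."""
    closed = erode(dilate(mask))
    return dilate(erode(closed))


def has_moving_target(mask: Mask, min_pixels: int = 4) -> bool:
    """Treat the frame as containing a target if enough foreground pixels remain."""
    return sum(map(sum, mask)) >= min_pixels


# Usage: a 7x7 frame with a 3x3 bright block (hole in the middle) and one noise pixel.
background = [[0.0] * 7 for _ in range(7)]
frame = [[0.0] * 7 for _ in range(7)]
for y in range(2, 5):
    for x in range(2, 5):
        frame[y][x] = 200.0
frame[3][3] = 0.0    # hole inside the target
frame[0][6] = 200.0  # isolated noise pixel

raw = foreground_mask(background, frame)
cleaned = clean_foreground(raw)
background = update_background(background, frame)
```

In this toy frame the closing step fills the hole at the centre of the block, and the opening step discards the isolated pixel, which matches the stated goals of filling the target area and reducing small fragments.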
