Automatic picture description method for barrier-free access to web content by visually impaired users

A technology for visually impaired users and web page content, in the field of automatic picture description. It addresses problems such as the narrow information bandwidth of screen-reading software and the lack of assistance with visual information, which make web images difficult for visually impaired users to access, and has the effect of improving their understanding of web content.


Embodiment Construction

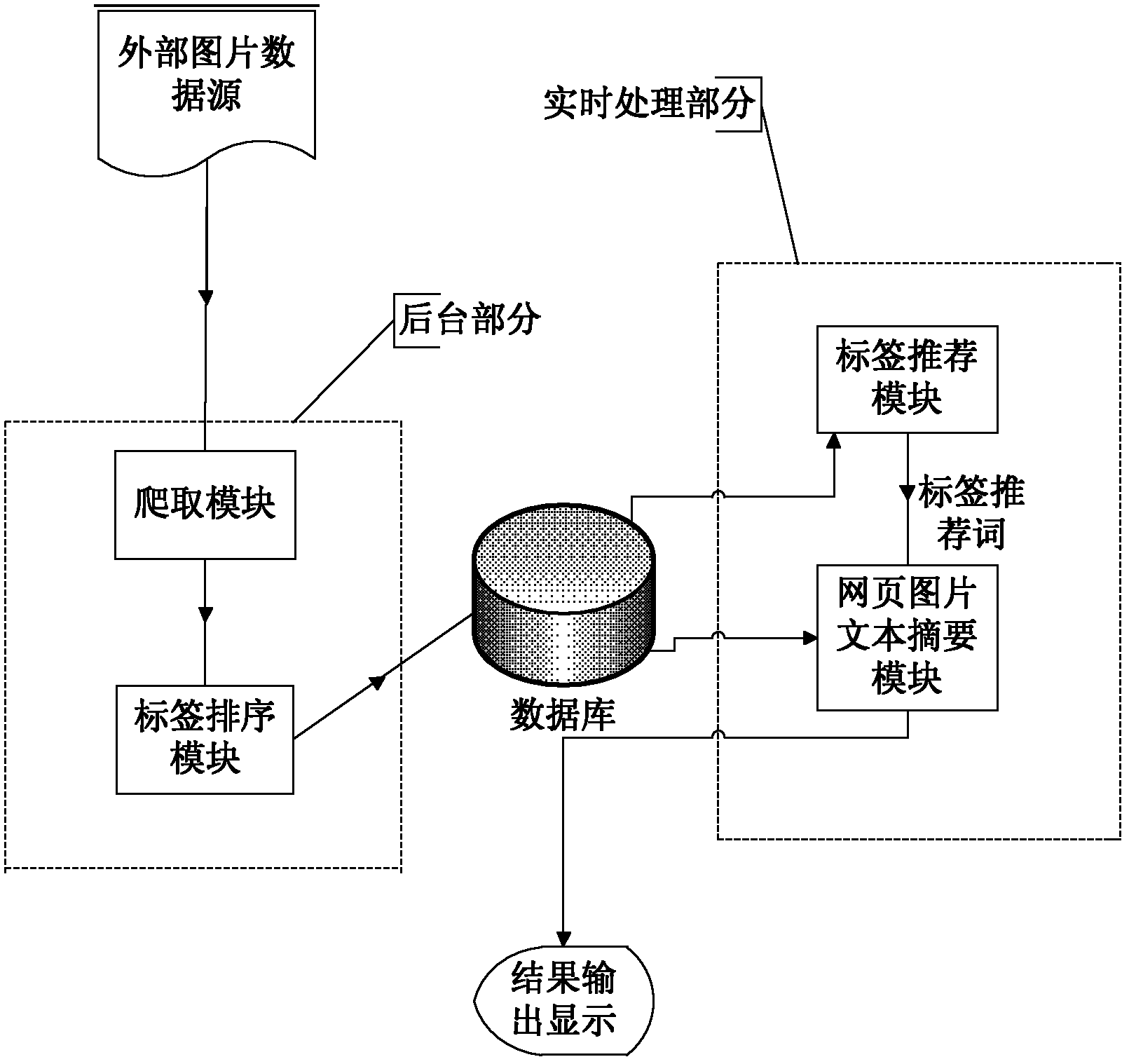

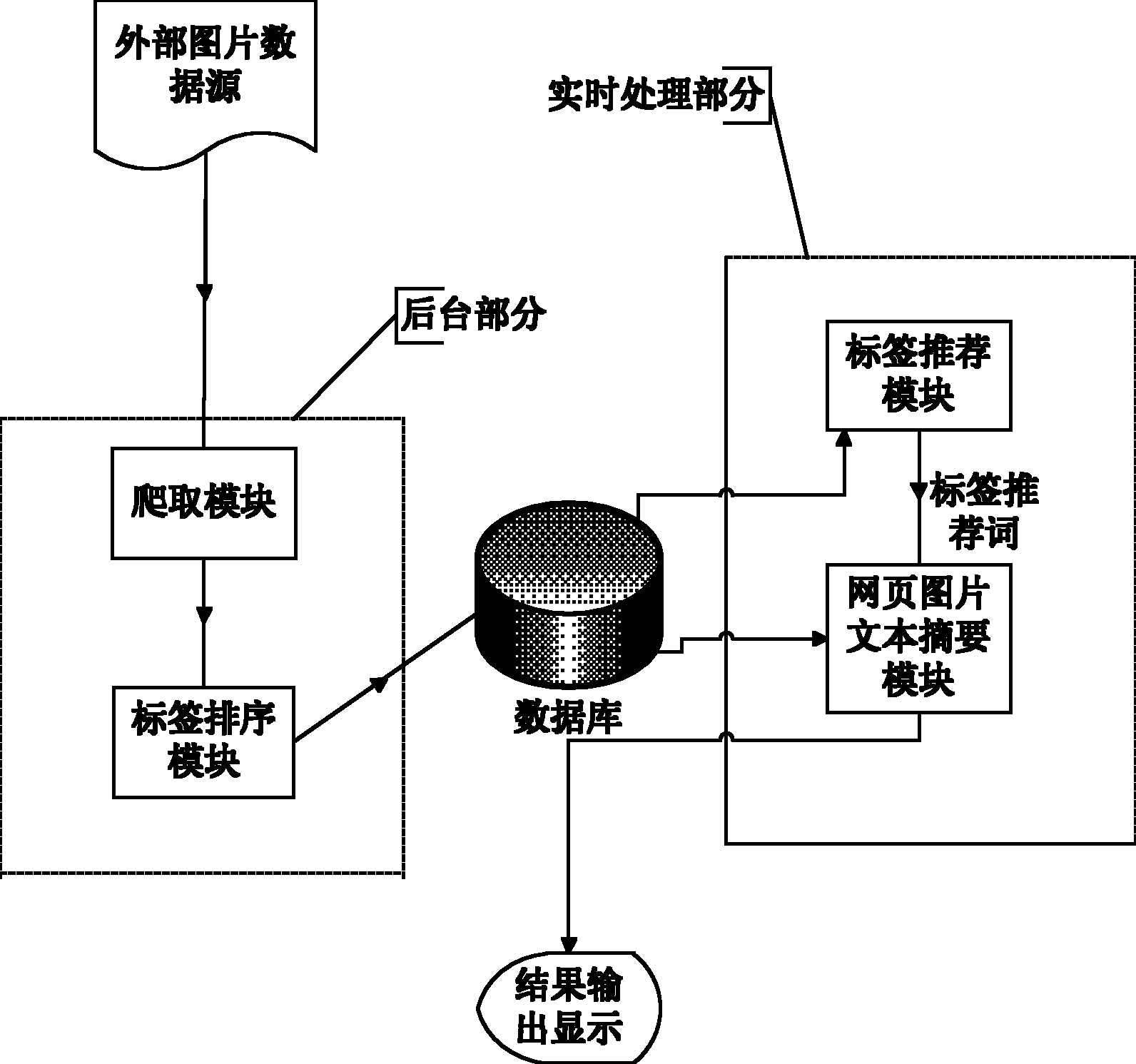

[0033] The present invention will be further described below in conjunction with the accompanying drawings and embodiments.

[0034] 1. Crawl labeled pictures from the Internet to build a labeled-picture sample library: collect pictures and their corresponding tags from popular picture-sharing websites. Commonly used sources include (1) Flickr, currently the most widely used image-sharing website, which provides a complete download API that can be used to retrieve large numbers of images together with their tags; and (2) LabelMe, which provides a large number of high-quality annotated images.
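As an illustration of step 1, the following minimal sketch builds a Flickr `flickr.photos.search` request that also asks for each photo's tags (`extras=tags`) and turns the JSON reply into (image URL, tag list) samples for the library. The API key, the search tags and the helper names `build_search_url` and `parse_search_response` are hypothetical; the HTTP download itself, paging and rate limiting are omitted, and LabelMe annotations would need a separate loader.

```python
import json
from urllib.parse import urlencode

# Public REST endpoint of the Flickr API.
FLICKR_REST = "https://api.flickr.com/services/rest/"


def build_search_url(api_key: str, tags: list[str], page: int = 1, per_page: int = 100) -> str:
    """Build a flickr.photos.search request URL that also returns each photo's tags."""
    params = {
        "method": "flickr.photos.search",
        "api_key": api_key,          # caller-supplied key (hypothetical placeholder)
        "tags": ",".join(tags),      # comma-separated search tags
        "extras": "tags",            # include the tag list of every returned photo
        "per_page": per_page,
        "page": page,
        "format": "json",
        "nojsoncallback": 1,         # plain JSON instead of a JSONP wrapper
    }
    return FLICKR_REST + "?" + urlencode(params)


def parse_search_response(body: str) -> list[tuple[str, list[str]]]:
    """Turn a photos.search JSON response into (image_url, tags) samples."""
    data = json.loads(body)
    if data.get("stat") != "ok":
        raise ValueError(f"Flickr API error: {data.get('message', 'unknown')}")
    samples = []
    for p in data["photos"]["photo"]:
        # Static image URL pattern: /{server}/{id}_{secret}.jpg
        url = f"https://live.staticflickr.com/{p['server']}/{p['id']}_{p['secret']}.jpg"
        # The "tags" extra arrives as one space-separated string.
        samples.append((url, p.get("tags", "").split()))
    return samples
```

Each returned pair is one entry of the labeled-picture sample library; the raw Flickr tags are user-supplied and noisy, which is why step 2 re-ranks them.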

[0035] 2. Rank the picture tags in the labeled-picture sample library to denoise the tags:

[0036] 2.1) Measure the similarity between pictures using their color, texture and shape features, and rank each picture's tags by neighbor voting; this ranking serves as the initial ranking result;
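Step 2.1 can be sketched with the neighbor-voting tag relevance scheme commonly used for this task: each of a picture's k visually nearest neighbours that carries a tag casts one vote for it, and the number of votes expected by chance (k times the tag's frequency in the library) is subtracted. This is a minimal sketch under assumptions not stated in the source: every picture is already described by a single feature vector concatenating its color, texture and shape descriptors, similarity is plain Euclidean distance, and `neighbor_vote_rank` is a hypothetical helper.

```python
import math
from collections import Counter


def euclidean(a, b):
    """Distance between two feature vectors (assumed concatenated color/texture/shape)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def neighbor_vote_rank(features, tags, k):
    """Rank each picture's own tags by neighbor voting.

    features: one feature vector per picture
    tags:     one set of tags per picture
    k:        number of nearest neighbours allowed to vote
    Returns, per picture, a list of (tag, score) sorted by descending score.
    """
    n = len(features)
    # Number of pictures carrying each tag, used for the chance-level prior.
    freq = Counter(t for ts in tags for t in set(ts))
    ranked = []
    for i in range(n):
        # k nearest neighbours of picture i, excluding picture i itself.
        others = sorted((j for j in range(n) if j != i),
                        key=lambda j: euclidean(features[i], features[j]))
        neighbors = others[:k]
        scores = {}
        for t in tags[i]:
            votes = sum(1 for j in neighbors if t in tags[j])
            prior = k * freq[t] / n        # votes expected if neighbours were random
            scores[t] = votes - prior
        # Highest relevance first; ties broken alphabetically for determinism.
        ranked.append(sorted(scores.items(), key=lambda kv: (-kv[1], kv[0])))
    return ranked
```

A tag that appears on a picture but not on its visual neighbours ends up with a negative score, which is the signal used to push noisy tags down the ranking.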

[0037] 2.2) Construct the transition (jump) probability matrix of the graph-based ranking algorithm a...
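The text of step 2.2 is truncated, so how the transition probability matrix is built and used is not stated here. One common construction, shown only as an assumption, row-normalizes a non-negative tag-to-tag similarity matrix (for example tag co-occurrence counts) into jump probabilities P and runs a random walk with restart seeded by the step 2.1 scores r0, iterating r = alpha * P^T r + (1 - alpha) * r0 until it stops changing. The similarity matrix, the restart weight `alpha = 0.85` and the helper names are hypothetical; neighbor-voting scores can be negative, so they are assumed to have been shifted to non-negative values first.

```python
def transition_matrix(sim):
    """Row-normalize a non-negative tag-similarity matrix into jump probabilities."""
    n = len(sim)
    P = []
    for row in sim:
        s = sum(row)
        # A tag with no similar tags jumps uniformly to every tag.
        P.append([1.0 / n] * n if s == 0 else [v / s for v in row])
    return P


def random_walk_rank(sim, init, alpha=0.85, tol=1e-10, max_iter=1000):
    """Random walk with restart over the tag graph, seeded by initial tag scores.

    sim:  n x n non-negative tag-to-tag similarity (assumed input)
    init: n non-negative initial scores, e.g. shifted neighbor-voting scores
    Returns the stationary relevance score of each tag (sums to 1).
    """
    P = transition_matrix(sim)
    n = len(P)
    total = sum(init)
    if total <= 0:
        raise ValueError("initial scores must have a positive sum")
    r0 = [v / total for v in init]        # restart distribution
    r = r0[:]
    for _ in range(max_iter):
        # (P^T r)_j = sum_i r_i * P[i][j], blended with the restart vector.
        new = [alpha * sum(r[i] * P[i][j] for i in range(n)) + (1 - alpha) * r0[j]
               for j in range(n)]
        if sum(abs(a - b) for a, b in zip(new, r)) < tol:
            return new
        r = new
    return r
```

Because alpha < 1 the update is a contraction, so the iteration converges regardless of the starting vector; a tag that the initial ranking placed low but that is strongly linked to a high-scoring tag gains relevance, while an isolated tag loses it.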
