Disk cache method, device, and system providing multi-device mirroring and striping functions

A disk cache technology applied in the fields of memory systems, error response generation, and memory address allocation/relocation. It solves the problem that cached data cannot be distributed across multiple physical or logical cache devices. Its effects are improved data reliability, additional mirror copies, and improved performance.


Embodiment Construction

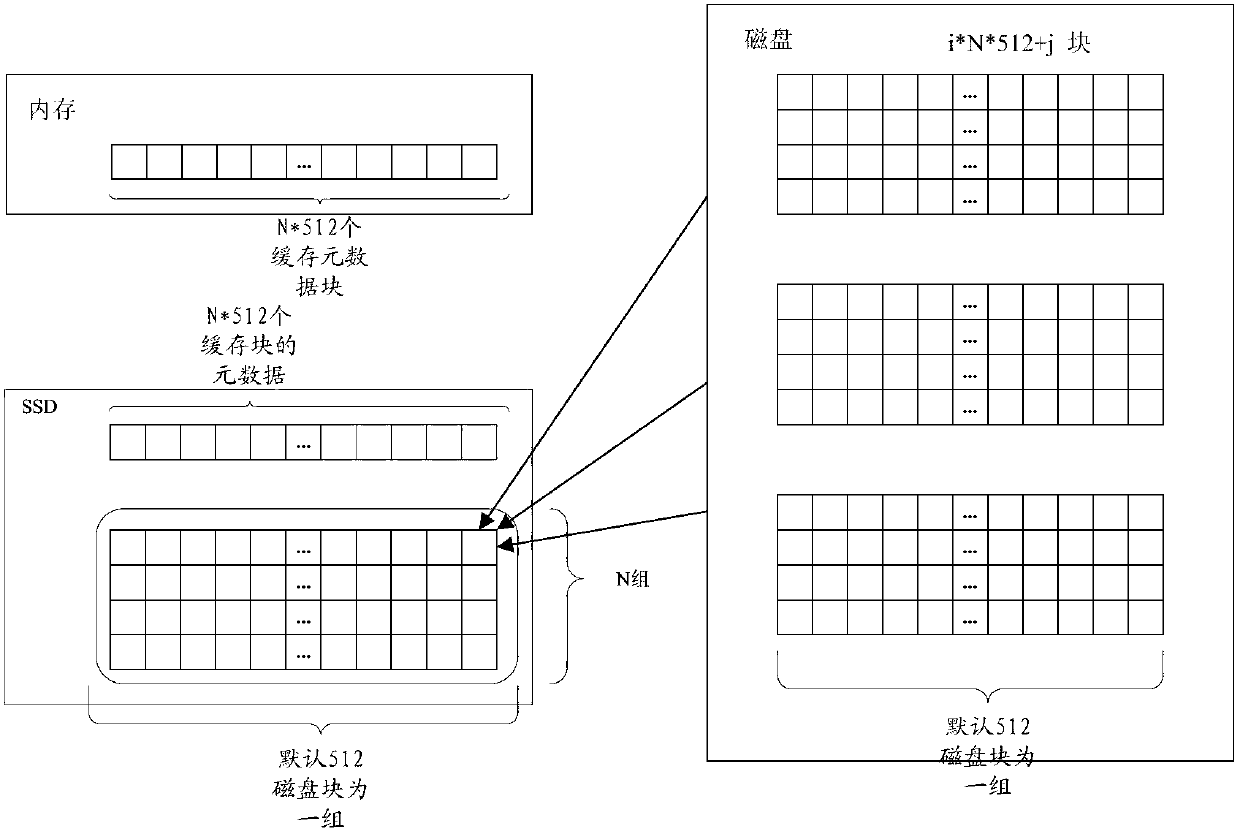

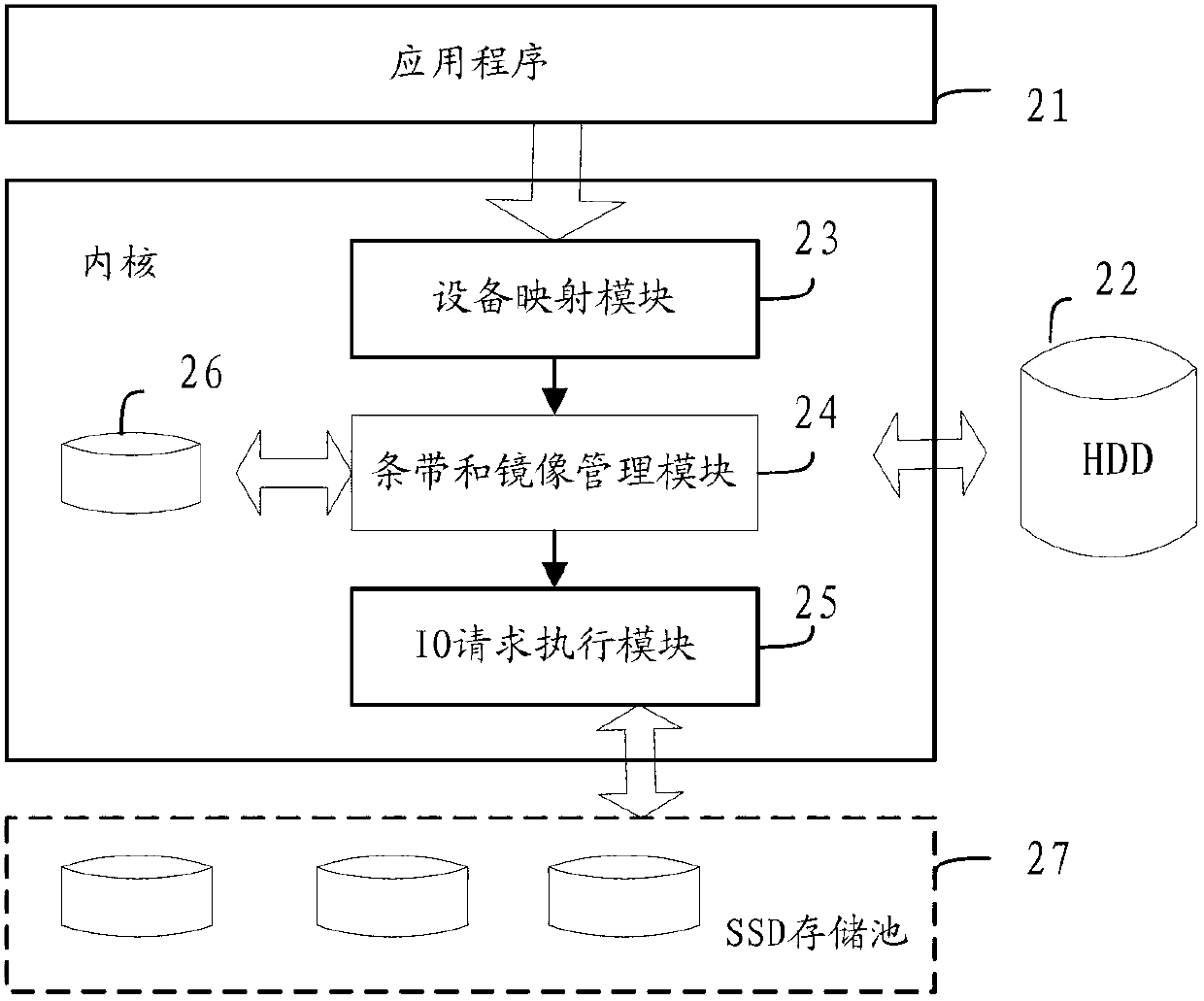

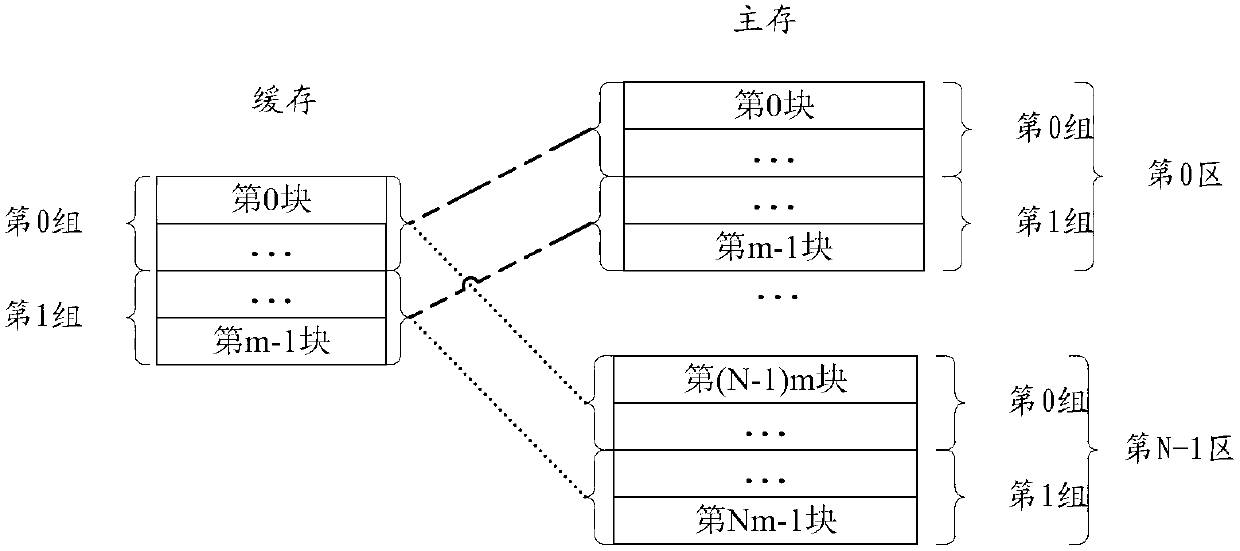

[0036] The technical solution of the present invention implements mirroring and striping of cache data inside the cache by improving the way the disk cache manages its cache devices and the way cached data blocks are mapped to addresses. The user can therefore apply differentiated mirror and stripe configurations to cached data blocks, according to each block's characteristics or the user's own needs, to achieve the best balance between performance and reliability.
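Paragraph [0036] does not spell out the mapping itself. The sketch below shows one way a per-block address mapping could express both functions at once, assuming round-robin placement across N cache devices and one slot per logical block on each device. `BlockLayout`, `map_block`, and the slot numbering are hypothetical names for illustration, not taken from the patent.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BlockLayout:
    """Hypothetical per-block policy chosen by the user or by data characteristics."""
    mirrors: int  # copies kept of each stripe unit (1 = no mirroring)
    stripes: int  # stripe units the block is split into (1 = no striping)


def map_block(block_id: int, layout: BlockLayout, num_devices: int) -> list[list[tuple[int, int]]]:
    """Map one cached block to (device, slot) addresses.

    Returns one inner list per stripe unit; each inner list holds the
    addresses of that unit's mirror copies.
    """
    if layout.mirrors < 1 or layout.stripes < 1:
        raise ValueError("mirrors and stripes must both be >= 1")
    width = layout.stripes * layout.mirrors
    if width > num_devices:
        # Every copy of every unit gets its own device, so mirror copies never
        # share a device and stripe units can be read in parallel.
        raise ValueError("need at least stripes * mirrors cache devices")
    base = block_id % num_devices  # rotate the starting device to spread load
    units = []
    for u in range(layout.stripes):
        copies = []
        for m in range(layout.mirrors):
            device = (base + u * layout.mirrors + m) % num_devices
            copies.append((device, block_id))  # hypothetical: slot = block id
        units.append(copies)
    return units
```

For example, with four cache devices, `map_block(5, BlockLayout(mirrors=2, stripes=2), 4)` returns `[[(1, 5), (2, 5)], [(3, 5), (0, 5)]]`: two stripe units, each mirrored on two distinct devices. A block holding critical data could instead use `BlockLayout(mirrors=3, stripes=1)`, and a hot, easily recomputed block `BlockLayout(mirrors=1, stripes=4)`, which is the kind of per-block differentiation the paragraph describes.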

[0037] In one embodiment, the present invention provides a method for processing cached data. The method includes: a cache management module reads cache block information, where the cache block information includes at least two addresses pointing to at least two different SSD cache devices; and the cache management module, according to the at least two addresses in the cache block information, initiates data read operations to the at least two SSD cache d...
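The read step in [0037] can be sketched as follows, assuming the cache block information is a list of (device, offset, length) entries and that the SSD cache devices are simple in-memory stand-ins. `CacheBlockInfo`, `SsdDevice`, `read_cached_block`, and the `mirrored` flag are hypothetical. Because the paragraph is truncated, it is unclear whether the addresses refer to stripe segments or mirror copies, so the sketch handles both: striped segments are read concurrently and concatenated, while for mirrored copies the first copy (in address order) that reads without error is returned.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass
class CacheBlockInfo:
    """Hypothetical cache block record: one (device_id, offset, length) per address."""
    addresses: list[tuple[int, int, int]]


class SsdDevice:
    """In-memory stand-in for an SSD cache device (hypothetical)."""

    def __init__(self, data: bytes):
        self.data = bytes(data)

    def read(self, offset: int, length: int) -> bytes:
        return self.data[offset:offset + length]


def read_cached_block(info: CacheBlockInfo, devices: dict, mirrored: bool = False) -> bytes:
    # The cache block information must point at two or more distinct devices.
    if len({dev for dev, _, _ in info.addresses}) < 2:
        raise ValueError("cache block must reference at least two different devices")
    with ThreadPoolExecutor(max_workers=len(info.addresses)) as pool:
        # Issue one read per address; the reads run concurrently.
        futures = [pool.submit(devices[dev].read, off, length)
                   for dev, off, length in info.addresses]
        if mirrored:
            # Each address holds a full copy: return the first copy, in address
            # order, that was read without an I/O error.
            errors = []
            for fut in futures:
                try:
                    return fut.result()
                except OSError as exc:
                    errors.append(exc)
            raise OSError(f"all {len(errors)} mirror copies failed to read")
        # Striped: each address holds one segment; join them in address order.
        return b"".join(fut.result() for fut in futures)
```

With `devices = {0: SsdDevice(b"HELLO----"), 1: SsdDevice(b"--WORLD")}`, reading `CacheBlockInfo([(0, 0, 5), (1, 2, 5)])` in striped mode yields `b"HELLOWORLD"`. Issuing the reads concurrently is what lets striping across several SSDs add bandwidth, while the mirrored path trades that for tolerance of a failed device.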

