Real-time face pose estimation method based on a depth video stream

A technology based on depth video streams and facial pose, applied in the field of recognition, that addresses problems such as the need for manual intervention, decreased accuracy, and the susceptibility of collected data to noise.


Detailed Description of the Embodiments

[0022] The preferred embodiments of the present invention are described in detail below with reference to the accompanying drawings, so that those skilled in the art can more readily understand the advantages and features of the invention and so that the scope of protection of the invention is more clearly defined.

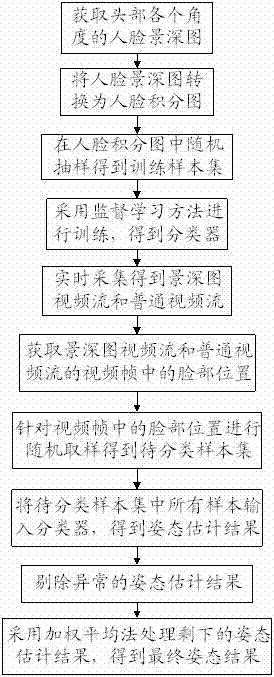

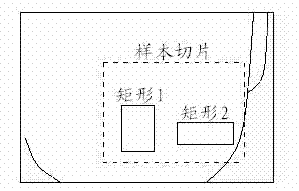

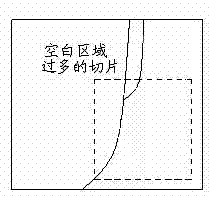

[0023] Referring to Figures 1 to 3: Figure 1 is a schematic structural diagram of a preferred embodiment of the real-time face pose estimation method based on a depth video stream according to the present invention; Figure 2 is a schematic diagram of sliced samples and test selection; Figure 3 is a schematic diagram of a slice containing too much blank space.
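Figures 2 and 3 indicate that depth frames are cut into slices and that slices dominated by blank space are singled out. The patent text available here does not give the slicing rule, so the following is only a minimal sketch under stated assumptions: a slice is a fixed-size square patch taken at a fixed stride, a "blank" pixel is one with no depth reading (value 0), and a slice is discarded when its blank fraction exceeds a threshold. The function name `slice_depth_image` and the parameters `patch_size`, `stride`, and `max_blank_ratio` are hypothetical.

```python
from typing import List, Tuple

# Hypothetical representation: a row-major depth map in which 0.0 marks a pixel
# with no depth reading (treated here as "blank space").
DepthImage = List[List[float]]


def slice_depth_image(
    depth: DepthImage,
    patch_size: int,
    stride: int,
    max_blank_ratio: float = 0.5,
) -> List[Tuple[int, int, DepthImage]]:
    """Cut a depth map into square patches and drop patches that are mostly blank.

    Returns (row, col, patch) tuples, where (row, col) is the patch's top-left corner.
    The rejection rule (blank fraction above max_blank_ratio) is an assumption.
    """
    rows = len(depth)
    cols = len(depth[0]) if rows else 0
    kept: List[Tuple[int, int, DepthImage]] = []
    for r in range(0, rows - patch_size + 1, stride):
        for c in range(0, cols - patch_size + 1, stride):
            patch = [row[c:c + patch_size] for row in depth[r:r + patch_size]]
            # Count pixels without a valid depth reading.
            blank = sum(1 for row in patch for v in row if v <= 0.0)
            if blank / (patch_size * patch_size) <= max_blank_ratio:
                kept.append((r, c, patch))
    return kept
```

For a 4x4 map whose left half is blank, `slice_depth_image(depth, patch_size=2, stride=2)` keeps only the two right-hand patches, at corners (0, 2) and (2, 2). A real system would more likely operate on NumPy arrays; plain lists are used here only to keep the sketch dependency-free.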

[0024] The present invention provides a real-time face pose estimation method based on a depth video stream, comprising a sampling and training stage and a real-time estimation stage. In the sampling and training stage, the steps include: obtaining the depth of field of the face at each angle of th...
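The two-stage structure in [0024] (collect depth samples of the face at known angles, then estimate the pose of new frames in real time) can be illustrated with a minimal sketch. The paragraph is truncated and does not reveal the patented estimator, so this sketch swaps in a plain k-nearest-neighbour regressor as a hypothetical stand-in. The class `NearestNeighbourPoseModel`, its methods `add_sample` and `estimate`, the (yaw, pitch) pose representation, and the use of a flat feature vector per sample are all assumptions, not the patent's method.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical pose representation: (yaw, pitch) in degrees.
Pose = Tuple[float, float]


class NearestNeighbourPoseModel:
    """Illustrative stand-in for the two-stage pipeline; NOT the patented estimator."""

    def __init__(self) -> None:
        self._samples: List[Tuple[List[float], Pose]] = []

    def add_sample(self, features: Sequence[float], pose: Pose) -> None:
        # Sampling and training stage: store depth-derived features captured
        # while the face is held at a known angle.
        self._samples.append((list(features), pose))

    def estimate(self, features: Sequence[float], k: int = 3) -> Pose:
        # Real-time estimation stage: average the poses of the k stored samples
        # closest to the new frame's features (Euclidean distance).
        if not self._samples:
            raise ValueError("no training samples")
        ranked = sorted(self._samples, key=lambda s: math.dist(s[0], features))
        nearest = ranked[:k]
        yaw = sum(p[0] for _, p in nearest) / len(nearest)
        pitch = sum(p[1] for _, p in nearest) / len(nearest)
        return (yaw, pitch)
```

With samples at features `[0, 0]` (pose 0, 0) and `[1, 0]` (pose 30, 0), querying `[0.5, 0.0]` with `k=2` averages both neighbours and yields a yaw of 15 degrees. The sketch only shows how the offline and online stages divide the work; any production estimator would need a faster search structure than a full sort per frame to run in real time.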
