Method for detecting the distribution of mangroves submerged at high tide based on remote sensing satellite images and object-oriented classification

An object-oriented remote sensing satellite technique, applied in image analysis, image data processing, instruments, etc. It addresses the inability of conventional remote sensing techniques to accurately detect mangroves submerged at high tide, enables fast and effective extraction, and improves accuracy and reliability.


Examples

Specific Embodiment 1

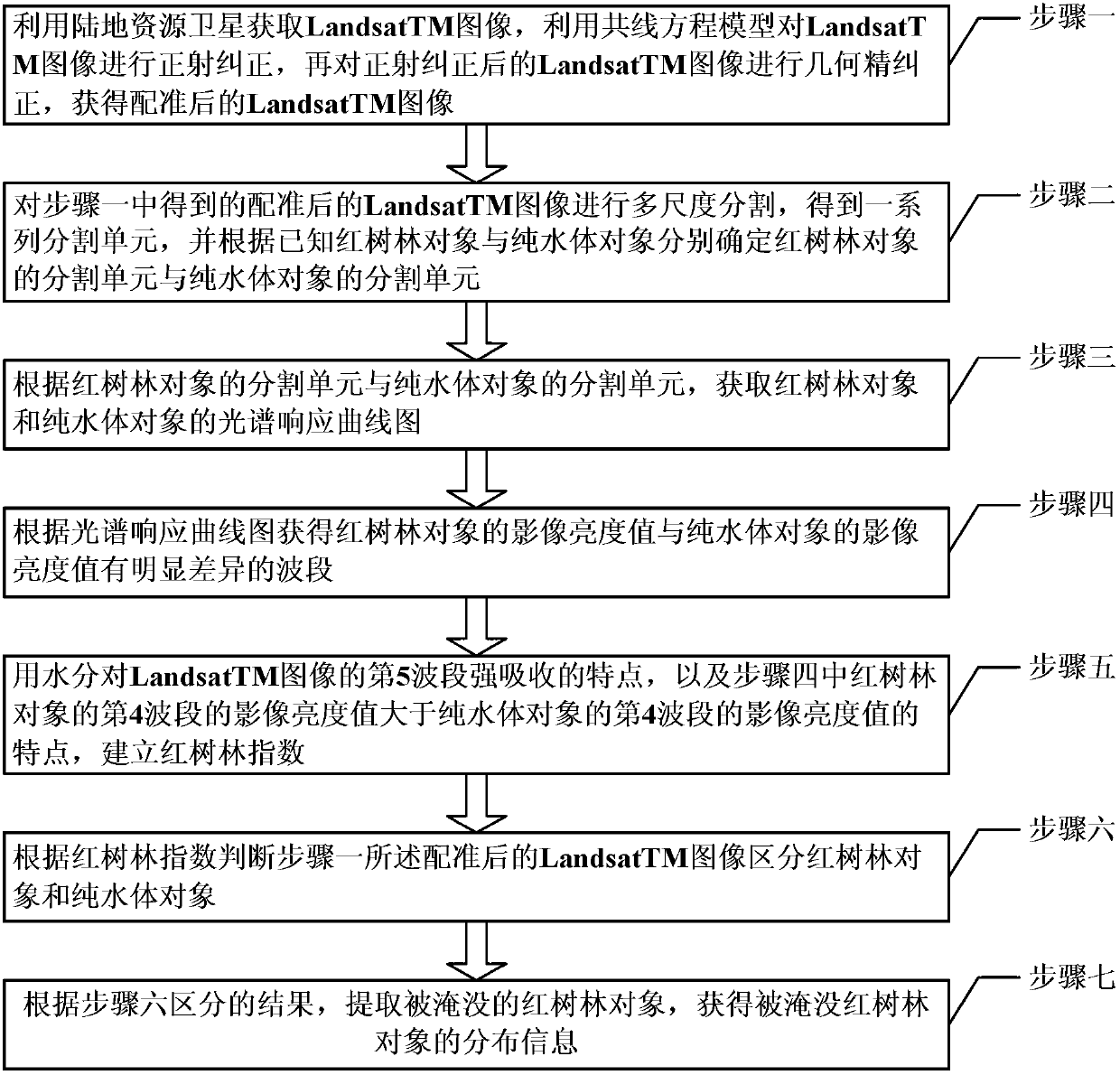

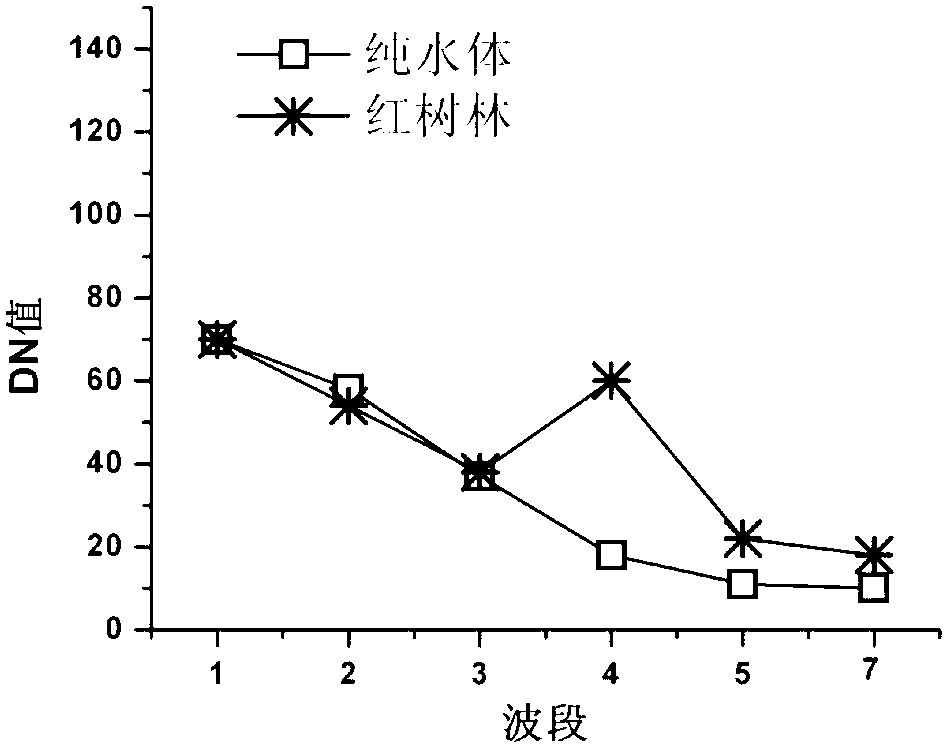

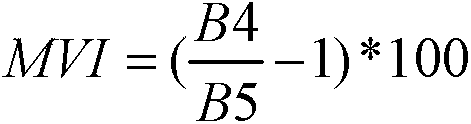

[0018] Specific Embodiment 1 is described with reference to Figure 1 and Figure 2. A method for detecting the distribution of mangroves submerged at high tide, based on remote sensing satellite images and object-oriented classification, comprises the following steps:

[0019] Step 1: Acquire Landsat TM images, orthorectify them using the collinearity equation model, and then apply geometric fine correction to the orthorectified images to obtain registered Landsat TM images;
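The collinearity equation model named in Step 1 relates a ground point to its image position through the sensor's exterior orientation (projection center and rotation matrix). A minimal sketch of the standard frame-camera form follows; the function name and argument layout are hypothetical, and for a scanning sensor such as Landsat TM the exterior orientation varies along track, which this sketch does not model.

```python
def collinearity_project(ground, station, rotation, focal, principal=(0.0, 0.0)):
    """Project a ground point (X, Y, Z) to image coordinates (x, y).

    Frame-camera collinearity equations (assumed form):
      x = x0 - f * (a1*dX + b1*dY + c1*dZ) / (a3*dX + b3*dY + c3*dZ)
      y = y0 - f * (a2*dX + b2*dY + c2*dZ) / (a3*dX + b3*dY + c3*dZ)
    where rotation = [[a1, a2, a3], [b1, b2, b3], [c1, c2, c3]] and
    (dX, dY, dZ) = ground - station (station = projection center Xs, Ys, Zs).
    """
    dX = ground[0] - station[0]
    dY = ground[1] - station[1]
    dZ = ground[2] - station[2]
    (a1, a2, a3), (b1, b2, b3), (c1, c2, c3) = rotation
    num_x = a1 * dX + b1 * dY + c1 * dZ
    num_y = a2 * dX + b2 * dY + c2 * dZ
    den = a3 * dX + b3 * dY + c3 * dZ
    if den == 0:
        raise ValueError("ground point lies in the sensor's image plane")
    x0, y0 = principal
    return x0 - focal * num_x / den, y0 - focal * num_y / den


# Illustrative values only: a nadir-looking sensor 1000 units above the ground.
identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
x, y = collinearity_project((100.0, 50.0, 0.0), (0.0, 0.0, 1000.0), identity, 0.15)
```

Orthorectification runs this mapping in the ground-to-image direction for every output cell, combined with terrain heights for Z, and then resamples the input image at the computed position.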

[0020] The geometric fine correction uses bilinear interpolation;
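Bilinear interpolation estimates the value at a fractional pixel position from its four nearest neighbors, weighting each by its distance along both axes. A minimal sketch over a plain list-of-rows grid; the function name `bilinear_sample` is hypothetical.

```python
import math


def bilinear_sample(image, x, y):
    """Sample a 2-D grid (list of rows) at fractional column x and row y.

    Interpolates first along x on the two bracketing rows, then along y.
    Points outside the grid raise IndexError.
    """
    rows, cols = len(image), len(image[0])
    if not (0 <= x <= cols - 1 and 0 <= y <= rows - 1):
        raise IndexError("sample point outside image")
    x0, y0 = math.floor(x), math.floor(y)
    x1, y1 = min(x0 + 1, cols - 1), min(y0 + 1, rows - 1)  # clamp at the edge
    dx, dy = x - x0, y - y0
    top = image[y0][x0] * (1 - dx) + image[y0][x1] * dx
    bottom = image[y1][x0] * (1 - dx) + image[y1][x1] * dx
    return top * (1 - dy) + bottom * dy


grid = [[0, 10], [20, 30]]
center = bilinear_sample(grid, 0.5, 0.5)  # midpoint of the four pixels
```

Compared with nearest-neighbor resampling, this smooths the radiometric values slightly, which is a trade-off to keep in mind when the corrected image feeds a spectral classification.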

[0021] Step 2: Perform multi-scale segmentation on the registered Landsat TM image obtained in Step 1 to obtain a series of segmentation units, and identify the segmentation units of mangrove objects and of pure water bodies, respectively, according to the known mangrove objects and pure water objects object segme...

Specific Embodiment 2

[0029] Embodiment 2 differs from Embodiment 1 in that, in Step 2, the multi-scale segmentation of the registered Landsat TM image obtained in Step 1 proceeds as follows:

[0030] Step 2.1: Divide the registered Landsat TM image into four equal, non-overlapping parts $R_i$, where $i = 1, 2, 3, 4$;

[0031] Step 2.2: Evaluate the consistency of tone and texture among the pixels inside each subregion, and split the subregion when the consistency is below 85%;

[0032] Splitting divides the subregion into four equal, non-overlapping parts;

[0033] Step 2.3: Evaluate the consistency of tone and texture of the pixels in adjacent subregions, and merge them when the consistency is greater than or equal to 85%;

[0034] Splitting and merging are both repeated until no subregion remains that can be split or merged, that is, the internal consistency of the s...
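The split-and-merge procedure of Embodiment 2 can be sketched as follows. The text does not define how tone and texture consistency is measured, so this sketch assumes a single band and a hypothetical measure: the share of pixels within `tol` gray levels of the region mean, with texture ignored. Only the 85% threshold and the quartering rule come from the text; all function names are illustrative.

```python
from itertools import combinations

THRESHOLD = 0.85  # consistency threshold stated in the embodiment


def consistency(values, tol=10.0):
    """Hypothetical tone consistency: share of values within tol of the mean."""
    mean = sum(values) / len(values)
    return sum(abs(v - mean) <= tol for v in values) / len(values)


def pixels(image, region):
    """Pixel values inside a (row, col, height, width) rectangle."""
    r0, c0, h, w = region
    return [image[r][c] for r in range(r0, r0 + h) for c in range(c0, c0 + w)]


def quarter(region):
    """Divide a rectangle into four non-overlapping parts (fewer if it is thin)."""
    r0, c0, h, w = region
    h1, w1 = h // 2, w // 2
    parts = [(r0, c0, h1, w1), (r0, c0 + w1, h1, w - w1),
             (r0 + h1, c0, h - h1, w1), (r0 + h1, c0 + w1, h - h1, w - w1)]
    return [p for p in parts if p[2] > 0 and p[3] > 0]


def split(image, region, tol):
    """Recursively quarter a region until it is consistent or a single pixel."""
    if region[2] * region[3] == 1 or consistency(pixels(image, region), tol) >= THRESHOLD:
        return [region]
    return [leaf for part in quarter(region) for leaf in split(image, part, tol)]


def touching(a, b):
    """True if two rectangles share part of an edge (4-adjacency)."""
    ar, ac, ah, aw = a
    br, bc, bh, bw = b
    rows_overlap = ar < br + bh and br < ar + ah
    cols_overlap = ac < bc + bw and bc < ac + aw
    side_by_side = (ac + aw == bc or bc + bw == ac) and rows_overlap
    stacked = (ar + ah == br or br + bh == ar) and cols_overlap
    return side_by_side or stacked


def split_and_merge(image, tol=10.0):
    """Quarter the image, split inconsistent parts, then greedily merge
    adjacent groups whose combined pixels reach the threshold."""
    full = (0, 0, len(image), len(image[0]))
    leaves = [leaf for part in quarter(full) for leaf in split(image, part, tol)]
    groups = [[leaf] for leaf in leaves]  # each group is a list of rectangles
    merged = True
    while merged:
        merged = False
        for i, j in combinations(range(len(groups)), 2):
            if not any(touching(a, b) for a in groups[i] for b in groups[j]):
                continue
            values = [v for rect in groups[i] + groups[j] for v in pixels(image, rect)]
            if consistency(values, tol) >= THRESHOLD:
                groups[i].extend(groups.pop(j))  # j > i, so index i stays valid
                merged = True
                break  # restart the scan because the group list changed
    return groups


# Toy image: left half dark (water-like), right half bright.
image = [[0, 0, 100, 100] for _ in range(4)]
groups = split_and_merge(image)
```

The greedy scan restarts after every merge, which keeps the logic easy to follow but is slow on full scenes; a region adjacency graph would be the usual way to scale it.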

Specific Embodiment

[0041] Step 1: Download medium-resolution Landsat TM remote sensing data for path/row P124R45, acquired on October 30, 2006, at a tide level of 187 cm; at this tide level a large part of the mangroves in the image is submerged. Orthorectify the data using the collinearity equation model, then select ground control points in ERDAS software from 1:50,000 topographic data and apply geometric fine correction to the orthorectified image to obtain the registered Landsat TM image;
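The ground control points (GCPs) selected in this step drive the geometric fine correction. The text does not state the transform order, so the sketch below assumes a first-order (affine) polynomial fitted by least squares, a common choice for this kind of correction. The names `fit_affine` and `solve3` are hypothetical and are not ERDAS functions.

```python
def solve3(a, b):
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("control points are degenerate (e.g. collinear)")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, 3):
            factor = m[r][col] / m[col][col]
            for c in range(col, 4):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * 3
    for r in (2, 1, 0):  # back substitution
        x[r] = (m[r][3] - sum(m[r][c] * x[c] for c in range(r + 1, 3))) / m[r][r]
    return x


def fit_affine(gcps):
    """Least-squares fit of E = a0 + a1*col + a2*row and N = b0 + b1*col + b2*row.

    gcps: list of ((col, row), (E, N)) pairs, at least three and not collinear.
    Returns the coefficient lists (a, b) from the normal equations.
    """
    if len(gcps) < 3:
        raise ValueError("need at least three control points")
    ata = [[0.0] * 3 for _ in range(3)]
    ate, atn = [0.0] * 3, [0.0] * 3
    for (col, row), (e, n) in gcps:
        basis = (1.0, col, row)
        for i in range(3):
            ate[i] += basis[i] * e
            atn[i] += basis[i] * n
            for j in range(3):
                ata[i][j] += basis[i] * basis[j]
    return solve3(ata, ate), solve3(ata, atn)


# Illustrative GCPs built from a known mapping with 30 m pixels.
gcps = [((c, r), (500000 + 30 * c, 2500000 - 30 * r)) for c in (0, 100) for r in (0, 100)]
a, b = fit_affine(gcps)
```

With these synthetic points the fit recovers the generating coefficients up to rounding; with real GCPs the residuals at independent check points are what would be inspected before accepting the correction.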

[0042] Step 2: Perform multi-layer, multi-scale segmentation on the registered Landsat TM image obtained in Step 1 to obtain a series of segmentation units, each composed of spatially adjacent pixels with a homogeneity of more than 85%. Treat each segmentation unit as an object;
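Once each segmentation unit is treated as an object, classification works on per-object attributes rather than on single pixels. The sketch below collects per-object pixel count, mean, standard deviation, and an illustrative homogeneity value from a label grid and one band. The homogeneity definition (share of pixels within `tol` of the object mean) and the function name are assumptions; the text does not list which object features it uses.

```python
import math
from collections import defaultdict


def object_features(labels, band, tol=10.0):
    """Per-object statistics from a segment-label grid and one image band.

    Returns {label: {"count", "mean", "std", "homogeneity"}}, where the
    hypothetical homogeneity is the share of pixels within tol of the mean.
    """
    members = defaultdict(list)
    for label_row, band_row in zip(labels, band):
        for label, value in zip(label_row, band_row):
            members[label].append(value)
    features = {}
    for label, values in members.items():
        n = len(values)
        mean = sum(values) / n
        std = math.sqrt(sum((v - mean) ** 2 for v in values) / n)  # population std
        homogeneity = sum(abs(v - mean) <= tol for v in values) / n
        features[label] = {"count": n, "mean": mean, "std": std, "homogeneity": homogeneity}
    return features


labels = [[1, 1, 2], [1, 2, 2]]
band = [[10, 12, 50], [14, 52, 54]]
feats = object_features(labels, band)
objects = [k for k, f in feats.items() if f["homogeneity"] > 0.85]
```

The resulting feature table is the kind of input an object-oriented classifier would consume when separating mangrove objects from pure water objects.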

[0043] Step 3: Use object-oriented classification software to directly extract the...
