Virtual hairstyle modeling method for images and videos

The modeling method and hairstyle model technology are applied in the fields of virtual character modeling and image and video editing, and address the problem that a physically plausible three-dimensional hairstyle model could not previously be generated.

Embodiment

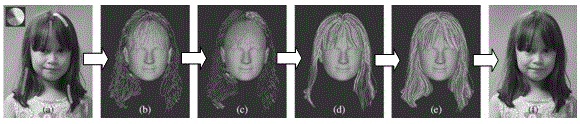

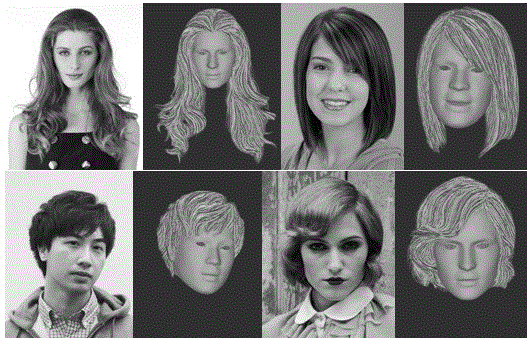

[0095] The inventors implemented an example of the present invention on a machine equipped with an Intel Xeon E5620 central processing unit and an NVIDIA GTX 680 graphics processor. All experimental results shown in the accompanying drawings were obtained using the parameter values listed in the detailed description. Each generated hair model consists of approximately 200,000 to 400,000 hair strand curves. Each individual strand is represented as a polyline connecting multiple vertices, and the vertex colors are sampled from the original image. During rendering, a geometry shader expands these strands into screen-aligned polygon strips, which are rendered in real time.
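The strip expansion mentioned in paragraph [0095] can be illustrated on the CPU. The following is only a minimal sketch, not the inventors' shader: it assumes the strand vertices are already projected to 2D screen space, and the function name `expand_strand_to_strip`, the `width` parameter, and the central-difference tangent are illustrative choices that do not come from the patent text.

```python
import math


def expand_strand_to_strip(points, colors, width=1.0):
    """Expand a screen-space polyline into triangle-strip vertices.

    points: list of (x, y) screen-space positions along one strand.
    colors: list of (r, g, b) tuples, one per vertex (e.g. sampled from the image).
    width:  hypothetical strip width in screen units.
    Returns a list of ((x, y), color) pairs, two per input vertex,
    ordered so that consecutive entries form a triangle strip.
    """
    if len(points) != len(colors):
        raise ValueError("points and colors must have the same length")
    if len(points) < 2:
        raise ValueError("a strand needs at least two vertices")

    half = width / 2.0
    last = len(points) - 1
    strip = []
    for i, (px, py) in enumerate(points):
        # Tangent from neighbouring vertices (one-sided at the strand ends).
        x0, y0 = points[max(i - 1, 0)]
        x1, y1 = points[min(i + 1, last)]
        tx, ty = x1 - x0, y1 - y0
        length = math.hypot(tx, ty)
        if length == 0.0:
            nx, ny = 0.0, 0.0  # degenerate segment: emit a collapsed pair
        else:
            # Screen-space normal: the tangent rotated by 90 degrees.
            nx, ny = -ty / length, tx / length
        # Offset the vertex to both sides; both copies keep the vertex color.
        strip.append(((px + nx * half, py + ny * half), colors[i]))
        strip.append(((px - nx * half, py - ny * half), colors[i]))
    return strip
```

A geometry shader would perform the same per-vertex offset on the GPU, emitting the two offset vertices for each strand vertex; because the offset is computed in screen space, the strip always faces the viewer.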

[0096] The inventors invited several users to test a prototype system implementing the method. The results show that users generally need to draw only about 2 to 8 simple strokes to interact with an ordinary portrait. For an image of approximately 500×800 in size, the...
