Haptic rendering method based on texture image

A haptic (tactile) reproduction technology based on texture images, applied in the field of image processing. It addresses problems such as the inability to simulate texture contact, the limited range of texture types, and the lack of realism.

Embodiment Construction

[0047] The technical solution of the present invention will be specifically described below in conjunction with the accompanying drawings.

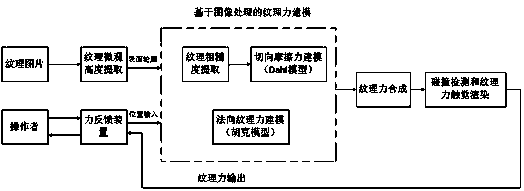

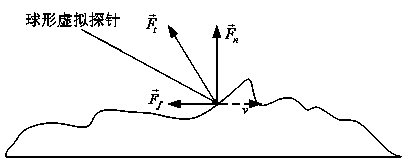

[0048] As shown in Figure 1 and Figure 2, the haptic reproduction method based on a texture image of the present invention includes the following process:

[0049] Step 10) Extract texture features. The texture features include the microscopic height of the texture surface and the dynamic friction coefficient, which represents the texture roughness;

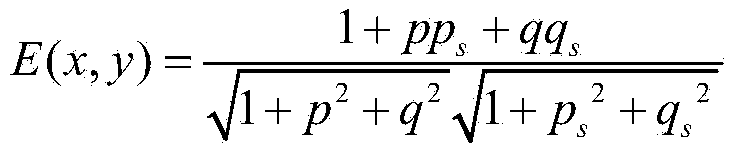

[0050] The microscopic height of the textured surface is extracted using a shape-from-shading method. The specific process is as follows: first, following the shape-from-shading approach, assume that the light source is a point light source at infinity, the reflection model is a Lambertian reflection model, and the imaging geometry is an orthographic projection; the texture shown in formula (1) is establ...
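The three assumptions named above (point light source at infinity, Lambertian reflection, orthographic projection) lead to the standard forward shading model that shape-from-shading methods invert. The sketch below illustrates only that forward model and is not the patent's formula (1), which is truncated in this excerpt. The function name `lambertian_shading`, its parameters, and the use of NumPy finite differences for the surface gradients are assumptions made for illustration.

```python
import numpy as np


def lambertian_shading(height, light_dir=(0.0, 0.0, 1.0), albedo=1.0):
    """Render the brightness of a height map under a distant point light.

    Hypothetical helper: assumes a Lambertian surface, a light source at
    infinity (constant direction), and orthographic projection.
    """
    height = np.asarray(height, dtype=float)

    # Surface gradients: q = dz/dy (along rows), p = dz/dx (along columns).
    q, p = np.gradient(height)

    # Unit vector pointing from the surface toward the light source.
    s = np.asarray(light_dir, dtype=float)
    s = s / np.linalg.norm(s)

    # The unnormalized surface normal is (-p, -q, 1); dividing by its
    # length gives the cosine of the angle between normal and light.
    norm = np.sqrt(1.0 + p**2 + q**2)
    n_dot_s = (-p * s[0] - q * s[1] + s[2]) / norm

    # Lambert's cosine law; facets turned away from the light are black.
    return albedo * np.clip(n_dot_s, 0.0, None)
```

With the light directly overhead, a flat surface renders at full brightness and a 45-degree ramp renders at 1/sqrt(2) of it. A shape-from-shading method works in the opposite direction: it searches for the height map whose rendered brightness matches the observed texture image.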
