Data caching method and device

A data caching technology, applied in the fields of electrical digital data processing, special data processing applications, and instruments. It addresses the problems that the cache capacity is insufficient to store a large amount of cached data, that the data cannot all be stored in memory, and that requested data cannot be guaranteed to exist in the cache. It achieves the effects of avoiding insufficient cache capacity, saving cache space, and reducing response time.


Embodiment 1

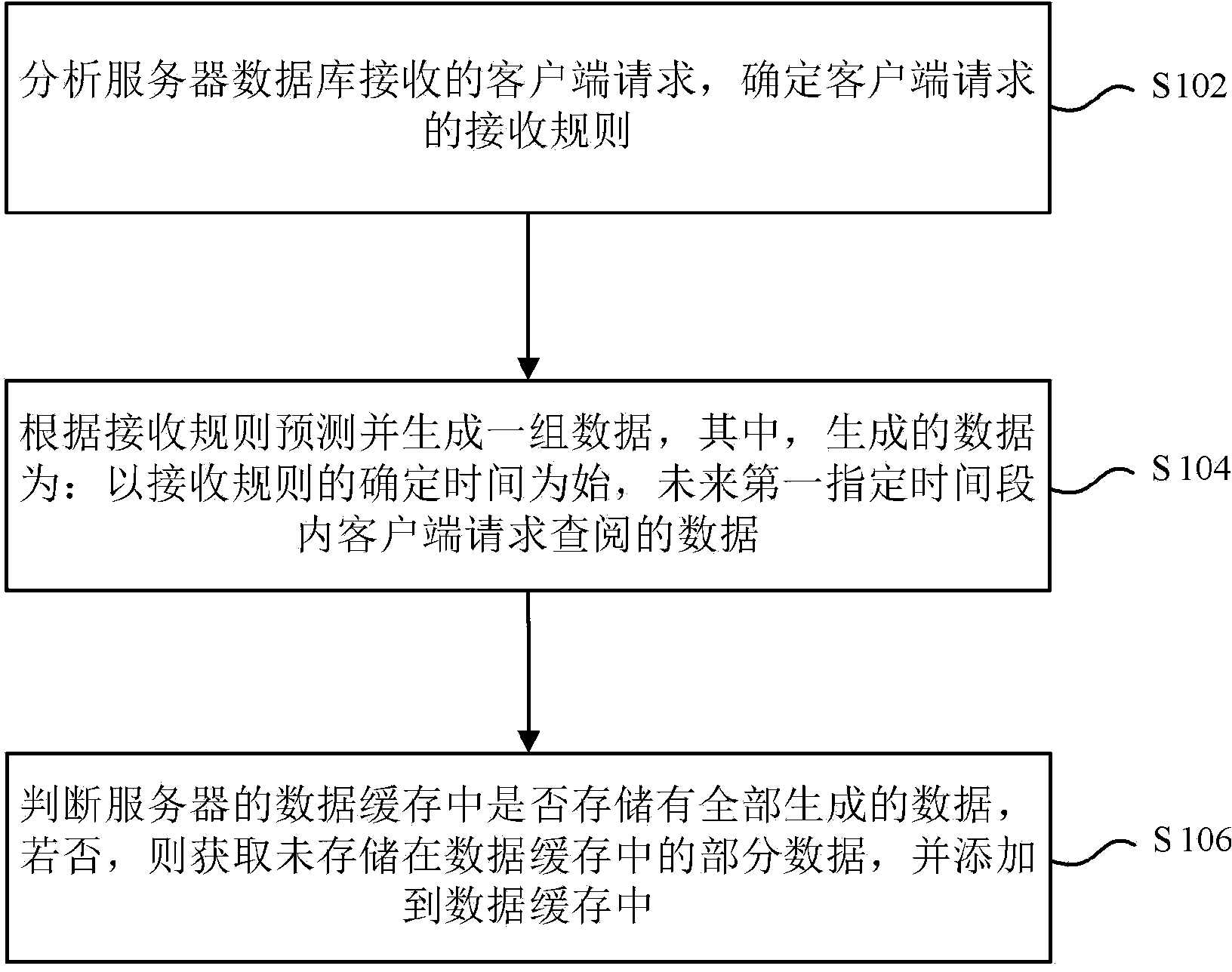

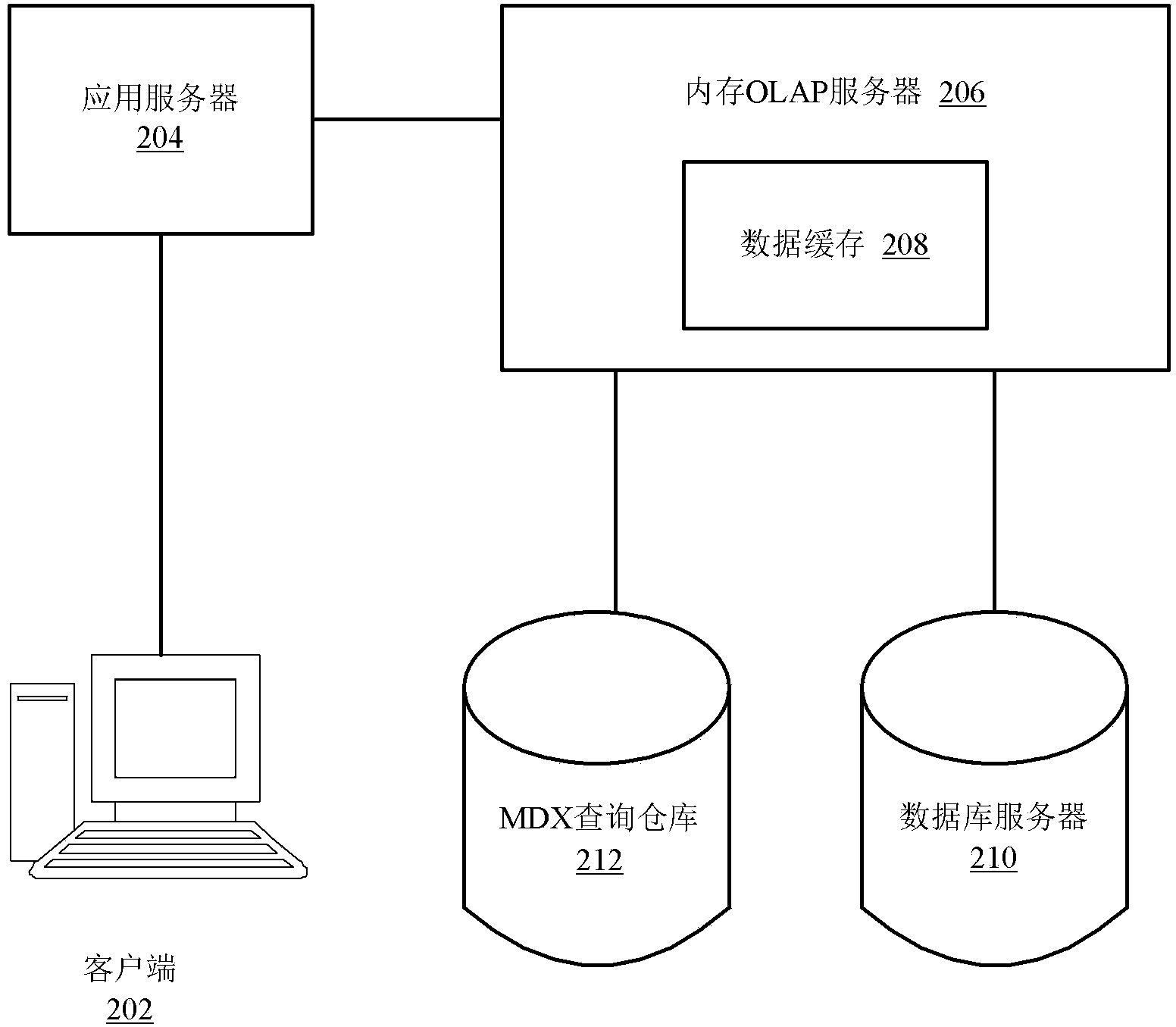

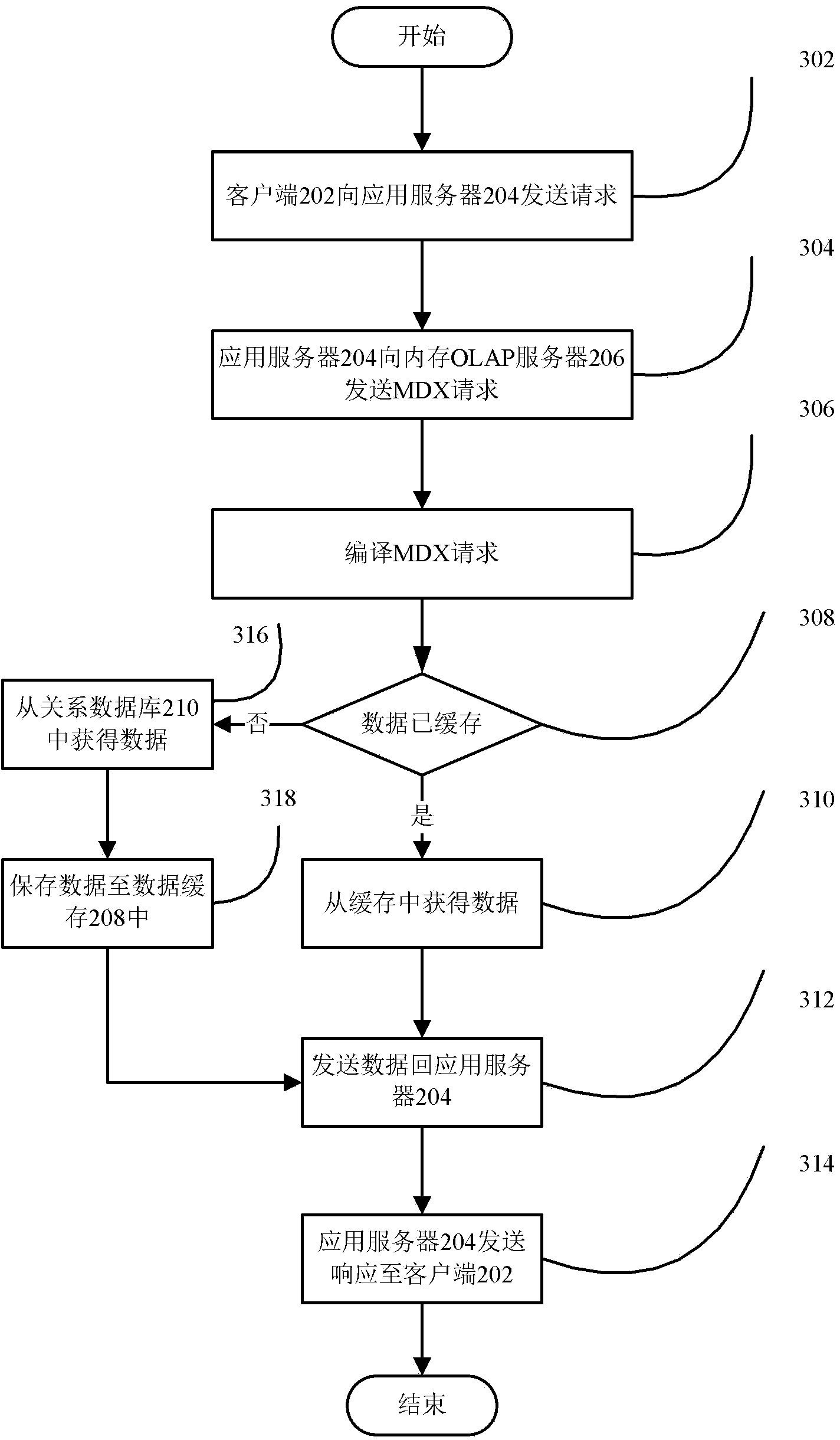

[0038] To illustrate the data caching method provided by the embodiment of the present invention more clearly, a preferred embodiment is described. In this preferred embodiment, the data caching method is implemented through a multi-tier architecture. Figure 2 shows a schematic structural diagram of a multi-tier architecture according to a preferred embodiment of the present invention. A multi-tier architecture includes database servers, application servers, and clients. In addition, business logic can be added to the multi-tier architecture to realize business functions, or all functions can be realized through the application server. The multi-tier architecture shown in Figure 2 includes a client 202, an application server 204, an in-memory OLAP (online analytical processing, hereinafter referred to as OLAP) server 206 (that is, a database server), a data cache 208 in the in-memory OLAP server 206, a database server 210 and MD...

Embodiment 2

[0086] Building on the determination of the receiving rule introduced in Embodiment 1, Embodiment 2 describes the data caching method provided by the embodiment of the present invention when the receiving rule is used in batch processing mode or real-time filling mode.

[0087] Figure 5 shows a processing flowchart of a data caching method using the batch processing mode according to an embodiment of the present invention. The purpose of the batch mode is to fill the data cache 208 according to the receiving rule before the client begins to request data, so that the requested data already exists in the data cache 208, thereby reducing the response time of the request. Referring to Figure 5, the process includes at least steps 502 to 508.

[0088] Step 502, select a receiving rule, and refresh the clustering model.

[0089] Specifically, a system administrator or a system process can identify and select the most appropriate cluster and subseq...
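The batch-mode idea in paragraph [0087], filling the cache ahead of client requests so that later lookups are served from memory, can be sketched as follows. This is a minimal illustration under stated assumptions, not the patented method: the class `DataCache`, the method names `batch_fill` and `get`, and the modelling of a receiving rule as a simple predicate over keys are all hypothetical, and the clustering model mentioned in step 502 is not represented.

```python
from typing import Callable, Dict, Iterable


class DataCache:
    """Hypothetical stand-in for data cache 208 in front of a slower store."""

    def __init__(self, backing_store: Dict[str, int]) -> None:
        # Assumption: a plain dict plays the role of the database server.
        self._store = backing_store
        self._cache: Dict[str, int] = {}
        self.hits = 0
        self.misses = 0

    def batch_fill(self, candidate_keys: Iterable[str],
                   receiving_rule: Callable[[str], bool]) -> int:
        """Pre-load every candidate key that the receiving rule admits.

        Assumption: the receiving rule is modelled as a predicate on keys.
        Returns the number of entries loaded into the cache.
        """
        loaded = 0
        for key in candidate_keys:
            if key in self._store and receiving_rule(key):
                self._cache[key] = self._store[key]
                loaded += 1
        return loaded

    def get(self, key: str) -> int:
        """Serve from the cache when possible, else fall back to the store."""
        if key in self._cache:
            self.hits += 1
            return self._cache[key]
        self.misses += 1
        return self._store[key]


# Hypothetical data: only keys admitted by the rule are cached in advance.
store = {"sales:2024": 10, "sales:2023": 7, "hr:2024": 3}
cache = DataCache(store)
loaded = cache.batch_fill(store.keys(), lambda k: k.startswith("sales:"))
```

Because the rule admits only a subset of keys, the cache holds less than the full data set, which matches the stated goal of saving cache space while still answering the expected requests from memory.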
