KNN-GPU acceleration method based on OpenCL

A KNN classification method based on distance computation, applicable to machine execution devices and concurrent instruction execution. It addresses the high computational overhead of KNN classification and reduces computation time.


Examples

Embodiment 1

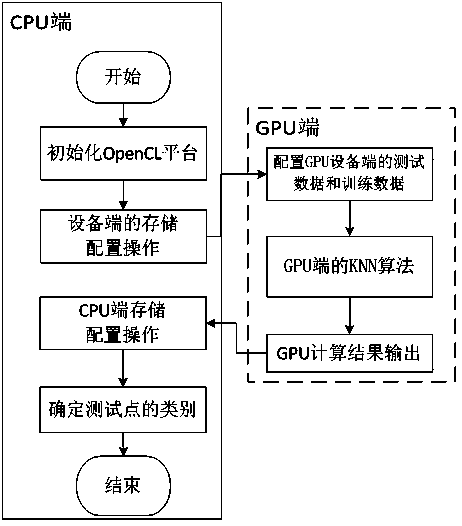

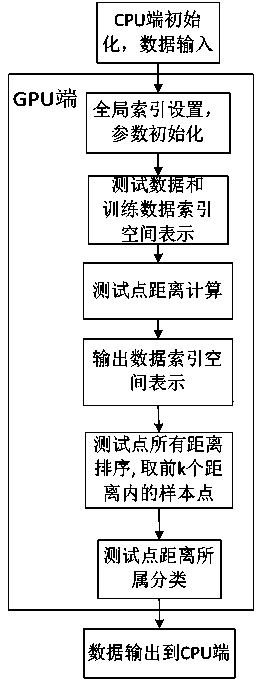

[0022] Embodiment 1: A preferred embodiment of the OpenCL-based KNN-GPU acceleration method is described below with reference to the accompanying drawings. This embodiment uses a data dimension of 4, 100 test points, and 15 training data points as an example. The method is divided into six steps:
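For reference, the sequential CPU baseline that the method accelerates can be sketched as follows. This is a minimal illustration, not the patented implementation: the Euclidean distance, the majority vote, k = 3, the three class labels, and the random placeholder data are all assumptions. Only the sizes (dimension 4, 15 training points, 100 test points) come from this embodiment.

```python
import math
import random
from collections import Counter

def knn_classify(train, labels, query, k):
    """Classify one query point by majority vote among its k nearest
    training points (Euclidean distance is an assumption)."""
    dists = sorted((math.dist(x, query), lbl) for x, lbl in zip(train, labels))
    votes = Counter(lbl for _, lbl in dists[:k])
    return votes.most_common(1)[0][0]

def knn_cpu(train, labels, tests, k=3):
    """Sequential baseline: classify the test points one after another."""
    return [knn_classify(train, labels, q, k) for q in tests]

# Sizes from the embodiment; the values themselves are random placeholders.
DIM, N_TRAIN, N_TEST = 4, 15, 100
rng = random.Random(0)
train = [[rng.random() for _ in range(DIM)] for _ in range(N_TRAIN)]
labels = [i % 3 for i in range(N_TRAIN)]  # hypothetical 3-class labelling
tests = [[rng.random() for _ in range(DIM)] for _ in range(N_TEST)]
predictions = knn_cpu(train, labels, tests)
```

Every test point repeats the same independent distance-and-vote loop, which is what makes the problem a natural fit for one GPU work-item per test point.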

[0023] Step 1: Initialize the OpenCL platform: first obtain the OpenCL platform information, then obtain the device ID, and finally create a context for the device's execution environment.

[0024] Step 2: Configure storage for the device: three memory buffers are configured on the CPU side. Buffer 1 stores the input training data, buffer 2 stores the input test data, and buffer 3 stores the output classification results; the GPU side reads its data from the corresponding buffers.
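The three host-side buffers of Step 2 can be illustrated as flat, row-major arrays, the layout a kernel would index as `point * DIM + d` once the data are copied to the device (in OpenCL host code this copy is typically set up with `clCreateBuffer`). The sketch is a stand-in built on assumptions: the row-major flattening, the float32 and int element types, the function name `make_host_buffers`, and the placeholder data are not specified by the source.

```python
from array import array

def make_host_buffers(train, tests):
    """Flatten 2-D point lists into three contiguous host buffers:
    1 = training data, 2 = test data, 3 = output classifications."""
    train_buf = array("f", (v for row in train for v in row))  # float32
    test_buf = array("f", (v for row in tests for v in row))   # float32
    out_buf = array("i", [0] * len(tests))  # one class label per test point
    return train_buf, test_buf, out_buf

# Embodiment 1 sizes with placeholder values.
DIM, N_TRAIN, N_TEST = 4, 15, 100
train = [[float(i + d) for d in range(DIM)] for i in range(N_TRAIN)]
tests = [[float(i * d) for d in range(DIM)] for i in range(N_TEST)]
train_buf, test_buf, out_buf = make_host_buffers(train, tests)
print(len(train_buf), len(test_buf), len(out_buf))  # 60 400 100
```

Coordinate `d` of test point `j` sits at `test_buf[j * DIM + d]`, so a single contiguous transfer per buffer is enough.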

[0025] Step 3: Configure the test data and training data on the GPU device side: allocate threads according to the GPU device, set the si...
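Because the rest of Step 3 is truncated, the exact thread mapping is not given here. A common choice, assumed in the sketch below, is one work-item per test point: each work-item computes the distances from its test point to every training point and writes one label to the output buffer. `knn_kernel` stands in for an OpenCL kernel and `launch` for a serial emulation of an NDRange launch; both names, the Euclidean distance, and `k` are hypothetical.

```python
import math
from collections import Counter

def knn_kernel(gid, train_buf, labels, test_buf, out_buf, dim, n_train, n_test, k):
    """Work one work-item would do: classify the test point whose index
    equals this work-item's global ID (hypothetical mapping)."""
    if gid >= n_test:  # padded work-items beyond the data do nothing
        return
    q = test_buf[gid * dim:(gid + 1) * dim]
    dists = []
    for j in range(n_train):
        x = train_buf[j * dim:(j + 1) * dim]
        dists.append((math.dist(x, q), labels[j]))
    dists.sort()
    out_buf[gid] = Counter(lbl for _, lbl in dists[:k]).most_common(1)[0][0]

def launch(global_size, *args):
    """Serial stand-in for an NDRange launch: each global ID runs once."""
    for gid in range(global_size):
        knn_kernel(gid, *args)

train = [0.0, 0.0, 0.0, 1.0, 5.0, 5.0, 5.0, 6.0]  # 4 points, dim 2, flattened
labels = [0, 0, 1, 1]
tests = [0.1, 0.2, 5.0, 5.4]                      # 2 points, flattened
out = [-1, -1]
launch(4, train, labels, tests, out, 2, 4, 2, 3)  # global size padded to 4
print(out)  # [0, 1]
```

Work-items whose global ID is at or beyond the number of test points return immediately, which lets the global size be padded to a multiple of the work-group size.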

Embodiment 2

[0045] Embodiment 2: This embodiment uses a data dimension of 8 and 4000 test points as an example. The steps are the same as in Embodiment 1 and are not repeated here.

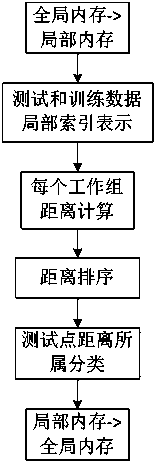

[0046] In this embodiment, the work-group size is set to 256, and the global index space is divided into N/256 work-groups. The test results show that, with identical classification performance, the conventional KNN classification method takes 7.641 seconds on the CPU, whereas the OpenCL-based KNN-GPU acceleration method proposed by the present invention takes only 0.923 seconds, a speedup of about 8.28 times. This indicates that as the data dimension grows, the GPU's performance advantage is more fully exploited.
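The work-group arithmetic and the reported speedup can be checked in a few lines. Assuming N is the number of test points (4000 here), N/256 = 15.625 is not an integer, while OpenCL 1.x requires the global work size to be evenly divisible by the work-group size. The sketch therefore rounds up to 16 work-groups (4096 work-items), leaving 96 padded work-items that a bounds check in the kernel must skip. The helper `ndrange_sizes` is hypothetical.

```python
import math

def ndrange_sizes(n_items, local_size=256):
    """Round the global size up to a multiple of the work-group size
    (required in OpenCL 1.x) and return (global_size, num_groups)."""
    num_groups = math.ceil(n_items / local_size)
    return num_groups * local_size, num_groups

global_size, groups = ndrange_sizes(4000)
print(global_size, groups)   # 4096 16
print(global_size - 4000)    # 96 padded work-items
speedup = 7.641 / 0.923      # CPU time / GPU time from Embodiment 2
print(round(speedup, 2))     # 8.28
```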

[0047] Experimental results:

[0048] The experiments for the present invention were carried out on the OpenCL 1.0 platform. In the experimental environment, an Intel Core i5 processor is used as the CPU, me...
