Method for automatic ground detection and relative camera pose estimation in depth images

A technique involving depth cameras and depth images, applicable to image enhancement, image analysis, and image data processing. It addresses problems such as poor performance in complex environments, a limited range of system applications, and difficulty obtaining height information.

Embodiment Construction

[0031] Notation: in the formulas describing the present invention, multi-dimensional vectors and matrices are set in bold upright type, such as A and depth, while scalar variables are set in non-bold italics, such as x, y, z, u, and v.

[0032] The present invention is implemented in the following steps:

[0033] Step 1, calibrate the image:

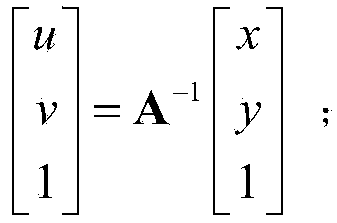

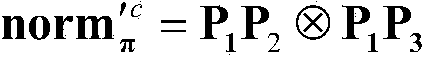

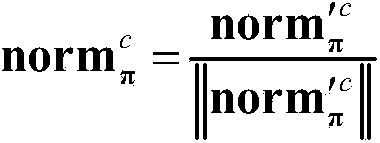

[0034] Let A denote the known intrinsic parameter matrix of the depth camera. A can be computed offline by a classic calibration method such as Tsai's two-step method [1] or Zhang Zhengyou's planar method [2]. Once the intrinsic parameter matrix has been obtained, the pixel coordinates of the depth image are converted to radian coordinates, as shown in the following formula:

[0035] u v ...
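The formula itself is not recoverable from the text above, so what follows is only an illustrative sketch of one common way to turn pixel coordinates into angles using an intrinsic matrix. It is not the patent's own formula. It assumes a pinhole model without lens distortion and an intrinsic matrix of the form [[fx, s, cx], [0, fy, cy], [0, 0, 1]]. The function name `pixel_to_angles` and the numeric intrinsics are hypothetical.

```python
import math


def pixel_to_angles(u, v, A):
    """Map pixel (u, v) to two viewing angles in radians.

    Assumption (not from the source): A is a 3x3 pinhole intrinsic matrix
    [[fx, s, cx], [0, fy, cy], [0, 0, 1]] and lens distortion is ignored.
    """
    fx, s, cx = A[0]
    _, fy, cy = A[1]
    # Back-substitution gives the normalized image-plane coordinates,
    # i.e. the first two entries of A^{-1} [u, v, 1]^T.
    y_n = (v - cy) / fy
    x_n = (u - cx - s * y_n) / fx
    # Angles of the viewing ray's projections onto the x-z and y-z planes,
    # measured from the optical axis.
    return math.atan(x_n), math.atan(y_n)


# Hypothetical intrinsics for illustration only.
A = [[525.0, 0.0, 319.5],
     [0.0, 525.0, 239.5],
     [0.0, 0.0, 1.0]]

theta_u, theta_v = pixel_to_angles(319.5, 239.5, A)  # principal point
```

Under these assumptions the principal point maps to zero angle in both directions, and a pixel one focal length to the right of it maps to a horizontal angle of π/4.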
